Community Articles

- Cloudera Community

- Understanding Reasoning Models with GRPO: A Concep...

Created on 07-16-2025 05:25 AM - edited 07-16-2025 10:20 AM

1. Introduction

- Problem:

- Most readily available medical AI models lack structured reasoning, which significantly limits their reliability and trustworthiness in critical clinical decision-making. Group Relative Policy Optimization (GRPO) offers a robust way to build medical reasoning models by rewarding logical, step-by-step explanations.

- Takeaway:

- This post explains how enterprises can fine-tune Large Language Models (LLMs) with reinforcement learning (RL) using GRPO for more structured, explainable AI, demonstrating its application with a medical reasoning use case.

2. The Business Case

- Why should enterprises care?

- Reasoning is critical in high-stakes industries such as healthcare, finance, and law. It provides transparency, explainability, and traceability into how models generate their outputs, which builds trust and supports accountability.

- Why GRPO?

- GRPO delivers advanced reasoning capabilities efficiently. Because it drops the separate value (critic) model that PPO, the algorithm traditionally used for Reinforcement Learning from Human Feedback (RLHF), trains alongside the policy, it cuts compute requirements by nearly half. At the same time, GRPO produces models that self-verify answers, provide step-by-step reasoning, explore multiple problem-solving approaches, and even reflect on their own reasoning process. This democratizes access to reasoning models, enabling their deployment on modest hardware (for example, GPUs with as little as 16 GB of VRAM) and putting sophisticated AI reasoning within reach of organizations of all sizes without enterprise-scale infrastructure.

- Who benefits?

- Data science teams, AI engineers, business leaders, and decision makers seeking to optimize LLMs for complex reasoning tasks.

3. GRPO Deep Dive

- What is GRPO?

- How does GRPO improve reasoning?

3.1. What is GRPO?

GRPO is a reinforcement learning (RL) algorithm designed specifically to improve the reasoning capabilities of LLMs. Unlike traditional supervised fine-tuning (SFT), which teaches the model to predict the next word of reference text, GRPO optimizes the model for specific outcomes, such as correctness, formatting, and other task-specific rewards.

At its core, GRPO:

- Compares multiple model outputs (candidates) per prompt in a batch.

- Assigns rewards based on correctness, formatting, and other predefined metrics.

- Adjusts the model to increase the likelihood of generating better reasoning paths and answers in future iterations.
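For readers who want the math behind these three steps, the GRPO objective is commonly written as below, following the DeepSeekMath paper that introduced the algorithm (outcome-reward form, where every token of output $o_i$ shares the same advantage $\hat{A}_i$). Here $q$ is the prompt, $G$ the number of sampled outputs, $r_i$ the reward of output $o_i$, $\varepsilon$ the clipping range, and $\beta$ the weight of a KL penalty that keeps the policy close to a reference model $\pi_{\text{ref}}$. Implementations such as Unsloth may differ in details, for example in how the KL term is estimated.

$$
\hat{A}_i = \frac{r_i - \operatorname{mean}(r_1, \dots, r_G)}{\operatorname{std}(r_1, \dots, r_G)},
\qquad
\rho_{i,t} = \frac{\pi_\theta(o_{i,t} \mid q, o_{i,<t})}{\pi_{\theta_{\text{old}}}(o_{i,t} \mid q, o_{i,<t})}
$$

$$
\mathcal{J}(\theta) = \mathbb{E}\left[\frac{1}{G}\sum_{i=1}^{G}\frac{1}{|o_i|}\sum_{t=1}^{|o_i|}\Big(\min\big(\rho_{i,t}\hat{A}_i,\ \operatorname{clip}(\rho_{i,t}, 1-\varepsilon, 1+\varepsilon)\,\hat{A}_i\big) - \beta\,\mathbb{D}_{\mathrm{KL}}\big[\pi_\theta \,\|\, \pi_{\text{ref}}\big]\Big)\right]
$$

No value model appears anywhere: the group mean acts as the baseline that a critic would otherwise provide, which is where GRPO's memory savings come from.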

Traditional RL methods such as PPO rely on a separately trained value function (a “critic”) to judge each output. GRPO removes the critic and instead judges each answer relative to the other answers sampled for the same prompt, which is what makes it “group-based”. Training proceeds iteratively:

- Group Sampling: The model simultaneously generates multiple diverse answers for each question.

- Reward Scoring: Each generated answer is evaluated for accuracy, format, and consistency.

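- Group Advantage: Each answer's reward is compared with the average reward of its own group (and scaled by the group's standard deviation). Answers that beat the group average are reinforced; weaker ones are discouraged.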

- Policy Update: The model's policy is updated to favor more logical and structurally sound answers.

- Iterative Refinement: The entire process repeats, progressively refining the model's reasoning.
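The five steps above can be sketched end to end on a toy problem. This is a hypothetical, self-contained illustration, not the notebook's code: the “policy” is a softmax over four fixed candidate answers standing in for an LLM, the reward is 1 for the correct candidate, and the function names (`group_advantages`, `train_toy_grpo`) are made up for this example. The clipping and KL terms of full GRPO are omitted; with a single update per sampled group the probability ratio is 1, so clipping would have no effect anyway.

```python
import math
import random


def group_advantages(rewards, eps=1e-6):
    """Group-relative advantage: (r_i - group mean) / (group std + eps)."""
    mean = sum(rewards) / len(rewards)
    std = math.sqrt(sum((r - mean) ** 2 for r in rewards) / len(rewards))
    return [(r - mean) / (std + eps) for r in rewards]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_toy_grpo(num_candidates=4, correct=2, group_size=8, steps=200, lr=0.5, seed=0):
    """Toy GRPO-style loop on a single prompt (simplified: no clipping, no KL)."""
    rng = random.Random(seed)
    logits = [0.0] * num_candidates
    for _ in range(steps):
        probs = softmax(logits)
        # 1. Group sampling: draw several answers for the same prompt.
        group = rng.choices(range(num_candidates), weights=probs, k=group_size)
        # 2. Reward scoring: 1.0 for the correct answer, 0.0 otherwise.
        rewards = [1.0 if a == correct else 0.0 for a in group]
        # 3. Group advantage: score each answer against its own group.
        advantages = group_advantages(rewards)
        # 4. Policy update: d log softmax(a) / d logits = onehot(a) - probs.
        grad = [0.0] * num_candidates
        for a, adv in zip(group, advantages):
            for k in range(num_candidates):
                indicator = 1.0 if k == a else 0.0
                grad[k] += adv * (indicator - probs[k]) / group_size
        logits = [logit + lr * g for logit, g in zip(logits, grad)]
        # 5. Iterative refinement: the loop repeats with the updated policy.
    return softmax(logits)
```

Calling `train_toy_grpo()` returns the final answer probabilities; the correct candidate's probability climbs from 0.25 toward 1, because within a mixed group only correct samples receive a positive advantage. When every answer in a group earns the same reward, all advantages are zero and nothing is learned from that group, which is one reason reward design matters.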

GRPO is memory efficient and particularly well suited to tasks where correctness can be verified objectively but labeled data is scarce, such as medical Q&A, legal reasoning, and code generation. In essence, it shifts LLM fine-tuning from imitating reference text to optimizing for outcomes. Tools such as Unsloth are making the method more accessible, and it can deliver superior performance on verifiable tasks while improving explainability through explicit reasoning chains.

3.2. How Does GRPO Improve Reasoning?

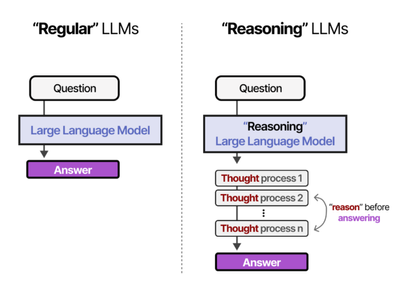

Fig 1: “Regular” vs “Reasoning” LLMs

Medical Reasoning Example

Let’s walk through a practical medical reasoning example to illustrate the difference:

Traditional Fine-Tuning Model Behavior:

Given a patient case, a model trained with traditional supervised fine-tuning (SFT) generates the most likely next word based on patterns in its training data, which can lead to hallucinations. It may produce a diagnosis, but often without structured reasoning or an explanation for its conclusion.

GRPO-Enhanced Model Behavior:

In contrast, a GRPO-trained model is rewarded not only for the correct final diagnosis but also for providing a detailed, reasoned trace:

<reasoning>

The patient presents with chest pain, high blood pressure, and shortness of breath. These symptoms are indicative of cardiovascular issues. Given the elevated blood pressure and the patient's age, the most probable diagnosis is acute coronary syndrome.

</reasoning>

<answer>

Acute Coronary Syndrome

</answer>
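A layout like this is easy to check mechanically, which is what makes it usable as a reward signal. The sketch below is a hypothetical format reward (the name `format_reward` and the exact pattern are assumptions, not the reward function used in the article's notebook): it returns 1.0 only when the completion consists of a non-empty `<reasoning>` block followed by a non-empty `<answer>` block.

```python
import re

# Hypothetical pattern: <reasoning>...</reasoning> then <answer>...</answer>,
# with nothing but whitespace before, between, and after the two blocks.
FORMAT_PATTERN = re.compile(
    r"^\s*<reasoning>\s*(.+?)\s*</reasoning>\s*<answer>\s*(.+?)\s*</answer>\s*$",
    re.DOTALL,
)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion follows the reasoning/answer layout, else 0.0."""
    return 1.0 if FORMAT_PATTERN.match(completion) else 0.0
```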

The model is rewarded for:

- Clinical accuracy (matching the ground-truth diagnosis)

- Providing traceable reasoning in a structured format

- Using medically valid explanations
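The first two criteria can be turned into a single scalar reward per sampled completion. This is a minimal sketch under stated assumptions: exact match after case and whitespace normalization stands in for clinical accuracy, and the weights (`CORRECTNESS_WEIGHT = 2.0`, `FORMAT_WEIGHT = 0.5`) and function names are hypothetical. Judging whether an explanation is medically valid is not something a regex can do, so that criterion is left out here.

```python
import re
from typing import Optional

# Hypothetical weights: a correct diagnosis matters more than tidy layout.
CORRECTNESS_WEIGHT = 2.0
FORMAT_WEIGHT = 0.5

ANSWER_RE = re.compile(r"<answer>\s*(.*?)\s*</answer>", re.DOTALL)
FORMAT_RE = re.compile(
    r"^\s*<reasoning>.+?</reasoning>\s*<answer>.+?</answer>\s*$", re.DOTALL
)


def extract_answer(completion: str) -> Optional[str]:
    """Return the text inside <answer>...</answer>, or None if the tag is missing."""
    match = ANSWER_RE.search(completion)
    return match.group(1) if match else None


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are not penalized."""
    return " ".join(text.lower().split())


def correctness_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the extracted answer matches the ground-truth diagnosis, else 0.0."""
    answer = extract_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if normalize(answer) == normalize(ground_truth) else 0.0


def total_reward(completion: str, ground_truth: str) -> float:
    """Weighted sum of the correctness and format rewards for one completion."""
    formatted = 1.0 if FORMAT_RE.match(completion) else 0.0
    return (
        CORRECTNESS_WEIGHT * correctness_reward(completion, ground_truth)
        + FORMAT_WEIGHT * formatted
    )
```

With these weights, a well-formatted correct diagnosis scores 2.5, a well-formatted wrong one 0.5, and unstructured text 0.0, so within a group the correct, structured answers end up with the highest advantage.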

4. Real-World Outcomes

By fine-tuning with a tailored dataset, a suitable base model, and task-specific reward functions, you can achieve:

- Custom Expertise: The model becomes a specialized, domain-specific assistant, capable of answering questions with a high degree of accuracy and relevance within its designated field.

- Consistent Responses: Enforced formatting ensures that every response includes clear reasoning and a final answer in a predictable format.

- Efficiency: Thanks to Unsloth’s fine-tuning optimizations, a GRPO-trained model that delivers medical suggestions can be built efficiently on a variety of open-source language models.
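Because every response follows the same layout, downstream systems can consume it mechanically. The sketch below uses hypothetical names (`StructuredResponse`, `parse_response`) that are not part of Unsloth or the AMP: it splits a response into its reasoning trace, for example for an audit log, and its final answer, and raises an error on malformed output instead of guessing.

```python
import re
from dataclasses import dataclass


@dataclass
class StructuredResponse:
    """A model response split into its reasoning trace and final answer."""
    reasoning: str
    answer: str


_RESPONSE_RE = re.compile(
    r"<reasoning>\s*(?P<reasoning>.+?)\s*</reasoning>\s*"
    r"<answer>\s*(?P<answer>.+?)\s*</answer>",
    re.DOTALL,
)


def parse_response(text: str) -> StructuredResponse:
    """Parse a reasoning/answer response; raise ValueError if the layout is missing."""
    match = _RESPONSE_RE.search(text)
    if match is None:
        raise ValueError("response lacks <reasoning> and <answer> sections")
    return StructuredResponse(
        reasoning=match.group("reasoning"),
        answer=match.group("answer"),
    )
```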

5. Conclusion

- GRPO offers a powerful approach to developing highly specialized and reliable LLMs:

- Domain-Specific Reasoning Model: A curated dataset (e.g., for medical reasoning) is instrumental in transforming a general-purpose language model into a domain-specific expert.

- Reward Functions: They serve as the model's scorecard, evaluating correctness and format and guiding the model toward better responses.

- Overall Benefit: GRPO is a powerful technique for aligning language models with specific behaviors. It helps turn a general-purpose LLM into an expert system that efficiently provides accurate, detailed answers for organizations working with complex datasets.

6. Resources

- Run this notebook within your environment

- One-click deploy and experiment with the GRPO AMP within your Cloudera AI environment

- If you don’t have access to Cloudera AI, register for a 5-day Cloudera trial and experience this AMP within Cloudera AI: https://www.cloudera.com/products/cloudera-public-cloud-trial.html

- Suggested links: