Understanding basics of HDFS and YARN

Created on 08-30-2018 02:51 PM - edited 08-17-2019 06:35 AM

Hadoop Distributed File System

HDFS is a Java-based file system that provides scalable and reliable data storage, and it was designed to span large clusters of commodity servers. HDFS has demonstrated production scalability of up to 200 PB of storage and a single cluster of 4500 servers, supporting close to a billion files and blocks. When that quantity and quality of enterprise data is available in HDFS, and YARN enables multiple data access applications to process it, Hadoop users can confidently answer questions that eluded previous data platforms.

HDFS is a scalable, fault-tolerant, distributed storage system that works closely with a wide variety of concurrent data access applications, coordinated by YARN. HDFS will “just work” under a variety of physical and systemic circumstances. By distributing storage and computation across many servers, the combined storage resource can grow linearly with demand while remaining economical at every amount of storage.

Take Away

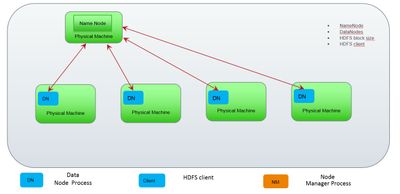

1. HDFS is based on a master/slave architecture, with the Name Node (NN) being the master and the Data Nodes (DN) being the slaves.

2. The Name Node stores only the meta information about the files; the actual data is stored on the Data Nodes.

3. Both the Name Node and the Data Node are processes, not special hardware.

4. The Data Node uses the underlying OS file system to save the data.

5. You need to use an HDFS client to interact with HDFS. The HDFS client always talks to the Name Node for meta info and subsequently talks to the Data Nodes to read/write data. No data IO happens through the Name Node.

6. HDFS clients never send data to the Name Node, hence the Name Node never becomes a bottleneck for any data IO in the cluster.

7. The HDFS client supports a "short-circuit" read feature: when it is enabled and the client is running on a node hosting a Data Node, the client can read the block directly from that node, making the read completely local.

8. To make it even simpler, imagine the HDFS client is a web client and HDFS as a whole is a web service with predefined tasks such as GET, PUT, COPYFROMLOCAL etc.
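The split between metadata and data described in the list above can be sketched with a toy in-memory model. This is only an illustration, not the real HDFS client API: the class names `NameNode`, `DataNode` and `HdfsClient`, the `put`/`get` methods and the round-robin block assignment are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Toy Data Node: holds the actual block bytes (hypothetical model)."""
    name: str
    blocks: dict = field(default_factory=dict)  # block_id -> bytes


@dataclass
class NameNode:
    """Toy Name Node: holds metadata only, never file contents."""
    block_map: dict = field(default_factory=dict)  # path -> [(block_id, dn_name)]

    def get_block_locations(self, path):
        return self.block_map[path]


class HdfsClient:
    """Toy client: asks the Name Node where blocks live, moves bytes via Data Nodes."""

    def __init__(self, namenode, datanodes):
        self.namenode = namenode
        self.datanodes = {dn.name: dn for dn in datanodes}

    def put(self, path, data, block_size):
        names = list(self.datanodes)
        locations = []
        for offset in range(0, len(data), block_size):
            index = offset // block_size
            block_id = f"{path}#blk{index}"
            # Round-robin target choice, purely for illustration.
            target = names[index % len(names)]
            # The bytes go straight to the Data Node.
            self.datanodes[target].blocks[block_id] = data[offset:offset + block_size]
            locations.append((block_id, target))
        # Only the block list and locations are recorded on the Name Node.
        self.namenode.block_map[path] = locations

    def get(self, path):
        parts = []
        # Step 1: metadata lookup on the Name Node.
        for block_id, dn_name in self.namenode.get_block_locations(path):
            # Step 2: data is read from the Data Node that holds the block.
            parts.append(self.datanodes[dn_name].blocks[block_id])
        return b"".join(parts)
```

In this sketch the `NameNode` object never sees a single byte of file content, which mirrors the point that no data IO passes through the Name Node.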

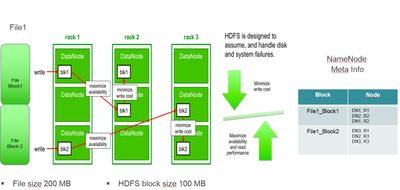

How is a 400 MB file saved on HDFS with an HDFS block size of 100 MB?

The diagram shows how the first block is saved. With replication, each block is saved 3 times, on different Data Nodes.

The meta info saved on the Name Node (a replication factor of 3 is used, hence each block is saved thrice)
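The arithmetic behind this example can be written out as a small sketch. The function names `split_into_blocks` and `storage_summary` are hypothetical helpers introduced here, not part of any Hadoop API.

```python
def split_into_blocks(file_size_mb, block_size_mb):
    """Return the size of each block; the last block may be smaller."""
    sizes = []
    remaining = file_size_mb
    while remaining > 0:
        size = min(block_size_mb, remaining)
        sizes.append(size)
        remaining -= size
    return sizes


def storage_summary(file_size_mb, block_size_mb, replication):
    """Count blocks, block replicas and raw storage used across the cluster."""
    blocks = split_into_blocks(file_size_mb, block_size_mb)
    return {
        "blocks": len(blocks),
        "block_replicas": len(blocks) * replication,
        "raw_storage_mb": file_size_mb * replication,
    }


if __name__ == "__main__":
    # The example from the text: 400 MB file, 100 MB blocks, replication factor 3.
    print(split_into_blocks(400, 100))
    print(storage_summary(400, 100, 3))
```

For the 400 MB file this gives 4 blocks, 12 block replicas and 1200 MB of raw storage spread over the Data Nodes.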

Block Placement Strategy

- Place the first replica somewhere: either on a random node (if the HDFS client is outside the Hadoop/Data Node cluster) or on the local node (if the HDFS client is running on a node where a Data Node is running; the "short-circuit" optimization).

- Place the second replica in a different rack. (This ensures that if the power supply of one rack goes down, the block can still be read from the other rack.)

- Place the third replica in the same rack as the second replica. (This means that when a YARN container is allocated on a given host in that rack, the data can be served from a host in the same rack. Data transfer within a rack is faster than across racks.)

- If there are more replicas – spread them across the rest of the racks.
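The placement rules above can be sketched as a small function. This is a simplified illustration under stated assumptions, not the actual HDFS placement code: the `topology` dictionary, the `place_replicas` name and its parameters are hypothetical, and the sketch assumes at least two racks and at least two nodes in the rack chosen for the second replica.

```python
import random


def place_replicas(topology, replication=3, client_node=None, rng=None):
    """Pick (rack, node) pairs for one block.

    topology: dict mapping rack name -> list of node names (hypothetical input).
    client_node: node the HDFS client runs on, or None if outside the cluster.
    """
    rng = rng or random.Random()
    node_rack = {n: r for r, nodes in topology.items() for n in nodes}
    chosen = []

    # Replica 1: the local node if the client runs on a Data Node, else a random node.
    first = client_node if client_node in node_rack else rng.choice(sorted(node_rack))
    chosen.append(first)

    if replication >= 2:
        # Replica 2: a node in a different rack than replica 1.
        other_racks = sorted(r for r in topology if r != node_rack[first])
        second_rack = rng.choice(other_racks)
        second = rng.choice(topology[second_rack])
        chosen.append(second)

    if replication >= 3:
        # Replica 3: same rack as replica 2, but a different node.
        third = rng.choice([n for n in topology[second_rack] if n != second])
        chosen.append(third)

    # Further replicas: prefer racks that hold no replica yet.
    while len(chosen) < replication:
        remaining = [n for n in sorted(node_rack) if n not in chosen]
        used_racks = {node_rack[n] for n in chosen}
        unused = [n for n in remaining if node_rack[n] not in used_racks]
        chosen.append(rng.choice(unused or remaining))

    return [(node_rack[n], n) for n in chosen]
```

Calling `place_replicas({"rack1": ["a", "b"], "rack2": ["c", "d"]}, client_node="a")` returns `("rack1", "a")` first, followed by the two nodes of `rack2` in random order.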

YARN (Yet Another Resource Negotiator)

"Does it ring a bell? 'Yet Another Hierarchically Organized Oracle' (YAHOO)"

YARN

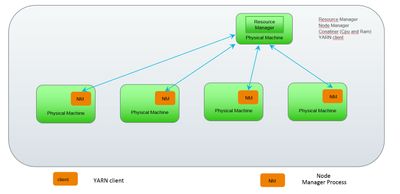

is essentially a system for managing distributed applications. It consists of a central Resource manager (RM),

which arbitrates all available cluster resources, and a per-node Node Manager (NM), which

takes direction from the Resource manager.

The Node manager

is responsible for managing available resources on a single node.

http://hortonworks.com/hadoop/yarn/

Take Away

1. YARN is based on a master/slave architecture, with the Resource Manager being the master and the Node Managers being the slaves.

2. The Resource Manager keeps the meta info about which jobs are running on which Node Manager and how much memory and CPU they consume, and hence has a holistic view of the total CPU and RAM consumption of the whole cluster.

3. Jobs run on the Node Managers and never execute on the Resource Manager. Hence the RM never becomes a bottleneck for any job execution. Both the RM and the NM are processes, not special hardware.

4. A container is a logical abstraction for CPU and RAM.

5. YARN (Yet Another Resource Negotiator) schedules containers (CPU and RAM) over the whole cluster. Hence an end user who needs CPU and RAM in the cluster needs to interact with YARN.

6. While requesting CPU and RAM, you can specify the host on which you need them.

7. To interact with YARN you need to use yarn-client which

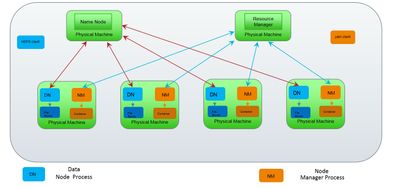

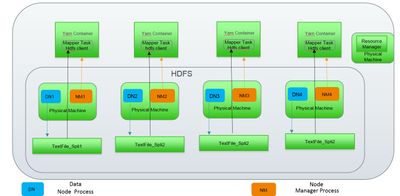
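The bookkeeping role of the Resource Manager can be sketched with a toy model. This is an assumption-laden illustration, not the YARN API: the classes `NodeManager`, `Container` and `ResourceManager`, and the `allocate` and `cluster_usage` methods, are hypothetical names, and the first-fit strategy stands in for the real schedulers.

```python
from dataclasses import dataclass


@dataclass
class NodeManager:
    """Toy Node Manager: tracks free resources on one host."""
    host: str
    free_mem_mb: int
    free_vcores: int


@dataclass(frozen=True)
class Container:
    """A container is just a grant of RAM and CPU on a specific host."""
    host: str
    mem_mb: int
    vcores: int


class ResourceManager:
    """Toy Resource Manager: hands out containers, never runs the job itself."""

    def __init__(self, node_managers):
        self.nodes = {nm.host: nm for nm in node_managers}
        self.allocations = []  # list of (app_id, Container)

    def allocate(self, app_id, mem_mb, vcores, preferred_host=None):
        candidates = list(self.nodes.values())
        if preferred_host in self.nodes:
            # Stable sort: the preferred host is tried first, others keep their order.
            candidates.sort(key=lambda nm: nm.host != preferred_host)
        for nm in candidates:
            if nm.free_mem_mb >= mem_mb and nm.free_vcores >= vcores:
                nm.free_mem_mb -= mem_mb
                nm.free_vcores -= vcores
                container = Container(nm.host, mem_mb, vcores)
                self.allocations.append((app_id, container))
                return container
        return None  # no node has enough free resources

    def cluster_usage(self):
        """Cluster-wide view of consumed (memory in MB, vcores)."""
        mem = sum(c.mem_mb for _, c in self.allocations)
        cores = sum(c.vcores for _, c in self.allocations)
        return mem, cores
```

The sketch falls back to another host when the preferred one is full, which is one possible reading of a host preference; it is a design choice made for the example.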

How HDFS and YARN work in tandem

1. The Name Node and Resource Manager processes are hosted on two different hosts, as they hold key meta information.

2. The Data Node and Node Manager processes are co-located on the same host.

3. A file is saved onto HDFS (Data Nodes). To access the file in a distributed way, one can write a YARN application (MR2, Spark, Distributed Shell, Slider application) using the YARN client, and use the HDFS client to read the data.

4. The distributed application can fetch the file location (meta info from the Name Node) and ask the Resource Manager (YARN) to provide containers on the hosts which hold the file blocks.

5. Do remember the short-circuit optimization provided by HDFS: if the distributed job gets a container on a host which holds the file block and tries to read it, the read will be local and not over the network.

6. The same 400 MB file, if read sequentially, would take 4 seconds (at 100 MB/sec). It can be read in 1 second when the distributed process runs in parallel in 4 different YARN containers (on different Node Managers), each reading one 100 MB block at 100 MB/sec.
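The steps above can be sketched in two small functions: one that picks a host for each block from the block locations, and one that compares sequential and parallel read times. Both `plan_containers` and `read_time_seconds` are hypothetical helpers, and the model assumes every container reads at the same constant throughput with no scheduling or network overhead.

```python
def plan_containers(block_locations):
    """Choose one host per block, preferring hosts not already used.

    block_locations: dict mapping block id -> list of hosts holding a replica
    (the kind of meta info a client would get from the Name Node).
    """
    plan = {}
    used = set()
    for block_id, hosts in block_locations.items():
        # Take the first replica host without a container yet, else reuse one.
        host = next((h for h in hosts if h not in used), hosts[0])
        plan[block_id] = host
        used.add(host)
    return plan


def read_time_seconds(block_sizes_mb, throughput_mb_per_s, parallel):
    """Sequential time is the sum of block times; parallel time is the slowest block."""
    per_block = [size / throughput_mb_per_s for size in block_sizes_mb]
    return max(per_block) if parallel else sum(per_block)


if __name__ == "__main__":
    blocks = [100, 100, 100, 100]  # the 400 MB file with 100 MB blocks
    print(read_time_seconds(blocks, 100, parallel=False))  # sequential read
    print(read_time_seconds(blocks, 100, parallel=True))   # one container per block
```

With four 100 MB blocks at 100 MB/sec, the sequential read takes 4 seconds and the parallel read takes 1 second, matching the numbers in the text.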