- Using Cloudbreak to deploy HDP 2.6 and Spark 2.1 o...


This tutorial walks you through the process of using Cloudbreak to deploy an HDP 2.6 cluster with Spark 2.1. We'll copy and edit the existing hdp-spark-cluster blueprint, which deploys Spark 1.6, to create a new blueprint that installs Spark 2.1. This tutorial is part one of a two-part series. The second tutorial walks you through using Zeppelin to verify the Spark 2.1 installation. You can find that tutorial here: HCC Article

Prerequisites

- You should already have a Cloudbreak v1.14.0 environment running. You can follow this article to create a Cloudbreak instance using Vagrant and VirtualBox: HCC Article

- You should already have updated Cloudbreak to support deploying HDP 2.6 clusters. You can follow this article to enable that functionality: HCC Article

Scope

This tutorial was tested in the following environment:

- Cloudbreak 1.14.4

- AWS EC2

- HDP 2.6

- Spark 2.1

Steps

Create Blueprint

Before we can deploy a Spark 2.1 cluster using Cloudbreak, we need to create a blueprint that specifies Spark 2.1. Cloudbreak ships with 3 blueprints out of the box:

- hdp-small-default: basic HDP cluster with Hive and HBase

- hdp-spark-cluster: basic HDP cluster with Spark 1.6

- hdp-streaming-cluster: basic HDP cluster with Kafka and Storm

We will use the hdp-spark-cluster as our base blueprint and edit it to deploy Spark 2.1 instead of Spark 1.6.

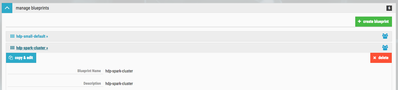

Click on the manage blueprints section of the UI. Click on the hdp-spark-cluster blueprint. You should see something similar to this:

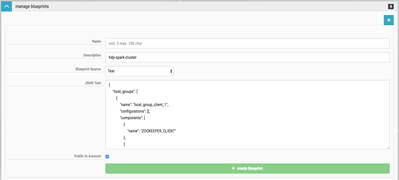

Click on the blue copy & edit button. You should see something similar to this:

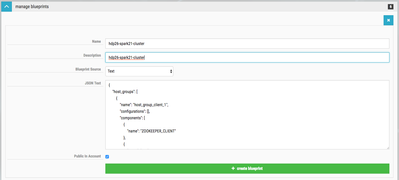

For the Name, enter hdp26-spark21-cluster. This tells us the blueprint is for an HDP 2.6 cluster using Spark 2.1. Enter the same information for the Description. You should see something similar to this:

Now we need to edit the JSON portion of the blueprint. We need to change the Spark 1.6 components to Spark 2.1 components. We don't need to change where they are deployed. The following entries within the JSON are for Spark 1.6:

    "name": "SPARK_CLIENT"
    "name": "SPARK_JOBHISTORYSERVER"
    "name": "SPARK_CLIENT"

We will replace SPARK with SPARK2. These entries should look as follows:

    "name": "SPARK2_CLIENT"
    "name": "SPARK2_JOBHISTORYSERVER"
    "name": "SPARK2_CLIENT"

NOTE: There are two entries for SPARK_CLIENT. Make sure you change both.
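If you would rather script the rename than edit the JSON by hand, the following is a minimal sketch of the same change. It assumes the blueprint has already been loaded into a Python dictionary. The function name `rename_spark_components` and the trimmed `sample` blueprint are illustrative only and are not part of Cloudbreak.

```python
import copy


def rename_spark_components(blueprint):
    """Return a copy of the blueprint with SPARK_* components renamed to SPARK2_*."""
    updated = copy.deepcopy(blueprint)  # leave the caller's blueprint untouched
    for group in updated["host_groups"]:
        for component in group["components"]:
            name = component["name"]
            # "SPARK2_..." names do not start with "SPARK_", so they are skipped.
            if name.startswith("SPARK_"):
                component["name"] = "SPARK2_" + name[len("SPARK_"):]
    return updated


# Trimmed, hypothetical stand-in for the hdp-spark-cluster blueprint.
sample = {
    "host_groups": [
        {"name": "host_group_client_1",
         "components": [{"name": "SPARK_CLIENT"}, {"name": "PIG"}]},
        {"name": "host_group_master_2",
         "components": [{"name": "SPARK_JOBHISTORYSERVER"}, {"name": "SPARK_CLIENT"}]},
    ]
}

renamed = rename_spark_components(sample)
for group in renamed["host_groups"]:
    print(group["name"], [c["name"] for c in group["components"]])
```

Because the loop visits every host group, both SPARK_CLIENT entries are renamed in one pass.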

We are going to add entries for two more components: SPARK2_THRIFTSERVER and LIVY2_SERVER. Both will go on the same node as SPARK2_JOBHISTORYSERVER. Let's add those two entries just below SPARK2_CLIENT in the host_group_master_2 section.

Change the following:

    {
      "name": "SPARK2_JOBHISTORYSERVER"
    },
    {
      "name": "SPARK2_CLIENT"
    },

to this:

    {
      "name": "SPARK2_JOBHISTORYSERVER"
    },
    {
      "name": "SPARK2_CLIENT"
    },
    {
      "name": "SPARK2_THRIFTSERVER"
    },
    {
      "name": "LIVY2_SERVER"
    },
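The same addition can be sketched in Python, assuming the blueprint is held as a dictionary. The helper name `add_spark2_services` and its parameters are hypothetical. Note one difference from the manual edit: this sketch appends the new entries to the end of the component list rather than placing them directly below SPARK2_CLIENT, on the assumption that the order of entries within a host group does not matter.

```python
import copy


def add_spark2_services(blueprint,
                        anchor="SPARK2_JOBHISTORYSERVER",
                        extras=("SPARK2_THRIFTSERVER", "LIVY2_SERVER")):
    """Add the extra components to every host group that contains the anchor."""
    updated = copy.deepcopy(blueprint)
    for group in updated["host_groups"]:
        names = [c["name"] for c in group["components"]]
        if anchor not in names:
            continue
        for extra in extras:
            if extra not in names:  # avoid duplicates if run twice
                group["components"].append({"name": extra})
                names.append(extra)
    return updated


# Trimmed, hypothetical blueprint with only the entries that matter here.
sample = {
    "host_groups": [
        {"name": "host_group_client_1",
         "components": [{"name": "SPARK2_CLIENT"}]},
        {"name": "host_group_master_2",
         "components": [{"name": "SPARK2_JOBHISTORYSERVER"}, {"name": "SPARK2_CLIENT"}]},
    ]
}

extended = add_spark2_services(sample)
print([c["name"] for c in extended["host_groups"][1]["components"]])
```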

We also need to update the blueprint_name to hdp26-spark21-cluster and the stack_version to 2.6. You should have something similar to this:

    "Blueprints": {
      "blueprint_name": "hdp26-spark21-cluster",
      "stack_name": "HDP",
      "stack_version": "2.6"
    }
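For completeness, here is a small sketch of the same metadata update done in Python. The function name `set_blueprint_metadata` is hypothetical, and the starting values in `original` are placeholders: the tutorial does not state what stack_version the base blueprint carries, so the "2.5" below is an assumption used only to show the change.

```python
def set_blueprint_metadata(blueprint, name, stack_version):
    """Return a shallow copy of the blueprint with an updated Blueprints section."""
    updated = dict(blueprint)
    metadata = dict(blueprint.get("Blueprints", {}))
    metadata["blueprint_name"] = name
    metadata["stack_version"] = stack_version
    updated["Blueprints"] = metadata
    return updated


# Placeholder starting point; the "2.5" value is assumed for illustration.
original = {
    "host_groups": [],
    "Blueprints": {
        "blueprint_name": "hdp-spark-cluster",
        "stack_name": "HDP",
        "stack_version": "2.5",
    },
}

updated = set_blueprint_metadata(original, "hdp26-spark21-cluster", "2.6")
print(updated["Blueprints"])
```

The stack_name entry is left as it is, since only the name and version change in this tutorial.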

If you prefer, you can copy and paste the following blueprint JSON:

    {
      "host_groups": [
        {
          "name": "host_group_client_1",
          "configurations": [],
          "components": [
            { "name": "ZOOKEEPER_CLIENT" },
            { "name": "PIG" },
            { "name": "OOZIE_CLIENT" },
            { "name": "HBASE_CLIENT" },
            { "name": "HCAT" },
            { "name": "KNOX_GATEWAY" },
            { "name": "METRICS_MONITOR" },
            { "name": "FALCON_CLIENT" },
            { "name": "TEZ_CLIENT" },
            { "name": "SPARK2_CLIENT" },
            { "name": "SLIDER" },
            { "name": "SQOOP" },
            { "name": "HDFS_CLIENT" },
            { "name": "HIVE_CLIENT" },
            { "name": "YARN_CLIENT" },
            { "name": "METRICS_COLLECTOR" },
            { "name": "MAPREDUCE2_CLIENT" }
          ],
          "cardinality": "1"
        },
        {
          "name": "host_group_master_3",
          "configurations": [],
          "components": [
            { "name": "ZOOKEEPER_SERVER" },
            { "name": "APP_TIMELINE_SERVER" },
            { "name": "TEZ_CLIENT" },
            { "name": "HBASE_MASTER" },
            { "name": "HBASE_CLIENT" },
            { "name": "HDFS_CLIENT" },
            { "name": "METRICS_MONITOR" },
            { "name": "SECONDARY_NAMENODE" }
          ],
          "cardinality": "1"
        },
        {
          "name": "host_group_slave_1",
          "configurations": [],
          "components": [
            { "name": "HBASE_REGIONSERVER" },
            { "name": "NODEMANAGER" },
            { "name": "METRICS_MONITOR" },
            { "name": "DATANODE" }
          ],
          "cardinality": "6"
        },
        {
          "name": "host_group_master_2",
          "configurations": [],
          "components": [
            { "name": "ZOOKEEPER_SERVER" },
            { "name": "ZOOKEEPER_CLIENT" },
            { "name": "PIG" },
            { "name": "MYSQL_SERVER" },
            { "name": "HIVE_SERVER" },
            { "name": "METRICS_MONITOR" },
            { "name": "SPARK2_JOBHISTORYSERVER" },
            { "name": "SPARK2_CLIENT" },
            { "name": "SPARK2_THRIFTSERVER" },
            { "name": "LIVY2_SERVER" },
            { "name": "TEZ_CLIENT" },
            { "name": "HBASE_CLIENT" },
            { "name": "HIVE_METASTORE" },
            { "name": "ZEPPELIN_MASTER" },
            { "name": "HDFS_CLIENT" },
            { "name": "YARN_CLIENT" },
            { "name": "MAPREDUCE2_CLIENT" },
            { "name": "RESOURCEMANAGER" },
            { "name": "WEBHCAT_SERVER" }
          ],
          "cardinality": "1"
        },
        {
          "name": "host_group_master_1",
          "configurations": [],
          "components": [
            { "name": "ZOOKEEPER_SERVER" },
            { "name": "HISTORYSERVER" },
            { "name": "OOZIE_CLIENT" },
            { "name": "NAMENODE" },
            { "name": "OOZIE_SERVER" },
            { "name": "HDFS_CLIENT" },
            { "name": "YARN_CLIENT" },
            { "name": "FALCON_SERVER" },
            { "name": "METRICS_MONITOR" },
            { "name": "MAPREDUCE2_CLIENT" }
          ],
          "cardinality": "1"
        }
      ],
      "Blueprints": {
        "blueprint_name": "hdp26-spark21-cluster",
        "stack_name": "HDP",
        "stack_version": "2.6"
      }
    }
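Before pasting an edited blueprint into Cloudbreak, it can help to confirm that the JSON still parses and that no Spark 1.6 entries were missed. The sketch below is one way to do that. The function `check_blueprint` and the `REQUIRED` set are my own illustrative choices based on the components discussed in this tutorial; they are not a Cloudbreak or Ambari validation API.

```python
import json

# Components this tutorial expects to find somewhere in the blueprint.
REQUIRED = {
    "SPARK2_CLIENT",
    "SPARK2_JOBHISTORYSERVER",
    "SPARK2_THRIFTSERVER",
    "LIVY2_SERVER",
}


def check_blueprint(text):
    """Return a list of problems found in the blueprint JSON text."""
    blueprint = json.loads(text)  # raises ValueError if the JSON is malformed
    names = {
        component["name"]
        for group in blueprint["host_groups"]
        for component in group["components"]
    }
    problems = [
        f"leftover Spark 1.6 component: {name}"
        for name in sorted(names)
        if name.startswith("SPARK_")
    ]
    problems += [f"missing component: {name}" for name in sorted(REQUIRED - names)]
    if blueprint["Blueprints"].get("stack_version") != "2.6":
        problems.append("stack_version is not 2.6")
    return problems


# Trimmed stand-in for the full blueprint, built only for this demonstration.
good = json.dumps({
    "host_groups": [
        {"name": "host_group_master_2",
         "components": [{"name": name} for name in sorted(REQUIRED)]},
    ],
    "Blueprints": {
        "blueprint_name": "hdp26-spark21-cluster",
        "stack_name": "HDP",
        "stack_version": "2.6",
    },
})
print(check_blueprint(good))  # prints []
```

An empty list means none of the checked problems were found. It does not guarantee that Ambari will accept the blueprint.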

Once you have all of the changes in place, click the green create blueprint button.

Create Security Group

We need to create a new security group to use with our cluster. By default, the existing security groups only allow ports 22, 443, and 9443. As part of this tutorial, we will use Zeppelin to test Spark 2.1, and the Zeppelin UI listens on a port outside that default set. To keep things simple, we'll create a new security group that opens all ports to our IP address.

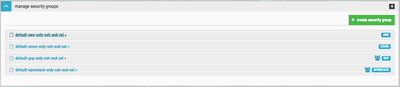

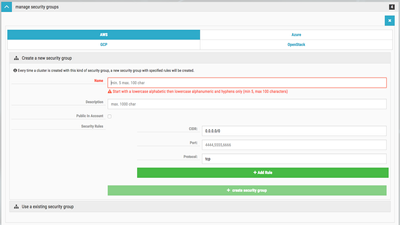

Click on the manage security groups section of the UI. You should see something similar to this:

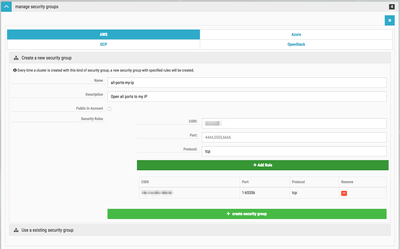

Click on the green create security group button. You should see something similar to this:

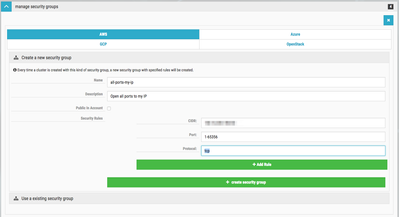

First, select the appropriate cloud platform. I'm using AWS, so that is what I selected. Next, provide a unique name for the security group. I used all-ports-my-ip; you should use something descriptive. Provide a helpful description as well. Now enter your personal IP address in CIDR notation. I am using #.#.#.#/32; your IP address will be different. The /32 suffix limits the rule to that single address. Then enter the port range. There is a known issue in Cloudbreak that prevents you from using 0-65535, so we'll use 1-65535 (65535 is the highest valid TCP port). For the protocol, use tcp. Once you have everything entered, you should see something similar to this:
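The values for the rule can be sanity-checked with a few lines of Python before you type them into the form. This is only a sketch: `build_rule` and the dictionary keys it returns are hypothetical and do not correspond to a Cloudbreak API. The address 203.0.113.10 is a reserved documentation address standing in for your own IP, and the sketch assumes an IPv4 address.

```python
import ipaddress

MAX_PORT = 65535  # highest valid TCP port number


def build_rule(ip, first_port=1, last_port=MAX_PORT, protocol="tcp"):
    """Validate the inputs and return the values to enter for a single-address rule."""
    address = ipaddress.IPv4Address(ip)  # raises ValueError for a malformed address
    if not (1 <= first_port <= last_port <= MAX_PORT):
        raise ValueError(f"invalid port range: {first_port}-{last_port}")
    return {
        "cidr": f"{address}/32",  # /32 matches exactly one IPv4 address
        "ports": f"{first_port}-{last_port}",
        "protocol": protocol,
    }


rule = build_rule("203.0.113.10")
print(rule)
```

The lower bound defaults to 1 rather than 0 to match the workaround described above.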

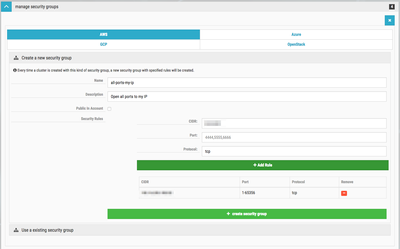

Click the green Add Rule button to add this rule to our security group. You can add multiple rules, but we have everything covered with our single rule. You should see something similar to this:

If everything looks good, click the green create security group button. This will create our new security group. You should see something like this:

Create Cluster

Now that our blueprint has been created and we have a new security group, we can begin building the cluster. Ensure you have selected the appropriate credential for your cloud environment. Then click the green create cluster button. You should see something similar to this:

Give your cluster a descriptive name. I used spark21test, but you can use whatever you like. Select an appropriate cloud region. I'm using AWS and selected US East (N. Virginia), but you can use whatever you like. You should see something similar to this:

Click on the Setup Network and Security button. You should see something similar to this:

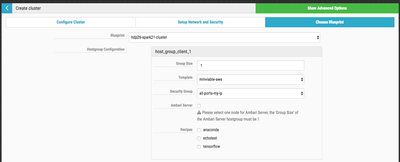

We are going to keep the default options here. Click on the Choose Blueprint button. You should see something similar to this:

Expand the blueprint dropdown menu. You should see the blueprint we created before, hdp26-spark21-cluster. Select the blueprint. You should see something similar to this:

You should notice the new security group is already selected. Cloudbreak did not pick it because we just created it; the instance templates and security groups are simply selected alphabetically by default.

Now we need to select a node on which to deploy Ambari. I typically deploy Ambari on the master1 server. Check the Ambari checkbox on one of the master servers. If everything looks good, click on the green create cluster button. You should see something similar to this:

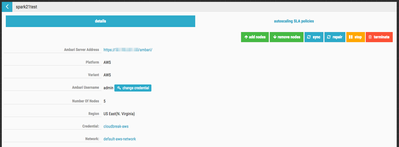

Once the cluster has finished building, you can click on the arrow for the cluster we created to get expanded details. You should see something similar to this:

Verify Versions

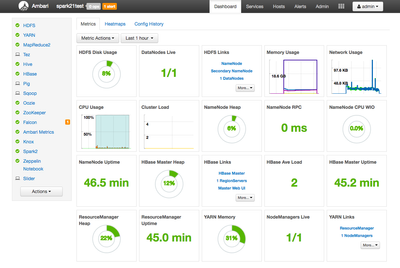

Once the cluster is fully deployed, we can verify the versions of the components. Click on the Ambari link on the cluster details page. Once you log in to Ambari, you should see something similar to this:

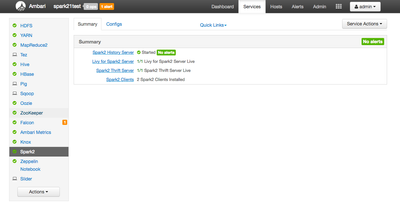

You should notice that Spark2 is shown in the component list. Click on Spark2 in the list. You should see something similar to this:

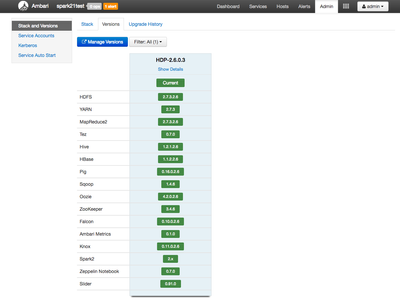

You should notice that both the Spark2 Thrift Server and the Livy2 Server have been installed. Now let's check the overall cluster versions. Click on the Admin link in the Ambari menu and select Stacks and Versions. Then click on the Versions tab. You should see something similar to this:

As you can see, HDP 2.6.0.3 was deployed.

Review

If you have successfully followed along with this tutorial, you should know how to create a new security group and blueprint. The blueprint allows you to deploy HDP 2.6 with Spark 2.1. The security group allows you to access all ports on the cluster from your IP address. Follow along in part 2 of the tutorial series to use Zeppelin to test Spark 2.1.