Community Articles

Windows Share + Nifi + HDFS – A Practical Guide

Created on 04-06-2016 12:16 PM - edited 09-16-2022 01:34 AM

Recently a client asked me how we would go about connecting a Windows share to NiFi and from there to HDFS, or whether it was even possible. This is how to build a working proof of concept to demo the capabilities!

You will need two servers or virtual machines: one for Windows and one for Hadoop + NiFi. I personally elected to use these two:

- The Hortonworks Sandbox: http://hortonworks.com/products/hortonworks-sandbox/

- A Windows 7 VM: https://developer.microsoft.com/en-us/microsoft-edge/tools/vms/linux/

Next, install NiFi on the sandbox. I find this repo the easiest to follow: https://github.com/abajwa-hw/ambari-nifi-service

Make sure the servers can talk to each other directly. I used a bridged network connection in VirtualBox and looked up the IPs in my router's control panel.
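Before going further, it can help to confirm from the Hadoop + NiFi machine that the Windows VM actually answers on the file-sharing port. The sketch below assumes SMB over TCP port 445 (older setups may only expose NetBIOS on port 139); the function name and the IP address in the usage note are hypothetical.

```python
import socket


def port_reachable(host: str, port: int = 445, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Port 445 (SMB over TCP) is an assumption; adjust it for your setup.
    """
    try:
        # create_connection resolves the host and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False
```

For example, `port_reachable("192.168.1.50")` (substitute the Windows VM's IP from your router) should return `True` once the share is set up; `False` usually points at the bridged network, a firewall, or file sharing being disabled on the Windows side.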

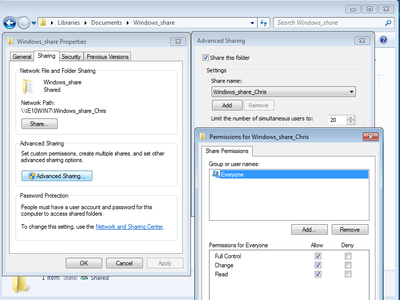

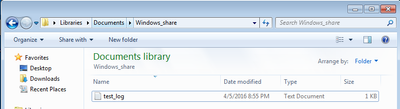

Next, set up a Windows share. This can be combined with Active Directory, but I simply enabled guest accounts and created an account called Nifi_Test. I based the share on these instructions: http://emby.media/community/index.php?/topic/703-how-to-make-unc-folder-shares/ Keep in mind that network user permissions can behave unexpectedly, and the example above enforces read-only permission unless you do additional work.

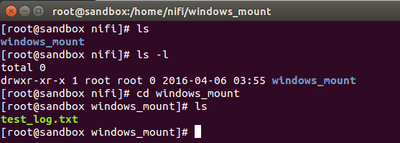

Now you have to mount the share on the Hadoop machine using CIFS + Samba. The instructions I followed are here: http://blog.zwiegnet.com/linux-server/mounting-windows-share-on-centos/
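The mount itself comes down to one `mount -t cifs` call. This sketch builds that command from Python and, instead of putting a password on the command line, points `mount.cifs` at a credentials file. The share path, mount point, credentials file path, and uid/gid values are hypothetical placeholders; `credentials=`, `uid=`, `gid=`, `ro`, and `vers=` are standard `mount.cifs` options, but check `man mount.cifs` on your distribution.

```python
import shlex
import subprocess
from typing import List


def build_cifs_mount_cmd(
    share: str,
    mount_point: str,
    credentials_file: str,
    uid: int,
    gid: int,
    read_only: bool = True,
    smb_version: str = "",
) -> List[str]:
    """Build a `mount -t cifs` command line (it must be run as root)."""
    options = [
        f"credentials={credentials_file}",  # file with username=/password= lines
        f"uid={uid}",  # local owner of the mounted files (e.g. the NiFi service user)
        f"gid={gid}",
    ]
    if read_only:
        options.append("ro")  # matches the read-only share set up above
    if smb_version:
        options.append(f"vers={smb_version}")  # pin the SMB dialect if negotiation fails
    return ["mount", "-t", "cifs", share, mount_point, "-o", ",".join(options)]


def mount_share(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command; only execute it when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)
```

A credentials file for the guest-style account above would hold lines such as `username=Nifi_Test` and `password=...`, and should be readable only by root. Making the mounted files owned by the user NiFi runs as avoids permission surprises when GetFile reads them.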

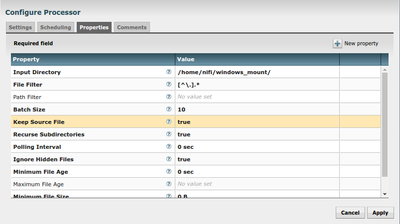

Finally, we can set up NiFi to read the mounted drive and write its contents to HDFS. The GetFile processor retrieves the files, and the PutHDFS processor stores them.

To configure HDFS for the incoming data, I ran the following commands on the sandbox: `su hdfs`, then `hadoop dfs -mkdir /user/nifi` and `hadoop dfs -chmod 777 /user/nifi`.
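If you prefer to script this step, here is a minimal sketch that runs the same setup non-interactively via `sudo -u hdfs` instead of `su`, and uses `hdfs dfs`, the non-deprecated form of `hadoop dfs`. It assumes `sudo` and the `hdfs` client are available on the sandbox; the helper names are hypothetical. As in the article, `chmod 777` is only appropriate for a demo.

```python
import subprocess
from typing import List

HDFS_USER = "hdfs"  # HDFS superuser on the sandbox
TARGET_DIR = "/user/nifi"  # directory PutHDFS writes into


def hdfs_cmd(*args: str) -> List[str]:
    """Prefix an `hdfs dfs` subcommand so it runs as the HDFS superuser."""
    return ["sudo", "-u", HDFS_USER, "hdfs", "dfs", *args]


def setup_commands(target: str = TARGET_DIR) -> List[List[str]]:
    """Commands that create the landing directory and open its permissions."""
    return [
        hdfs_cmd("-mkdir", "-p", target),  # -p: no error if it already exists
        hdfs_cmd("-chmod", "777", target),  # wide open; acceptable for a demo only
    ]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; only execute them when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Calling `run_all(setup_commands(), dry_run=False)` performs the setup. Once the flow has run, `hdfs_cmd("-ls", "/user/nifi")` gives a command that lists the directory to confirm the files landed.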

I elected to keep the source file (GetFile's Keep Source File property) for troubleshooting, so every time the processor ran it would stream the same data in again.

GetFile Configuration

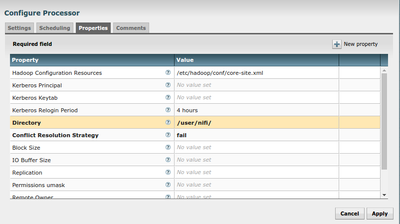

PutHDFS Configuration for the sandbox

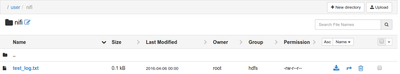

Finally, run the flow and confirm the data lands in HDFS!

Created on 08-26-2016 07:58 PM

Hi @Chris Gambino,

Thanks for sharing this article. I was trying this for one of our clients, but I couldn't get it to work. They gave me a DFS path, and I mounted it in Linux using `mount -t cifs //mydfsdomain.lan/namespaceroot/sharedfolder /mnt -o username=windowsuser`.

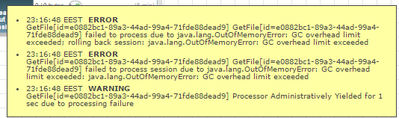

Then I created a directory in HDFS as you did and created the processors in NiFi. But when I start the flow, nothing happens. After 2-3 minutes, GetFile throws a 'GC overhead limit exceeded' error.

What should I do to get this working? I found an answer (https://community.hortonworks.com/questions/36416/how-to-getftp-file-and-putfile-in-to-linux-directo...) to this question that says it is impossible, yet you made it work. I'm confused.

Any suggestions?

Created on 08-26-2016 07:58 PM

It's probably reading the same file repeatedly because it doesn't have permission to delete it. Configure the GetFile processor to run only every 5 seconds. Then, in the flow view, right-click and refresh, and you will probably see a file in the outbound queue. If you don't refresh the view, you may not notice the flow files building up until enough accumulate that you run out of memory.
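To see why the queue can keep growing, here is a toy simulation (not NiFi code) of a GetFile-style poller. When the source file stays in place between polls, every poll picks it up again, so unless the downstream processor drains the queue at least as fast, queued flow files accumulate. The function name, poll counts, and per-poll drain rate are all hypothetical.

```python
from collections import deque
from typing import Deque, List


def simulate_polling(
    source_files: List[str],
    polls: int,
    keep_source: bool,
    drained_per_poll: int = 0,
) -> int:
    """Toy model of a GetFile-style poller feeding a queue.

    Returns how many flow files are still queued after `polls` polls.
    drained_per_poll models how many queued items the downstream
    processor (e.g. PutHDFS) consumes per poll; 0 means it is stopped.
    """
    directory = list(source_files)
    queue: Deque[str] = deque()
    for _ in range(polls):
        # Each poll picks up every file currently in the directory.
        queue.extend(directory)
        if not keep_source:
            directory.clear()  # files were removed after pickup
        # Downstream consumes at most drained_per_poll items this round.
        for _ in range(min(drained_per_poll, len(queue))):
            queue.popleft()
    return len(queue)
```

With downstream stopped, `simulate_polling(["a.xml"], 100, keep_source=True)` leaves 100 queued copies of the same file, while `keep_source=False` leaves just one. A longer polling interval such as the 5 seconds suggested above slows the growth but does not stop it while the file stays in place.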

Created on 08-26-2016 08:26 PM - edited 08-17-2019 12:53 PM

I changed it to run every 5 seconds and refreshed the page, but nothing changed. Here is the error that appeared 2-3 minutes later, after which I stopped the flow.

Created on 08-26-2016 08:31 PM

I entered the inner path within the mounted directory and started the flow, and this time it wrote the XML files to HDFS. So it seems the Recurse Subdirectories property is not working. Were you able to use the Recurse Subdirectories property correctly? I still can't get it to write all the XML files from all subdirectories automatically!
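One way to troubleshoot this is to list the mount point yourself and compare against what GetFile picks up. The sketch below approximates GetFile's Recurse Subdirectories and File Filter behavior with `os.walk` and a regular expression matched against file names; it is a troubleshooting aid, not NiFi's implementation. The default `[^\.].*` mirrors GetFile's default File Filter (skip hidden files); a filter such as `[^\.].*\.xml` for the XML files described above is a hypothetical choice. It also reports directories it cannot read, which on a CIFS mount often points to a permissions problem.

```python
import os
import re
from typing import List


def _report(err: OSError) -> None:
    # os.walk silently skips unreadable directories unless onerror is set;
    # on a CIFS mount this is often a permissions problem worth seeing.
    print(f"cannot read {err.filename}: {err.strerror}")


def list_candidates(input_dir: str, file_filter: str = r"[^\.].*", recurse: bool = True) -> List[str]:
    """Relative paths of files under input_dir whose name fully matches file_filter."""
    pattern = re.compile(file_filter)
    matches: List[str] = []
    for root, _dirs, files in os.walk(input_dir, onerror=_report):
        for name in files:
            if pattern.fullmatch(name):
                matches.append(os.path.relpath(os.path.join(root, name), input_dir))
        if not recurse:
            break  # only the top-level directory, like Recurse Subdirectories = false
    return sorted(matches)
```

If `list_candidates("/mnt", r"[^\.].*\.xml")` finds the nested XML files but GetFile does not, the processor settings are the next place to look; if the listing prints "cannot read" lines, the mount's permissions are the likelier culprit.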