Workaround for RegionServer startup failure after ...

Created on 07-13-2016 11:19 AM - edited 08-17-2019 11:23 AM

On Kerberos-secured clusters managed by Ambari versions prior to 2.0.0, the hdfs.headless.keytab file is deployed on all the nodes in the cluster. From Ambari version 2.0.0 onwards, this keytab file is not deployed by default on every node.

If the Ranger HBase plugin is enabled and set up to write to HDFS, Ambari's RegionServer startup script tries to access the hdfs.headless.keytab file. If the host that the RegionServer runs on does not also have the HDFS client role assigned to it, the hdfs.headless.keytab file is absent and the RegionServer fails to start with an error such as this in Ambari:

```
resource_management.core.exceptions.Fail: Execution of '/usr/bin/kinit -kt /etc/security/keytabs/hdfs.headless.keytab hdfs-examplecluster@KRBEXAMPLE.COM' returned 1. kinit: Key table file '/etc/security/keytabs/hdfs.headless.keytab' not found while getting initial credentials
```
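To see in advance which RegionServer hosts would hit this error, you can check each one for the keytab file. This is a minimal sketch, assuming the default keytab path from the error above, passwordless SSH from the Ambari server, and a host list in /var/tmp/regionservers (one hostname per line); the function name `has_keytab` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: find RegionServer hosts that lack the HDFS headless keytab that
# the start script passes to kinit.
# Assumption: the keytab path is the default one shown in the error message.
KEYTAB=/etc/security/keytabs/hdfs.headless.keytab

# Returns 0 if the keytab file exists on this host, 1 otherwise.
# -f checks existence only, so it works even if the current user cannot read it.
has_keytab() {
  local path="${1:-$KEYTAB}"
  [ -f "$path" ]
}

# Hypothetical usage from the Ambari server. ssh -n stops ssh from consuming
# the loop's stdin; an unreachable host is also reported as MISSING.
#   while IFS= read -r host; do
#     if ssh -n "$host" test -f "$KEYTAB"; then
#       echo "$host: keytab present"
#     else
#       echo "$host: keytab MISSING"
#     fi
#   done < /var/tmp/regionservers
```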

This problem is expected to be resolved with the release of Ambari 2.4.0. Until then, the workaround is to install the HDFS client role on all of your RegionServers. Implementing this workaround through the Ambari Web UI poses two challenges:

- The web UI does not allow you to install only the HDFS client role; it forces you to install all the service clients together.

- The web UI does not allow you to install the client role on all of your RegionServers in a single bulk operation.

The Ambari REST API can be used to deploy the HDFS_CLIENT role on all your RegionServer nodes, even if your KDC admin credentials are not stored in Ambari. A separate Community article describes how to load KDC credentials into an Ambari REST API session; this article uses the same technique.

The cluster is called examplecluster and the Kerberos realm is KRBEXAMPLE.COM. The first node we install the HDFS_CLIENT role on is slavenode01.hdp.example.com. The Ambari administrator username is admin. We run all the curl commands on the Ambari server itself (ambnode), so we can use localhost in the curl URLs and none of the credentials traverse the network.
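For the credential-loading step below, typing the KDC admin password directly into a curl command leaves it in your shell history and in the process list. A minimal sketch of an alternative, assuming bash and a principal and password that contain no double quotes or backslashes (no JSON escaping is done); `kdc_session_payload`, `KDC_PW`, and `SESSION` are hypothetical names, and the JSON shape is copied from the PUT used later in this article.

```shell
#!/usr/bin/env bash
# Sketch: build the session_attributes payload that loads KDC admin
# credentials into an Ambari REST session.
# Assumption: principal and password contain no '"' or '\' (no JSON escaping).
kdc_session_payload() {
  local principal="$1" password="$2"
  printf '[{"session_attributes":{"kerberos_admin":{"principal":"%s","password":"%s"}}}]' \
    "$principal" "$password"
}

# Hypothetical usage: read the password without echoing it, then pipe the
# payload to curl, which reads the request body from stdin via -d @-.
# printf is a bash builtin, so the password never appears as a process argument.
#   read -r -s -p 'KDC admin password: ' KDC_PW; echo
#   kdc_session_payload 'kadmin/admin@KRBEXAMPLE.COM' "$KDC_PW" |
#     curl -H "Cookie: AMBARISESSIONID=${SESSION}" -H 'X-Requested-By: ambari' \
#       -X PUT 'http://localhost:8080/api/v1/clusters/examplecluster' -d @-
```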

- Authenticate as an Ambari administrator and get an Ambari session ID (AMBARISESSIONID).

```
[19:11]:[nl@ambnode:~]$ curl -i -u admin -H 'X-Requested-By: ambari' -X GET 'http://localhost:8080/api/v1/clusters'
Enter host password for user 'admin':
HTTP/1.1 200 OK
X-Frame-Options: DENY
X-XSS-Protection: 1; mode=block
User: admin
Set-Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb;Path=/;HttpOnly
Expires: Thu, 01 Jan 1970 00:00:00 GMT
Content-Type: text/plain
Vary: Accept-Encoding, User-Agent
Content-Length: 242
Server: Jetty(8.1.17.v20150415)

{
  "href" : "http://localhost:8080/api/v1/clusters",
  "items" : [
    {
      "href" : "http://localhost:8080/api/v1/clusters/examplecluster",
      "Clusters" : {
        "cluster_name" : "examplecluster",
        "version" : "HDP-2.4"
      }
    }
  ]
}
```
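Rather than copying the session ID out of the `Set-Cookie` header by hand, you can extract it in the shell. This is a minimal sketch; `extract_session_id` and `SESSION` are hypothetical names, and it assumes the header is spelled exactly as in the output above.

```shell
#!/usr/bin/env bash
# Sketch: pull the AMBARISESSIONID value out of the response headers printed
# by `curl -i`. Reads headers on stdin and prints the first session ID found
# (nothing if there is no such cookie).
extract_session_id() {
  # Strip the CR that ends each HTTP header line, then capture everything
  # between "AMBARISESSIONID=" and the next ';'.
  tr -d '\r' |
    sed -n 's/^Set-Cookie: AMBARISESSIONID=\([^;]*\).*/\1/p' |
    head -n 1
}

# Hypothetical usage (curl prompts for the admin password):
#   SESSION=$(curl -i -u admin -H 'X-Requested-By: ambari' \
#     'http://localhost:8080/api/v1/clusters' | extract_session_id)
#   echo "$SESSION"
```

Alternatively, curl's own cookie-jar options (`-c` to save cookies, `-b` to send them) can carry the session between commands without handling the ID at all.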

- Check the status of the KDC administrator credentials in the session.

```
[19:12]:[nl@ambnode:~]$ curl -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' -H 'X-Requested-By: ambari' -X GET 'http://localhost:8080/api/v1/clusters/examplecluster/services/KERBEROS?fields=Services/attributes/kdc_validation_result,Services/attributes/kdc_validation_failure_details'
{
  "href" : "http://localhost:8080/api/v1/clusters/examplecluster/services/KERBEROS?fields=Services/attributes/kdc_validation_result,Services/attributes/kdc_validation_failure_details",
  "ServiceInfo" : {
    "cluster_name" : "examplecluster",
    "service_name" : "KERBEROS"
  },
  "Services" : {
    "attributes" : {
      "kdc_validation_failure_details" : "Missing KDC administrator credentials.\nThe KDC administrator credentials must be set as a persisted or temporary credential resource.This may be done by issuing a POST to the /api/v1/clusters/:clusterName/credentials/kdc.admin.credential API entry point with the following payload:\n{\n \"Credential\" : {\n \"principal\" : \"(PRINCIPAL)\", \"key\" : \"(PASSWORD)\", \"type\" : \"(persisted|temporary)\"}\n }\n}",
      "kdc_validation_result" : "MISSING_CREDENTIALS"
    }
  }
}
```

- Load the KDC administrator credentials into the session and check again.

```
[19:14]:[nl@ambnode:~]$ curl -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' -H 'X-Requested-By: ambari' -X PUT 'http://localhost:8080/api/v1/clusters/examplecluster' -d '[{"session_attributes":{"kerberos_admin":{"principal":"kadmin/admin@KRBEXAMPLE.COM","password":"XXXXXXXXX"}}}]'
[19:15]:[nl@ambnode:~]$ curl -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' -H 'X-Requested-By: ambari' -X GET 'http://localhost:8080/api/v1/clusters/examplecluster/services/KERBEROS?fields=Services/attributes/kdc_validation_result,Services/attributes/kdc_validation_failure_details'
{
  "href" : "http://localhost:8080/api/v1/clusters/examplecluster/services/KERBEROS?fields=Services/attributes/kdc_validation_result,Services/attributes/kdc_validation_failure_details",
  "ServiceInfo" : {
    "cluster_name" : "examplecluster",
    "service_name" : "KERBEROS"
  },
  "Services" : {
    "attributes" : {
      "kdc_validation_failure_details" : "",
      "kdc_validation_result" : "OK"
    }
  }
}
```

- Create the HDFS_CLIENT component on the node.

```
[19:19]:[nl@ambnode:~]$ curl -H 'X-Requested-By: ambari' -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' -X POST "http://localhost:8080/api/v1/clusters/examplecluster/hosts/slavenode01.hdp.example.com/host_components/HDFS_CLIENT"
```
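The POST above prints nothing when it succeeds, so a failure is easy to miss. A minimal sketch that wraps the POST and prints the HTTP status code instead; `AMBARI_API`, `SESSION`, `host_component_url`, and `create_hdfs_client` are hypothetical names, and we would expect a 2xx code (typically 201 Created) on success.

```shell
#!/usr/bin/env bash
# Sketch: create a host component and report the HTTP status code.
# Assumptions: SESSION holds the AMBARISESSIONID value, and the base URL and
# cluster name match the example in this article.
AMBARI_API='http://localhost:8080/api/v1/clusters/examplecluster'

# Prints the REST URL of a component on a host.
host_component_url() {
  local host="$1" component="$2"
  printf '%s/hosts/%s/host_components/%s\n' "$AMBARI_API" "$host" "$component"
}

# POSTs HDFS_CLIENT for one host and echoes "<host> <http_code>".
create_hdfs_client() {
  local host="$1" code
  code=$(curl -s -o /dev/null -w '%{http_code}' \
    -H 'X-Requested-By: ambari' -H "Cookie: AMBARISESSIONID=${SESSION}" \
    -X POST "$(host_component_url "$host" HDFS_CLIENT)")
  echo "$host $code"
}

# Hypothetical usage:
#   create_hdfs_client slavenode01.hdp.example.com
```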

- Check the Ambari web UI: on the page for that node, the HDFS client is now listed, but with an exclamation mark next to it because the component has been created but not yet installed.

You can add the HDFS_CLIENT component on the rest of the RegionServer nodes with a loop such as the following, where /var/tmp/regionservers lists the nodes that should get the HDFS client role, one per line:

```
# POST one HDFS_CLIENT host component per hostname in /var/tmp/regionservers
while IFS= read -r host; do
  echo "$host"
  curl -H 'X-Requested-By: ambari' -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' \
    -X POST "http://localhost:8080/api/v1/clusters/examplecluster/hosts/${host}/host_components/HDFS_CLIENT"
  echo
done < /var/tmp/regionservers
```

- Install the HDFS_CLIENT component on the node.

```
[19:20]:[nl@ambnode:~]$ curl -H 'X-Requested-By: ambari' -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' -X PUT "http://localhost:8080/api/v1/clusters/examplecluster/hosts/slavenode01.hdp.example.com/host_components/HDFS_CLIENT" -d '[{"RequestInfo": {"context" :"Install HDFS_CLIENT component via REST"}, "Body": {"HostRoles":{"state":"INSTALLED"}}}]'
{
  "href" : "http://localhost:8080/api/v1/clusters/examplecluster/requests/572",
  "Requests" : {
    "id" : 572,
    "status" : "Accepted"
  }
}
```

- Check the Ambari web UI. After a full reload of the page for that host, the exclamation mark next to the HDFS Client entry should be gone.

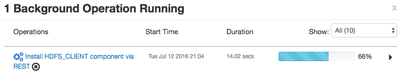

The Ambari background operations view will also show an operation named "Install HDFS_CLIENT component via REST".
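The same check can be made over the REST API by requesting the component's `HostRoles/state` field, which is convenient when verifying many nodes. A minimal sketch, assuming a component that has been created but not installed reports `INIT` and an installed one reports `INSTALLED`; `component_state` and `SESSION` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: print the "state" value from a host component resource read on stdin.
# Only a key spelled exactly "state" matches, so "desired_state" is ignored.
component_state() {
  tr -d '\r' |
    sed -n 's/.*"state"[[:space:]]*:[[:space:]]*"\([A-Z_]*\)".*/\1/p' |
    head -n 1
}

# Hypothetical usage over every host in /var/tmp/regionservers:
#   while IFS= read -r host; do
#     state=$(curl -s -H 'X-Requested-By: ambari' \
#       -H "Cookie: AMBARISESSIONID=${SESSION}" \
#       "http://localhost:8080/api/v1/clusters/examplecluster/hosts/${host}/host_components/HDFS_CLIENT?fields=HostRoles/state" |
#       component_state)
#     echo "$host ${state:-UNKNOWN}"
#   done < /var/tmp/regionservers
```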

If the operation was successful, you can now start the RegionServer on the node. Each of these background operations takes some time because of the KDC operations involved, so if you install the component on several nodes in a loop, it is advisable to pause after each request:

```
# PUT each HDFS_CLIENT component into the INSTALLED state, one host at a time
while IFS= read -r host; do
  echo "$host"
  curl -H 'X-Requested-By: ambari' -H 'Cookie: AMBARISESSIONID=xqptqwi8qs4s13wcikxtu5gnb' \
    -X PUT "http://localhost:8080/api/v1/clusters/examplecluster/hosts/${host}/host_components/HDFS_CLIENT" \
    -d '[{"RequestInfo": {"context" :"Install HDFS_CLIENT component via REST"}, "Body": {"HostRoles":{"state":"INSTALLED"}}}]'
  echo; echo
  # give the KDC operations for this host time to complete
  sleep 20
done < /var/tmp/regionservers
```
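A fixed `sleep 20` may be too short on a slow KDC and wastes time on a fast one. As an alternative, each install request could be waited on until it finishes, using the request id returned by the PUT. A minimal sketch, assuming the request resource exposes `Requests/request_status` with values such as `PENDING`, `QUEUED`, `IN_PROGRESS`, `COMPLETED`, and `FAILED`; `request_id`, `request_status`, `wait_for_request`, `AMBARI_API`, and `SESSION` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: wait for each Ambari request to finish instead of sleeping a fixed time.
# Assumptions: SESSION holds the AMBARISESSIONID value; the request resource
# has a Requests/request_status field.
AMBARI_API='http://localhost:8080/api/v1/clusters/examplecluster'

# Reads a PUT/POST response on stdin; prints the request id, if any.
request_id() {
  tr -d '\r' | sed -n 's/.*"id"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Reads a request resource on stdin; prints its request_status.
request_status() {
  tr -d '\r' | sed -n 's/.*"request_status"[[:space:]]*:[[:space:]]*"\([A-Z_]*\)".*/\1/p' | head -n 1
}

# Polls a request every 5 s until it leaves the pending/running states.
# Returns 1 if no status can be read (e.g. the session has expired).
wait_for_request() {
  local id="$1" status
  while :; do
    status=$(curl -s -H 'X-Requested-By: ambari' -H "Cookie: AMBARISESSIONID=${SESSION}" \
      "${AMBARI_API}/requests/${id}?fields=Requests/request_status" | request_status)
    case "$status" in
      PENDING|QUEUED|IN_PROGRESS) sleep 5 ;;
      '') echo "request ${id}: no status returned" >&2; return 1 ;;
      *) echo "request ${id}: ${status}"; return 0 ;;
    esac
  done
}

# Hypothetical usage (a host whose component is already INSTALLED returns no
# request id, so it is skipped):
#   while IFS= read -r host; do
#     id=$(curl -s -H 'X-Requested-By: ambari' -H "Cookie: AMBARISESSIONID=${SESSION}" \
#       -X PUT "${AMBARI_API}/hosts/${host}/host_components/HDFS_CLIENT" \
#       -d '[{"RequestInfo": {"context" :"Install HDFS_CLIENT component via REST"}, "Body": {"HostRoles":{"state":"INSTALLED"}}}]' |
#       request_id)
#     [ -n "$id" ] && wait_for_request "$id"
#   done < /var/tmp/regionservers
```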

At this point you should find that all HBase components can be started up successfully, with Ranger-based authorization in place.