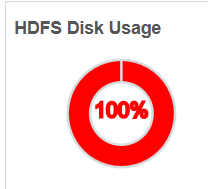

From the Ambari dashboard we can see that HDFS usage is at 100%.

I started with:

hadoop fs -du -h / | grep spark2-history

60.9 G /spark2-history
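A minimal sketch for ranking the top-level HDFS paths by size, plus a rough estimate of raw disk usage. It assumes `hadoop fs -du` is run without `-h`, so the first column is a plain byte count that `sort -n` can order. `hadoop fs -du` reports logical file length, while each block is stored once per replica on the DataNodes, so raw usage is roughly size × replication. Both `top_hdfs_dirs` and `raw_usage_gb` are hypothetical helpers, and the estimate assumes every file uses the same replication factor.

```shell
#!/usr/bin/env bash

# top_hdfs_dirs (hypothetical helper): reads `hadoop fs -du <path>` output
# (byte counts, no -h) on stdin and prints the N largest entries (default 10)
# with the size converted to GiB. The path is taken from the last field, so
# extra columns printed by some Hadoop versions are ignored.
top_hdfs_dirs() {
  local n="${1:-10}"
  sort -k1,1nr | head -n "$n" |
    awk '{ printf "%.1f GiB\t%s\n", $1 / 1073741824, $NF }'
}

# raw_usage_gb (hypothetical helper): rough raw DataNode usage for a logical
# size in GB, assuming a single replication factor for all files.
#   $1 = logical size in GB (e.g. 60.9), $2 = replication factor (e.g. 3)
raw_usage_gb() {
  awk -v size="$1" -v rep="$2" 'BEGIN { printf "%.1f\n", size * rep }'
}

# Usage on a cluster node:
#   hadoop fs -du / | top_hdfs_dirs 10
#   raw_usage_gb 60.9 "$(hdfs getconf -confKey dfs.replication)"
```

With a replication factor of 3, `raw_usage_gb 60.9 3` prints `182.7`, which is the figure to compare against cluster-level numbers such as "DFS Used" in `hdfs dfsadmin -report`, since those count every replica.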

* Another way to see what is taking up space is described here: https://community.hortonworks.com/articles/16846/how-to-identify-what-is-consuming-space-in-hdfs.htm...

Does this mean that spark2-history takes up 60.9G of HDFS?

If not, how can we find out what needs to be cleaned from HDFS?
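A minimal sketch for listing Spark event logs older than a given date, so they can be reviewed before deleting anything. It assumes the usual `hadoop fs -ls` layout (permissions, replication, owner, group, size, date as YYYY-MM-DD, time, path) and that paths contain no spaces. `old_history_files` is a hypothetical helper, and the 30-day cutoff in the usage line is only an example.

```shell
#!/usr/bin/env bash

# old_history_files (hypothetical helper): reads `hadoop fs -ls` output on
# stdin and prints size and path of regular files whose modification date
# is earlier than CUTOFF (YYYY-MM-DD). The "Found N items" header and
# directories are skipped. ISO dates compare correctly as strings.
old_history_files() {
  local cutoff="$1"
  awk -v cutoff="$cutoff" \
    'NF >= 8 && $1 ~ /^-/ && $6 < cutoff { print $5 "\t" $8 }'
}

# Usage (GNU date assumed):
#   hadoop fs -ls /spark2-history | old_history_files "$(date -d '30 days ago' +%Y-%m-%d)"
# After reviewing the list, a file can be removed with `hadoop fs -rm <path>`.
# Deleted files go to the HDFS trash unless -skipTrash is given, so they keep
# using HDFS space until the trash is emptied.
```

As an alternative to manual cleanup, the Spark History Server can purge old event logs itself through the `spark.history.fs.cleaner.enabled`, `spark.history.fs.cleaner.interval` and `spark.history.fs.cleaner.maxAge` properties.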

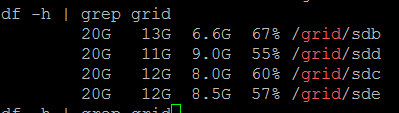

We have 5 worker machines,

and each worker has the following:

Michael-Bronson