Accumulo TServer failing after Wizard Install completion

Labels: Apache Accumulo, Apache Ambari

Created on 08-25-2016 09:51 AM - edited 08-19-2019 03:36 AM

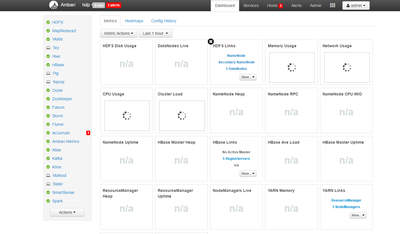

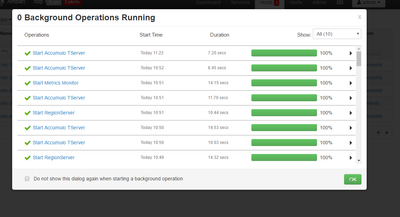

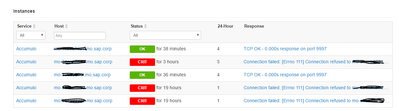

So I've just completed the Ambari install wizard (Ambari 2.2, HDP 2.3.4) and started the services. Everything is running and working now (not at first), except that 3 of the 5 hosts have the same issue with the Accumulo TServer. It starts up with the solid green check (photo attached) but stops after just a few seconds and shows the alert icon.

The only information I found about the error is "Connection failed: [Errno 111] Connection refused to mo-31aeb5591.mo.sap.corp:9997", which is shown under the TServer process alert. I checked my SSH connection and it's fine, and all of the other services installed fine, so I'm not sure what exactly that means. I posted the logs below; the .err file just says no such file or directory, and the .out file is empty. Are there other locations with more verbose error logs about this? As I said, I am new to the environment.

Any general troubleshooting advice for initial issues after installation or links to guides that may help would also be very appreciated.

    [root@xxxxxxxxxxxx ~]# cd /var/log/accumulo/
    [root@xxxxxxxxxxxx accumulo]# ls
    accumulo-tserver.err  accumulo-tserver.out
    [root@xxxxxxxxxxxx accumulo]# cat accumulo-tserver.err
    /usr/hdp/current/accumulo-client/bin/accumulo: line 4: /usr/hdp/2.3.4.0-3485/etc/default/accumulo: No such file or directory
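Errno 111 (connection refused) usually means nothing is listening on the target port, which would be consistent with the TServer process exiting shortly after start rather than with an SSH or network problem. A minimal sketch for confirming that on an affected host, assuming the `ss` utility is available; the helper name `port_listening` is hypothetical, and 9997 is the port taken from the alert text:

```shell
# Hypothetical helper: reads `ss -ltn` output on stdin and succeeds
# if some socket is in LISTEN state on the given TCP port.
port_listening() {
    awk -v suffix=":$1" '
        $1 == "LISTEN" && $4 ~ (suffix "$") { found = 1 }
        END { exit !found }
    '
}

# Only run the live check where ss exists.
if command -v ss >/dev/null 2>&1; then
    if ss -ltn | port_listening 9997; then
        echo "something is listening on 9997"
    else
        echo "nothing is listening on 9997 (TServer is probably not running)"
    fi
fi
```

If nothing is listening, the alert is a symptom rather than the cause, and the startup logs are the place to look.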

Created 09-05-2016 12:05 PM

I ended up just removing the TServer service from the nodes that were failing. Not really a solution, but the other ones still work fine. Thanks for your help!

Created 08-30-2016 07:11 AM

This is the /usr/hdp/current/accumulo-client/bin/accumulo file that gives the error. It is identical on both nodes: the one whose TServer crashes and one whose TServer seems to work fine.

    #!/bin/sh
    . /usr/hdp/2.3.4.0-3485/etc/default/hadoop
    . /usr/hdp/2.3.4.0-3485/etc/default/accumulo

    # Autodetect JAVA_HOME if not defined
    if [ -e /usr/libexec/bigtop-detect-javahome ]; then
      . /usr/libexec/bigtop-detect-javahome
    elif [ -e /usr/lib/bigtop-utils/bigtop-detect-javahome ]; then
      . /usr/lib/bigtop-utils/bigtop-detect-javahome
    fi

    export HDP_VERSION=${HDP_VERSION:-2.3.4.0-3485}
    export ACCUMULO_OTHER_OPTS="-Dhdp.version=${HDP_VERSION} ${ACCUMULO_OTHER_OPTS}"
    exec /usr/hdp/2.3.4.0-3485//accumulo/bin/accumulo.distro "$@"

So I could change the line ". /usr/hdp/2.3.4.0-3485/etc/default/accumulo", but if that's what is causing my problems, then all of the nodes running it should have the same problem.

I will compare their configs a little more to see if there's a difference somewhere.
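Since the wrapper is identical on both nodes, the difference is more likely in what it sources than in the script itself. A minimal sketch that lists the files a wrapper sources with `. /path` but that are missing on the current host; running it on a working node and on a failing node would show whether /usr/hdp/2.3.4.0-3485/etc/default/accumulo exists on one and not the other. The function name `missing_sourced_files` is hypothetical:

```shell
# Hypothetical helper: print every absolute path that the given script
# sources with ". /path" and that does not exist on this host.
missing_sourced_files() {
    # Extract the path from lines of the form ". /absolute/path".
    sed -n 's|^[[:space:]]*\.[[:space:]][[:space:]]*\(/[^[:space:]]*\).*$|\1|p' "$1" |
    while IFS= read -r sourced_path; do
        [ -e "$sourced_path" ] || printf '%s\n' "$sourced_path"
    done
}

# Wrapper path taken from the error message in this thread.
accumulo_wrapper=/usr/hdp/current/accumulo-client/bin/accumulo
if [ -r "$accumulo_wrapper" ]; then
    missing_sourced_files "$accumulo_wrapper"
fi
```

Empty output means every sourced file is present; any path printed is one the shell would report as "No such file or directory".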

Created 08-30-2016 05:39 PM

@Jonathan Hurley, no... this is expected. From a fresh HDP2.3.4 install I just did:

    # ls -l /usr/hdp/2.3.6.0-3796/etc/default/accumulo
    -rw-r--r--. 1 root root 975 Jun 23 16:12 /usr/hdp/2.3.6.0-3796/etc/default/accumulo

    # yum whatprovides /usr/hdp/2.3.6.0-3796/etc/default/accumulo
    accumulo_2_3_6_0_3796-1.7.0.2.3.6.0-3796.el6.x86_64 : Apache Accumulo is a distributed Key Value store based on Google's BigTable
    Repo        : HDP-2.3
    Matched from:
    Filename    : /usr/hdp/2.3.6.0-3796/etc/default/accumulo

    accumulo_2_3_6_0_3796-1.7.0.2.3.6.0-3796.el6.x86_64 : Apache Accumulo is a distributed Key Value store based on Google's BigTable
    Repo        : installed
    Matched from:
    Other       : Provides-match: /usr/hdp/2.3.6.0-3796/etc/default/accumulo
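The output above shows that the file is owned by the versioned Accumulo package, so on a node where it is missing, the package itself may be absent or incomplete. A minimal sketch for checking that, assuming an RPM-based host; the helper name `hdp_package_name` is hypothetical, and the naming rule is only inferred from the one package name shown above (2.3.6.0-3796 becomes accumulo_2_3_6_0_3796):

```shell
# Hypothetical helper: derive the versioned package name from a component
# name and an HDP build string, following the pattern in the yum output.
hdp_package_name() {
    printf '%s_%s\n' "$1" "$(printf '%s' "$2" | tr '.-' '__')"
}

# Build string taken from the failing path in this thread.
pkg=$(hdp_package_name accumulo 2.3.4.0-3485)
echo "$pkg"

# Where rpm exists and the package is installed, list files the package
# should own but that are missing from disk.
if command -v rpm >/dev/null 2>&1 && rpm -q "$pkg" >/dev/null 2>&1; then
    rpm -V "$pkg" | grep '^missing' || echo "no missing files reported for $pkg"
fi
```

If files are reported missing, reinstalling the package (for example with `yum reinstall`) would be the usual next step.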

Created 08-31-2016 08:51 AM

Thank you for both responses, @Josh Elser. At least I know it's not some obvious mistake I made. I will reinstall the service and see what happens.

Created 08-31-2016 12:58 PM

Ah, OK. I had misread the directory as /usr/hdp/current; indeed, etc and other directories are allowed in /usr/hdp/2.3.6.0-3796.

Ambari actually doesn't do anything with those directories. We only manipulate the symlinks in /usr/hdp/current and /etc.

I'm curious whether this is a problem with 2.3.4.0.
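Because Ambari only manages the symlinks, one thing worth comparing between a working and a failing node is which version directory each link under /usr/hdp/current actually resolves to. A minimal sketch, assuming GNU `readlink -f` is available; `link_version` is a hypothetical helper name:

```shell
# Hypothetical helper: print the version directory that a symlink
# resolves into, e.g. 2.3.4.0-3485.
# Usage: link_version LINK [ROOT]   (ROOT defaults to /usr/hdp)
link_version() {
    lv_root=$(readlink -f "${2:-/usr/hdp}") || return 1
    lv_target=$(readlink -f "$1") || return 1
    case $lv_target in
        "$lv_root"/*)
            # Strip the root prefix, then keep only the first path component.
            lv_rest=${lv_target#"$lv_root"/}
            printf '%s\n' "${lv_rest%%/*}"
            ;;
        *)
            return 1
            ;;
    esac
}

# Show the resolved version for every Accumulo link that exists.
for link in /usr/hdp/current/accumulo-*; do
    if [ -L "$link" ]; then
        printf '%s -> %s\n' "$link" "$(link_version "$link")"
    fi
done
```

A link that points at a version directory other than the one hard-coded in the wrapper script would be worth a closer look.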

Created 02-13-2017 12:20 AM

I'm getting the same error after installation.

I grepped the /var/log/accumulo/tserver_hostname.log and found a report of:

ERROR: Exception while checking mount points, halting process java.io.FileNotFoundException: /proc/mounts (Too many files open)

After looking at the open files, I discovered 136K open files for java and 106K for jsvc. Given that I set a descriptor limit of 20K, I think this might be my problem.

    $> lsof | awk '{print $1}' | sort | uniq -c | sort -n -k1
    ...
    106000 jsvc
    136000 java

I'm digging into this now too. This cluster has no jobs running, so I'm surprised to see so many open files...
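One caveat with the count above: plain `lsof` output also lists entries that are not file descriptors (memory-mapped libraries, the working directory, and so on) and, depending on the lsof version, may repeat entries per thread, so the totals can overstate real descriptor usage. A minimal sketch that reads the per-process numbers from /proc instead, assuming a Linux host; the function names and the `pgrep` pattern are hypothetical:

```shell
# Number of file descriptors currently open in process PID.
# Needs to run as root or as the owner of the process.
fd_count() {
    ls "/proc/$1/fd" 2>/dev/null | wc -l
}

# Soft "Max open files" limit that applies to process PID.
soft_fd_limit() {
    awk '/^Max open files/ { print $4 }' "/proc/$1/limits"
}

# Report usage for processes whose command line mentions tserver.
# The pattern is an assumption; adjust it to match the local process names.
for pid in $(pgrep -f 'tserver' 2>/dev/null); do
    printf 'pid %s: %s open, soft limit %s\n' \
        "$pid" "$(fd_count "$pid")" "$(soft_fd_limit "$pid")"
done
```

Comparing the open count with the limit for the TServer process itself shows whether that process is the one running out of descriptors.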
