- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

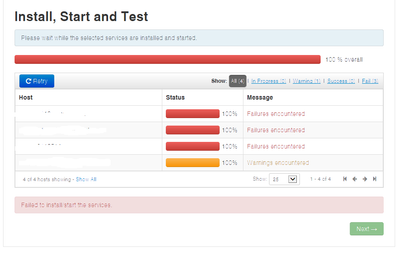

Ambari failed to install services

Created on 12-04-2017 04:04 PM - edited 08-17-2019 08:10 PM

I'm trying to install an HDP cluster and am running into installation issues. Currently my installation breaks at a certain point and throws the following error.

Any ideas how to fix this?

stderr: /var/lib/ambari-agent/data/errors-1108.txt

Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/OOZIE/4.0.0.2.0/package/scripts/oozie_client.py", line 71, in <module>
    OozieClient().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 367, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/OOZIE/4.0.0.2.0/package/scripts/oozie_client.py", line 33, in install
    self.install_packages(env)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 803, in install_packages
    name = self.format_package_name(package['name'])
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 538, in format_package_name
    raise Fail("Cannot match package for regexp name {0}. Available packages: {1}".format(name, self.available_packages_in_repos))
resource_management.core.exceptions.Fail: Cannot match package for regexp name oozie_${stack_version}. Available packages: ['accumulo', 'accumulo-conf-standalone', 'accumulo-source', 'accumulo_2_6_3_0_235-conf-standalone', 'accumulo_2_6_3_0_235-source', 'atlas-metadata', 'atlas-metadata-falcon-plugin', 'atlas-metadata-hive-plugin', 'atlas-metadata-sqoop-plugin', 'atlas-metadata-storm-plugin', 'atlas-metadata_2_6_3_0_235-sqoop-plugin', 'atlas-metadata_2_6_3_0_235-storm-plugin', 'datafu', 'druid', 'falcon', 'falcon-doc', 'falcon_2_6_3_0_235-doc', 'flume', 'flume-agent', 'flume_2_6_3_0_235', 'flume_2_6_3_0_235-agent', 'hadoop', 'hadoop-client', 'hadoop-conf-pseudo', 'hadoop-doc', 'hadoop-hdfs', 'hadoop-hdfs-datanode', 'hadoop-hdfs-fuse', 'hadoop-hdfs-journalnode', 'hadoop-hdfs-namenode', 'hadoop-hdfs-secondarynamenode', 'hadoop-hdfs-zkfc', 'hadoop-httpfs', 'hadoop-httpfs-server', 'hadoop-libhdfs', 'hadoop-mapreduce', 'hadoop-mapreduce-historyserver', 'hadoop-source', 'hadoop-yarn', 'hadoop-yarn-nodemanager', 'hadoop-yarn-proxyserver', 'hadoop-yarn-resourcemanager', 'hadoop-yarn-timelineserver', 
'hadoop_2_6_3_0_235-conf-pseudo', 'hadoop_2_6_3_0_235-doc', 'hadoop_2_6_3_0_235-hdfs-datanode', 'hadoop_2_6_3_0_235-hdfs-fuse', 'hadoop_2_6_3_0_235-hdfs-journalnode', 'hadoop_2_6_3_0_235-hdfs-namenode', 'hadoop_2_6_3_0_235-hdfs-secondarynamenode', 'hadoop_2_6_3_0_235-hdfs-zkfc', 'hadoop_2_6_3_0_235-httpfs', 'hadoop_2_6_3_0_235-httpfs-server', 'hadoop_2_6_3_0_235-mapreduce-historyserver', 'hadoop_2_6_3_0_235-source', 'hadoop_2_6_3_0_235-yarn-nodemanager', 'hadoop_2_6_3_0_235-yarn-proxyserver', 'hadoop_2_6_3_0_235-yarn-resourcemanager', 'hadoop_2_6_3_0_235-yarn-timelineserver', 'hadooplzo', 'hadooplzo-native', 'hadooplzo_2_6_3_0_235', 'hadooplzo_2_6_3_0_235-native', 'hbase', 'hbase-doc', 'hbase-master', 'hbase-regionserver', 'hbase-rest', 'hbase-thrift', 'hbase-thrift2', 'hbase_2_6_3_0_235-doc', 'hbase_2_6_3_0_235-master', 'hbase_2_6_3_0_235-regionserver', 'hbase_2_6_3_0_235-rest', 'hbase_2_6_3_0_235-thrift', 'hbase_2_6_3_0_235-thrift2', 'hive', 'hive-hcatalog', 'hive-hcatalog-server', 'hive-jdbc', 'hive-metastore', 'hive-server', 'hive-server2', 'hive-webhcat', 'hive-webhcat-server', 'hive2', 'hive2-jdbc', 'hive_2_6_3_0_235-hcatalog-server', 'hive_2_6_3_0_235-metastore', 'hive_2_6_3_0_235-server', 'hive_2_6_3_0_235-server2', 'hive_2_6_3_0_235-webhcat-server', 'hue', 'hue-beeswax', 'hue-common', 'hue-hcatalog', 'hue-oozie', 'hue-pig', 'hue-server', 'kafka', 'knox', 'knox_2_6_3_0_235', 'livy', 'livy2', 'livy2_2_6_3_0_235', 'livy_2_6_3_0_235', 'mahout-doc', 'mahout_2_6_3_0_235-doc', 'oozie-client', 'oozie-common', 'oozie-sharelib', 'oozie-sharelib-distcp', 'oozie-sharelib-hcatalog', 'oozie-sharelib-hive', 'oozie-sharelib-hive2', 'oozie-sharelib-mapreduce-streaming', 'oozie-sharelib-pig', 'oozie-sharelib-spark', 'oozie-sharelib-sqoop', 'oozie-webapp', 'phoenix', 'phoenix_2_6_3_0_235', 'pig', 'ranger-admin', 'ranger-atlas-plugin', 'ranger-hbase-plugin', 'ranger-hdfs-plugin', 'ranger-hive-plugin', 'ranger-kafka-plugin', 'ranger-kms', 'ranger-knox-plugin', 
'ranger-solr-plugin', 'ranger-storm-plugin', 'ranger-tagsync', 'ranger-usersync', 'ranger-yarn-plugin', 'ranger_2_6_3_0_235-admin', 'ranger_2_6_3_0_235-kms', 'ranger_2_6_3_0_235-knox-plugin', 'ranger_2_6_3_0_235-solr-plugin', 'ranger_2_6_3_0_235-storm-plugin', 'ranger_2_6_3_0_235-tagsync', 'ranger_2_6_3_0_235-usersync', 'shc', 'shc_2_6_3_0_235', 'slider', 'spark', 'spark-master', 'spark-python', 'spark-worker', 'spark-yarn-shuffle', 'spark2', 'spark2-master', 'spark2-python', 'spark2-worker', 'spark2-yarn-shuffle', 'spark2_2_6_3_0_235', 'spark2_2_6_3_0_235-master', 'spark2_2_6_3_0_235-python', 'spark2_2_6_3_0_235-worker', 'spark_2_6_3_0_235', 'spark_2_6_3_0_235-master', 'spark_2_6_3_0_235-python', 'spark_2_6_3_0_235-worker', 'spark_llap', 'spark_llap_2_6_3_0_235', 'sqoop', 'sqoop-metastore', 'sqoop_2_6_3_0_235', 'sqoop_2_6_3_0_235-metastore', 'storm', 'storm-slider-client', 'storm_2_6_3_0_235', 'storm_2_6_3_0_235-slider-client', 'superset', 'superset_2_6_3_0_235', 'tez', 'tez_hive2', 'zeppelin', 'zeppelin_2_6_3_0_235', 'zookeeper', 'zookeeper-server', 'zookeeper_2_6_3_0_235-server', 'R', 'R-core', 'R-core-devel', 'R-devel', 'R-java', 'R-java-devel', 'compat-readline5', 'epel-release', 'fping', 'ganglia-debuginfo', 'ganglia-devel', 'ganglia-gmetad', 'ganglia-gmond', 'ganglia-gmond-modules-python', 'ganglia-web', 'hadoop-lzo', 'hadoop-lzo-native', 'libRmath', 'libRmath-devel', 'libconfuse', 'libganglia', 'libgenders', 'lua-rrdtool', 'lucidworks-hdpsearch', 'lzo', 'lzo-debuginfo', 'lzo-devel', 'lzo-minilzo', 'nagios', 'nagios-debuginfo', 'nagios-devel', 'nagios-plugins', 'nagios-plugins-debuginfo', 'nagios-www', 'openblas', 'openblas-devel', 'openblas-openmp', 'openblas-static', 'openblas-threads', 'pdsh', 'perl-Crypt-DES', 'perl-Net-SNMP', 'perl-rrdtool', 'python-rrdtool', 'rrdtool', 'rrdtool-debuginfo', 'rrdtool-devel', 'ruby-rrdtool', 'snappy', 'snappy-devel', 'tcl-rrdtool', 'accumulo_2_6_3_0_235', 'atlas-metadata_2_6_3_0_235', 
'atlas-metadata_2_6_3_0_235-falcon-plugin', 'atlas-metadata_2_6_3_0_235-hive-plugin', 'bigtop-jsvc', 'datafu_2_6_3_0_235', 'druid_2_6_3_0_235', 'falcon_2_6_3_0_235', 'hadoop_2_6_3_0_235', 'hadoop_2_6_3_0_235-client', 'hadoop_2_6_3_0_235-hdfs', 'hadoop_2_6_3_0_235-libhdfs', 'hadoop_2_6_3_0_235-mapreduce', 'hadoop_2_6_3_0_235-yarn', 'hbase_2_6_3_0_235', 'hdp-select', 'hive2_2_6_3_0_235', 'hive2_2_6_3_0_235-jdbc', 'hive_2_6_3_0_235', 'hive_2_6_3_0_235-hcatalog', 'hive_2_6_3_0_235-jdbc', 'hive_2_6_3_0_235-webhcat', 'kafka_2_6_3_0_235', 'mahout', 'mahout_2_6_3_0_235', 'oozie', 'pig_2_6_3_0_235', 'ranger_2_6_3_0_235-atlas-plugin', 'ranger_2_6_3_0_235-hbase-plugin', 'ranger_2_6_3_0_235-hdfs-plugin', 'ranger_2_6_3_0_235-hive-plugin', 'ranger_2_6_3_0_235-kafka-plugin', 'ranger_2_6_3_0_235-yarn-plugin', 'spark2_2_6_3_0_235-yarn-shuffle', 'spark_2_6_3_0_235-yarn-shuffle', 'tez_2_6_3_0_235', 'tez_hive2_2_6_3_0_235', 'zookeeper_2_6_3_0_235', 'extjs']

stdout: /var/lib/ambari-agent/data/output-1108.txt

2017-12-04 10:48:30,428 - Stack Feature Version Info: Cluster Stack=2.6, Command Stack=None, Command Version=None -> 2.6
2017-12-04 10:48:30,429 - Using hadoop conf dir: /usr/hdp/2.6.3.0-235/hadoop/conf
2017-12-04 10:48:30,430 - Group['livy'] {}
2017-12-04 10:48:30,431 - Group['spark'] {}
2017-12-04 10:48:30,431 - Group['hdfs'] {}
2017-12-04 10:48:30,432 - Group['zeppelin'] {}
2017-12-04 10:48:30,432 - Group['hadoop'] {}
2017-12-04 10:48:30,432 - Group['users'] {}
2017-12-04 10:48:30,432 - Group['knox'] {}
2017-12-04 10:48:30,433 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,434 - User['infra-solr'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,435 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,436 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,437 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users'], 'uid': None}
2017-12-04 10:48:30,438 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,439 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,440 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,441 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,442 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,443 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,444 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,445 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users'], 'uid': None}
2017-12-04 10:48:30,446 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users'], 'uid': None}
2017-12-04 10:48:30,447 - User['zeppelin'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['zeppelin', 'hadoop'], 'uid': None}
2017-12-04 10:48:30,448 - User['livy'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,449 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,450 - User['druid'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,451 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users'], 'uid': None}
2017-12-04 10:48:30,452 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,453 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs'], 'uid': None}
2017-12-04 10:48:30,454 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,455 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,456 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,457 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2017-12-04 10:48:30,458 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2017-12-04 10:48:30,460 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2017-12-04 10:48:30,465 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if
2017-12-04 10:48:30,465 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'create_parents': True, 'mode': 0775, 'cd_access': 'a'}
2017-12-04 10:48:30,466 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2017-12-04 10:48:30,468 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2017-12-04 10:48:30,469 - call['/var/lib/ambari-agent/tmp/changeUid.sh hbase'] {}
2017-12-04 10:48:30,477 - call returned (0, '1002')
2017-12-04 10:48:30,478 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1002'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}
2017-12-04 10:48:30,483 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase 1002'] due to not_if
2017-12-04 10:48:30,484 - Group['hdfs'] {}
2017-12-04 10:48:30,484 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hdfs']}
2017-12-04 10:48:30,485 - FS Type:
2017-12-04 10:48:30,485 - Directory['/etc/hadoop'] {'mode': 0755}
2017-12-04 10:48:30,502 - File['/usr/hdp/2.6.3.0-235/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2017-12-04 10:48:30,503 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}
2017-12-04 10:48:30,520 - Repository['HDP-2.6-repo-2'] {'append_to_file': False, 'base_url': 'http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.6.3.0', 'action': ['create'], 'components': ['HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-2', 'mirror_list': None}
2017-12-04 10:48:30,529 - File['/etc/yum.repos.d/ambari-hdp-2.repo'] {'content': '[HDP-2.6-repo-2]\nname=HDP-2.6-repo-2\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.6.3.0\n\npath=/\nenabled=1\ngpgcheck=0'}
2017-12-04 10:48:30,530 - Writing File['/etc/yum.repos.d/ambari-hdp-2.repo'] because contents don't match
2017-12-04 10:48:30,530 - Repository['HDP-UTILS-1.1.0.21-repo-2'] {'append_to_file': True, 'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/centos6', 'action': ['create'], 'components': ['HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'ambari-hdp-2', 'mirror_list': None}
2017-12-04 10:48:30,534 - File['/etc/yum.repos.d/ambari-hdp-2.repo'] {'content': '[HDP-2.6-repo-2]\nname=HDP-2.6-repo-2\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.6.3.0\n\npath=/\nenabled=1\ngpgcheck=0\n[HDP-UTILS-1.1.0.21-repo-2]\nname=HDP-UTILS-1.1.0.21-repo-2\nbaseurl=http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/centos6\n\npath=/\nenabled=1\ngpgcheck=0'}
2017-12-04 10:48:30,534 - Writing File['/etc/yum.repos.d/ambari-hdp-2.repo'] because contents don't match
2017-12-04 10:48:30,535 - Package['unzip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2017-12-04 10:48:30,735 - Skipping installation of existing package unzip
2017-12-04 10:48:30,735 - Package['curl'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2017-12-04 10:48:30,860 - Skipping installation of existing package curl
2017-12-04 10:48:30,860 - Package['hdp-select'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2017-12-04 10:48:30,989 - Skipping installation of existing package hdp-select
2017-12-04 10:48:30,991 - The repository with version 2.6.3.0-235 for this command has been marked as resolved. It will be used to report the version of the component which was installed
2017-12-04 10:48:31,270 - Package['zip'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2017-12-04 10:48:31,591 - Skipping installation of existing package zip
2017-12-04 10:48:31,592 - Package['extjs'] {'retry_on_repo_unavailability': False, 'retry_count': 5}
2017-12-04 10:48:31,718 - Skipping installation of existing package extjs
2017-12-04 10:48:31,719 - Command repositories: HDP-2.6-repo-2, HDP-UTILS-1.1.0.21-repo-2
2017-12-04 10:48:31,719 - Applicable repositories: HDP-2.6-repo-2, HDP-UTILS-1.1.0.21-repo-2
2017-12-04 10:48:31,720 - Looking for matching packages in the following repositories: HDP-2.6-repo-2, HDP-UTILS-1.1.0.21-repo-2
2017-12-04 10:48:33,715 - No package found for oozie_${stack_version}(oozie_(\d|_)+$)
2017-12-04 10:48:33,716 - The repository with version 2.6.3.0-235 for this command has been marked as resolved. It will be used to report the version of the component which was installed

Command failed after 1 tries

Created 12-06-2017 04:26 PM

Using the previous release, 2.6.2, worked for me.

Created 12-04-2017 04:27 PM

The Oozie package names in the list of available packages look wrong: they are missing the version suffix. Try installing without Oozie first.
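To illustrate the point about the missing version suffix, here is a minimal sketch that applies the pattern shown in the stdout log (`oozie_(\d|_)+$`) to a handful of names copied from the "Available packages" list. The function name `find_versioned_packages` and the shortened list are assumptions for illustration only; this is not Ambari's actual code.

```python
import re

# A small subset of the "Available packages" list from the stderr output.
AVAILABLE = [
    "hadoop", "hadoop_2_6_3_0_235", "oozie", "oozie-client",
    "oozie-common", "oozie-webapp", "zookeeper_2_6_3_0_235",
]


def find_versioned_packages(base_name, available):
    """Return packages named <base_name>_<digits and underscores>.

    Hypothetical helper: it only mirrors the shape of the pattern printed
    in the log, e.g. oozie_(\\d|_)+$ for base_name "oozie".
    """
    pattern = re.compile(re.escape(base_name) + r"_(\d|_)+$")
    return [pkg for pkg in available if pattern.match(pkg)]


if __name__ == "__main__":
    print(find_versioned_packages("hadoop", AVAILABLE))  # ['hadoop_2_6_3_0_235']
    print(find_versioned_packages("oozie", AVAILABLE))   # []
```

With these inputs, a versioned name is found for hadoop but none for oozie, which is consistent with the "No package found" line in the log.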

Created 12-05-2017 11:15 AM

That didn't work either. I got the same error with the next service.

Created 12-04-2017 08:44 PM

Can you copy and paste the contents of your /etc/yum.repos.d? The filename /etc/yum.repos.d/ambari-hdp-2.repo doesn't look correct. You should see something like this:

# ls -al /etc/yum.repos.d/
total 56
drwxr-xr-x. 2 root root 4096 Oct 19 13:13 .
......
-rw-r--r-- 1 root root 306 Oct 19 13:04 ambari.repo
-rw-r--r--. 1 root root 575 Aug 30 21:34 hdp.repo
-rw-r--r-- 1 root root 128 Oct 19 13:13 HDP.repo
-rw-r--r-- 1 root root 151 Oct 19 13:13 HDP-UTILS.repo

Please correct that and retry

Created 12-05-2017 08:42 AM

The output from /etc/yum.repos.d looks like this:

drwxr-xr-x. 2 root root 4096 Dec 4 09:24 .
drwxr-xr-x. 141 root root 12288 Dec 5 03:11 ..
-rw-r--r-- 1 root root 308 Dec 4 10:48 ambari-hdp-2.repo
-rw-r--r-- 1 root root 306 Dec 4 08:43 ambari.repo
-rw-r--r--. 1 root root 1991 Nov 27 07:33 CentOS-Base.repo
-rw-r--r--. 1 root root 647 Mar 28 2017 CentOS-Debuginfo.repo
-rw-r--r--. 1 root root 289 Mar 28 2017 CentOS-fasttrack.repo
-rw-r--r--. 1 root root 630 Mar 28 2017 CentOS-Media.repo
-rw-r--r--. 1 root root 7989 Mar 28 2017 CentOS-Vault.repo
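The listing shows the file name but not what the file contains. As a rough sketch, the repo definitions that the stdout log reports Ambari writing to ambari-hdp-2.repo can be parsed with Python's standard `configparser` to see which repository IDs and base URLs are in play. The helper name `repo_base_urls` is an assumption, and the text is copied from the log rather than read from disk.

```python
import configparser

# Content of /etc/yum.repos.d/ambari-hdp-2.repo as reported in the stdout log.
REPO_TEXT = """[HDP-2.6-repo-2]
name=HDP-2.6-repo-2
baseurl=http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.6.3.0

path=/
enabled=1
gpgcheck=0
[HDP-UTILS-1.1.0.21-repo-2]
name=HDP-UTILS-1.1.0.21-repo-2
baseurl=http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/centos6

path=/
enabled=1
gpgcheck=0
"""


def repo_base_urls(text):
    """Map each repository ID in a yum .repo file to its baseurl.

    Hypothetical helper for inspection only; it does not contact the repo.
    """
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return {section: parser[section]["baseurl"] for section in parser.sections()}


if __name__ == "__main__":
    for repo_id, url in repo_base_urls(REPO_TEXT).items():
        print(repo_id, "->", url)
```

To inspect the real file on an agent host, the same function could be given the text read from /etc/yum.repos.d/ambari-hdp-2.repo.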

Created 05-22-2018 01:59 PM

I can understand Lukas' issue with "*-2" named .repo files.

My install is erroring out and giving me no clues, no breadcrumbs to follow. All my /var/lib/ambari-agent/data/errors* log files are either 0 or 86 in length, the latter containing:

"Server considered task failed and automatically aborted it."

This is on CentOS 7.4 with Ambari 2.6.1.5. When I installed with an ambari-hdp.repo file, Ambari complained and duplicated it as ambari-hdp-1.repo.

Justin
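For anyone wanting to check their own agent directory the same way, the following is a minimal sketch that groups the errors* files by size so the non-empty ones stand out. The function name `group_error_files_by_size` is made up for this example; only the directory path comes from the post above.

```python
from collections import defaultdict
from pathlib import Path


def group_error_files_by_size(directory):
    """Return {size_in_bytes: [file names]} for files matching errors*.

    Hypothetical helper; it only reads file metadata, not file contents.
    """
    groups = defaultdict(list)
    for path in sorted(Path(directory).glob("errors*")):
        if path.is_file():
            groups[path.stat().st_size].append(path.name)
    return dict(groups)


if __name__ == "__main__":
    data_dir = "/var/lib/ambari-agent/data"  # path taken from the post
    for size, names in sorted(group_error_files_by_size(data_dir).items()):
        print(f"{size} bytes: {len(names)} file(s), e.g. {names[0]}")
```

Any size group other than the two described in the post would point to a file worth reading in full.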

Created 01-25-2018 06:35 AM

Lukas Müller, could you please explain how you resolved this issue?

Created 01-25-2018 07:48 AM

@chris obia There was an issue with the repository. Using the former release worked for me.

Created 02-13-2018 09:45 AM

@Lukas Muller:

I'm also stuck on the same issue while trying to install 2.6.4.0. In the background it actually installs everything and then breaks at the same point as yours. I just want to know how you reverted to 2.6.2.0. Did you do a manual cleanup, or did you directly try 2.6.2.0, which then cleaned up and installed the 2.6.2.0 version? If it was manual, could you provide the steps? Thank you, I appreciate your reply.