Basics unclear about external table and hdfs file relation

Labels: Apache Hadoop, Apache Hive, Apache Sqoop

Created on 05-06-2016 10:19 AM - edited 08-19-2019 01:59 AM

Stack : Installed HDP-2.3.2.0-2950 using Ambari 2.1

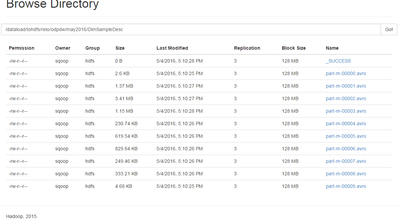

I imported a table from MS SQL Server into HDFS using Sqoop. I ran into permission issues along the way, but was able to proceed with the help of the community. The corresponding files on HDFS are shown in the image above. What I want to achieve:

- Create an external table in Hive using the above files

- Dropping this table later and recreating it, say using Parquet, shouldn't cause any data loss or errors

- Preferably, store the Hive table at a custom location but this is NOT mandatory

Accordingly, I tried to create the table :

CREATE EXTERNAL TABLE DimSampleDesc (
  SmapiName_ver varchar(30),
  SmapiColName varchar(35),
  ChartType int,
  X_Indexet int,
  Y_Indexet int,
  X_Tick varchar(20),
  Y_Tick varchar(20),
  X_TickRange varchar(40),
  X_TickRangeFrom varchar(20),
  X_TickRangeTom varchar(20),
  Y_TickRange varchar(40),
  Y_TickRangeFrom varchar(20),
  Y_TickRangeTom varchar(20),
  IndexCount int,
  X_IndexCount int,
  Y_IndexCount int,
  X_Symbol varchar(10),
  X_SymbolName varchar(40),
  X_SymbolDescr varchar(40),
  Y_Symbol varchar(10),
  Y_SymbolName varchar(40),
  Y_SymbolDescr varchar(40),
  SmapiName varchar(30),
  Incorrect_Ver_FL boolean
)
COMMENT 'EXTERNAL, ORC table'
STORED AS ORC
LOCATION '/dataload/tohdfs/reio/odpdw/may2016/DimSampleDesc';

The error below made me question my understanding of 'location'. I assumed it is the read/source location, but Hive now appears to be trying to write there (I think so!). If that is the case, how do I specify the source location for the external table?

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask. MetaException(message:java.security.AccessControlException: Permission denied: user=hive, access=WRITE, inode="/dataload/tohdfs/reio/odpdw/may2016/DimSampleDesc":sqoop:hdfs:drwxr-xr-x

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.check(FSPermissionChecker.java:319)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:219)

at org.apache.hadoop.hdfs.server.namenode.FSPermissionChecker.checkPermission(FSPermissionChecker.java:190)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1771)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPermission(FSDirectory.java:1755)

at org.apache.hadoop.hdfs.server.namenode.FSDirectory.checkPathAccess(FSDirectory.java:1729)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkAccess(FSNamesystem.java:8285)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.checkAccess(NameNodeRpcServer.java:1914)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.checkAccess(ClientNamenodeProtocolServerSideTranslatorPB.java:1443)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2137)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2133)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2131)

Created 05-06-2016 11:27 AM

Keeping the imported table as-is and transforming it into another format (ORC, Parquet, etc.) is the preferred way. You keep your data, there is no need to import again if something unplanned happens, and once you have decided how to handle it you can drop the imported table. (You can also import directly into Hive as ORC; there is a guide on HCC that explains how.) Regarding the second question, my example used a managed table (stored at /apps/hive/warehouse). If you wish to store tables elsewhere, you can create another external table, provide the location, and write into it.
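
As a rough sketch of that approach (not a tested recipe for this cluster): one external table points at the files Sqoop wrote, a second external table at a custom location holds the ORC copy, and an INSERT ... SELECT does the conversion. The table names `DimSampleDesc_staging` and `DimSampleDesc_orc`, the path `/custom/location/DimSampleDesc_orc`, and the `STORED AS AVRO` clause are all assumptions for illustration. Use whatever format the Sqoop import actually produced, and list every column rather than the three shown here.

```sql
-- Sketch only: table names, the custom path, and the Avro format are assumptions.
-- Only three of the original columns are shown; a real table would list all of them.

-- 1. Staging table over the files Sqoop already wrote. No data is moved or copied.
CREATE EXTERNAL TABLE DimSampleDesc_staging (
  SmapiName_ver STRING,
  ChartType INT,
  Incorrect_Ver_FL BOOLEAN
)
STORED AS AVRO
LOCATION '/dataload/tohdfs/reio/odpdw/may2016/DimSampleDesc';

-- 2. Target table at a custom location, stored as ORC.
CREATE EXTERNAL TABLE DimSampleDesc_orc (
  SmapiName_ver STRING,
  ChartType INT,
  Incorrect_Ver_FL BOOLEAN
)
STORED AS ORC
LOCATION '/custom/location/DimSampleDesc_orc';

-- 3. Convert: read from the staging table, write ORC files into the target location.
INSERT OVERWRITE TABLE DimSampleDesc_orc
SELECT SmapiName_ver, ChartType, Incorrect_Ver_FL
FROM DimSampleDesc_staging;

-- 4. Dropping an external table removes only the metadata; the files stay on HDFS.
DROP TABLE DimSampleDesc_orc;
```

The user running these statements (here presumably `hive`) needs write access to the target location, and in this thread's setup apparently also to the staging location, since the CREATE in the question failed on exactly that check.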

Created 05-09-2016 11:12 AM

I think I have found the root cause: the .avsc file is created in the tmp directory, which results in an error when it is moved. I will put this in a separate post and resume this thread once that is solved.

Created 05-09-2016 01:05 PM

Please try what I suggested: replace VARCHAR with STRING in the Hive declaration. Also, make sure the number and types of the fields match those in your DB. I believe that your Avro tables are correct, but Hive cannot read the files using your table definition. It would also be a good idea to test everything on a smaller table, if you have one, that covers each distinct type you use, in your case string, int and boolean. And, by the way, Hive is not using the .avsc file.
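
A minimal sketch of that suggestion, using the column names from the original question with every VARCHAR replaced by STRING. The table name `DimSampleDesc_str` and the `STORED AS AVRO` clause are assumptions (the latter based on the Avro files mentioned in this thread), and the statement presumes the earlier permission problem on the location has been resolved.

```sql
-- Sketch only: DimSampleDesc_str and STORED AS AVRO are assumptions.
-- Column names and order follow the original declaration; VARCHAR(n) becomes STRING.
CREATE EXTERNAL TABLE DimSampleDesc_str (
  SmapiName_ver STRING,
  SmapiColName STRING,
  ChartType INT,
  X_Indexet INT,
  Y_Indexet INT,
  X_Tick STRING,
  Y_Tick STRING,
  X_TickRange STRING,
  X_TickRangeFrom STRING,
  X_TickRangeTom STRING,
  Y_TickRange STRING,
  Y_TickRangeFrom STRING,
  Y_TickRangeTom STRING,
  IndexCount INT,
  X_IndexCount INT,
  Y_IndexCount INT,
  X_Symbol STRING,
  X_SymbolName STRING,
  X_SymbolDescr STRING,
  Y_Symbol STRING,
  Y_SymbolName STRING,
  Y_SymbolDescr STRING,
  SmapiName STRING,
  Incorrect_Ver_FL BOOLEAN
)
STORED AS AVRO
LOCATION '/dataload/tohdfs/reio/odpdw/may2016/DimSampleDesc';

-- Quick check that Hive can actually read the files with this definition.
SELECT SmapiName_ver, ChartType, Incorrect_Ver_FL
FROM DimSampleDesc_str
LIMIT 5;
```

If the SELECT returns rows with sensible values in the string, int and boolean columns, the definition matches the files; if not, compare the field count and types against the source table.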
