Best NiFi Heap usage performance for Large Server

Labels: Apache NiFi

Created on 06-10-2018 11:35 PM - edited 09-16-2022 06:19 AM

So I have 4 740xd Servers with 80 Threads on each and 384GB of ram each.

How should I best handle heap for each nifi VM on the server?

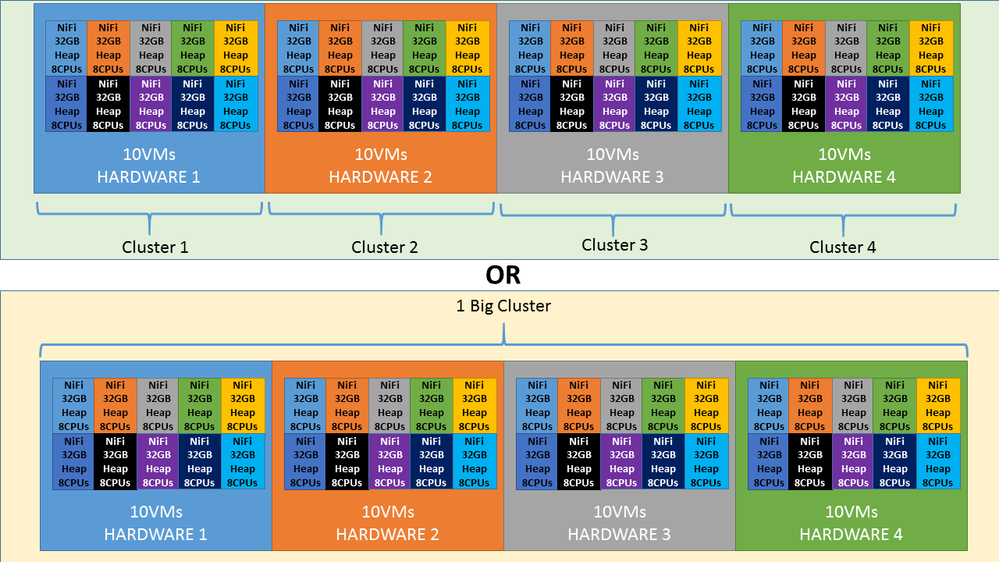

Option 1: 1 VM per server with 80 CPUs, with NiFi's heap set to 300 GB of RAM, and the 4 NiFi instances clustered.

Option 2: 10 VMs per server with 8 CPUs each, with NiFi's heap set to 32 GB of RAM on each VM. These NiFi instances would also be clustered, both within each virtualized 740 and across the 4 hardware 740s.

Note: all the files going through these NiFi instances are smaller than 4 GB, and 90% of them are under 10 MB.

Also, what generation should I use for Java garbage collection?

Attached are two pictures explaining my options.

John

Created 06-11-2018 01:47 PM

Many factors go into determining the correct hardware configuration. Most of them come from the size of your data, the amount of data, and the specific processors used in your dataflow and how they are configured.

What I can tell you is running NiFi with a 300 GB configured heap is probably not the best idea. At a heap of that size, even partial garbage collection events could result in considerable stop-the-world times.

I would tend to lean more towards the smaller VMs to make better use of your hardware resources, but again that is based on very little knowledge of your specifics. It is best to stand up a small VM like the one you described and perform some load testing on your specific dataflows to determine how much data each VM could potentially process, then scale from there to determine how many nodes you will actually need.

Also keep in mind that the memory used by NiFi's dataflows can and often does extend beyond just heap. Make sure you do not allocate too much memory to each VM's heap, which may result in server issues due to insufficient memory for the OS and non-heap-related processing. (For example: think along the lines of OS-level socket queues, externally issued commands, and any scripting-based processors you may use.)
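As a rough sketch of where this is configured: NiFi's heap is set through JVM arguments in bootstrap.conf. The 16g value below is purely illustrative and is not a recommendation from this thread, and the `java.arg.2` / `java.arg.3` indices are assumed to match the stock NiFi 1.x file, so check your own copy before editing.

```properties
# conf/bootstrap.conf (illustrative values only)
# Initial and maximum JVM heap. Leave headroom on the VM for the OS,
# socket buffers, and any externally executed commands or scripts.
java.arg.2=-Xms16g
java.arg.3=-Xmx16g
```

Load testing on your own dataflows is the only reliable way to pick the actual number.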

NiFi clusters in the range of 40 nodes are fairly uncommon; however, NiFi does not put a restriction on the number of nodes you can have in a cluster. Just make sure that as you increase the number of nodes, you adjust the cluster node properties to maintain cluster stability. Most specifically: nifi.cluster.node.connection.timeout (60 secs or higher), nifi.cluster.node.read.timeout (60 secs or higher), and nifi.cluster.protocol.heartbeat.interval (20 secs or higher).
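For reference, those three properties live in nifi.properties on every node. The fragment below is a minimal sketch using the lower bounds mentioned above; larger clusters may need higher values, which is something to confirm through testing.

```properties
# conf/nifi.properties (starting points for a large cluster, set on every node)
nifi.cluster.node.connection.timeout=60 secs
nifi.cluster.node.read.timeout=60 secs
nifi.cluster.protocol.heartbeat.interval=20 secs
```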

As far as GC goes, the default G1GC has proven to be a good performer. I have heard of some rare corner cases that can cause stability issues, and users have resolved them by commenting out the G1GC line in NiFi's bootstrap.conf file and just going with the default GC in the latest versions of Java.
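A sketch of what commenting out that line looks like. The argument index (`java.arg.13`) is an assumption based on the stock NiFi 1.x bootstrap.conf and may differ in your version, so look for the line containing `-XX:+UseG1GC` rather than relying on the number.

```properties
# conf/bootstrap.conf
# Commented out so the JVM falls back to its own default collector.
#java.arg.13=-XX:+UseG1GC
```

A NiFi restart is needed for bootstrap.conf changes to take effect.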

Hope this information was useful for you,

Matt

Created 06-11-2018 01:21 AM

Any ideas?

Created 06-11-2018 01:22 AM

any ideas as well?

Created 10-29-2018 05:27 PM

Can you explain (or maybe point to references for) this statement: "Also keep in mind that the memory used by NiFi's dataflows can and often does extend beyond just heap"?

I'm experiencing this. I set my heap to 80% of total memory, yet the monitoring team reported that total memory usage has reached 95-98%. My heap allocation is 8 GB - 24 GB out of 32 GB total memory. I can't seem to find a clear explanation of NiFi's high memory utilization aside from this one.

Thanks,

Bobby

Created 10-29-2018 06:36 PM

Some processors may be designed to utilize memory outside of the JVM.

Some of the scripting processors, like ExecuteProcess or ExecuteStreamCommand, are good examples. They call a process or script external to NiFi. Those externally executed commands will have a memory footprint of their own.

Listen-type processors like ListenTCP or ListenUDP are another example. These have memory footprints both inside and outside the NiFi JVM heap space. These processors can be configured with a socket buffer, which is created outside of heap space.

Thanks,

Matt