Best cluster configuration

Created on 02-13-2016 02:07 PM - edited 09-16-2022 03:04 AM

Hi all,

I'm new to Hadoop and I'm currently working on a project using HDP. I have an OVH server with the following configuration:

- CPU: 4 CPUs x Intel(R) Xeon(R) CPU E3-1231 v3 @ 3.40GHz
- RAM: 32 GB
- Storage: 2 TB
- Hypervisor: ESXi

My question is about the best partitioning scheme and the number of nodes. Would 4 nodes (1 NameNode and 3 DataNodes), each with 1 CPU, 8 GB of RAM and a 500 GB HDD, be good enough, at least for development? I'm working with data from a mid-sized retailer.
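The per-node numbers in the question come from dividing the server evenly by four. A minimal sketch of that arithmetic, assuming an equal split, treating 2 TB as 2000 GB and ignoring the memory and disk the ESXi host itself needs (so real per-VM figures will be slightly lower); the `Resources` type and `split_evenly` helper are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpus: int
    ram_gb: int
    disk_gb: int


def split_evenly(host: Resources, vm_count: int) -> Resources:
    """Per-VM share when the host is divided equally (rounded down).

    Hypervisor overhead is NOT subtracted here (assumption for illustration).
    """
    return Resources(
        cpus=host.cpus // vm_count,
        ram_gb=host.ram_gb // vm_count,
        disk_gb=host.disk_gb // vm_count,
    )


host = Resources(cpus=4, ram_gb=32, disk_gb=2000)  # 2 TB taken as 2000 GB
print(split_evenly(host, 4))  # Resources(cpus=1, ram_gb=8, disk_gb=500)
```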

Thanks.

Created 02-13-2016 02:19 PM

**Assumption**

This is a lab environment, and no performance testing will be done.

You have one server and are planning to carve out 4 machines, so I assume you are not expecting high performance from this setup.

You can have 1 master and 3 worker/DataNodes. In your case, you can also pick one of the DataNodes and install the remaining master services on it, i.e. mix 1 DataNode with master components. This is only appropriate for sandbox/lab environments.

Don't install everything. Start with HDFS, MapReduce, YARN, ZooKeeper and Hive, then add other components later as you need them.
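One way to write down the layout described above (one master, three workers, one worker also hosting the leftover master services, and only the starter services) is a simple host-to-component map that can be checked before clicking through the installer. This is a sketch only: the host names and the exact placement of each master component are hypothetical choices, and the component names follow the usual HDP/Ambari labels.

```python
# Starter services recommended above; everything else can be added later.
STARTER_SERVICES = {"HDFS", "MapReduce2", "YARN", "ZooKeeper", "Hive"}

# Service each component belongs to (only the components used below).
COMPONENT_TO_SERVICE = {
    "NameNode": "HDFS",
    "SecondaryNameNode": "HDFS",
    "DataNode": "HDFS",
    "ResourceManager": "YARN",
    "NodeManager": "YARN",
    "History Server": "MapReduce2",
    "ZooKeeper Server": "ZooKeeper",
    "Hive Metastore": "Hive",
    "HiveServer2": "Hive",
}

# Hypothetical hosts: worker1 also carries the remaining master services
# (acceptable for a lab/sandbox only).
LAYOUT = {
    "master1": ["NameNode", "ResourceManager", "ZooKeeper Server"],
    "worker1": ["DataNode", "NodeManager", "SecondaryNameNode",
                "History Server", "Hive Metastore", "HiveServer2"],
    "worker2": ["DataNode", "NodeManager"],
    "worker3": ["DataNode", "NodeManager"],
}


def services_in(layout: dict[str, list[str]]) -> set[str]:
    """Return the set of services the layout would install."""
    return {COMPONENT_TO_SERVICE[c] for comps in layout.values() for c in comps}


def datanode_hosts(layout: dict[str, list[str]]) -> list[str]:
    """Return the hosts that run a DataNode, sorted by name."""
    return sorted(host for host, comps in layout.items() if "DataNode" in comps)


print(datanode_hosts(LAYOUT))  # ['worker1', 'worker2', 'worker3']
```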

Make sure that you give enough space to logs. Suggested partitions for each VM:

- / (root): generally 20 GB is good
- /usr/hdp: 20 GB
- /var/log: 50 to 100 GB (small setup)
- /hadoop: the rest of the space
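Applied to the 500 GB disk per VM from the question, the fixed sizes above leave the remainder for /hadoop. A minimal sketch of that arithmetic, assuming the upper end (100 GB) for /var/log by default; `partition_plan` is a hypothetical helper, not part of any HDP tool:

```python
def partition_plan(disk_gb: int, log_gb: int = 100) -> dict[str, int]:
    """Fixed sizes from the answer above; /hadoop gets whatever is left."""
    fixed = {"/": 20, "/usr/hdp": 20, "/var/log": log_gb}
    remaining = disk_gb - sum(fixed.values())
    if remaining <= 0:
        raise ValueError(f"{disk_gb} GB is too small for this layout")
    return {**fixed, "/hadoop": remaining}


print(partition_plan(500))  # {'/': 20, '/usr/hdp': 20, '/var/log': 100, '/hadoop': 360}
```

With the lower log size (`partition_plan(500, log_gb=50)`), /hadoop grows to 410 GB.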

Created 02-13-2016 02:33 PM

Thank you for your answer. This is a development and test environment 🙂

Created 02-13-2016 02:38 PM

@Zaher Mahdhi Perfect! You can go through the docs, but you will often have to find workarounds based on your hardware limitations.

This is your starting point for the next step http://docs.hortonworks.com/HDPDocuments/Ambari-2.2.0.0/bk_Installing_HDP_AMB/content/_meet_minimum_...

Good luck!!!! 🙂

Created 02-14-2016 09:19 PM

Do you recommend using Ambari or a manual installation? If I use Ambari, I went through the documentation and didn't find where I can allocate space for logs. Thanks.

Created on 02-14-2016 09:24 PM - edited 08-19-2019 01:19 AM

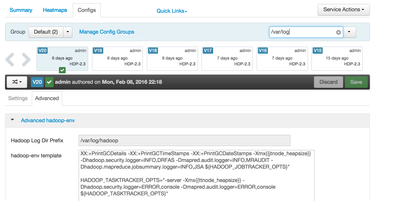

@Zaher Mahdhi The Ambari installation method is the best way to go.

If you want to customize the log location, pay attention to the setting shown in the screenshot while installing the cluster. You cannot change it once the installation is done.

You will need to set this for each component.

Created 02-14-2016 09:28 PM

@Zaher Mahdhi Also, please see this article for future reference

Created 02-13-2016 02:19 PM

Your question has many answers; I suggest you read our cluster planning guide: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.2/bk_cluster-planning-guide/content/ch_hardwar...

Created 02-13-2016 02:53 PM

@Zaher Mahdhi: You can also refer to the article below.