Blocks with corrupted replicas

Labels: Apache Hadoop

Created on 06-03-2018 03:36 PM - edited 08-17-2019 08:27 PM

Hello

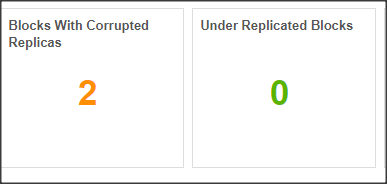

I've noticed in Ambari under HDFS metrics that we have 2 blocks with corrupt replicas.

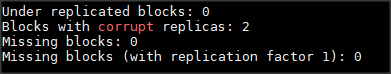

Running "hdfs fsck /" shows no corrupt blocks and reports the filesystem as healthy.

Running "hdfs dfsadmin -report" shows 2 blocks with corrupt replicas (same as the Ambari dashboard).

I've restarted Ambari Metrics and the Ambari agents on all nodes, plus ambari-server, as suggested in one of the threads I came across, but the problem remains.

Ambari is 2.5.2

Any ideas on how to fix this issue?

Thanks

Adi

Created 06-03-2018 06:41 PM

Below is the procedure for finding and removing corrupt blocks or files.

Locate the files that have corrupt blocks:

$ hdfs fsck / | egrep -v '^\.+$'

or

$ hdfs fsck hdfs://ip.or.host:50070/ | egrep -v '^\.+$'

Example output:

/path/to/filename.file_extension: CORRUPT blockpool BP-1016133662-10.29.100.41-1415825958975 block blk_1073904305

/path/to/filename.file_extension: MISSING 1 blocks of total size 15620361 B

Remove the corrupted file (it goes to the trash if trash is enabled):

$ hdfs dfs -rm /path/to/filename.file_extension

Or delete it permanently, bypassing the trash:

$ hdfs dfs -rm -skipTrash /path/to/filename.file_extension

To see which blocks a file has and where they are located:

$ hdfs fsck /path/to/filename.file_extension -locations -blocks -files

$ hdfs fsck hdfs://ip.or.hostname.of.namenode:50070/path/to/filename.file_extension -locations -blocks -files
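
The locate step above can be sketched as a small filter that reduces fsck output to a list of affected paths. This is only an illustration: `corrupt_paths` is a hypothetical helper, not a Hadoop command, and it assumes fsck prints lines of the form `<path>: CORRUPT ...` as in the example output in this post. The exact format may vary between Hadoop versions.

```shell
#!/bin/sh
# corrupt_paths: hypothetical helper, not part of Hadoop.
# Reads fsck output on stdin and prints each path flagged CORRUPT, once.
# Assumption: affected files appear as "<path>: CORRUPT ..." lines.
corrupt_paths() {
    grep ': CORRUPT ' | sed 's/: CORRUPT .*$//' | sort -u
}

# Usage (review the list by hand before deleting anything):
#   hdfs fsck / | corrupt_paths
```

The helper only lists paths. Deciding whether a file can be restored from another source or has to be removed is left to the operator.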

Created 06-04-2018 05:34 AM

Thank you @Geoffrey Shelton Okot

However, fsck shows no corrupt blocks. The problem is with corrupt replicas.

That said, the alert has since disappeared ...

Not sure whether to be glad or suspicious 🙂

Adi

Created 06-02-2023 04:22 PM

The procedure posted above is NOT a fix for "blocks with corrupt replicas". It finds and removes files that are completely corrupt, that is, files with no good replicas left to recover from.

The warning about corrupt replicas appears when a file has at least one corrupt replica but can still be recovered from the remaining replicas.

In this case, hdfs fsck /path ... will not show these files because it considers them healthy.

As far as I know, these files and their corrupt replicas are only reported by the command hdfs dfsadmin -report, and there is no direct command to fix this. The only way I have found is to wait for the Hadoop cluster to heal itself by re-replicating the bad replicas from the good ones.
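
While waiting for the cluster to heal, the counter can be read straight from the report instead of the Ambari dashboard. A minimal sketch: `corrupt_replica_count` is a hypothetical helper, and it assumes the report contains a line beginning with `Blocks with corrupt replicas:` followed by a number. Check the output of your Hadoop version before relying on it.

```shell
#!/bin/sh
# corrupt_replica_count: hypothetical helper, not part of Hadoop.
# Reads "hdfs dfsadmin -report" output on stdin and prints the number from
# the first "Blocks with corrupt replicas:" line (leading whitespace allowed).
corrupt_replica_count() {
    awk -F': *' '/^[[:space:]]*Blocks with corrupt replicas:/ { print $2 + 0; exit }'
}

# Usage:
#   hdfs dfsadmin -report | corrupt_replica_count
```

Running it periodically shows whether the count drops to 0 as the bad replicas are replaced.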