CDP Public Cloud : Data Replication

Created 01-11-2021 10:53 AM

Hello,

I am looking for the best solution to replicate data between CDP Public Cloud instances.

I have found a proposal using NiFi:

or using DistCp.

I am not sure that either solution handles "data synchronization" properly (the NiFi solution, at least, does not seem able to handle file deletes; I am not sure about DistCp).

What is the best way to proceed?

Do you know whether Cloudera Replication Manager will soon support the CDP-to-CDP scenario in the cloud?

Created 01-12-2021 08:20 AM

I have several ideas in mind:

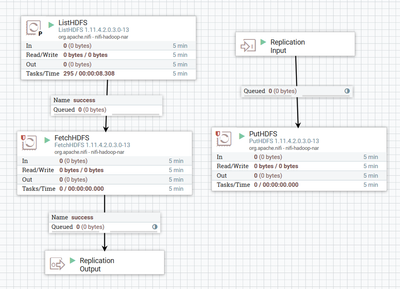

1) NiFi flow at the file system level:

Capture data with ListHDFS + FetchHDFS --> ingest data with PutHDFS

But what about file delete?

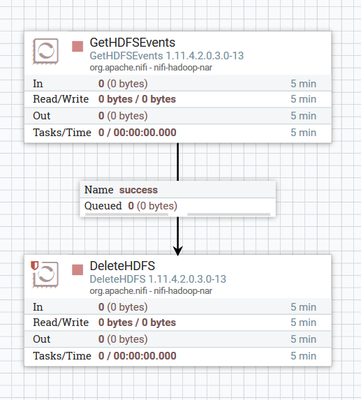

I was thinking of using GetHDFSEvents to capture "unlink" events and replicating them on the target with DeleteHDFS.

But it seems that GetHDFSEvents is not compatible with ADLS Gen2 storage.
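If event capture is not available on the storage layer, one possible fallback is to compare periodic listings of both sides and treat anything present on the target but missing on the source as a delete. The following is a minimal Python sketch of that comparison only. The function name and the sample paths are hypothetical, and how the listings are obtained (for example from a ListHDFS run on each side) and how the result is fed to DeleteHDFS are assumptions that are not shown here.

```python
from typing import Iterable, List


def paths_to_delete(source_paths: Iterable[str], target_paths: Iterable[str]) -> List[str]:
    """Return paths that exist on the target but no longer on the source."""
    source = set(source_paths)
    return sorted(p for p in set(target_paths) if p not in source)


# Hypothetical listings, relative to the replicated root directory.
source_listing = ["sales/2021/01.parquet", "sales/2021/02.parquet"]
target_listing = ["sales/2020/12.parquet", "sales/2021/01.parquet"]

# Files to remove on the target so that it mirrors the source.
print(paths_to_delete(source_listing, target_listing))
```

A diff like this is only as reliable as the source listing: if the source listing is incomplete (for example because of a failed or partial scan), files would be deleted on the target by mistake, so a real flow would probably need a guard against that.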

2) DistCp

Again, this seems to work for new data and updated files, but I don't understand how it can handle deletes (except if we drop the target data before a full copy).
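On the delete question: DistCp has a `-delete` option, accepted only together with `-update` or `-overwrite`, that removes files from the target that no longer exist on the source. Below is a minimal Python sketch that only builds such a command line. The helper name and the storage URIs are hypothetical placeholders, and whether this behaves well between two cloud object stores in a given CDP setup is an assumption that should be tested on non-critical data first.

```python
import shlex
from typing import List


def build_distcp_sync_command(source_uri: str, target_uri: str) -> List[str]:
    """Build a DistCp command that mirrors source_uri onto target_uri."""
    # -update copies only files that are missing or differ on the target.
    # -delete removes target files that are absent from the source;
    # DistCp accepts it only in combination with -update or -overwrite.
    return ["hadoop", "distcp", "-update", "-delete", source_uri, target_uri]


# Hypothetical ADLS Gen2 locations for the two environments.
cmd = build_distcp_sync_command(
    "abfs://data@sourceaccount.dfs.core.windows.net/warehouse",
    "abfs://data@targetaccount.dfs.core.windows.net/warehouse",
)

# Print the command for review; it could be run with
# subprocess.run(cmd, check=True) on a host with a configured Hadoop client.
print(shlex.join(cmd))
```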

3) AzCopy

Only compatible with ADLS (though I imagine a similar tool is available for S3 buckets), using the "azcopy sync" command:

https://docs.microsoft.com/fr-fr/azure/storage/common/storage-ref-azcopy-sync
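For reference, `azcopy sync` takes a `--delete-destination` flag (`true`, `false`, or `prompt`) that controls whether files missing from the source are removed from the destination. Here is a minimal Python sketch that builds such a command. The helper name and the URLs are hypothetical placeholders, and authentication (a SAS token or a prior `azcopy login`) is assumed to be handled separately.

```python
import shlex
from typing import List


def build_azcopy_sync_command(source_url: str, target_url: str) -> List[str]:
    """Build an azcopy sync command that also propagates deletes."""
    # --delete-destination=true removes destination files that no longer
    # exist at the source; the default (false) only adds and updates files.
    return ["azcopy", "sync", source_url, target_url, "--delete-destination=true"]


# Hypothetical container URLs; replace the placeholders before use.
cmd = build_azcopy_sync_command(
    "https://<source-account>.blob.core.windows.net/<container>/<path>",
    "https://<target-account>.blob.core.windows.net/<container>/<path>",
)

# Print the command for review before running it anywhere.
print(shlex.join(cmd))
```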

4) NiFi flow at the Hive level

I am not sure this is very elegant:

Capture data with SelectHive3QL (Avro output)

Ingest data with PutHive3Streaming

But I am not sure how to manage deletes.

Any best practices or better ideas?

Created 01-13-2021 03:36 AM

What do you mean by "But what about file delete?" Do you want to delete the data on the source HDFS as soon as you fetch it in NiFi?

Created 01-19-2021 02:01 AM

Hello @ashinde

I mean: if we delete data on the source CDP for whatever reason (purge, archiving, dataset rebuild), how can we capture those events and replicate the delete action on the target CDP?

It seems that all of my proposals will only be able to add data on the target.

Created 01-19-2021 02:23 AM

@Delio It would be a somewhat complicated flow design, as direct support for delete events is not yet available in the GetHDFSEvents processor.

But you can refer to this solution, which can help you move forward.

If this answer resolves your issue or allows you to move forward, please choose to ACCEPT this solution and close this topic. If you have further dialogue on this topic please comment here.

-Akash

Created on 05-24-2024 12:31 PM - edited 05-24-2024 02:12 PM

NiFi can be a powerful tool for orchestrating data flows relevant to B2B data building. While it excels at transferring data between CDP instances using processors like 'ListHDFS' and 'PutHDFS,' additional configuration might be needed for handling data deletion specific to B2B sources.

For B2B data building, you'll likely be acquiring data from external sources, not HDFS. NiFi's capabilities can still be leveraged, but the specific processors used would depend on the data format and source. However, the challenge of handling data deletion remains. Tools like 'GetHDFSEvents' might not be applicable for B2B data sources.