Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: CDSW - Can't access hive Tables from Scala Wor...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

CDSW - Can't access hive Tables from Scala Workbench

Created on 07-13-2017 03:40 AM - edited 09-16-2022 04:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

While running a Pilot for CDSW & CDH, i'm struggling running queries on hive table.

Scala Workbench

Test code:

import org.apache.spark.sql.Row

import org.apache.spark.sql.hive.HiveContext

import org.apache.spark.sql.Column

val sparkSession = SparkSession.builder.master("local").appName("mapexample").

enableHiveSupport().getOrCreate()

sparkSession.catalog.listTables.show()

val sqlContext = new HiveContext(sc)

sqlContext.sql("describe database default").show

sqlContext.sql("describe formatted default.mytable").show

sc.versionTest return

import org.apache.spark.sql.Row

import org.apache.spark.sql.hive.HiveContext

import org.apache.spark.sql.Column

val sparkSession = SparkSession.builder.master("local").appName("mapexample").

enableHiveSupport().getOrCreate()

sparkSession.catalog.listTables.show()

+----+--------+-----------+---------+-----------+

|name|database|description|tableType|isTemporary|

+----+--------+-----------+---------+-----------+

+----+--------+-----------+---------+-----------+

val sqlContext = new HiveContext(sc)

sqlContext.sql("describe database default").show

+-------------------------+--------------------------+

|database_description_item|database_description_value|

+-------------------------+--------------------------+

| Database Name| default|

| Description| default database|

| Location| /user/hive/warehouse|

+-------------------------+--------------------------+

sqlContext.sql("describe formatted default.mytable").show

Name: org.apache.spark.sql.catalyst.analysis.NoSuchTableException

Message: Table or view 'mytable' not found in database 'default';

StackTrace: at org.apache.spark.sql.catalyst.catalog.SessionCatalog.requireTableExists(SessionCatalog.scala:138)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.getTableMetadata(SessionCatalog.scala:289)

at org.apache.spark.sql.execution.command.DescribeTableCommand.run(tables.scala:437)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:58)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:56)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:74)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:135)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:113)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:87)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:87)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:185)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:592)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:699)

Interesing to note: Access hive though SparkSession returns nothing, while HiveContext does return a description of the default DB that does not match the actual default DB

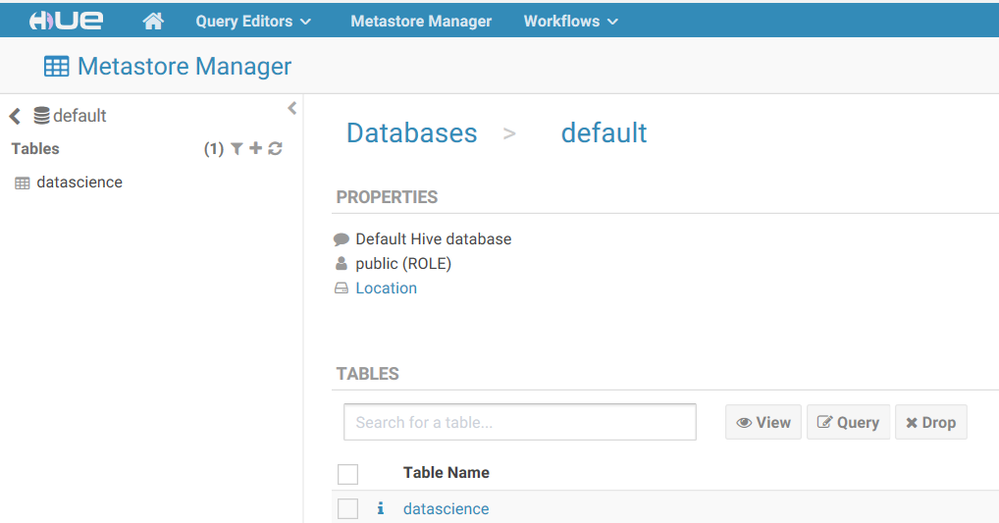

From Hue

default Default Hive database hdfs://<masked>/user/hive/warehouse public ROLE

The CDSW host has the right gateway roles installed, hive-site.xml is present with the spark config files.

Hive Metastore log does not register any access when the workbench is trying to access Hive

No Kerberos involved

I ran out of option to check, hence this post

Thanks

Chris

Created 07-20-2017 07:04 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I fixed it.

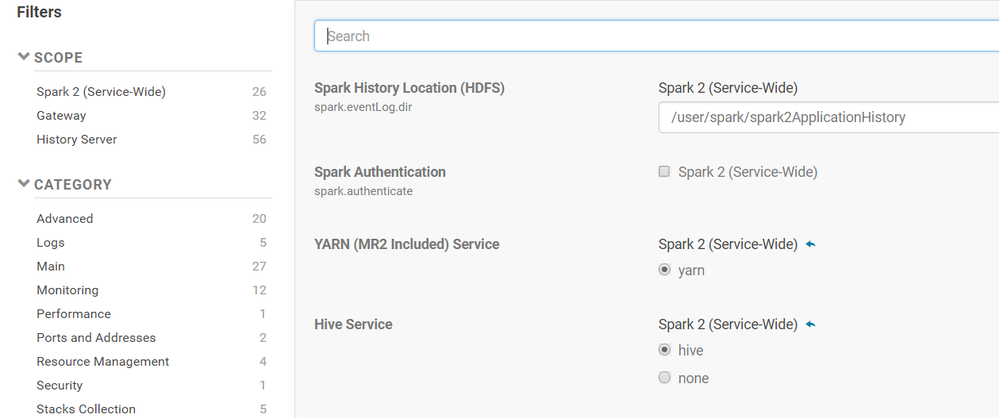

In the Spark2 Configuration Screen (In Cloudera Manager for the CDH cluster), Hiver Service was set to none

I set it to Hive and CDSW is now working as expected.

Thanks!

Created 07-13-2017 04:06 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello Chris,

What are you trying to achieve? Why are you trying to run in local mode? Can you use the expected yarn client mode instead?

From the documentation:

"Unlike PySpark or Sparklyr, you can access a SparkContext assigned to the spark (SparkSession) and sc(SparkContext) objects on console startup, just as when using the Spark shell."

Can you access to your hive tables using the spark SparkSession object?

Regards,

Peter

Created 07-13-2017 04:33 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Peter,

The goal is to run Data Analysis using Spark where part of the data is stored in hive, The idea is to use the whole cluster to distribute the workload.

I missed the fact that I was running in local mode (As said it's a pilot, i'm totally new to Cloudera stack). I assumed that the workbench was by default in yarn mode. I'll dig in the doc again.

Trying to access hive using Sparksession object in my test was returning nothing. I'll try using yarn & report back

thanks!

Regards.

Chris

Created 07-13-2017 04:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Peter,

I updated my test code as follow

import org.apache.spark.sql.hive.HiveContext

spark.catalog.listTables.show()

val sqlContext = new HiveContext(sc)

sqlContext.sql("describe database default").show

sqlContext.sql("describe formatted default.mytable").show

sc.versionthe results for SparkSession:

spark.catalog.listTables.show() +----+--------+-----------+---------+-----------+ |name|database|description|tableType|isTemporary| +----+--------+-----------+---------+-----------+ +----+--------+-----------+---------+-----------+

(There should be one table listed)

The result for HiveContext

sqlContext.sql("describe database default").show

+-------------------------+--------------------------+

|database_description_item|database_description_value|

+-------------------------+--------------------------+

| Database Name| default|

| Description| default database|

| Location| /user/hive/warehouse|

+-------------------------+--------------------------+

sqlContext.sql("describe formatted default.mytable").show

Name: org.apache.spark.sql.catalyst.analysis.NoSuchTableException

Message: Table or view 'mytable' not found in database 'default';

StackTrace: at org.apache.spark.sql.catalyst.catalog.SessionCatalog.requireTableExists(SessionCatalog.scala:138)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.getTableMetadata(SessionCatalog.scala:289)

at org.apache.spark.sql.execution.command.DescribeTableCommand.run(tables.scala:437)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:58)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:56)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:74)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:135)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:113)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:87)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:87)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:185)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:592)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:699)while I do get an result for Describe Table, the description returned does not match the setting in Hive.

Created 07-13-2017 05:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Chris,

You can use the SparkSession object to query your Hive tables. You don't need to create a HiveContext.

spark.catalog.listTables.show()

spark.sql("describe database default").show

spark.sql("describe formatted default.mytable").show

I don't think that this change would fix your issue.

This doesn't seem like a problem with your CDSW installation. Could you execute the same commands from a Session terminal or directly on the CDSW host machine using the spark2-shell cli tool to verify this?

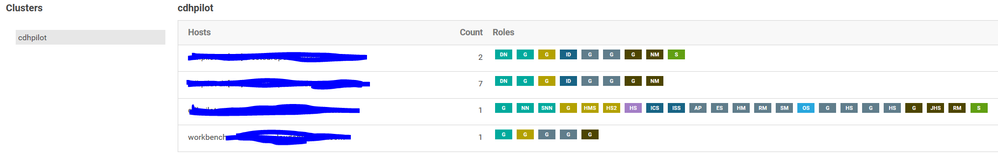

Could you double check that a Hive gateway is deployed to your CDSW host?

Can you show that the table exists from eg. beeline?

Regards,

Peter

Created 07-13-2017 07:14 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Peter,

See the roles installed. (In case of : Hive GW is the second listed role)

From Spark2-shell (Workbench host)

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_67) Type in expressions to have them evaluated. Type :help for more information. scala> spark.catalog.listTables.show() +----+--------+-----------+---------+-----------+ |name|database|description|tableType|isTemporary| +----+--------+-----------+---------+-----------+ +----+--------+-----------+---------+-----------+

From HUE running in the CDH cluster (table datascience): the table exist, and can be queried from HUE, the returned data is correct

Spark2-shell from a CDH node

scala> spark.catalog.listTables.show()

// Detected repl transcript. Paste more, or ctrl-D to finish.

+----+--------+-----------+---------+-----------+

|name|database|description|tableType|isTemporary|

+----+--------+-----------+---------+-----------+

+----+--------+-----------+---------+-----------+

scala> spark.sql("describe database default").show

+-------------------------+--------------------------+

|database_description_item|database_description_value|

+-------------------------+--------------------------+

| Database Name| default|

| Description| default database|

| Location| file:/log/clouder...|

+-------------------------+--------------------------+

scala> spark.sql("describe formatted default.datascience").show

org.apache.spark.sql.catalyst.analysis.NoSuchTableException: Table or view 'datascience' not found in database 'default';

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.requireTableExists(SessionCatalog.scala:138)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.getTableMetadata(SessionCatalog.scala:289)

at org.apache.spark.sql.execution.command.DescribeTableCommand.run(tables.scala:437)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult$lzycompute(commands.scala:58)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.sideEffectResult(commands.scala:56)

at org.apache.spark.sql.execution.command.ExecutedCommandExec.doExecute(commands.scala:74)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$execute$1.apply(SparkPlan.scala:114)

at org.apache.spark.sql.execution.SparkPlan$$anonfun$executeQuery$1.apply(SparkPlan.scala:135)

at org.apache.spark.rdd.RDDOperationScope$.withScope(RDDOperationScope.scala:151)

at org.apache.spark.sql.execution.SparkPlan.executeQuery(SparkPlan.scala:132)

at org.apache.spark.sql.execution.SparkPlan.execute(SparkPlan.scala:113)

at org.apache.spark.sql.execution.QueryExecution.toRdd$lzycompute(QueryExecution.scala:87)

at org.apache.spark.sql.execution.QueryExecution.toRdd(QueryExecution.scala:87)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:185)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:64)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:592)

... 48 elided

Thanks!

Created 07-20-2017 07:04 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I fixed it.

In the Spark2 Configuration Screen (In Cloudera Manager for the CDH cluster), Hiver Service was set to none

I set it to Hive and CDSW is now working as expected.

Thanks!