Support Questions

Can we read and compress folders using NiFi?

Labels: Apache NiFi

Created 12-06-2016 09:52 PM

Hi, we have a use case where we need to save the source files as they are.

Each day we will have a folder with 1,000 files. We will process those 1,000 files and send the 100 files we need to HDFS.

But we also want to compress and save the whole folder for future reference. (We would like to keep the source as it is at the folder level, not at the file level.)

Is there any way I can do this using NiFi?

Regards,

Sai

Created on 12-07-2016 12:12 AM - edited 08-19-2019 01:19 AM

Hello @Saikrishna Tarapareddy

There's no processor that compresses a folder, as far as I'm aware. But you can do it with the ExecuteProcess processor:

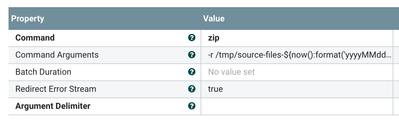

This is an example ExecuteProcess configuration that uses the zip command.

Command Arguments:

-r /tmp/source-files-${now():format('yyyyMMdd')}.zip /tmp/source-files/

NiFi Expression Language can be used in Command Arguments; the expression above sets the zip filename using the current date.

In your use case, I'd run this single processor with the 'Cron Driven' Scheduling Strategy, alongside the main flow that processes the files and sends them to HDFS.
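For anyone who wants to try the command outside NiFi first, here is a rough shell sketch of what that ExecuteProcess configuration ends up running. It assumes `zip` and `date` are available on the NiFi host. `archive_name` and `zip_folder` are hypothetical helper names used only for illustration, and `date +%Y%m%d` stands in for `${now():format('yyyyMMdd')}`.

```shell
#!/bin/sh
# Build the dated archive name, e.g. /tmp/source-files-20161207.zip.
# $1 = path prefix, $2 = optional date stamp (defaults to today, yyyyMMdd).
archive_name() {
    stamp="${2:-$(date +%Y%m%d)}"
    printf '%s-%s.zip\n' "$1" "$stamp"
}

# Recursively zip one folder into a single dated archive next to it.
# $1 = folder to archive (a trailing slash is tolerated).
zip_folder() {
    zip -r "$(archive_name "${1%/}")" "$1"
}

# Example call (not run automatically):
#   zip_folder /tmp/source-files/
```

With 'Cron Driven' scheduling, NiFi expects a Quartz-style cron expression with a leading seconds field, so something like `0 0 1 * * ?` would run the processor once a day at 01:00. The time itself is only an example.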

Hope this helps.

Thanks,

Koji

Created 12-07-2016 04:21 PM

Thank you, I will try that. So with the above example, it zips all the files under source-files into one zip file?

Also, is there any way I can do this at the subfolder level?

In my case, under a folder "January" I will have 31 subfolders, 01012016 to 01312016 (a folder for each day, with 8000 files in each). If I point the above command at "January", it will zip all 31 subfolders into one archive, which may come to nearly 80 GB and may be difficult for me to transfer to HDFS through site-to-site.

I was looking for one zip per subfolder, so in this case I would end up with 31 zip files.

Thanks again,

Sai

Created on 12-08-2016 02:18 AM - edited 08-19-2019 01:19 AM

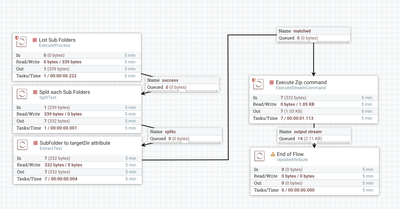

In that case, I'd use another command to list the sub folders first, then pass each sub folder to a zip command. NiFi flow looks like below.

List Sub Folders (ExecuteProcess): I used the find command here.

find source-files -type d -depth 1
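One thing to watch: `-depth 1` with a number is the BSD/macOS form of find. GNU find (most Linux hosts) treats `-depth` as an option without an argument, so there the usual way to get only the immediate sub folders is `-mindepth 1 -maxdepth 1`. A small sketch, where `list_subfolders` is a hypothetical helper name and the `sort` is only there to make the order predictable:

```shell
#!/bin/sh
# Print the immediate sub folders of a directory, one path per line.
# Files and deeper nested folders are not listed.
# $1 = parent folder, e.g. source-files
list_subfolders() {
    find "$1" -mindepth 1 -maxdepth 1 -type d | sort
}

# Example call (not run automatically):
#   list_subfolders source-files
```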

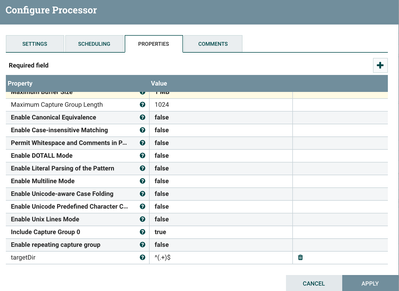

The command produces a single flow file listing the sub folders, one per line. So split that output into one flow file per line, then use ExtractText to extract the sub folder path into an attribute 'targetDir'.

To do that, add a dynamic property to ExtractText by clicking the plus sign, and name the property after the attribute that should receive the extracted content. The Value is a regular expression that extracts the desired text from the content.

I used ExecuteStreamCommand this time, because it accepts incoming flow files.

- Command Path: zip

- Command Arguments: -r;${targetDir}-${now():format('yyyyMMdd')}.zip;${targetDir}

- Ignore STDIN: true

Then it will zip each sub folder into its own file.
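If it helps to see the whole flow in one place, the same steps can be sketched as a plain shell function: list the sub folders, take them one line at a time, and zip each one with the same arguments as the ExecuteStreamCommand above. This is only an approximation of what the flow does, not something NiFi generates. `zip_each_subfolder` and the `ZIP_CMD` override are hypothetical names used only in this sketch, and it assumes a find that supports `-mindepth 1 -maxdepth 1` for listing the folders.

```shell
#!/bin/sh
# Zip every immediate sub folder of a parent folder into its own dated archive.
# $1 = parent folder, $2 = optional date stamp (defaults to today, yyyyMMdd).
# ZIP_CMD (hypothetical override) replaces the zip binary, e.g. for a dry run.
zip_each_subfolder() {
    parent="$1"
    stamp="${2:-$(date +%Y%m%d)}"
    zip_cmd="${ZIP_CMD:-zip}"
    # One sub folder per line, like the split output of the find command.
    find "$parent" -mindepth 1 -maxdepth 1 -type d | sort |
    while IFS= read -r targetDir; do
        # Same arguments as: -r;${targetDir}-<date>.zip;${targetDir}
        # stdin is detached so the command cannot consume the folder list.
        "$zip_cmd" -r "${targetDir}-${stamp}.zip" "$targetDir" < /dev/null
    done
}

# Example calls (not run automatically):
#   zip_each_subfolder January
#   ZIP_CMD=echo zip_each_subfolder January   # dry run, prints the arguments
```

Setting `ZIP_CMD=echo` gives a dry run that only prints the zip arguments, which can be handy before archiving 31 folders of 8000 files each. Note that if the find output is a relative path, as in the find example above, the zip command has to run from the same working directory.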

Thanks,

Koji