Hello again friends -

I am working through the tutorial "A Lap Around Apache Spark" and running into an issue. I am executing the following command:

./bin/spark-shell --master yarn-client --driver-memory 512m --executor-memory 512m

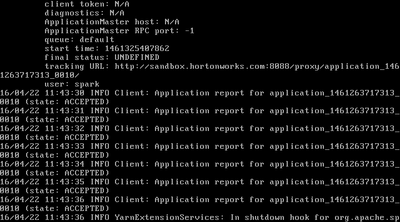

It seems to start OK, but apparently it does not, as it gets stuck and repeats the following message every second:

It keeps repeating the same message until I hit CTRL+C.

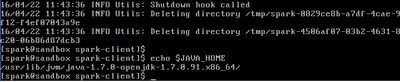

I am wondering about the $JAVA_HOME variable. I have tried remedying the above condition with different variations, none of which seem to have any effect. The current value of $JAVA_HOME is as follows:

Any thoughts? I hate the thought of giving up on this module until I understand what is creating this error.

Thanking all of you in advance - your responses to my past inquiries have been spectacular, and I am very grateful.

Thanks,

Mike