Cloudera Community: Support Questions

Cannot modify hive.exec.max.dynamic.partitions.pernode at runtime

Labels: Apache Hive

Created 12-28-2016 03:23 PM

I've seen several examples online of people setting this property in Hive at runtime, but I am not able to do so through beeline. I've even added this property to the whitelist in Ambari, but I'm still unable to set it:

hive.security.authorization.sqlstd.confwhitelist=hive.exec.max.dynamic.partitions,hive.exec.max.dynamic.partitions.pernode

But when I try this in beeline:

0: jdbc:hive2://whqlhdpm004:10000/hive> set hive.exec.max.dynamic.partitions.pernode=1000;

I get the following error:

Error: Error while processing statement: Cannot modify hive.exec.max.dynamic.partitions.pernode at runtime. It is not in list of params that are allowed to be modified at runtime (state=42000,code=1)

Created on 12-28-2016 03:55 PM - edited 08-18-2019 03:26 AM

Created 12-28-2016 03:56 PM

Thanks for the response. I should have mentioned that we are on HDP 2.4.2.

Created 12-28-2016 04:51 PM

@Barret Miller Did you restart the Hive services after making the changes?

Created 12-28-2016 04:54 PM

Yes, I've restarted services from Ambari.

Created 12-28-2016 07:51 PM

Can you provide the output of "set hive.security.authorization.sqlstd.confwhitelist"? You should also make sure that the property is not present in "hive.conf.restricted.list", as that list takes precedence over the whitelist.
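
The precedence described in this reply can be modelled with a small Python sketch. It is a toy model, not Hive's actual code: it assumes that hive.conf.restricted.list is a comma-separated list of exact property names, that the whitelist is a single regular expression that must match the whole property name, and the function name can_set_at_runtime is hypothetical.

```python
import re


def can_set_at_runtime(param: str, whitelist_regex: str, restricted: str) -> bool:
    """Hypothetical model: the restricted list is checked first, then the whitelist."""
    # Assumption: hive.conf.restricted.list holds exact names separated by commas.
    restricted_names = {name.strip() for name in restricted.split(",") if name.strip()}
    if param in restricted_names:
        # A restricted parameter is rejected even if the whitelist would allow it.
        return False
    # Assumption: the whitelist is one regex that must match the entire name.
    return re.fullmatch(whitelist_regex, param) is not None


whitelist = r"hive\.exec\.max\.dynamic\.partitions|hive\.exec\.max\.dynamic\.partitions\.pernode"
param = "hive.exec.max.dynamic.partitions.pernode"

print(can_set_at_runtime(param, whitelist, ""))     # True: whitelisted, not restricted
print(can_set_at_runtime(param, whitelist, param))  # False: the restricted list wins
```

Under these assumptions, a property that appears in both places can never be set at runtime, which is why it is worth checking the restricted list before debugging the whitelist itself.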

Created 01-03-2017 10:28 PM

I figured out the issue. The problem was the format of hive.security.authorization.sqlstd.confwhitelist in Ambari. Ambari says it's just a comma-delimited list, but if you inspect the value in beeline, e.g.:

set hive.security.authorization.sqlstd.confwhitelist;

you can see that it's actually pipe-delimited, with all of the periods escaped with a backslash. If I enter the value in exactly that format, e.g.:

hive\.auto\..*|hive\.cbo\..*|hive\.convert\..*|hive\.exec\.dynamic\.partition.*|hive\.exec\..*\.dynamic\.partitions\..*|hive\.exec\.compress\..*|hive\.exec\.infer\..*|hive\.exec\.mode.local\..*|hive\.exec\.orc\..*|hive\.exec\.parallel.*|hive\.explain\..*|hive\.fetch.task\..*|hive\.groupby\..*|hive\.hbase\..*|hive\.index\..*|hive\.index\..*|hive\.intermediate\..*|hive\.join\..*|hive\.limit\..*|hive\.log\..*|hive\.mapjoin\..*|hive\.merge\..*|hive\.optimize\..*|hive\.orc\..*|hive\.outerjoin\..*|hive\.parquet\..*|hive\.ppd\..*|hive\.prewarm\..*|hive\.server2\.proxy\.user|hive\.skewjoin\..*|hive\.smbjoin\..*|hive\.stats\..*|hive\.tez\..*|hive\.vectorized\..*|mapred\.map\..*|mapred\.reduce\..*|mapred\.output\.compression\.codec|mapred\.job\.queuename|mapred\.output\.compression\.type|mapred\.min\.split\.size|mapreduce\.job\.reduce\.slowstart\.completedmaps|mapreduce\.job\.queuename|mapreduce\.job\.tags|mapreduce\.input\.fileinputformat\.split\.minsize|mapreduce\.map\..*|mapreduce\.reduce\..*|mapreduce\.output\.fileoutputformat\.compress\.codec|mapreduce\.output\.fileoutputformat\.compress\.type|tez\.am\..*|tez\.task\..*|tez\.runtime\..*|tez.queue.name|hive\.exec\.reducers\.bytes\.per\.reducer|hive\.client\.stats\.counters|hive\.exec\.default\.partition\.name|hive\.exec\.drop\.ignorenonexistent|hive\.counters\.group\.name|hive\.default\.fileformat\.managed|hive\.enforce\.bucketing|hive\.enforce\.bucketmapjoin|hive\.enforce\.sorting|hive\.enforce\.sortmergebucketmapjoin|hive\.cache\.expr\.evaluation|hive\.hashtable\.loadfactor|hive\.hashtable\.initialCapacity|hive\.ignore\.mapjoin\.hint|hive\.limit\.row\.max\.size|hive\.mapred\.mode|hive\.map\.aggr|hive\.compute\.query\.using\.stats|hive\.exec\.rowoffset|hive\.variable\.substitute|hive\.variable\.substitute\.depth|hive\.autogen\.columnalias\.prefix\.includefuncname|hive\.autogen\.columnalias\.prefix\.label|hive\.exec\.check\.crossproducts|hive\.compat|hive\.exec\.concatenate\.check\.index|hive\.display\.partition\.cols\.separately|hive\.error\.on\.empty\.partition|hive\.execution\.engine|hive\.exim\.uri\.scheme\.whitelist|hive\.file\.max\.footer|hive\.mapred\.supports\.subdirectories|hive\.insert\.into\.multilevel\.dirs|hive\.localize\.resource\.num\.wait\.attempts|hive\.multi\.insert\.move\.tasks\.share\.dependencies|hive\.support\.quoted\.identifiers|hive\.resultset\.use\.unique\.column\.names|hive\.analyze\.stmt\.collect\.partlevel\.stats|hive\.server2\.logging\.operation\.level|hive\.support\.sql11\.reserved\.keywords|hive\.exec\.job\.debug\.capture\.stacktraces|hive\.exec\.job\.debug\.timeout|hive\.exec\.max\.created\.files|hive\.exec\.reducers\.max|hive\.reorder\.nway\.joins|hive\.output\.file\.extension|hive\.exec\.show\.job\.failure\.debug\.info|hive\.exec\.tasklog\.debug\.timeout|parquet\.compression|hive\.exec\.max\.dynamic\.partitions|hive\.exec\.max\.dynamic\.partitions\.pernode

Then I can set these values at runtime:

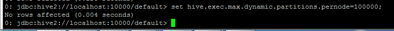

0: jdbc:hive2://whqlhdpm004:10000/lab> set hive.exec.max.dynamic.partitions.pernode;
+------------------------------------------------+--+
|                      set                       |
+------------------------------------------------+--+
| hive.exec.max.dynamic.partitions.pernode=5000  |
+------------------------------------------------+--+
1 row selected (0.117 seconds)
0: jdbc:hive2://whqlhdpm004:10000/lab> set hive.exec.max.dynamic.partitions.pernode=10000;
No rows affected (0.003 seconds)
0: jdbc:hive2://whqlhdpm004:10000/lab> set hive.exec.max.dynamic.partitions.pernode;
+-------------------------------------------------+--+
|                       set                       |
+-------------------------------------------------+--+
| hive.exec.max.dynamic.partitions.pernode=10000  |
+-------------------------------------------------+--+
1 row selected (0.005 seconds)
0: jdbc:hive2://whqlhdpm004:10000/lab>
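
Why the comma-delimited value fails can be illustrated with a short Python sketch. It assumes (not confirmed in this thread) that Hive treats the whole whitelist value as one regular expression that must match the entire property name, roughly like Java's Matcher.matches(); Python's re.fullmatch behaves the same way for these simple patterns. The helper names to_whitelist_regex and is_whitelisted are hypothetical.

```python
import re


def to_whitelist_regex(names):
    """Hypothetical helper: join exact property names into one pipe-delimited regex."""
    # re.escape turns each "." into "\." so it matches only a literal period.
    return "|".join(re.escape(name) for name in names)


def is_whitelisted(whitelist_regex: str, param: str) -> bool:
    # Assumption: the entire property name must match the whitelist regex.
    return re.fullmatch(whitelist_regex, param) is not None


names = ["hive.exec.max.dynamic.partitions", "hive.exec.max.dynamic.partitions.pernode"]
comma_value = ",".join(names)  # the format first entered in Ambari
pipe_value = to_whitelist_regex(names)

print(pipe_value)
print(is_whitelisted(comma_value, names[1]))  # False: the comma is matched literally
print(is_whitelisted(pipe_value, names[1]))   # True
```

Read as a regex, the comma-separated value describes a single long name containing a literal comma, so no real property matches it. To keep the defaults, as in the reply above, the escaped names are appended to the existing value with a "|" rather than replacing it.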

Created 01-03-2017 10:40 PM

Now my question is whether this is a bug, because based on the default value it looks like I should already be able to modify this parameter. If you look, this is the value in the 5th position:

hive\.exec\..*\.dynamic\.partitions\..*

So it looks like this would allow hive.exec.*.dynamic.partitions.*, which would include hive.exec.max.dynamic.partitions.pernode.
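
The reading of the 5th default entry can be checked with a few lines of Python. This assumes, as above, that the entry must match the whole property name (Java's Matcher.matches() and Python's re.fullmatch agree for a pattern this simple); it says nothing about why the setting was still rejected on the cluster.

```python
import re

# 5th entry of the default whitelist, copied from the post above
default_entry = r"hive\.exec\..*\.dynamic\.partitions\..*"

candidates = [
    "hive.exec.max.dynamic.partitions.pernode",
    "hive.exec.max.dynamic.partitions",
]

# Assumption: a full-string match is required, not a prefix or substring match.
results = {name: re.fullmatch(default_entry, name) is not None for name in candidates}

for name, allowed in results.items():
    print(name, allowed)
```

Under that assumption the entry does cover hive.exec.max.dynamic.partitions.pernode, supporting the reading above, but not hive.exec.max.dynamic.partitions itself, because the pattern requires a period after "partitions".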

Created 11-02-2017 07:10 PM

Yes, I had the same issue with comma-separated values. Pipe separation fixed it.

Thanks