Clarifications on state management within NiFi processors

- Labels: Apache NiFi

Created 05-18-2017 02:44 AM

I haven't gone through the code for the DistributedCache yet. After a first try today, a few questions came up.

1) Do we have to use a different DistributedCache server/client for each processor that has state management? For example, can we use the same DistributedCache server/client for both ListSFTP and ListHDFS within a process group?

2) If we specify a value for the "persistence directory" in the DistributedCacheServer, the assumption is that the cache is held both in memory and on disk. Is that understanding correct?

3) The expectation of using the DistributedCache service is that the state is maintained across the cluster, which means that even if we lose the node that was primarily running the processor, we still do not duplicate the listing. But when I try to understand the thread at http://mail-archives.apache.org/mod_mbox/nifi-users/201611.mbox/%3CCA%2BWJ-%2B%2Bqdkg-qRzP-7gUAX%2BA... specifically the line "it does not implement any coordination logic that work nicely with NiFi cluster", I am not sure I follow the issue. Please clarify.

Created 05-18-2017 01:35 PM

Question 1: No, you do not need a separate controller service for each processor; they can share the same DistributedCache service.

Question 2: Yes, that understanding is correct. If no directory is specified, the cache is held in memory only.

Question 3: The way the DistributedMapCacheServer works is that each node in the cluster runs its own server. But data is written via the DistributedMapCacheClient controller service, and in its configuration you specify which one of the DistributedMapCacheServers to write the data to. So only one of the servers will have the data. If that node is lost, the server will not be available until the node is back in the cluster.

When you say "primarily running the processor", I assume you mean the processor is configured to run on the primary node only. If you lose the primary node, another node is elected primary and the processor starts running again there. There might be an issue if the lost node also happens to be the node that the DistributedMapCacheClient controller service is configured to use; in that case the state would be unavailable.

FYI, there are currently only three processors that require a DistributedMapCache controller service. Any other processors that retain state use ZooKeeper, so their state is maintained across the cluster automatically.
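To make the Question 3 point concrete, here is a small toy model in Python. It is not NiFi code: `ToyCacheServer`, `ToyCacheClient`, the node names and the key are all made up for illustration. It only mimics the topology described above, where every node runs a server but every client is configured to talk to one chosen server.

```python
class ToyCacheServer:
    """Hypothetical stand-in for one node's DistributedMapCacheServer."""

    def __init__(self, host):
        self.host = host
        self.up = True
        self.data = {}


class ToyCacheClient:
    """Hypothetical stand-in for a DistributedMapCacheClient bound to ONE server host."""

    def __init__(self, servers, server_host):
        self.server = servers[server_host]

    def _check(self):
        if not self.server.up:
            raise ConnectionError(f"cache server on {self.server.host} is unreachable")

    def put(self, key, value):
        self._check()
        self.server.data[key] = value

    def get(self, key):
        self._check()
        return self.server.data.get(key)


def demo():
    nodes = ["node1", "node2", "node3", "node4"]
    # Every node runs its own server...
    servers = {n: ToyCacheServer(n) for n in nodes}
    # ...but every node's client is configured with the same server host (node2 here).
    clients = {n: ToyCacheClient(servers, "node2") for n in nodes}

    clients["node1"].put("seen:file-a", "1")
    shared = clients["node3"].get("seen:file-a")      # other nodes see the entry
    holders = [n for n in nodes if servers[n].data]   # only node2 actually holds data

    servers["node2"].up = False                       # lose the node hosting the cache
    try:
        clients["node1"].get("seen:file-a")
        reachable = True
    except ConnectionError:
        reachable = False
    return shared, holders, reachable
```

Calling `demo()` shows the entry being visible from another node while only `node2` stores it, and then every client failing once `node2` is down. That single point of failure is the behavior discussed above.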

Created 05-18-2017 09:20 PM

@Wynner Thanks very much for the response. A follow-up on Question 3: say I have 4 nodes in the cluster, Node 1 is the primary node, and Node 2 is the one the DistributedMapCacheClient controller service is configured to use. If Node 2 goes down, then the state information is lost and the next scheduled List will return everything that has already been processed along with any new files. Is that understanding right? If yes, this doesn't really seem to be distributed behavior (which is probably what the other thread is talking about).

Created 05-18-2017 09:42 PM

Basically correct, which is why NiFi now uses ZooKeeper for state information.

I wouldn't use the DistributedMapCache unless I absolutely had to. Which processors are you using?
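For anyone wondering why lost state leads to a full re-listing, here is a rough Python sketch. It assumes a simplified model in which a List-style processor remembers only the newest modification timestamp it has seen; the real ListSFTP tracks more than that, and `list_new`, the `last_ts` key and the file names are invented for the example.

```python
def list_new(files, state):
    """Return names of files newer than the stored timestamp and update the state.

    files: dict mapping file name -> modification timestamp (any comparable number)
    state: dict standing in for the processor's stored state (hypothetical key 'last_ts')
    """
    last = state.get("last_ts", float("-inf"))
    new = sorted(name for name, ts in files.items() if ts > last)
    if files:
        state["last_ts"] = max(last, max(files.values()))
    return new


def demo():
    files = {"a.csv": 100, "b.csv": 200}
    state = {}
    first = list_new(files, state)        # everything is new on the first run
    second = list_new(files, state)       # nothing new, so nothing is listed
    files["c.csv"] = 300
    third = list_new(files, state)        # only the new file is listed
    state.clear()                         # state lost (or cleared on purpose)
    after_loss = list_new(files, state)   # everything is listed again
    return first, second, third, after_loss
```

In this model, losing the state and clearing it deliberately have the same effect: the next run lists every file again, including ones that were already processed.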

Created 05-18-2017 09:45 PM

@Wynner I am using ListSFTP. But the behavior should not change based on the processor, right?

Created 05-18-2017 10:31 PM

Correct, but the ListSFTP processor does not require a DistributedCache service to maintain state, so don't create one. It will use ZooKeeper by default.

Created 05-19-2017 04:42 PM

@Wynner I am unable to reply directly to your last response. Thanks again for the answers. Is there a list of processors that use ZooKeeper by default for state management (or do all of them use it)? I assume ListHDFS works the same way.

In what scenario exactly would one use a DistributedCache service? I tested ListSFTP by bringing down the primary node and restarting all nodes, and the listing seems to work as expected without any DistributedCache service configuration.

Created 05-19-2017 04:57 PM

@Wynner Also, how do we clear the state if we want to re-list the files (maybe because there was an issue with the processing of the data)?

Created on 05-19-2017 06:14 PM - edited 08-18-2019 02:39 AM

There are only three processors which require a Distributed map Cache server: DetectDuplicate, FetchDistributedMapCache and PutDistributedMapCache. The rest will use zookeeper were applicable.

To clear the state of a processor, just do the following steps

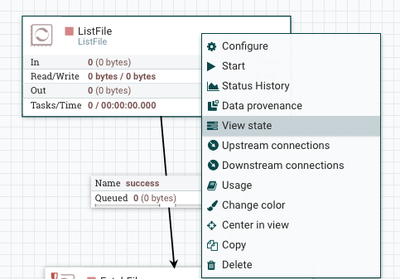

Right click on a processor, select View state from the menu

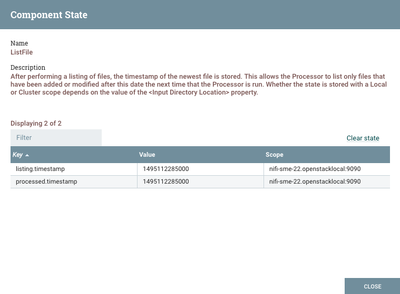

Then just click Clear state and the files will be listed again.
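If you would rather script this than click through the UI, NiFi's REST API also exposes a clear-state request for processors. The Python snippet below is only a sketch: the helper name, base URL and processor id are made up, and it only builds the request without sending it. As far as I know the endpoint is `POST /nifi-api/processors/{id}/state/clear-requests` and the processor has to be stopped first, but please check the REST API docs for your NiFi version. A secured instance will also need authentication, which is not shown here.

```python
import urllib.request


def build_clear_state_request(base_url, processor_id):
    """Build (but do not send) a POST request asking NiFi to clear a processor's state.

    base_url and processor_id are placeholders supplied by the caller; the endpoint
    path is an assumption to verify against the REST API docs for your NiFi version.
    """
    url = f"{base_url.rstrip('/')}/nifi-api/processors/{processor_id}/state/clear-requests"
    return urllib.request.Request(url, data=b"", method="POST")


# Hypothetical values, for illustration only.
request = build_clear_state_request("http://localhost:8080", "your-processor-id")
# To actually send it against a running, unsecured NiFi instance:
#   with urllib.request.urlopen(request) as response:
#       print(response.status)
```

The processor id is the one shown in the processor's configuration dialog in the UI.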