Support Questions


Cloudera Manager difference in physical memory vs linux system memory usage

- Labels: Apache YARN, Cloudera Manager

Created on 04-16-2018 02:08 AM - edited 09-16-2022 06:06 AM


Hi,

I have a cluster with 3 masters and 5 data nodes. Jupyter is installed on one of the masters and runs in client mode via the following command:

`pyspark2 --master yarn --deploy-mode client --name jupyter-notebook --driver-memory 4G --executor-memory 4G --executor-cores 3 --num-executors 3`
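For reference, here is a rough sketch of where the memory requested by this command lands, assuming Spark 2.x defaults on YARN. In client mode the driver runs inside the pyspark2 process on the host where it was launched (here the Jupyter master), outside YARN, while each executor and the YARN application master get a container sized as heap plus an overhead of max(384 MB, 10% of the heap). The 512 MB application master size is the assumed default of `spark.yarn.am.memory`, the function names are hypothetical, and YARN's rounding of container sizes up to its allocation increment is not modeled.

```python
# Rough memory footprint of the pyspark2 command above, ASSUMING Spark 2.x
# defaults on YARN. YARN's rounding of container sizes is not modeled.

OVERHEAD_FACTOR = 0.10  # assumed default overhead fraction (Spark 2.x)
OVERHEAD_MIN_MB = 384   # assumed minimum overhead per container (Spark 2.x)


def container_mb(heap_mb: int) -> int:
    """Heap plus off-heap overhead requested for one YARN container."""
    overhead = max(int(heap_mb * OVERHEAD_FACTOR), OVERHEAD_MIN_MB)
    return heap_mb + overhead


def client_mode_footprint(driver_mb: int, executor_mb: int,
                          num_executors: int, am_mb: int = 512) -> dict:
    """Split the requested memory between the client host and YARN containers."""
    return {
        # Client mode: the driver JVM runs on the host where pyspark2 was
        # launched, outside any YARN container (heap only, JVM overhead ignored).
        "driver_heap_on_client_host_mb": driver_mb,
        # Executors run in YARN containers on NodeManager hosts.
        "yarn_executors_mb": num_executors * container_mb(executor_mb),
        # 512 MB is the assumed default of spark.yarn.am.memory in client mode.
        "yarn_am_mb": container_mb(am_mb),
    }


if __name__ == "__main__":
    # --driver-memory 4G --executor-memory 4G --num-executors 3
    print(client_mode_footprint(driver_mb=4096, executor_mb=4096, num_executors=3))
```

Under these assumptions, only about 4 GB of driver heap (plus JVM overhead not modeled here) lives on the Jupyter host itself; the executors request roughly 13.2 GB in total, spread over the NodeManager hosts.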

Everything works, but the physical memory usage shown in Cloudera Manager differs from the memory usage reported by the system:

Total physical memory : 62G

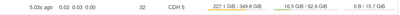

Memory usage as shown by CM: 16.5 G

Memory usage on the system: 56G


CM only counts the memory used by Hadoop components, not the YARN application running in client mode.

Is there any way to make the memory used by YARN in client mode visible in CM?

Thank you

Created 04-17-2018 01:19 AM


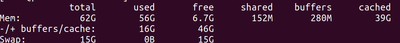

This is a common misreading of the `free` output.

The first line (starting with "Mem") shows that you have 62G of memory, of which 56G are used. However, most of that memory is not used by processes: at the end of the line you will see 39G cached. In short, Linux uses part of the otherwise free RAM to cache data from frequently used files, in order to save some trips to the hard disk. When an application requests memory and none is free, Linux automatically drops these caches. You cannot turn this feature off. The only thing you can do is drop the currently cached data, but Linux will start caching again right away.

In any case, when the output of `free` looks like the one you provided, always refer to the second line:

`-/+ buffers/cache: 16G 49G`

This line shows the real status: 16G used and 49G free.
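The arithmetic behind that second line can be reproduced from `/proc/meminfo`. This is a minimal stdlib sketch, assuming the older procps `free` convention in which the "-/+ buffers/cache" line subtracts `Buffers` and `Cached` from the used figure and adds them to the free one; newer `free` versions drop that line and show "buff/cache" and "available" columns instead, computed somewhat differently. The function names are hypothetical.

```python
import os


def parse_meminfo(text: str) -> dict:
    """Map each /proc/meminfo field to its value (in kB for memory fields)."""
    fields = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])
    return fields


def free_lines(fields: dict) -> dict:
    """Reproduce the "Mem:" and "-/+ buffers/cache:" figures of older `free`."""
    total = fields["MemTotal"]
    free = fields["MemFree"]
    cache = fields.get("Buffers", 0) + fields.get("Cached", 0)
    used = total - free  # "Mem:" used, which counts the page cache as used
    return {
        "mem_used_kb": used,
        "apps_used_kb": used - cache,  # "-/+ buffers/cache:" used
        "apps_free_kb": free + cache,  # "-/+ buffers/cache:" free
    }


if __name__ == "__main__":
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            for name, kb in free_lines(parse_meminfo(f.read())).items():
                print(f"{name}: {kb / 1024 / 1024:.1f}G")
```

On a host like the one above, `mem_used_kb` corresponds to the 56G on the "Mem" line, while `apps_used_kb` corresponds to the much smaller figure on the "-/+ buffers/cache" line.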

Finally, in the Hosts view CM displays the host's disk and memory usage regardless of which process is using it. Its figures match the output of `free`.

Created 04-16-2018 04:05 AM


For some reason I cannot see the image you uploaded, but I understand your point and will try to answer your question.

We cannot always match or compare the memory usage reported by CM with what Linux reports, for various reasons:

1. Yes, as you said, CM only counts memory used by Hadoop components. It won't count any other applications running on your Linux host, because CM is designed to monitor only Hadoop and its dependent services.

2. (I am not sure whether you are getting the CM report from the Host Monitor.) There are practical difficulties in getting the memory usage of every client node into a single report. For example, with 100+ nodes whose memory capacities differ (100 GB, 200 GB, 250 GB, 300 GB, etc.), it is difficult to generate a single report showing the memory usage of each client.
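Independently of CM, the processes holding memory on a single host (Hadoop or not, e.g. the JVM started for a client-mode pyspark2 driver) can be listed directly on that host. This is a minimal stdlib sketch, assuming a Linux host with `/proc`; the function names are hypothetical, and summing RSS across processes can double-count shared pages, so the per-process figures will not add up exactly to what `free` reports.

```python
import os


def rss_kb(status_text: str) -> int:
    """Resident set size parsed from a /proc/<pid>/status dump, in kB."""
    for line in status_text.splitlines():
        if line.startswith("VmRSS:"):
            return int(line.split()[1])
    return 0  # kernel threads have no VmRSS line


def top_processes(n: int = 10, proc: str = "/proc") -> list:
    """Return (rss_kb, pid, name) for the n processes with the largest RSS."""
    rows = []
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            with open(os.path.join(proc, pid, "status"), errors="replace") as f:
                text = f.read()
        except OSError:
            continue  # the process exited or is not readable
        name = "?"
        for line in text.splitlines():
            if line.startswith("Name:"):
                name = line[len("Name:"):].strip()
                break
        rows.append((rss_kb(text), int(pid), name))
    rows.sort(reverse=True)
    return rows[:n]


if __name__ == "__main__":
    if os.path.isdir("/proc"):
        for rss, pid, name in top_processes():
            print(f"{rss / 1024:10.0f} MB  {pid:>7}  {name}")
```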

Still, if the default report available in CM does not meet your requirement, you may be able to build a custom chart from CM -> Chart (menu) -> your tsquery, as described here:

https://www.cloudera.com/documentation/enterprise/5-9-x/topics/admin_cluster_util_custom.html

Created 04-16-2018 06:46 AM


@saranvisa

Thanks for your quick reply.

The CM report is of the particular host where pyspark2 is running.

Custom charts will provide the memory usage of Hadoop components only.
