Cloudera VM Free to use Apache Hadoop with Spark

Labels: Apache Hadoop, Apache Hive, Apache Spark, HDFS

Created on 05-11-2016 12:09 PM - edited 09-16-2022 03:18 AM

Hi,

Is there a free virtual machine for using Apache Hadoop and Spark? I need to do some tasks with HDFS and Hive, and then some analysis with Spark.

Thanks!

Created 05-11-2016 12:24 PM

Created 05-23-2016 10:57 AM

Created 05-23-2016 12:36 PM

OK, thank you. I'll try it later. By the way, can you open the Spark folder from Hue? I get a message that the application is not installed, although I can open all the other folders. At least in 5-5 I had more directories for Spark :-). That's why I didn't try any Spark shells from the command line: I assumed there was nothing there.

Created 05-23-2016 04:25 PM

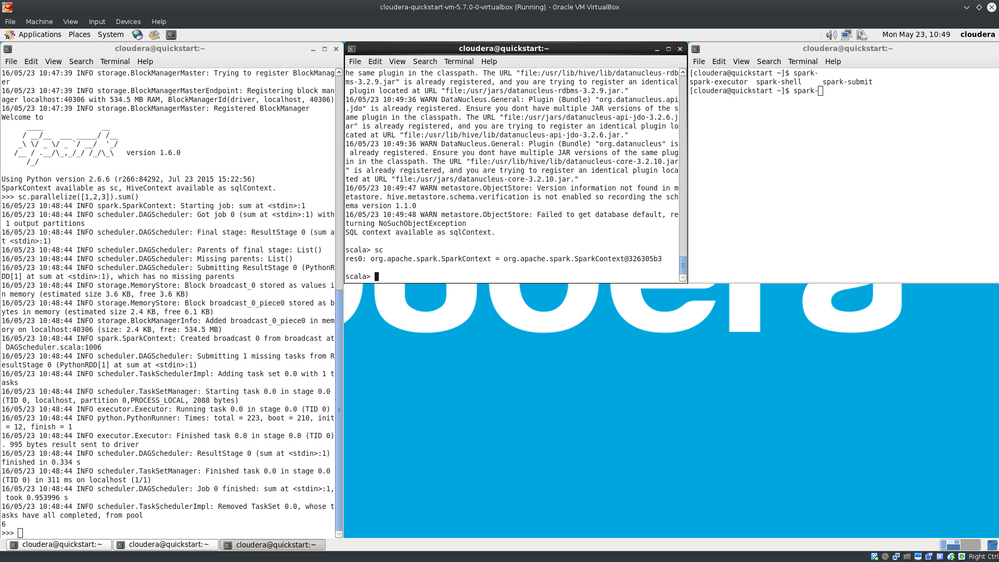

Thank you, I have Spark! But what am I doing wrong?

At localhost:4040/jobs I can see that a job ran, but from the main menu no completed applications are found.

Thank you.
