Cloudera Community: Support Questions

Combining Attributes in NiFi

Labels: Apache NiFi

Created 01-09-2017 02:14 PM

Hi, I have a scenario where I receive a data file and a control file. The data file has the actual data, and the control file has details about the data file, such as its filename, size, etc. Below are the file names:

- file_name: ABC.txt
- ctrl_file_name: CTRL_ABC.txt

I have to read the files from the local filesystem and write them into HDFS.

Say I have the following flow in NiFi: ListFile -> UpdateAttribute (file_name = ABC.txt, ctrl_file_name = CTRL_ABC.txt). Is there a way to combine these two attributes, read both files using the FetchFile processor, and write them into HDFS at once?

Thanks!

Created 01-09-2017 02:25 PM

How do you plan to combine the "content" of these two files together?

This is the first question that needs to be addressed. You can use the MergeContent processor to merge the "content" of multiple FlowFiles using binary concatenation. If this is acceptable, then all you need to do is consume both your data file and control file, use UpdateAttribute to extract a common name from both filenames into a new attribute, and finally use that new attribute as the "Correlation Attribute Name" in the MergeContent processor.

You would also want to set "Minimum Number of Entries" to 2 in the MergeContent processor.
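To make the correlation idea concrete, here is a small Python simulation of what the answer describes: derive a common name from each filename, bin FlowFiles by that value, and concatenate a bin once it holds at least two entries. It is not NiFi code; `FlowFile`, `common_name`, `merge_by_correlation`, and the `core.name` attribute are hypothetical, and the naming rule (strip a leading `CTRL_`) is an assumption. In UpdateAttribute, the same rule could probably be written in Expression Language along the lines of `${filename:replaceFirst('^CTRL_', '')}`.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FlowFile:
    """Toy stand-in for a NiFi FlowFile: attributes plus byte content."""
    attributes: dict
    content: bytes = b""


def common_name(filename: str) -> str:
    # Hypothetical naming rule: CTRL_ABC.txt and ABC.txt both map to "ABC.txt".
    prefix = "CTRL_"
    return filename[len(prefix):] if filename.startswith(prefix) else filename


def merge_by_correlation(flowfiles, min_entries=2):
    """Bin FlowFiles on a correlation attribute and concatenate each full bin.

    Returns (merged, waiting): bins with fewer than min_entries members are
    handed back unmerged, loosely like an incomplete MergeContent bin.
    """
    bins = defaultdict(list)
    for ff in flowfiles:
        # UpdateAttribute step: store the common name in a new attribute.
        ff.attributes["core.name"] = common_name(ff.attributes["filename"])
        bins[ff.attributes["core.name"]].append(ff)

    merged, waiting = [], []
    for key, members in bins.items():
        if len(members) >= min_entries:
            # Binary concatenation, in the order the FlowFiles arrived.
            merged.append(FlowFile({"core.name": key},
                                   b"".join(m.content for m in members)))
        else:
            waiting.extend(members)
    return merged, waiting


files = [
    FlowFile({"filename": "ABC.txt"}, b"r1\nr2\n"),
    FlowFile({"filename": "CTRL_ABC.txt"}, b"ABC.txt,6,2\n"),
    FlowFile({"filename": "XYZ.txt"}, b"x\n"),  # no partner yet
]
merged, waiting = merge_by_correlation(files)
```

This sketch keeps arrival order inside a bin, so nothing in it guarantees that the control file's content comes first.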

Matt

Created 01-09-2017 02:24 PM

Can you explain a bit more about what is to be done with both files? So far it looks like your flow is finding the non-control files and adding an attribute for its corresponding control file. How do you intend to use the contents of the control file, and what would be the content for the file written to HDFS?

Created 01-09-2017 04:26 PM

Hi Matt,

Below is my requirement at a high level.

As I mentioned in my previous post, the data file contains the actual data and the control file has the details of the data file. If there are 100 records in the data file, the control file has these details (name of the data file, file size, and record count). My requirement is to read the control file and get the record count (storing it in an attribute), then read the data file and validate it against the record count derived from the control file to check whether the counts match. If the record count matches, I will process the data file; if not, I have to move both the control file and the data file to a reject path in HDFS.

When I read the files, I fetch the control file (CTRL_ABC.txt) first and then, using the control file name, I fetch the data file (ABC.txt), storing the filenames in two separate attributes. To read the contents of the files I use a ListFile processor and two FetchFile processors (to read the files separately), and then do the validation. If the validation fails, I need to move both files to the reject path.

Is there a way to move these files at once, or do I need to move them separately using two different FetchFile and PutHDFS processors?

Sample flow:

ListFile -> UpdateAttribute (to get data/ctrl file names) -> FetchFile (read ctrl file) -> UpdateAttribute (get record count) -> FetchFile (read data file) -> custom processor (to do validation)
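A rough Python sketch of the validation step in the sample flow, assuming (hypothetically) that the control file is a single comma-separated line `<data file name>,<size in bytes>,<record count>` and that the data file holds one record per line. The function names and the `valid`/`reject` route names are illustrative only, not NiFi processors or relationships.

```python
def parse_control(ctrl_text: str) -> dict:
    # Hypothetical layout: "<data file name>,<size in bytes>,<record count>".
    name, size, count = ctrl_text.strip().split(",")
    return {"data.file.name": name,
            "data.file.size": int(size),
            "record.count": int(count)}


def count_records(data: bytes) -> int:
    # One record per line; a final line without a trailing newline still counts.
    return len(data.splitlines())


def validate(ctrl_text: str, data: bytes) -> str:
    """Return "valid" when the data file's record count matches the control file."""
    expected = parse_control(ctrl_text)["record.count"]  # UpdateAttribute step
    return "valid" if count_records(data) == expected else "reject"


print(validate("ABC.txt,6,2", b"r1\nr2\n"))  # valid
print(validate("ABC.txt,6,3", b"r1\nr2\n"))  # reject
```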

Thanks!

Created 01-09-2017 04:27 PM

Thanks Matt for the response!! I have updated my requirement above. Could you please take a look at it and help me out?

Created on 01-09-2017 10:08 PM - edited 08-19-2019 03:34 AM

Let assume you want to merge the text based Content of both your ABC.txt and CTRL_ABC.txt files into one single NiFi FlowFile. The resulting content of that new merge FlowFile should consist of the content of CTRL_ABC.txt before ABC.txt.

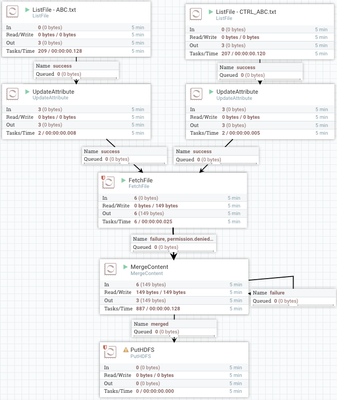

A simple combination of ListFile (need 2 of these) --> UpdateAtttribute (need 2 of these) --> FetchFile --> MergeContent will do the trick.

The flow would look something like this:

A template is below that can be uploaded to your NiFi which will show how to configure each of these processors so that they are merged in the proper order and merged with the appropriate CTRL files.

merge-two-files-using-defragmentation.xml
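The template name points at MergeContent's Defragment strategy, which groups FlowFiles by the `fragment.identifier` attribute, expects `fragment.count` members, and orders them by `fragment.index`. The template itself is not reproduced here, so the Python simulation below only assumes how the two UpdateAttribute processors might set those attributes: both files get the common name as identifier, and the control file gets the lower index so its content comes first. `tag_for_defrag` and `defragment` are hypothetical helpers, not NiFi APIs.

```python
CTRL_PREFIX = "CTRL_"


def tag_for_defrag(filename: str) -> dict:
    # Hypothetical UpdateAttribute settings: both files share an identifier,
    # and the control file gets the lower index so it is merged first.
    is_ctrl = filename.startswith(CTRL_PREFIX)
    core = filename[len(CTRL_PREFIX):] if is_ctrl else filename
    return {"filename": filename,
            "fragment.identifier": core,
            "fragment.index": "0" if is_ctrl else "1",
            "fragment.count": "2"}


def defragment(flowfiles):
    """Merge (attributes, content) pairs per fragment.identifier, ordered by fragment.index."""
    groups = {}
    for attrs, content in flowfiles:
        groups.setdefault(attrs["fragment.identifier"], []).append((attrs, content))

    merged = {}
    for ident, parts in groups.items():
        if len(parts) != int(parts[0][0]["fragment.count"]):
            continue  # incomplete group: this sketch just skips it
        parts.sort(key=lambda p: int(p[0]["fragment.index"]))
        merged[ident] = b"".join(content for _, content in parts)
    return merged


# The data file arrives first, yet the control file's content ends up in front.
incoming = [(tag_for_defrag("ABC.txt"), b"r1\nr2\n"),
            (tag_for_defrag("CTRL_ABC.txt"), b"ABC.txt,6,2\n")]
print(defragment(incoming))  # {'ABC.txt': b'ABC.txt,6,2\nr1\nr2\n'}
```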

Hope this helps,

Matt

Created 01-09-2017 10:10 PM

If you found this information helpful, please accept the answer.

Created on 03-05-2018 07:08 PM - edited 08-19-2019 03:34 AM

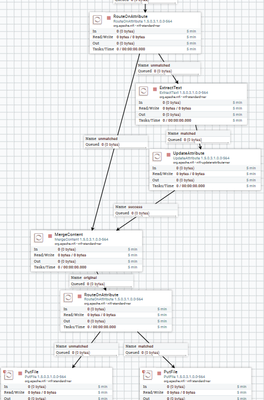

Hi @Matt Burgess, I have a question with regards to combining attributes from two different flows. I would like to merge the attributes of two different flows without merging the contents. For example, looking at the following flow:

I have different types of files and I would like to add some attributes based on the content of one type of files and then merge all attributes. I know using MergeContent I will be able to keep all unique attributes if the output of the MergeContent is set to merged. But I do not want to merge the contents of my files and would like to keep them as their original format. So, is there any way to merge the attributes of two different flows?

Thanks,

Sam

Created 03-07-2018 01:25 AM

@Matt Burgess, just to clarify my above question, I need to get some values from 1.ctl and use these values as attributes for 2.txt.

GetFile > RouteOnAttribute (filename) > (if filename ends with ctl: ExtractText > UpdateAttribute)

How can I add these attributes to the attributes of 2.txt?

I really appreciate your help.

Created 03-07-2018 03:05 PM

Will it always be the same ctl file? If so, you could use LookupAttribute to add attributes from the ctl file based on some key (like an ID). Alternatively, you could read in the ctl file, extract the values to attributes, then set the filename attribute to 2.txt and use FetchFile to get 2.txt into the flow file's content (the ctl attributes should remain on the flow file).
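The second alternative can be simulated in plain Python: extract values from the ctl content into attributes (loosely what ExtractText does with one capture group per property), overwrite `filename` with the data file's name, then replace the content with the data file's bytes while leaving the attributes alone. With FetchFile this relies on its "File to Fetch" property, which by default reads `${absolute.path}/${filename}`. The helper names, the `ctl.*` attribute names, and the sample ctl format are assumptions for illustration; `read_file` is a hypothetical stand-in for FetchFile.

```python
import re


def extract_text(content: str, patterns: dict) -> dict:
    # Loosely like ExtractText: each property maps to a regex, and the first
    # capture group becomes an attribute with the property's name.
    attrs = {}
    for name, regex in patterns.items():
        match = re.search(regex, content, re.MULTILINE)
        if match:
            attrs[name] = match.group(1)
    return attrs


def ctl_to_data_flowfile(ctl_attrs, ctl_content, patterns, data_filename, read_file):
    """Turn the ctl FlowFile into the data FlowFile while keeping ctl-derived attributes."""
    attrs = dict(ctl_attrs)
    attrs.update(extract_text(ctl_content, patterns))  # ExtractText
    attrs["filename"] = data_filename                  # UpdateAttribute
    content = read_file(attrs["filename"])             # FetchFile: content replaced, attributes kept
    return attrs, content


# Hypothetical ctl format and attribute names; a dict stands in for the filesystem.
patterns = {"ctl.id": r"^id=(\S+)$", "ctl.source": r"^source=(\S+)$"}
files_on_disk = {"2.txt": b"payload"}
attrs, content = ctl_to_data_flowfile({"filename": "1.ctl"}, "id=42\nsource=sensorA\n",
                                      patterns, "2.txt", files_on_disk.__getitem__)
print(attrs)    # {'filename': '2.txt', 'ctl.id': '42', 'ctl.source': 'sensorA'}
print(content)  # b'payload'
```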

Selective merging is IMO not a "natural" operation in a flow-based paradigm. It can be accomplished with something like ExecuteScript, but hopefully one of the above options would work better for your use case.
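For comparison, this is roughly the logic a scripted processor (for example, ExecuteScript) would have to implement for selective attribute merging: pair each data FlowFile with its ctl FlowFile by a shared key, copy the ctl attributes across, and keep the data content untouched. It is a plain Python sketch over (attributes, content) tuples, not the ExecuteScript session API; the `pair.key` attribute and the precedence rule (the data FlowFile's own attributes win) are assumptions.

```python
def merge_attributes(flowfiles, key="pair.key"):
    """Copy ctl attributes onto the matching data FlowFile; data content is left as-is.

    flowfiles is a list of (attributes, content) tuples. A FlowFile whose
    filename ends in ".ctl" is treated as a control file.
    """
    ctl_by_key, data_items = {}, []
    for attrs, content in flowfiles:
        if attrs["filename"].endswith(".ctl"):
            ctl_by_key[attrs[key]] = attrs
        else:
            data_items.append((attrs, content))

    out = []
    for attrs, content in data_items:
        ctl = ctl_by_key.get(attrs[key])
        if ctl is None:
            continue  # no partner seen; a real processor would have to wait or route it
        combined = {k: v for k, v in ctl.items() if k != "filename"}
        combined.update(attrs)  # the data FlowFile's own attributes win on conflicts
        out.append((combined, content))
    return out


pairs = [({"filename": "1.ctl", "pair.key": "b1", "ctl.id": "42"}, b"id=42\n"),
         ({"filename": "2.txt", "pair.key": "b1"}, b"payload")]
print(merge_attributes(pairs))
# [({'pair.key': 'b1', 'ctl.id': '42', 'filename': '2.txt'}, b'payload')]
```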