Cloudera Community: Support Questions

Configuration of the Remote Process Group in a NiFi cluster

Labels: Apache NiFi

Created 10-21-2017 11:12 PM

I have a NiFi cluster with 5 machines; their FQDNs are:

- mycoapmny.nifiui.node01.net

- mycoapmny.nifiui.node02.net (the primary node)

- mycoapmny.nifiui.node03.net

- mycoapmny.nifiui.node04.net

- mycoapmny.nifiui.node05.net

I am configuring a Remote Process Group (RPG) on mycoapmny.nifiui.node02.net to list files in a directory on the same node.

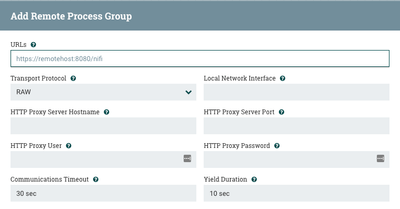

However, the configuration dialog shows the following options, and I am not sure how to fill them in:

- Transport Protocol: will it be HTTP or RAW?

- HTTP Proxy Server Hostname: the hostnames of each node in the cluster?

- HTTP Proxy User: is my AD group username required here?

- Local Network Interface: bond0? This is the name I got from running ifconfig on the primary node.

- HTTP Proxy Server Port: which port? 9091, since that is the port configured for my NiFi UI?

- HTTP Proxy Password: is my AD group password required here?

Created on 10-23-2017 02:41 PM - edited 08-17-2019 05:33 PM

I am not sure I am completely following your use case.

With a NiFi cluster configuration, it does not matter which node's UI you access: the canvas you are looking at is what is running on all nodes in your cluster. The nodes that are currently elected cluster coordinator and/or primary node may change at any time.

A Remote Process Group (RPG) is used to send FlowFiles to, or retrieve FlowFiles from, another NiFi instance/cluster. It is not used to list files on a system, so I am not following you there.

When it comes to configuring an RPG, not all fields are required.

- You must provide the URL of the target NiFi instance/cluster (with a cluster, this URL can be any one of the nodes).

- You must choose either "RAW" (default) or "HTTP" as your desired Transport Protocol. Whichever you choose, the RPG connects to the target URL over HTTP to retrieve Site-to-Site (S2S) details about the target instance/cluster (number of nodes if it is a cluster, available remote input/output ports, etc.). For the actual data transmission:

  - If configured for "RAW", data is sent over a dedicated S2S port, configured on the target NiFi by the nifi.remote.input.socket.port property in nifi.properties.

  - If configured for "HTTP", data is transmitted over the same port used in the URL for the target NiFi's UI. (This requires the nifi.remote.input.http.enabled property in nifi.properties to be set to "true".)
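The port selection described above can be modeled with a short Python sketch. It is a simplified illustration, not NiFi code: `parse_properties` handles only plain `key=value` lines (real `.properties` files also allow `:` separators, escapes, and line continuations), and `s2s_data_port`, the socket port 10443, and the `http://` scheme in the example URL are hypothetical. In the example, 9091 (the UI port from the question) is where HTTP transport would carry data; it is not an HTTP proxy setting.

```python
from urllib.parse import urlparse


def parse_properties(text: str) -> dict[str, str]:
    """Parse plain key=value lines, skipping blank lines and # comments (simplified)."""
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def s2s_data_port(target_url: str, props: dict[str, str], protocol: str) -> int:
    """Return the target port an RPG would send FlowFile data to (simplified model).

    target_url: the URL entered in the RPG (the target NiFi UI URL).
    props: the *target* NiFi's nifi.properties values.
    protocol: "RAW" or "HTTP".
    """
    protocol = protocol.upper()
    if protocol == "RAW":
        # RAW uses the dedicated Site-to-Site socket port.
        port = props.get("nifi.remote.input.socket.port", "")
        if not port:
            raise ValueError("RAW requires nifi.remote.input.socket.port on the target")
        return int(port)
    if protocol == "HTTP":
        # HTTP reuses the port from the UI URL, but must be enabled on the target.
        if props.get("nifi.remote.input.http.enabled", "").lower() != "true":
            raise ValueError("HTTP requires nifi.remote.input.http.enabled=true on the target")
        parsed = urlparse(target_url)
        # No explicit port in the URL: fall back to the scheme's default port.
        return parsed.port or (443 if parsed.scheme == "https" else 80)
    raise ValueError(f"unknown transport protocol: {protocol}")


# Hypothetical excerpt of the target's nifi.properties (10443 is an invented value).
sample = """
# Site-to-Site settings
nifi.remote.input.socket.port=10443
nifi.remote.input.http.enabled=true
"""
props = parse_properties(sample)
url = "http://mycoapmny.nifiui.node02.net:9091/nifi"
print(s2s_data_port(url, props, "RAW"))   # 10443
print(s2s_data_port(url, props, "HTTP"))  # 9091
```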

The other properties are optional:

- Configure the Proxy properties only if an external proxy server sits between your NiFi and the target NiFi and prevents a direct connection over the ports configured above (default: unset). Because the RPG sends data directly to each target node (when the target is a cluster), none of the NiFi nodes themselves act as a proxy in this process.

- Configure the Local Network Interface if your NiFi nodes have multiple network interfaces and you want to force the RPG to use only a specific one (default: unset).
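To summarize which RPG fields the question actually needs, here is a hypothetical Python model of the configuration dialog. `RpgSettings` and its field names are invented for illustration and are not a NiFi API; the checks encode only the rules stated above: a URL is required, the transport protocol is RAW or HTTP, and the proxy fields apply only when an external proxy sits in the path.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RpgSettings:
    """Hypothetical model of the RPG configuration dialog; not a NiFi API."""

    url: str                                       # required: any node of the target cluster
    transport_protocol: str = "RAW"                # required: "RAW" (default) or "HTTP"
    local_network_interface: Optional[str] = None  # optional, e.g. "bond0"
    proxy_host: Optional[str] = None               # optional: external proxy only
    proxy_port: Optional[int] = None
    proxy_user: Optional[str] = None
    proxy_password: Optional[str] = None

    def problems(self) -> list[str]:
        """Return rule violations (an empty list if the settings look consistent)."""
        issues = []
        if not self.url:
            issues.append("a target URL is required")
        if self.transport_protocol not in ("RAW", "HTTP"):
            issues.append("transport protocol must be RAW or HTTP")
        proxy_extras = (self.proxy_port, self.proxy_user, self.proxy_password)
        if self.proxy_host is None and any(v is not None for v in proxy_extras):
            issues.append("proxy port/user/password only apply when an external proxy host is set")
        return issues


# No external proxy between the nodes: leave every proxy field unset.
ok = RpgSettings(url="http://mycoapmny.nifiui.node02.net:9091/nifi", transport_protocol="HTTP")
print(ok.problems())  # []

# Filling proxy fields with the UI port and an AD user, but no proxy host, is flagged.
misread = RpgSettings(
    url="http://mycoapmny.nifiui.node02.net:9091/nifi",
    proxy_port=9091,
    proxy_user="ad-user",  # hypothetical value
)
print(misread.problems())
```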

Additional documentation resources from Apache:

https://nifi.apache.org/docs/nifi-docs/html/user-guide.html#site-to-site

https://nifi.apache.org/docs/nifi-docs/html/administration-guide.html#site_to_site_properties

If you find this answer addresses your question, please take a moment to click "Accept" below the answer.

Thanks,

Matt

Created 10-24-2017 01:41 PM

* HCC Tip: Don't respond to an answer with another answer. Add comments to the existing answer unless you are truly starting a new answer.

Is the directory from which ListFile lists files mounted on all your nodes, or does it exist on only one node in your cluster?

Keep in mind when using "primary node" only scheduling that the primary node can change at any time.

--------

If it exists only on one node, you have a single point of failure in your cluster should that NiFi node go down.

To avoid this single point of failure:

1. You could use NiFi to execute your script on the "primary node" every 15 minutes, assuming the directory the script writes to is not mounted across all nodes. Then you could have ListFile running on all nodes all the time. Of course, only the ListFile on one given node will have data to ingest at any given time, since your script is executed only on the currently elected primary node.

2. Switch to the ListSFTP and FetchSFTP processors. That way, no matter which node is the current primary node, it can still connect over SFTP and list the data. ListSFTP maintains cluster-wide state in ZooKeeper, so when the primary node changes it does not start over from the beginning.
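Why cluster-wide state matters for option 2 can be illustrated with a simplified Python model. This is not how ListSFTP is implemented: the hypothetical `list_new_files` tracks only the newest modification time seen (the real processor handles more edge cases, such as files sharing a timestamp), and a plain dict stands in for the ZooKeeper-backed cluster state. File names and timestamps are made up.

```python
def list_new_files(remote_files: dict[str, int], state: dict[str, int]) -> list[str]:
    """List files modified after the stored timestamp, then advance the timestamp.

    remote_files maps file name -> modification time (e.g. epoch seconds).
    state is the processor's stored state; pass the SAME dict from every node
    to mimic cluster-wide state, or a per-node dict to mimic node-local state.
    """
    last = state.get("last_timestamp", -1)
    new = sorted(name for name, mtime in remote_files.items() if mtime > last)
    if new:
        state["last_timestamp"] = max(remote_files[name] for name in new)
    return new


sftp_dir = {"a.csv": 100, "b.csv": 200}  # made-up listing of the remote directory
cluster_state: dict[str, int] = {}       # stand-in for ZooKeeper-backed cluster state

print(list_new_files(sftp_dir, cluster_state))  # node02 is primary: ['a.csv', 'b.csv']

sftp_dir["c.csv"] = 300
# The primary role moves to node03, which reads the same cluster-wide state.
print(list_new_files(sftp_dir, cluster_state))  # ['c.csv']

# With node-local state, a newly elected primary would start over from the beginning.
node04_local_state: dict[str, int] = {}
print(list_new_files(sftp_dir, node04_local_state))  # ['a.csv', 'b.csv', 'c.csv']
```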

-------

The RPG is very commonly used to redistribute FlowFiles within the same NiFi cluster.

Check out this HCC article that covers how load-balancing occurs with a RPG:

https://community.hortonworks.com/content/kbentry/109629/how-to-achieve-better-load-balancing-using-...
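The list/fetch pattern with an RPG pointing back at the same cluster can be sketched as follows: the primary node produces a listing once, and the RPG spreads the listed entries across the nodes, each of which then fetches its share. The round-robin assignment in the hypothetical `distribute_listing` helper is only a stand-in; actual Site-to-Site distribution is decided by NiFi, as discussed in the load-balancing article linked above.

```python
from itertools import cycle


def distribute_listing(listing: list[str], nodes: list[str]) -> dict[str, list[str]]:
    """Assign listed file names to nodes round-robin (a stand-in for S2S distribution)."""
    assignment: dict[str, list[str]] = {node: [] for node in nodes}
    # zip stops when the listing is exhausted, so cycle() never runs forever.
    for name, node in zip(listing, cycle(nodes)):
        assignment[node].append(name)
    return assignment


# The primary node lists the directory once; file names are hypothetical.
listing = [f"batch_{i}.csv" for i in range(7)]
nodes = [f"mycoapmny.nifiui.node0{i}.net" for i in range(1, 6)]

for node, names in distribute_listing(listing, nodes).items():
    # Each node would then fetch only the entries it received.
    print(node, names)
```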

Thank you,

Matt

Created 10-25-2017 05:56 AM

Thanks for the reply, appreciate it.

In my case, the directory from which files will be listed exists on only one node.

For now, I am trying to implement this with the ListSFTP and FetchSFTP processors and hope it works.

Thank you once again for your valuable suggestions.

Thanks,

Basant

Created 10-23-2017 09:29 PM

My use case:

On one of the nodes of the NiFi cluster, a Unix shell script runs and creates files in a directory every 15 minutes.

To ingest these files, I am using a ListFile processor on the node where the files are generated, then a Remote Process Group feeding a FetchFile processor, so that the whole NiFi cluster is used efficiently.

My question is: do we need to run the ListFile processor on the primary node?

What will happen if the primary node goes down?

Will the newly elected primary node be able to access the original directory?

I am also following this blog:

https://pierrevillard.com/2017/02/23/listfetch-pattern-and-remote-process-group-in-apache-nifi/

According to this post, an RPG can also be used to connect a NiFi cluster to itself.

Could you please clarify this?

Thanks for all your help and support.

Thanks,

Basant