Confirming hosts is all failed HDP2.5 with ambari

Labels: Apache Ambari

Created on 03-22-2017 10:28 PM - edited 08-18-2019 03:40 AM

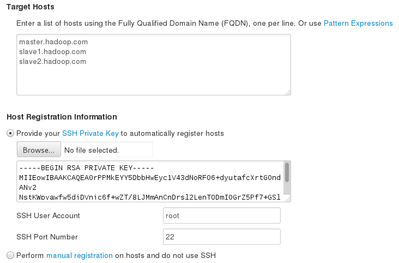

I'm following the installation instructions and am now at step 3.5 (Install Options).

For this step, I added the following 3 lines to /etc/hosts:

127.0.0.1 master master.hadoop.com

127.0.0.1 slave1 slave1.hadoop.com

127.0.0.1 slave2 slave2.hadoop.com

Then, in the terminal, I ran # hostname master.hadoop.com

I tried to generate SSH keys by following the instructions below.

# ssh-keygen

# .ssh/id_rsa.pub

# cat id_rsa.pub >> authorized_keys

# chmod 700 ~/.ssh

# chmod 600 ~/.ssh/authorized_keys

Then I opened the private key:

# vi .ssh/id_rsa

and copied and pasted its contents into the web UI.

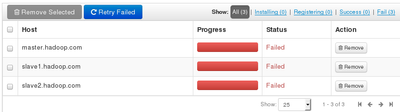

In the next step (Confirm Hosts), all hosts fail.

master: installing, then fail

others: fail immediately

I don't know why.

Please help me.

Thanks for reading.

If someone can give me a solution, I would be very grateful.

Created 03-22-2017 10:35 PM

It seems the /etc/hosts entries are incorrect.

Ideally they should look like this:

127.0.0.1 master.hadoop.com master

127.0.0.1 slave1.hadoop.com slave1

127.0.0.1 slave2.hadoop.com slave2

Can you run hostname -f on all three nodes and share the output?

Did you set up passwordless SSH between the nodes?

Try the following from the master node:

ssh master

ssh slave1

ssh slave2

Created 03-22-2017 11:14 PM

I revised /etc/hosts as follows:

127.0.0.1 master.hadoop.com master

127.0.0.1 slave1.hadoop.com slave1

127.0.0.1 slave2.hadoop.com slave2

---------------------------------------------------

[root@localhost ~]# hostname

localhost.localdomain

[root@localhost ~]# hostname -f

localhost

After rebooting CentOS 7, the hostname changed back.

Do I have to set the hostname again after every reboot

using this command: "# hostname master.hadoop.com"?

------------------------------------------------------------------------------

I generated the SSH key only on the master node, because I have only 1 computer.

If I want to use multiple nodes, do I need multiple computers?

If so, a single node is OK,

because my purpose is to study.

---------------------------------------------------------------------------

[root@localhost ~]# ssh master

The authenticity of host 'master (127.0.0.1)' can't be established. ECDSA key fingerprint is b8:c1:a1:6e:d6:85:12:aa:cc:96:1f:34:9c:61:90:9a. Are you sure you want to continue connecting (yes/no)?

# yes

Warning: Permanently added 'master' (ECDSA) to the list of known hosts. Last login: Wed Mar 22 15:59:27 2017

[root@localhost ~]# ssh slave1

The authenticity of host 'slave1 (127.0.0.1)' can't be established. ECDSA key fingerprint is b8:c1:a1:6e:d6:85:12:aa:cc:96:1f:34:9c:61:90:9a. Are you sure you want to continue connecting (yes/no)?

# yes

Warning: Permanently added 'slave1' (ECDSA) to the list of known hosts. Last login: Wed Mar 22 16:12:14 2017 from localhost

[root@localhost ~]# ssh slave2

The authenticity of host 'slave2 (127.0.0.1)' can't be established. ECDSA key fingerprint is b8:c1:a1:6e:d6:85:12:aa:cc:96:1f:34:9c:61:90:9a. Are you sure you want to continue connecting (yes/no)?

# yes

Warning: Permanently added 'slave2' (ECDSA) to the list of known hosts. Last login: Wed Mar 22 16:12:27 2017 from localhost

Created 03-23-2017 12:55 AM

Your "/etc/hosts" entries do not look right. Currently I see:

127.0.0.1 master.hadoop.com master

127.0.0.1 slave1.hadoop.com slave1

127.0.0.1 slave2.hadoop.com slave2

On a single host you cannot assign the same IP address to multiple HDP cluster nodes; that will cause conflicts. Also, when the agent is installed, its contents are pushed to "/var/lib/ambari-agent", "/usr/lib/ambari-agent/" and "/etc/ambari-agent/", so three nodes on one machine would share the same directories.

So if you want to install all 3 nodes on the same physical host, you should use Docker or VMs rather than the local machine itself. For example, install VirtualBox, create 3 VMs, and then install Ambari.

Once the 3 nodes are installed on 3 VMs, the "/etc/hosts" entries can use 3 different IPs, as follows:

Example:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.20.115.110 jss1.example.com master

172.20.115.120 jss2.example.com slave1

172.20.115.130 jss3.example.com slave2
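One quick way to spot the problem described in this reply is to look for an IP address that appears on more than one line of the hosts file. The following is only a minimal sketch, not part of Ambari: the function name shared_ips is made up for illustration, and it assumes the usual hosts format of one IP address followed by its names on each line.

```shell
#!/bin/sh
# Print every IP address that is used on more than one non-comment line
# of a hosts file. Hypothetical helper name; not an Ambari tool.
shared_ips() {
    awk 'NF == 0 || $1 ~ /^#/ { next }   # skip blank lines and comments
         { seen[$1]++ }                  # count lines per IP (first field)
         END { for (ip in seen) if (seen[ip] > 1) print ip }' "$1" | sort
}

# Usage (assumed path): shared_ips /etc/hosts
```

Run against the three-line file from the question, this prints 127.0.0.1; run against the five-line example above, it prints nothing.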

Created 03-23-2017 01:20 AM

Hmm...

For 3 nodes, do I need 4 computers?

Computer 1: localhost

Computer 2: master

Computer 3: slave1

Computer 4: slave2

Created 03-23-2017 01:34 AM

If you have a 3-node cluster, you need three VMs (computers/host machines).

- Every host should have a unique hostname, and the following command should return the correct FQDN on every host:

# hostname -f

- Every host should have an "/etc/hosts" file with the same content, so that the hosts can resolve each other.

Example:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.10.10.101 master

10.10.10.102 slave1

10.10.10.103 slave2

The same entries should be present in the "/etc/hosts" file on the master, slave1 and slave2 hosts. Do not delete the first two lines (the 127.0.0.1 and ::1 entries) from /etc/hosts, as they are the defaults.

- Then you need to set up passwordless SSH from the master host to the slave hosts.

- Then install Ambari and configure the cluster.
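For the passwordless SSH step, the commands in the original question (append the public key to authorized_keys, then chmod 700 and chmod 600) can be wrapped so they are safe to repeat. This is only a sketch under assumptions: authorize_key is a hypothetical helper name, and it works on a local directory. On a real cluster the key pair would first be created with ssh-keygen on the master, and a tool such as ssh-copy-id is the usual way to do the same thing on each remote host.

```shell
#!/bin/sh
# Append a public key to an authorized_keys file and apply the permissions
# used in the question (700 on the directory, 600 on the file).
# Hypothetical helper; arguments: <public key file> [ssh directory].
authorize_key() {
    pubkey_file=$1
    ssh_dir=${2:-$HOME/.ssh}
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
    touch "$ssh_dir/authorized_keys" || return 1
    # Add the key only if that exact line is not already present.
    grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys" ||
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Usage (assumed default key path): authorize_key ~/.ssh/id_rsa.pub
```

Calling it twice with the same key leaves a single entry in authorized_keys, so it can be rerun while troubleshooting without piling up duplicates.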

Please see the Hortonworks video on the same topic:

HDP 2.4 Multinode Hadoop Installation using Ambari: https://www.youtube.com/watch?v=zutXwUxmaT4

And

Created 03-25-2017 02:14 PM

Created 03-31-2017 09:08 AM

Thank you for the video link.

It was very helpful.

Created 03-25-2017 03:17 PM

Please try the following:

1) SSH between the Ambari server and the hosts needs to be enabled; it is better to set up PSH connectivity using parallel ssh.

2) The hostname should be a fully qualified domain name (FQDN), for example:

# hostname -f

centos04.master.com

3) Instead of copying and pasting the SSH key, copy the key file to your local machine and supply it as a file.
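Point 2 can be checked on each node before running the Confirm Hosts step. The sketch below is an illustration only: looks_like_fqdn is a made-up name, and the check is purely syntactic (at least two dot-separated labels), so it does not prove that the name resolves. On CentOS 7, hostnamectl set-hostname is the usual way to set a hostname that survives a reboot, which a plain hostname command does not do.

```shell
#!/bin/sh
# Succeed if the argument looks like a fully qualified domain name:
# two or more dot-separated labels made of letters, digits, and hyphens.
# Hypothetical helper; a syntax check only, it does not query DNS.
looks_like_fqdn() {
    printf '%s\n' "$1" |
        grep -Eq '^[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?(\.[A-Za-z0-9]([A-Za-z0-9-]*[A-Za-z0-9])?)+$'
}

# Usage on a node:
# looks_like_fqdn "$(hostname -f)" || echo "hostname -f did not return an FQDN"
# To set the name persistently on CentOS 7 (run as root):
# hostnamectl set-hostname master.hadoop.com
```

With the output shown earlier in the thread, where hostname -f returned localhost, the check fails; a name such as centos04.master.com passes.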