Cloudera Community: Support Questions


Confused about the Spark setup doc

Labels: Apache Spark

Created 07-13-2016 09:11 PM


Hi,

It looks like the two parts of the tutorial quoted below conflict: both set the SPARK_HOME variable, but to different values. Any ideas?

link: http://hortonworks.com/hadoop-tutorial/a-lap-around-apache-spark/

3. Set JAVA_HOME and SPARK_HOME:

Make sure that you set JAVA_HOME before you launch the Spark Shell or thrift server.

export JAVA_HOME=<path to JDK 1.8>

The Spark install unpacks the Spark binaries into the directory /usr/hdp/2.3.4.1-10/spark.

Set the SPARK_HOME variable to this directory:

export SPARK_HOME=/usr/hdp/2.3.4.1-10/spark/
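Since the tutorial asks for JAVA_HOME to be set before you launch the Spark Shell or thrift server, a small pre-flight check can catch a missing value early. This is a sketch, not part of the tutorial: `check_spark_env` is a hypothetical helper, and it only checks that `$JAVA_HOME/bin/java` is executable and that `$SPARK_HOME` is an existing directory.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not from the tutorial): verify that JAVA_HOME
# and SPARK_HOME look usable before starting spark-shell or the thrift server.
check_spark_env() {
  # JAVA_HOME must be set and contain an executable bin/java
  if [ -z "${JAVA_HOME:-}" ] || [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME is not set to a JDK directory" >&2
    return 1
  fi
  # SPARK_HOME must be set and point to an existing directory
  if [ -z "${SPARK_HOME:-}" ] || [ ! -d "$SPARK_HOME" ]; then
    echo "SPARK_HOME is not set to an existing directory" >&2
    return 1
  fi
  echo "JAVA_HOME=$JAVA_HOME"
  echo "SPARK_HOME=$SPARK_HOME"
}
```

After the two exports, something like `check_spark_env && "$SPARK_HOME"/bin/spark-shell` would only start the shell when both variables look sane.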

4. Create hive-site.xml in the Spark conf directory:

As user root, create the file $SPARK_HOME/conf/hive-site.xml. Edit the file so that it contains only the following configuration setting:

    <configuration>
      <property>
        <name>hive.metastore.uris</name>
        <!-- Make sure that <value> points to the Hive Metastore URI in your cluster -->
        <value>thrift://sandbox.hortonworks.com:9083</value>
        <description>URI for client to contact metastore server</description>
      </property>
    </configuration>
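The same file can also be written from the shell. A minimal sketch, assuming you run it as root (as the tutorial says) with SPARK_HOME already exported: `write_hive_site` is a hypothetical helper, and its default URI is the sandbox value from the tutorial, so pass your own cluster's metastore URI as the second argument.

```shell
#!/bin/sh
# Hypothetical helper: write_hive_site <spark_home> [metastore_uri]
# Writes <spark_home>/conf/hive-site.xml containing only hive.metastore.uris.
write_hive_site() {
  spark_home="$1"
  # Default is the sandbox URI from the tutorial; replace it for a real cluster
  uri="${2:-thrift://sandbox.hortonworks.com:9083}"
  mkdir -p "$spark_home/conf"
  # Overwrite any existing file so it holds only this one property
  cat > "$spark_home/conf/hive-site.xml" <<EOF
<configuration>
  <property>
    <name>hive.metastore.uris</name>
    <value>${uri}</value>
    <description>URI for client to contact metastore server</description>
  </property>
</configuration>
EOF
}
```

Typical use would be `write_hive_site "$SPARK_HOME" thrift://<metastore-host>:9083`, filling in your own metastore host.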

Set SPARK_HOME

If you haven’t already, make sure to set SPARK_HOME before proceeding:

export SPARK_HOME=/usr/hdp/current/spark-client
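Because this step only applies "if you haven't already", one way to express that in the shell is the `${VAR:-default}` parameter expansion, which keeps an existing non-empty value and otherwise falls back to the default. This is a sketch, not the tutorial's own instruction.

```shell
#!/bin/sh
# Keep SPARK_HOME if an earlier step already exported it (for example the
# versioned /usr/hdp/2.3.4.1-10/spark/ path); otherwise use the HDP "current" path.
export SPARK_HOME="${SPARK_HOME:-/usr/hdp/current/spark-client}"
echo "SPARK_HOME=$SPARK_HOME"
```

With this form, running step 3 and then this step does not overwrite the value chosen in step 3.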

Created on 07-14-2016 02:35 AM - edited 08-19-2019 02:55 AM


Hi,

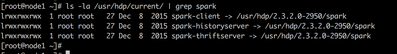

I don't think it matters, because the "/usr/hdp/current/spark-client" directory is a symlink to the "/usr/hdp/2.3.4.1-10/spark/" directory.

You can verify this as shown in the screenshot above.

(The HDP version in my screenshot is slightly different from yours.)
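A minimal sketch of the same check from the command line, assuming GNU `readlink -f` is available (as on typical Linux hosts; `ls -l /usr/hdp/current/` also shows where each link points). `same_dir` is a hypothetical helper that resolves both paths and compares the results.

```shell
#!/bin/bash
# Hypothetical helper: succeed if both paths resolve to the same directory.
# Returns 2 if either path cannot be resolved at all.
same_dir() {
  local a b
  a="$(readlink -f -- "$1")" || return 2
  b="$(readlink -f -- "$2")" || return 2
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Compare the two SPARK_HOME values from the tutorial
if same_dir /usr/hdp/current/spark-client /usr/hdp/2.3.4.1-10/spark/; then
  echo "Both SPARK_HOME values point to the same directory"
else
  echo "The two SPARK_HOME values differ (or one does not exist)"
fi
```

On an HDP node where the symlink is in place, the first message is what you would expect to see.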

Thanks

Created 07-14-2016 05:19 PM


Got it. Thanks for the answer!