Support Questions - Cloudera Community

Convert Parquet file to CSV using NiFi

Labels: Apache NiFi

Created 09-10-2020 12:56 PM

I haven't worked with Parquet files before, but I have a requirement to convert a Parquet file to CSV using NiFi.

I would appreciate it if you could guide me on this.

Created on 09-11-2020 04:42 AM - edited 09-11-2020 04:47 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

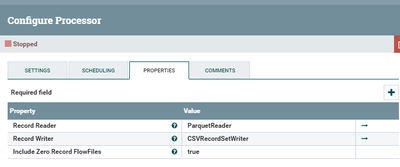

The solution you are looking for is ConvertRecord configured with a ParquetReader and a CSVRecordSetWriter. The ParquetReader is included in NiFi 1.10 and later. If you have an older NiFi, here is a post where I talk about adding the required jar files to NiFi 1.9 (older versions don't include Parquet support):

Another suggestion: if you are working with NiFi and Hadoop/HDFS/Hive, you could store the raw Parquet files, create an external Hive table over them, then select from that table and insert the results into a similar table stored in CSV format. You can then select from the CSV table to produce the CSV file.
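The Hive route can be sketched as three HiveQL statements. The Python helper below only builds the statement text so it can be reviewed or pasted into beeline; the table names, columns, and HDFS location are hypothetical placeholders, not values from this thread.

```python
def hive_parquet_to_csv_statements(parquet_table: str, csv_table: str,
                                   columns: list[tuple[str, str]],
                                   parquet_location: str) -> list[str]:
    """Build HiveQL that exposes raw Parquet files as an external table and
    copies the rows into a comma-delimited text (CSV) table.
    All names passed in are hypothetical; adjust them to your data."""
    cols = ",\n  ".join(f"{name} {hive_type}" for name, hive_type in columns)
    return [
        # 1. External table over Parquet files already sitting in HDFS.
        f"CREATE EXTERNAL TABLE {parquet_table} (\n  {cols}\n)\n"
        f"STORED AS PARQUET\nLOCATION '{parquet_location}'",
        # 2. Table with the same columns, stored as comma-delimited text.
        f"CREATE TABLE {csv_table} (\n  {cols}\n)\n"
        f"ROW FORMAT DELIMITED FIELDS TERMINATED BY ','\nSTORED AS TEXTFILE",
        # 3. Copy the rows; Hive writes the CSV files under the table directory.
        f"INSERT OVERWRITE TABLE {csv_table} SELECT * FROM {parquet_table}",
    ]


if __name__ == "__main__":
    statements = hive_parquet_to_csv_statements(
        "userdata_parquet", "userdata_csv",            # hypothetical tables
        [("id", "INT"), ("first_name", "STRING")],     # hypothetical columns
        "/data/raw/userdata")                          # hypothetical HDFS path
    for stmt in statements:
        print(stmt + ";\n")
```

Note that `ROW FORMAT DELIMITED` does not quote values, so fields containing commas will break the CSV, and the resulting files have no header row.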

Also, to validate or inspect your Parquet file, or to read its schema (if you need it for the controller services), you can use parquet-tools:

If this answer resolves your issue or allows you to move forward, please choose to ACCEPT this solution and close this topic. If you have further dialogue on this topic, please comment here or feel free to private message me. If you have new questions related to your use case, please create a separate topic and feel free to tag me in your post.

Thanks,

Steven @ DFHZ

Created 09-11-2020 06:58 AM

@stevenmatison: I tried the flow below and am getting this error:

```
ConvertRecord[id=7d5d0bb5-0174-1000-1a76-dc9d3a7b1b35] Failed to process StandardFlowFileRecord[uuid=4d10880f-3ce2-475a-ac53-cca01f190600,claim=,offset=0,name=userdata1.parquet,size=0]; will route to failure: org.apache.nifi.parquet.stream.NifiParquetInputFile@17badbbc is not a Parquet file (too small length: 0)
```
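The "too small length: 0" part of this error comes from the Parquet reader's footer check. A Parquet file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes before the trailing magic hold the footer length as a little-endian 32-bit integer, so anything shorter than 12 bytes cannot be Parquet. Here the FlowFile reports `size=0`. The stdlib-only sketch below is a rough stand-in for that framing check (it does not parse the footer and is not the code NiFi runs); `check_parquet_framing` is a hypothetical name.

```python
import struct

MAGIC = b"PAR1"
# Smallest possible Parquet framing: leading magic + 4-byte footer length + trailing magic.
MIN_LENGTH = len(MAGIC) + 4 + len(MAGIC)


def check_parquet_framing(data: bytes) -> int:
    """Return the footer (metadata) length if `data` is framed like a Parquet
    file; raise ValueError otherwise. Only the framing is checked."""
    if len(data) < MIN_LENGTH:
        raise ValueError(f"not a Parquet file (too small length: {len(data)})")
    if data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file (missing PAR1 magic bytes)")
    # Footer length: little-endian signed 32-bit int just before the trailing magic.
    (footer_len,) = struct.unpack("<i", data[-8:-4])
    if footer_len < 0 or footer_len > len(data) - MIN_LENGTH:
        raise ValueError(f"corrupt Parquet footer length: {footer_len}")
    return footer_len


if __name__ == "__main__":
    try:
        check_parquet_framing(b"")  # an empty FlowFile, as in the error above
    except ValueError as err:
        print(err)  # not a Parquet file (too small length: 0)
```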

Below are my ConvertRecord settings.

To read the file from one location, convert it to CSV, and transfer it to another location, I have built the flow ListFile --> ConvertRecord --> FetchFile --> PutFile. Can you please check whether the settings above and the flow are correct for my requirement?
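In NiFi, ListFile emits FlowFiles that carry only file metadata as attributes (such as `filename` and `absolute.path`) and have empty content; FetchFile is the processor that reads the file's bytes into the FlowFile content. With ConvertRecord placed before FetchFile, the reader receives an empty FlowFile, which matches `size=0` in the error above, so the usual order is ListFile --> FetchFile --> ConvertRecord --> PutFile. The toy Python model below (not NiFi code; every function name is hypothetical and the conversion itself is omitted) illustrates the difference.

```python
import tempfile
from dataclasses import dataclass
from pathlib import Path


@dataclass
class FlowFile:
    attributes: dict[str, str]
    content: bytes = b""


def list_file(directory: Path) -> list[FlowFile]:
    # Toy ListFile: one FlowFile per file, metadata attributes only, empty content.
    return [FlowFile({"filename": p.name, "absolute.path": str(p.parent)})
            for p in sorted(directory.iterdir()) if p.is_file()]


def fetch_file(ff: FlowFile) -> FlowFile:
    # Toy FetchFile: read the listed file's bytes into the FlowFile content.
    path = Path(ff.attributes["absolute.path"]) / ff.attributes["filename"]
    return FlowFile(dict(ff.attributes), path.read_bytes())


def convert_record(ff: FlowFile) -> FlowFile:
    # Toy ConvertRecord: only the reader's minimum-length check is modelled.
    if len(ff.content) < 12:
        raise ValueError(f"not a Parquet file (too small length: {len(ff.content)})")
    return ff  # real Parquet-to-CSV conversion omitted in this sketch


def demo() -> tuple[str, int]:
    with tempfile.TemporaryDirectory() as d:
        # Dummy 16-byte file; only its size matters for the toy check.
        Path(d, "userdata1.parquet").write_bytes(b"PAR1" + bytes(8) + b"PAR1")
        listed = list_file(Path(d))[0]
        try:
            convert_record(listed)  # wrong order: ListFile --> ConvertRecord
            wrong = "ok"
        except ValueError as err:
            wrong = str(err)
        right = convert_record(fetch_file(listed))  # ListFile --> FetchFile --> ConvertRecord
        return wrong, len(right.content)


if __name__ == "__main__":
    print(demo())  # ('not a Parquet file (too small length: 0)', 16)
```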

Created 09-11-2020 12:24 PM