Support Questions

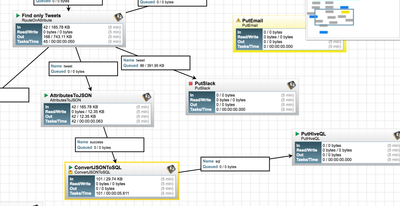

ConvertJSONtoSQL in Apache NiFi for Sending to PutHiveQL

Labels: Apache Hive, Apache NiFi

Created on 07-20-2016 03:11 PM - edited 08-19-2019 01:30 AM

Is there anything special required to get ConvertJSONToSQL to work with PutHiveQL?

Hive table:

```sql
create table twitter(
  id int,
  handle string,
  hashtags string,
  msg string,
  time string,
  user_name string,
  tweet_id string,
  unixtime string,
  uuid string
) stored as orc
tblproperties ("orc.compress"="ZLIB");
```

The data is a pared-down tweet:

```json
{ "user_name" : "Tweet Person", "time" : "Wed Jul 20 15:09:42 +0000 2016", "unixtime" : "1469027382664", "handle" : "SomeTweeter", "tweet_id" : "755781737674932224", "hashtags" : "", "msg" : "RT some stuff" }
```

Created 07-30-2016 08:12 PM

Not optimal, but this is a nice workaround:

Use a ReplaceText processor whose replacement value is:

```sql
insert into twitter values (${tweet_id}, '${handle:urlEncode()}', '${hashtags:urlEncode()}', '${msg:urlEncode()}', '${time}', '${user_name:urlEncode()}', '${tweet_id}', '${unixtime}', '${uuid}')
```

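Those `${...}` references are FlowFile attributes, so the tweet fields need to be extracted into attributes earlier in the flow (for example with EvaluateJsonPath). Note that the `id int` column receives `${tweet_id}`, and tweet IDs such as 755781737674932224 exceed the `int` range, so declaring `id` as `bigint` would likely be safer.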

I URL-encode the string values because of quotes and other special characters. I would prefer a prepared statement, a custom processor, or a Groovy script, but this works.
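To see what the `urlEncode()` calls buy you, here is a minimal Python sketch that mimics the ReplaceText step outside NiFi. It assumes NiFi's `urlEncode()` behaves like Java's `java.net.URLEncoder` with UTF-8, which Python's `urllib.parse.quote_plus` only approximates. The helper names `nifi_like_url_encode` and `build_insert`, the sample message containing an apostrophe, and the all-zero UUID are made up for illustration.

```python
from urllib.parse import quote_plus


def nifi_like_url_encode(value: str) -> str:
    """Approximate NiFi's urlEncode(): spaces become '+', quotes become %27."""
    # quote_plus with safe="" encodes everything except letters, digits and "_.-~".
    # Java's URLEncoder differs slightly: it leaves "*" alone but encodes "~".
    return quote_plus(value, safe="")


def build_insert(attrs: dict) -> str:
    """Build the same INSERT ... VALUES statement as the ReplaceText workaround."""
    enc = nifi_like_url_encode
    values = [
        attrs["tweet_id"],                # id: unquoted, as in the workaround
        f"'{enc(attrs['handle'])}'",
        f"'{enc(attrs['hashtags'])}'",
        f"'{enc(attrs['msg'])}'",
        f"'{attrs['time']}'",             # not encoded in the workaround
        f"'{enc(attrs['user_name'])}'",
        f"'{attrs['tweet_id']}'",
        f"'{attrs['unixtime']}'",
        f"'{attrs['uuid']}'",
    ]
    return "insert into twitter values (" + ", ".join(values) + ")"


# Hypothetical FlowFile attributes; the apostrophe in msg would break an unescaped literal.
sample = {
    "tweet_id": "755781737674932224",
    "handle": "SomeTweeter",
    "hashtags": "",
    "msg": "RT it's some stuff",
    "time": "Wed Jul 20 15:09:42 +0000 2016",
    "user_name": "Tweet Person",
    "unixtime": "1469027382664",
    "uuid": "00000000-0000-0000-0000-000000000000",
}
print(build_insert(sample))
```

The trade-off is that the values land in Hive URL-encoded (for example `Tweet+Person`), so anything reading the table has to decode them again.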

Created 08-11-2016 12:22 PM

I used this method, but it is very slow. How about yours?

Created 08-11-2016 12:32 PM

It wasn't slow for me. I will try it in NiFi 1.0.

Created 08-11-2016 01:37 PM

It took me a full day to insert 7,000 rows into Hive, and I have more than 800 million rows.
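A quick back-of-the-envelope check shows why one INSERT per FlowFile cannot keep up with this volume, assuming the reported rate of 7,000 rows per day stays constant. The function name `days_needed` is just an illustrative helper.

```python
def days_needed(total_rows: int, rows_per_day: int) -> float:
    """Days required to load total_rows at a constant rows_per_day rate."""
    return total_rows / rows_per_day


days = days_needed(800_000_000, 7_000)
years = days / 365
print(f"{days:,.0f} days, about {years:,.0f} years")  # 114,286 days, about 313 years
```

At that rate the load would need roughly a 100,000-fold speedup to finish within days, which is why the replies below move to bulk or parallel approaches.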

Created 08-11-2016 01:42 PM

If you have that many rows, you need to go parallel and run on multiple nodes. You should probably trigger a Sqoop job or a Spark SQL job from NiFi and have a few nodes running at once.

Created 02-28-2017 05:13 AM

Store the data in HDFS as ORC files and then create a Hive table on top of them.

I loaded 600,000 rows this way on a 4 GB machine in a few minutes.
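The second step, creating a Hive table on top of ORC files already in HDFS, is a `CREATE EXTERNAL TABLE ... STORED AS ORC LOCATION ...` statement. The Python sketch below only generates that HiveQL so its shape is visible; the helper name, the table name `twitter_orc`, and the HDFS path `/data/twitter_orc` are hypothetical, and the column list must match the schema of the ORC files you actually write (for example with ConvertAvroToORC and PutHDFS, if your NiFi version includes them).

```python
def external_orc_table_ddl(table: str, columns: list, location: str) -> str:
    """Return HiveQL defining an external table over ORC files in `location`."""
    # Backticks protect column names such as `time` from keyword clashes.
    cols = ",\n  ".join(f"`{name}` {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS {table} (\n  {cols}\n)\n"
        f"STORED AS ORC\n"
        f"LOCATION '{location}'"
    )


# Columns taken from the question's table; table name and path are hypothetical.
tweet_columns = [
    ("handle", "string"), ("hashtags", "string"), ("msg", "string"),
    ("time", "string"), ("user_name", "string"), ("tweet_id", "string"),
    ("unixtime", "string"), ("uuid", "string"),
]
print(external_orc_table_ddl("twitter_orc", tweet_columns, "/data/twitter_orc"))
```

Because the table is external, dropping it later leaves the ORC files in place, and new files written to the same directory become visible to queries without another load step.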

Created 08-11-2016 02:11 PM

Thanks for your reply. Do you have a detailed example?

Created 08-11-2016 02:59 PM

Sqoop is just regular Sqoop; you call it from NiFi with the ExecuteProcess processor.

https://sqoop.apache.org/docs/1.4.2/SqoopUserGuide.html#_literal_sqoop_create_hive_table_literal

NiFi + Spark (connected via site-to-site, a command trigger, or Kafka):

https://community.hortonworks.com/articles/12708/nifi-feeding-data-to-spark-streaming.html

Created 06-14-2017 02:23 PM

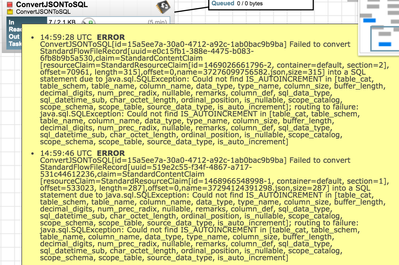

I confirmed this to be a bug in ConvertJSONToSQL and have written it up as NIFI-4071; please see the Jira for details.
