Support Questions

- Cloudera Community

- Support

- Support Questions

- Converting SQL record to JSON

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Converting SQL record to JSON

- Labels:

-

Apache NiFi

Created 02-20-2022 02:53 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I am trying to read records from a SQL source and put them in a s3 bucket.

Currently my flow is as follows:

QueryDBTableRecord (SQL record to CSV) >>> ConvertRecord (CSV to JSON conversion) >>> JOLT (Rarrange the json to suit) >>> PutS3Oject (Duh)

Firstly is this a valid route? It seems like there has been a lot of changes in the last few years and many tutorials are outdated and I think this is the current best practise.

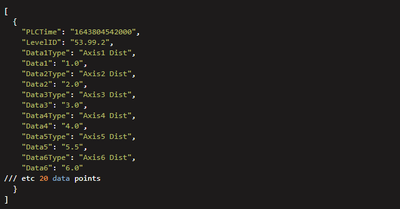

Secondly if this is correct, I'm struggling with the JOLT format. I want to transform this:

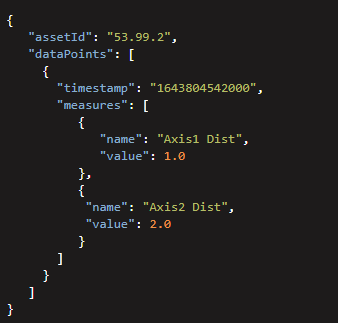

into this:

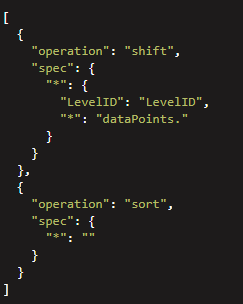

I've made a start with:

And this moves the two items up to the upper level as desired but I am struggling to make pairs from the data points instead of getting a flat array.

Created 02-20-2022 03:39 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The spec below does almost what you need. The only thing is that your data points are numbered starting from 1 and JSON array are zero-based, so when the Jolt transformation is applied the first element of the "measures" array is left null.

[

{

"operation": "shift",

"spec": {

"LevelID": "assetId",

"PLCTime": "datapoints[0].timestamp",

"Data*Type": "datapoints[0].measures[&(0,1)].name",

"Data*": "datapoints[0].measures[&(0,1)].value"

}

}

]

Cheers

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 02-20-2022 05:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've added a "remove" operation to the chain to solve the problem I mentioned in the last post, removing the "null" in the first position of the "measures" array:

[

{

"operation": "shift",

"spec": {

"LevelID": "assetId",

"PLCTime": "datapoints[0].timestamp",

"Data*Type": "datapoints[0].measures[&(0,1)].name",

"Data*": "datapoints[0].measures[&(0,1)].value"

}

},

{

"operation": "remove",

"spec": {

"datapoints": {

"*": {

"measures": {

"0": ""

}

}

}

}

}

]

This should give you the exact output you were looking for.

HTH,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.