Data Nodes displaying incorrect block report

Labels: Apache Hadoop, Apache YARN

Created 05-29-2018 07:39 PM

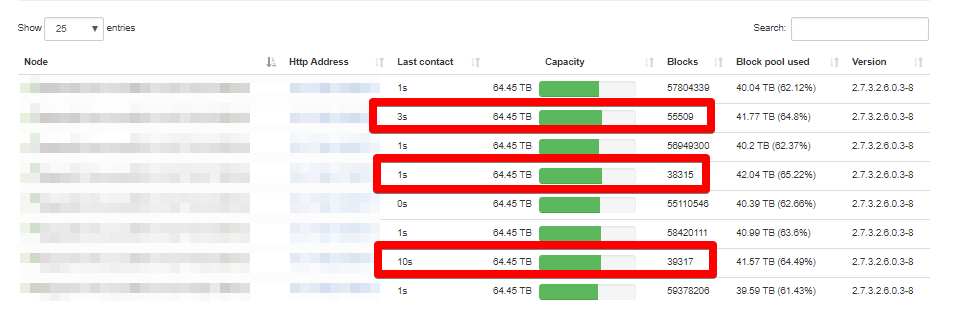

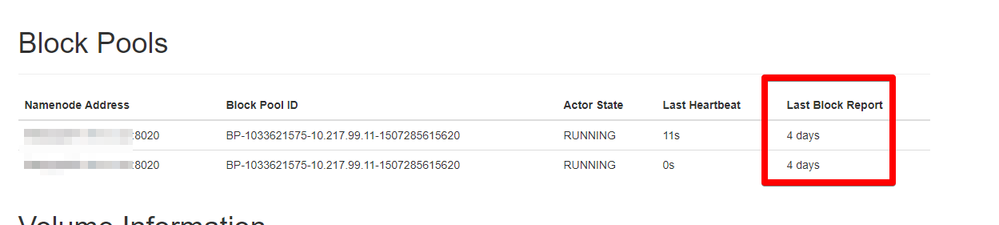

I am seeing a strange issue with 3 of the 8 DataNodes in our HDP 2.6.0 cluster. These 3 DataNodes are not reporting the correct number of blocks, and they are not sending block reports to the NameNode at regular intervals.

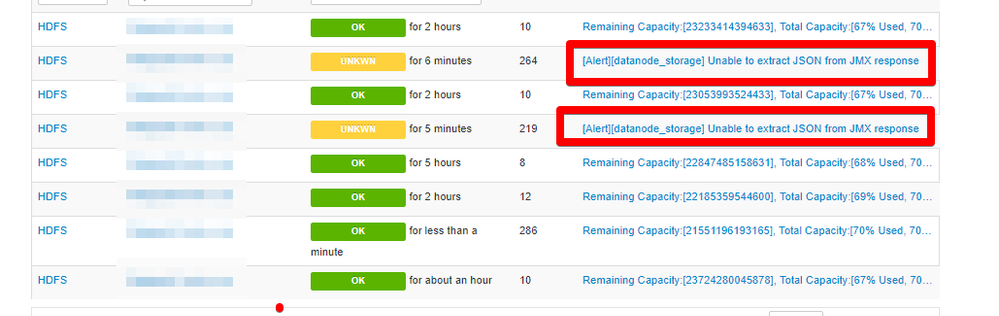

Ambari reports:

[Alert][datanode_storage] Unable to extract JSON from JMX response

Any suggestions as to what is wrong with our cluster?

Thanks in advance for your assistance.

Created 05-29-2018 09:50 PM

This JMX alert typically means the DataNode was not accessible, for one of three reasons:

- Network Issue

- DataNode is down

- Excessively long Garbage Collection
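To narrow down which of the three cases applies, it can help to query the DataNode's `/jmx` endpoint by hand from the Ambari server or NameNode host. The following is a minimal sketch, not Ambari's own code: the function names are made up for illustration, and port 50075 is assumed to be the DataNode HTTP port (the Hadoop 2.x default for `dfs.datanode.http.address`); adjust it if your cluster uses a different port or HTTPS.

```python
import json
import socket
import urllib.error
import urllib.request


def parse_jmx_beans(body):
    """Return the list under the top-level "beans" key, or None if the body is not usable JSON."""
    try:
        doc = json.loads(body)
    except (TypeError, ValueError):
        return None
    beans = doc.get("beans") if isinstance(doc, dict) else None
    return beans if isinstance(beans, list) else None


def probe_datanode_jmx(host, port=50075, timeout=10):
    """Fetch http://host:port/jmx and report 'ok', 'no-json', 'timeout' or 'unreachable'."""
    # Assumption: plain HTTP on the default DataNode web port.
    url = "http://{0}:{1}/jmx".format(host, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except socket.timeout:
        return "timeout"
    except urllib.error.URLError as err:
        if isinstance(err.reason, socket.timeout):
            return "timeout"
        return "unreachable"
    except OSError:
        return "unreachable"
    # An empty or missing bean list means no metrics could be extracted.
    return "ok" if parse_jmx_beans(body) else "no-json"
```

As a rough guide, `unreachable` points toward a network problem or a DataNode that is down, while `timeout` on a running DataNode is more consistent with a long garbage collection pause or a slow network path.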

This message comes from the script "/usr/lib/python2.6/site-packages/ambari_agent/alerts/metric_alert.py", and the logic is as follows:

    if isinstance(self.metric_info, JmxMetric):
      jmx_property_values, http_code = self._load_jmx(alert_uri.is_ssl_enabled, host, port, self.metric_info)
      if not jmx_property_values and http_code in [200, 307]:
        collect_result = self.RESULT_UNKNOWN
        value_list.append('HTTP {0} response (metrics unavailable)'.format(str(http_code)))
      elif not jmx_property_values and http_code not in [200, 307]:
        raise Exception("[Alert][{0}] Unable to extract JSON from JMX response".format(self.get_name()))
      else:
        value_list.extend(jmx_property_values)
        check_value = self.metric_info.calculate(value_list)
        value_list.append(check_value)

Network issue

MTU (Maximum Transmission Unit) is a TCP/IP networking setting in Linux. It is the size (in bytes) of the largest datagram that a given layer of a communications protocol can pass at a time. It should be identical on all the nodes. MTU is set in /etc/sysconfig/network-scripts/ifcfg-ethX.

You can see the current MTU setting on Linux with netstat:

$ netstat -i

Check the second column (MTU), or use:

$ ip link list
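Since the point of the check is that the MTU matches on every node, a small script can do the comparison once the values are collected. This is only a sketch: the helper names and the host names in the usage note are hypothetical, and how you gather the values from each node (ssh, a config management tool, and so on) is left open. On Linux the local value can be read from sysfs.

```python
from collections import Counter
from pathlib import Path


def read_local_mtu(interface):
    """Read the MTU of a local interface from sysfs (Linux only)."""
    return int(Path("/sys/class/net", interface, "mtu").read_text().strip())


def find_mtu_mismatches(mtu_by_host):
    """Return {host: mtu} for hosts whose MTU differs from the most common value."""
    if not mtu_by_host:
        return {}
    # Treat the value shared by most hosts as the expected cluster-wide MTU.
    expected, _ = Counter(mtu_by_host.values()).most_common(1)[0]
    return {host: mtu for host, mtu in mtu_by_host.items() if mtu != expected}
```

For example, `find_mtu_mismatches({"dn1": 1500, "dn2": 1500, "dn3": 9000})` flags `dn3` as the odd one out.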

- Check the hosts file (/etc/hosts) on the failing nodes.

- Check whether the DNS server is having problems with name resolution.
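The two checks above can be approximated with a forward and reverse lookup run on each failing node. This is a rough sketch using only the Python standard library; `check_name_resolution` is a made-up helper name, and the loopback test is included on the assumption that a hostname mapped to a 127.x address in /etc/hosts is a problem on a multi-node cluster.

```python
import socket


def check_name_resolution(hostname, resolve=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Resolve hostname to an IP and back; return a list of problems (empty if consistent)."""
    problems = []
    try:
        ip = resolve(hostname)
    except OSError as err:
        return ["forward lookup of {0} failed: {1}".format(hostname, err)]
    if ip.startswith("127."):
        problems.append("{0} resolves to loopback address {1}".format(hostname, ip))
    try:
        primary, aliases, _ = reverse(ip)
    except OSError as err:
        problems.append("reverse lookup of {0} failed: {1}".format(ip, err))
        return problems
    # The name we started from should appear among the names the IP maps back to.
    names = {primary.lower()} | {alias.lower() for alias in aliases}
    if hostname.lower() not in names:
        problems.append("{0} maps back to {1}, not {2}".format(ip, primary, hostname))
    return problems
```

Calling `check_name_resolution(socket.getfqdn())` on a DataNode should return an empty list when /etc/hosts and DNS agree. The `resolve` and `reverse` parameters exist only so the function can be exercised without a live resolver.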

Run TestDFSIO performance tests

yarn jar /usr/hdp/2.x.x.x.x/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-*tests.jar TestDFSIO -write -nrFiles 100 -fileSize 100

TestDFSIO Read Test

hadoop jar

Iperf is a widely used tool for network performance measurement and tuning.

See Typical HDP Cluster Network Configuration Best Practices

DataNode is down

Restart the DataNode using Ambari or manually.

Garbage Collection

Running the GCViewer

Enable GC logging for Datanode service

- Open hadoop-env.sh and look for the following line

export HADOOP_DATANODE_OPTS="-Dcom.sun.management.jmxremote -Xms2048m -Xmx2048m -Dhadoop.security.logger=ERROR,DRFAS $HADOOP_DATANODE_OPTS"

- Insert the following into the HADOOP_DATANODE_OPTS parameter

-verbose:gc -XX:+PrintGCDetails -Xloggc:${HADOOP_LOG_DIR}/hadoop-hdfs-datanode-`date +'%Y%m%d%H%M'`.gclog -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=20

- After adding the GC log parameters, HADOOP_DATANODE_OPTS should look like this

export HADOOP_DATANODE_OPTS="-Dcom.sun.management.jmxremote -Xms2048m -Xmx2048m -Dhadoop.security.logger=ERROR,DRFAS -verbose:gc -XX:+PrintGCDetails -Xloggc:${HADOOP_LOG_DIR}/hadoop-hdfs-datanode-`date +'%Y%m%d%H%M'`.gclog -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=20 $HADOOP_DATANODE_OPTS"

The log should give you detailed information.
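Once GC logging is enabled, a quick way to spot excessively long collections is to scan the log for events with a large wall-clock time. The sketch below assumes the JDK 8 style output produced by -XX:+PrintGCDetails, where each event carries a `[Times: user=... sys=..., real=... secs]` section; the function name and the 5 second default threshold are arbitrary choices for illustration, and GCViewer remains the better tool for a full analysis.

```python
import re

# Matches the wall-clock part of "[Times: user=0.05 sys=0.00, real=0.01 secs]".
REAL_TIME = re.compile(r"real=(\d+(?:\.\d+)?) secs")


def find_long_pauses(lines, threshold_secs=5.0):
    """Yield (line_number, seconds, line) for GC events whose real time exceeds the threshold."""
    for number, line in enumerate(lines, start=1):
        match = REAL_TIME.search(line)
        if match and float(match.group(1)) > threshold_secs:
            yield number, float(match.group(1)), line.rstrip("\n")
```

To use it, open one of the generated .gclog files and pass the file object to `find_long_pauses`. Pauses of many seconds on the three affected DataNodes would be consistent with the missed block reports and failed JMX checks.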

Hope that helps

Created 06-06-2018 01:29 PM

When Hadoop creates a new block, it places the first replica on the local node, the second on a node in a different rack, and the third on a different node in the same rack as the second. During re-replication, if only one replica exists, the second is placed on a different rack. If two replicas exist and both are in the same rack, the third is placed on a different rack.
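The placement rule for a new block can be sketched in a few lines. This is a simplified illustration, not HDFS code: it assumes the default policy in which the first replica goes to the writer's node, the second to a node on another rack, and the third to a different node on the second replica's rack. The function name and the node and rack names are hypothetical, and ties are broken alphabetically so the result is predictable, whereas HDFS chooses randomly and also takes node load and free space into account.

```python
def place_replicas(writer, rack_of):
    """Pick up to three DataNodes for a new block.

    writer  -- name of the DataNode the client writes from
    rack_of -- dict mapping every DataNode name to its rack
    """
    def pick(candidates):
        # Deterministic stand-in for the random choice HDFS makes.
        return min(candidates) if candidates else None

    chosen = [writer]
    others = set(rack_of) - {writer}

    # Second replica: a node on a rack other than the writer's.
    second = pick({n for n in others if rack_of[n] != rack_of[writer]})
    if second is None:
        # Single-rack cluster: fall back to any other node.
        second = pick(others)
    if second is None:
        return chosen
    chosen.append(second)
    others.discard(second)

    # Third replica: a different node on the same rack as the second.
    third = pick({n for n in others if rack_of[n] == rack_of[second]})
    if third is None:
        third = pick(others)
    if third is not None:
        chosen.append(third)
    return chosen
```

With `{"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}` and `dn1` as the writer, the sketch returns `["dn1", "dn3", "dn4"]`. When every node sits in one default rack, the rack checks never match and placement degrades to "any other node".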

The main purposes of rack awareness are to:

- Improve data reliability and availability.

- Improve cluster performance.

- Prevent data loss if an entire rack fails.

- Make better use of network bandwidth.

- Keep the bulk data flow in-rack when possible.

If your production cluster and this problematic cluster run the same Ambari/HDP version, then you can't call it a bug; it is a problem specific to this cluster.

I would still insist that you enable rack awareness and monitor the cluster for 24 hours to see whether the alerts change. Have you tried running the cluster balancing utility?

$ hdfs balancer

HTH
