DataNode down: java.net.BindException: Address already in use, and error processing unknown operation src: /127.0.0.1:34584 dst: /127.0.0.1:50010

Labels: Apache Hadoop

Created 08-08-2016 03:56 AM

Hi,

I am using HDP 2.3.0.

HDFS log:

ERROR datanode.DataNode (DataXceiver.java:run(278)) - hdp :50010:DataXceiver error processing unknown operation src: /127.0.0.1:34584 dst: /127.0.0.1:50010
java.io.EOFException
    at java.io.DataInputStream.readShort(DataInputStream.java:315)
    at org.apache.hadoop.hdfs.protocol.datatransfer.Receiver.readOp(Receiver.java:58)
    at org.apache.hadoop.hdfs.server.datanode.DataXceiver.run(DataXceiver.java:227)
    at java.lang.Thread.run(Thread.java:745)

2016-08-08 00:26:21,672 ERROR datanode.DataNode (LogAdapter.java:error(69)) - RECEIVED SIGNAL 15: SIGTERM

2016-08-08 00:26:50,714 INFO datanode.DataNode (LogAdapter.java:info(45)) - STARTUP_MSG:

java.net.BindException: Problem binding to [0.0.0.0:50010] java.net.BindException: Address already in use; For more details see: http://wiki.apache.org/hadoop/BindException
    at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
    at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
    .......
Caused by: java.net.BindException: Address already in use
    at sun.nio.ch.Net.bind0(Native Method)
    at sun.nio.ch.Net.bind(Net.java:437)
    .....

2016-08-08 00:26:51,970 INFO util.ExitUtil (ExitUtil.java:terminate(124)) - Exiting with status 1

2016-08-08 00:26:51,974 INFO datanode.DataNode (LogAdapter.java:info(45)) - SHUTDOWN_MSG:

Checks I ran:

1) ps -ef | grep datanode

Result: no DataNode process is running.

2) nc -l 50010

Result: the port is already in use.

3) netstat -nap | grep 50010

Result: many connections, but all of them are in CLOSE_WAIT, and I am not able to find the process ID.

4) hdfs dfsadmin -report

Dead datanodes (1):
Name: 10.0.0.14:50010 (hdp-n4)
Hostname: hdp-n4
Decommission Status : Normal
Configured Capacity: 0 (0 B)
DFS Used: 0 (0 B)
Non DFS Used: 0 (0 B)
DFS Remaining: 0 (0 B)
DFS Used%: 100.00%
DFS Remaining%: 0.00%
Configured Cache Capacity: 0 (0 B)
Cache Used: 0 (0 B)
Cache Remaining: 0 (0 B)
Cache Used%: 100.00%
Cache Remaining%: 0.00%
Xceivers: 0
Last contact: Fri Aug 05 20:51:37 UTC 2016

Created 08-08-2016 04:17 AM

So you have one dead node where port 50010 is already taken by some process, which is why the DataNode is not starting. It could be a case of the DataNode process not shutting down cleanly. You can get the process ID from netstat and see if kill -9 clears that port.
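To make the netstat step easier to read, here is a minimal Python sketch that summarizes which TCP states and owners appear for a given local port. It assumes the usual Linux `netstat -nap` column layout (proto, recv-q, send-q, local, foreign, state, PID/program). The function name `summarize_port` and the `SAMPLE` text are invented for illustration and are not output from the original poster's machine.

```python
from collections import Counter


def summarize_port(netstat_output: str, port: int) -> Counter:
    """Count (state, owner) pairs for TCP sockets whose local port matches."""
    summary = Counter()
    for line in netstat_output.splitlines():
        fields = line.split()
        # TCP rows: proto recv-q send-q local foreign state [pid/program]
        if len(fields) < 6 or not fields[0].startswith("tcp"):
            continue
        local_port = fields[3].rsplit(":", 1)[-1]
        if local_port != str(port):
            continue
        # netstat prints "-" when it cannot identify the owning process
        owner = fields[6] if len(fields) > 6 else "-"
        summary[(fields[5], owner)] += 1
    return summary


# Illustrative sample only (hypothetical rows in netstat -nap layout).
SAMPLE = """\
tcp        0      0 0.0.0.0:50010      0.0.0.0:*          LISTEN      -
tcp        1      0 127.0.0.1:50010    127.0.0.1:34584    CLOSE_WAIT  -
tcp        1      0 127.0.0.1:50010    127.0.0.1:34590    CLOSE_WAIT  -
tcp        0      0 0.0.0.0:8020       0.0.0.0:*          LISTEN      2101/java
"""

if __name__ == "__main__":
    for (state, owner), count in summarize_port(SAMPLE, 50010).items():
        print(f"{state:<12} {owner:<12} {count}")
```

On a real host you would pass in the text captured from `netstat -nap` run as root. An owner of "-" means netstat could not identify a process, so there is no PID to hand to kill.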

Created on 08-08-2016 05:03 AM - edited 08-18-2019 04:01 AM

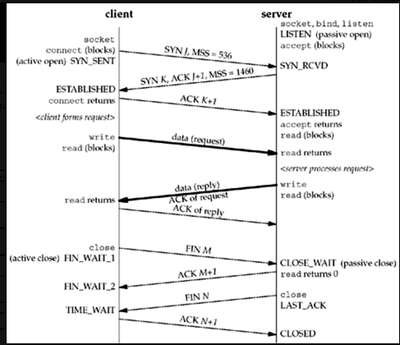

looks like your Datanode is not at all responding. Close wait connections indicates that client side has initiated connection close(FIN M signal) however Datanode is still trying to close it or its unresponsive hence those will stay in close_wait state for infinite time(never initiate ACK M+1).

Please check carefully if Datanode process has become zombie ( if netstat shows '-' instead of process ID )

Below is the sample diagram for how 4 way TCP close works( image reference - http://jason4zhu.blogspot.com 😞

@Arpit Agarwal - Please feel free to correct me if anything is missed.
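The CLOSE_WAIT behaviour described in this reply can be reproduced on loopback with a small Python sketch. It is only an illustration under simple assumptions (one client, one server, same host); `half_closed_demo` is a hypothetical name, and nothing here touches a DataNode.

```python
import socket


def half_closed_demo() -> bytes:
    """Return what the server side reads after the client closes its end."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]

    client = socket.create_connection(("127.0.0.1", port))
    server_side, _ = listener.accept()
    server_side.settimeout(5)        # avoid blocking forever if something is off

    client.close()                   # client sends its FIN
    data = server_side.recv(1024)    # an empty read means the peer's FIN arrived

    # Between the empty read above and the close() below, the kernel keeps the
    # server-side socket in CLOSE_WAIT. A hung process never reaches close().
    server_side.close()              # server sends its own FIN
    listener.close()
    return data


if __name__ == "__main__":
    print(repr(half_closed_demo()))
```

If the `server_side.close()` call were never reached, as with a hung process, the socket would stay in CLOSE_WAIT for as long as the process kept the descriptor open.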

Created 08-08-2016 07:43 PM

Thanks for the heads up @Kuldeep Kulkarni. It could be a couple of things:

- The ephemeral port was in use by another process that is now gone.

- There is a process using the port but it is running with different user credentials. @vijay kadel were you running the ps/netstat/lsof commands as the root user?
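Both possibilities above can be checked quickly. The following Python sketch tries to bind the port the same way a server would, and reports whether the current user is root, since netstat generally only shows PID/program information for sockets owned by other users when run as root. The helper names `can_bind` and `running_as_root` are hypothetical, and `os.geteuid` is available on Unix only.

```python
import errno
import os
import socket


def can_bind(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if the port can be bound, False on 'Address already in use'."""
    probe = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        probe.bind((host, port))
        return True
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return False
        raise  # some other failure, e.g. permission denied
    finally:
        probe.close()


def running_as_root() -> bool:
    """True when the effective user ID is 0 (Unix only)."""
    return os.geteuid() == 0


if __name__ == "__main__":
    print("running as root:", running_as_root())
    print("port 50010 can be bound:", can_bind(50010))
```

If `can_bind(50010)` returns True while the DataNode is stopped, whatever held the port is gone and a restart should succeed. If it returns False and netstat shows no owner, rerunning the checks as root is the next step.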

Created 03-27-2017 09:11 AM

I ran into this issue by accident, and I found that no other process was listening on that IP and port.

But in the output of netstat -anp | grep 50010, I found a TIME_WAIT record.

I don't know why.

In the end, I had to remove the DataNode from the host.

Created 08-08-2016 05:31 AM

@vijay kadel please find the knowledge article related to this issue: https://hortonworks-community.force.com/customers/articles/en_US/Issue/DataXceiver-error-processing-...

Created 07-25-2017 03:41 AM

I ran into the same issue, but it was fixed automatically after restarting my DataNode server (rebooting the physical Linux server).