Data03 node is decommissioned; I want it back in working condition.

Created on 06-02-2022 11:43 PM - edited 09-16-2022 07:46 AM

Hi Team,

For the last few days the data03 node was not working, so we moved it to the decommissioned state and restarted all our services.

Now I need to recommission it and start the services on it as well.

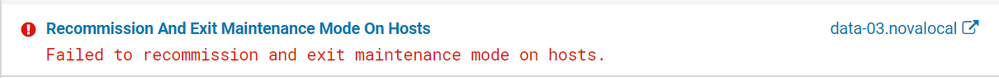

I selected the host and, under Actions, clicked End Maintenance, but it is failing.

Please help me with what I should do to resolve this.

Created 06-07-2022 12:51 AM

Hello,

Try reinstalling the agent package on this host and let me know if that solves the issue:

- Make a copy of your /etc/cloudera-scm-agent/config.ini.

- Uninstall the cloudera-manager-agent package

- Install the cloudera-manager-agent package

- Copy the /etc/cloudera-scm-agent/config.ini back

- Start the cloudera-scm-agent service
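The steps above can be sketched as a small script. This is only a sketch, assuming a yum-based host with systemd (as the el7 package names in this thread suggest); the backup path and the `APPLY` switch are hypothetical names chosen for illustration. By default it only prints the commands; set `APPLY=1` and run it as root on the affected host to execute them.

```shell
#!/usr/bin/env bash
# Sketch of the agent reinstall steps listed above.
# Dry run by default: commands are printed, not executed.

CONF=/etc/cloudera-scm-agent/config.ini
BACKUP="${BACKUP:-/root/config.ini.bak}"   # hypothetical backup location

# Run the command only when APPLY=1, otherwise just print it.
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf 'would run:'
    printf ' %s' "$@"
    printf '\n'
  fi
}

# Each step runs only if the previous one succeeded.
reinstall_agent() {
  run cp -p "$CONF" "$BACKUP" &&
  run yum -y remove cloudera-manager-agent &&
  run yum -y install cloudera-manager-agent &&
  run cp -p "$BACKUP" "$CONF" &&
  run systemctl start cloudera-scm-agent
}

reinstall_agent
```

Chaining the steps with `&&` means the package is not removed if the backup copy fails.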

Created 06-07-2022 10:09 AM

Hi @rki_ ,

Thanks for helping out. I followed the steps mentioned above, and the data03 node is now in the commissioned state and its roles are started.

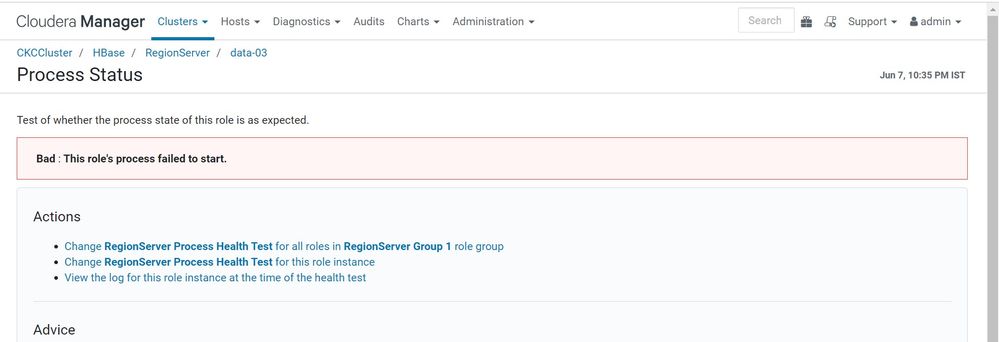

But I am still getting an error for HBase (please refer to the attached screenshot).

Could you please help me with your inputs on this?

Regards,

Jessica Jindal

Created 06-07-2022 11:11 AM

Hi @Jessica_cisco ,

As per the screenshot, the Region Server on this host has failed to start. You can log in to that host and confirm whether a Region Server process is running.

# ps -ef | grep regionserver

If you don't see any process, try restarting this Region Server from CM; if it still fails, please check the stderr and the role log of this Region Server for more clues.
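One caveat with the check above is that `grep regionserver` can match its own command line. A minimal sketch of a filter that avoids this is shown below; the function name `find_regionserver` is made up for illustration.

```shell
# Filters `ps -ef` output on stdin for Region Server processes.
# The [r] bracket expression still matches "regionserver", but the grep
# process itself shows up as "grep [r]egionserver", which does not match.
find_regionserver() {
  grep '[r]egionserver'
}

# On the host:
#   ps -ef | find_regionserver
```

The function exits non-zero when no matching process line is found, so it can also be used in an `if` test.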

Created 06-08-2022 04:56 AM

Hi @rki_ ,

The Region Server issue is resolved. It was caused by this host's time not being in sync with the other nodes.

After syncing the time and restarting the Region Server, it was resolved.
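For anyone hitting the same problem, a rough way to spot this kind of clock skew is to compare epoch seconds between two hosts. The sketch below assumes the HBase default of 30000 ms for `hbase.master.maxclockskew` has not been changed; the function name `skew_ok` and the `MAX_SKEW_MS` variable are hypothetical.

```shell
# Assumed limit: the default hbase.master.maxclockskew, in milliseconds.
MAX_SKEW_MS="${MAX_SKEW_MS:-30000}"

# skew_ok LOCAL_EPOCH REMOTE_EPOCH
# Succeeds if the two clocks (seconds since the epoch) differ by no more
# than MAX_SKEW_MS.
skew_ok() {
  local diff=$(( $1 - $2 ))
  [ "$diff" -lt 0 ] && diff=$(( -diff ))
  [ $(( diff * 1000 )) -le "$MAX_SKEW_MS" ]
}

# Example use from data03, comparing against another node over ssh:
#   if skew_ok "$(date +%s)" "$(ssh data-02.novalocal date +%s)"; then
#     echo "clocks within limit"
#   else
#     echo "clock skew too large"
#   fi
```

The comparison has one-second resolution and includes ssh latency, so it only catches large offsets; the NTP or chrony status on the host remains the authoritative check.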

Now, to get the process time, which will then be used in the Oozie workflows, I ran the commands below on the cm-hue node:

- kinit -kt /usr/local/etl/etl.keytab oozie/data-02.novalocal@CIM.IVSG.AUTH

- hdfs dfs -cat /user/oozie/oozie/res/config.properties| sed -nr '/processstart/ s/processstart=|\\//g p'

But it gives the following output:

2022-04-19T17:02+0530
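Note that the pipeline above only prints whatever `processstart` value is stored in the file; it does not compute the current time. A minimal local sketch of the same extraction is shown below, assuming the file stores the colon escaped as `\:` (Java properties style), which would explain why the original command strips backslashes. The function name `extract_processstart` is made up for illustration.

```shell
# Reads properties text on stdin and prints the processstart value,
# with backslash escapes removed.
extract_processstart() {
  sed -n -e '/^processstart=/ {' \
         -e 's/^processstart=//' \
         -e 's/\\//g' \
         -e 'p' \
         -e '}'
}

# Example with a stored value like the one in this thread:
#   printf '%s\n' 'processstart=2022-04-19T17\:02+0530' | extract_processstart
```

If the printed timestamp is old, it means nothing has rewritten the property since that date.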

That is the same day it stopped working.

My doubt is: shouldn't it give the current date and time?

If so, what should I check now? Are there any specific logs I should look at?

Regards,

Jessica Jindal

Created 06-08-2022 11:20 PM

Hi @rki_ ,

I created the ETL jobs.

But the workflows are getting killed continuously, with the email below:

The workflow 0000085-220601112151640-oozie-oozi-W is killed, error message: RuntimeException: couldn't retrieve HBase table (cim.Locations) info:

Failed after attempts=4, exceptions:

Thu Jun 09 11:41:14 IST 2022, RpcRetryingCaller{globalStartTime=1654755074760, pause=100, maxAttempts=4}, org.apache.hadoop.hbase.NotServingRegionException: org.apache.hadoop.hbase.NotServingRegionException: hbase:meta,,1 is not online on data-02.novalocal,16020,1652959331850

at org.apache.hadoop.hbase.regionserver.HRegionServer.getRegionByEncodedName(HRegionServer.java:3328)

at org.apach.

I also checked the Oozie YARN logs for this workflow (please refer to the attachment).

Please suggest a way out.

Regards,

Jessica Jindal

Created 06-09-2022 12:58 AM

Hi @Jessica_cisco,

The hbase:meta table (a system table) is not online, and thus the HBase Master has not come out of its initialisation phase. We need to assign the region for the hbase:meta table, as this table contains the mapping of which region is hosted on which Region Server. The workflow is failing because hbase:meta is not assigned/online.

To assign this region we need the hbck2 jar (obtained via a support ticket), so the best way is to open a Cloudera Support ticket. https://docs.cloudera.com/runtime/7.2.10/troubleshooting-hbase/topics/hbase_fix_issues_hbck.html

Otherwise (this doesn't work every time), you can try restarting the Region Server on data-02.novalocal, followed by a restart of the HBase Master, to see whether the Master is able to assign the meta table.

Regards,

Robin

Created on 06-09-2022 02:35 AM - edited 06-09-2022 03:14 AM

Hi @rki_ ,

Thanks for this. I tried restarting the Region Server and the Master, but that didn't work.

I will raise a support ticket then.

Regards,

Jessica Jindal

Created 06-07-2022 01:30 AM

Hello @Jessica_cisco ,

You can check whether cloudera-manager.repo is present on this host under /etc/yum.repos.d/. If not, copy this repo file from a working node.

If you run the command below on this host, it should show you the repo from which it will download the agent package.

# yum whatprovides cloudera-manager-agent

cloudera-manager-agent-7.6.1-24046616.el7.x86_64 : The Cloudera Manager Agent

Repo : @cloudera-manager

Once the above is confirmed, you can use the doc below for instructions.

Created 06-07-2022 02:06 AM

Hi @rki_ ,

I searched for cloudera-manager.repo on this host as well as on a working datanode, but it was not found anywhere.

Also, on the working node, I could see the following cloudera-manager-agent package when I ran this command: rpm -qa 'cloudera-manager-*'

cloudera-manager-agent-6.3.0-1281944.el7.x86_64
