Cloudera Community: Support Questions


DataNodes status not consistent

Labels: Apache Hadoop

Created on 11-29-2016 03:16 PM - edited 08-19-2019 02:53 AM


Hi All,

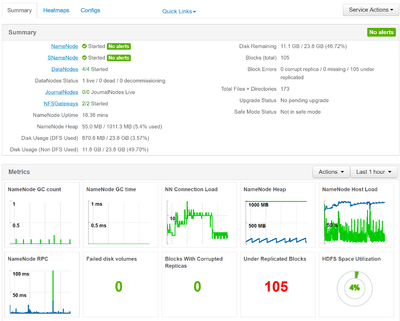

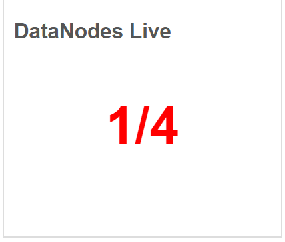

I have a cluster with 4 machines, each running a DataNode. The screenshots above show the Ambari status.

Ambari correctly shows 4/4 DataNodes, but only 1 appears to be live.

My questions are:

- Why is it not showing 4 live DataNodes?
- Is this also why blocks are not replicated (see "under replicated blocks")?
- Also, when running a Spark job, I get:

YarnSchedulerBackend$YarnSchedulerEndpoint: Container marked as failed: container_e07_1480428595380_0003_02_000003 on host: slave01.hortonworks.com. Exit status: -1000. Diagnostics: org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1459687468-127.0.1.1-1479480481481:blk_1073741831_1007 file=/hdp/apps/2.5.0.0-1245/spark/spark-hdp-assembly.jar
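The block pool ID in the error above follows the pattern `BP-<random>-<ip>-<timestamp>`, where the IP is the address the NameNode host reported when HDFS was formatted. Here it is `127.0.1.1`, a loopback address, which hints (but does not prove) that the NameNode's hostname resolved to loopback. A minimal Python sketch for pulling that IP out of a log message and flagging loopback addresses; `block_pool_ip` and `is_loopback` are hypothetical helper names, not part of any Hadoop tool:

```python
import ipaddress
import re
from typing import Optional

# Block pool IDs look like BP-<random>-<namenode-ip>-<creation-time-ms>.
BLOCK_POOL_RE = re.compile(r"BP-\d+-(\d{1,3}(?:\.\d{1,3}){3})-\d+")


def block_pool_ip(message: str) -> Optional[str]:
    """Return the IPv4 address embedded in the first block pool ID in `message`, if any."""
    match = BLOCK_POOL_RE.search(message)
    return match.group(1) if match else None


def is_loopback(ip: str) -> bool:
    """True for any address in 127.0.0.0/8 (or ::1 for IPv6)."""
    return ipaddress.ip_address(ip).is_loopback


if __name__ == "__main__":
    # Excerpt of the BlockMissingException message from the question.
    line = "Could not obtain block: BP-1459687468-127.0.1.1-1479480481481:blk_1073741831_1007"
    ip = block_pool_ip(line)
    print(ip, is_loopback(ip))  # prints: 127.0.1.1 True
```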

Could you please help me with the above issues?

Thanks

Created 12-01-2016 11:17 AM


Hi All,

I solved the issue by adding the following property in the "Custom hdfs-site" section:

`<property><name>dfs.namenode.rpc-bind-host</name><value>0.0.0.0</value></property>`
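`dfs.namenode.rpc-bind-host` overrides only the host part of `dfs.namenode.rpc-address` when the NameNode binds its RPC server, so `0.0.0.0` makes it listen on all interfaces instead of only the address its configured hostname resolves to. On an Ambari-managed cluster this belongs in the Ambari UI as done here, because Ambari regenerates `hdfs-site.xml`. For a hand-managed cluster, a sketch of setting the same property with Python's standard `xml.etree.ElementTree`, assuming the usual `<configuration>`/`<property>` layout; `set_property` is a hypothetical helper:

```python
import xml.etree.ElementTree as ET


def set_property(site_xml: str, name: str, value: str) -> str:
    """Set (or add) a <property> in a Hadoop *-site.xml document and return the new XML text."""
    root = ET.fromstring(site_xml)
    for prop in root.findall("property"):
        if prop.findtext("name") == name:
            # Property already present: overwrite (or create) its <value>.
            value_el = prop.find("value")
            if value_el is None:
                value_el = ET.SubElement(prop, "value")
            value_el.text = value
            break
    else:
        # Property not present: append a new <property> element.
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(set_property("<configuration></configuration>",
                       "dfs.namenode.rpc-bind-host", "0.0.0.0"))
```

The NameNode has to be restarted for a change to this property to take effect.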

I also changed the following in the "Advanced hdfs-site" section:

from nameMyServer:8020

to ipMyServer:8020
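Switching from a hostname to an IP removes the dependency on how `nameMyServer` resolves on each host. One possible cause, consistent with the `127.0.1.1` in the block pool ID but not confirmed in this thread, is an `/etc/hosts` entry mapping the NameNode's hostname to `127.0.1.1` (the Debian/Ubuntu default), so the NameNode listened only on loopback and only the co-located DataNode could reach it. A sketch for checking that from the NameNode host using the local resolver; `resolves_to_loopback` is a hypothetical helper:

```python
import ipaddress
import socket


def resolves_to_loopback(host: str) -> bool:
    """Return True if any address `host` resolves to on this machine is a loopback address.

    Uses the local resolver (typically including /etc/hosts).
    Raises socket.gaierror if the name cannot be resolved at all.
    """
    infos = socket.getaddrinfo(host, None)
    # info[4] is the sockaddr tuple; its first element is the address string.
    # Strip any IPv6 zone suffix such as "%eth0" before parsing.
    addrs = {info[4][0].split("%")[0] for info in infos}
    return any(ipaddress.ip_address(addr).is_loopback for addr in addrs)
```

For example, if `resolves_to_loopback("nameMyServer")` (with the real hostname substituted) returns `True` on the NameNode host, that would support this explanation.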

Regards

Alessandro

Created 12-01-2016 12:23 AM


Please check the NameNode (NN) UI to see whether all DataNodes (DNs) are listed as live there. If any servers are marked dead, we will need to see the DataNode logs on each of those nodes.
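Besides the NameNode web UI, the live/dead DataNode counts are reported by `hdfs dfsadmin -report` and exposed through the NameNode's JMX servlet as `NumLiveDataNodes` and `NumDeadDataNodes` on the `FSNamesystemState` bean. A sketch that reads them from JMX, assuming the Hadoop 2.x default NameNode HTTP port 50070 (check `dfs.namenode.http-address` on your cluster); the host name you pass in is up to you:

```python
import json
from typing import Tuple
from urllib.request import urlopen

# Query for the NameNode's FSNamesystemState MBean via the JMX JSON servlet.
JMX_QUERY = "/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState"


def datanode_counts(jmx_json: str) -> Tuple[int, int]:
    """Return (live, dead) DataNode counts from a FSNamesystemState JMX response body."""
    beans = json.loads(jmx_json).get("beans", [])
    if not beans:
        raise ValueError("FSNamesystemState bean not found in JMX response")
    state = beans[0]
    return int(state["NumLiveDataNodes"]), int(state["NumDeadDataNodes"])


def fetch_datanode_counts(namenode_http: str, timeout: float = 10.0) -> Tuple[int, int]:
    """Fetch counts from a base URL such as "http://<namenode-host>:50070"."""
    with urlopen(namenode_http.rstrip("/") + JMX_QUERY, timeout=timeout) as resp:
        return datanode_counts(resp.read().decode("utf-8"))
```

A live count lower than the number of DataNodes Ambari lists (1 of 4 in the question) identifies the hosts whose DataNode logs need to be checked.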
