I want to import data from Microsoft SQL Server into BigQuery using NiFi. I used the ExecuteSQL -> PutBigQueryBatch processors, and the data was successfully loaded into BigQuery in Avro (the default) format. However, the Avro schema shows the timestamp column as `{"name":"LastModifiedDate","type":["null",{"type":"long","logicalType":"timestamp-millis"}]}`, and this results in an INTEGER column in the BigQuery table.
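For context, `timestamp-millis` is an Avro logical type layered on a `long` that counts milliseconds since the Unix epoch (UTC), so the integers in the BigQuery column are that raw count. BigQuery load jobs interpret Avro logical types as the corresponding BigQuery type (e.g. TIMESTAMP) only when the corresponding load option (`useAvroLogicalTypes` in the REST API) is enabled; otherwise the underlying `long` becomes an INTEGER column. The short Python sketch below, using a hypothetical helper `timestamp_millis_to_datetime` and a made-up sample value, only shows how such a raw value maps back to a timestamp.

```python
from datetime import datetime, timedelta, timezone

# Avro's timestamp-millis logical type annotates a long holding the number of
# milliseconds since the Unix epoch (1970-01-01T00:00:00Z).
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def timestamp_millis_to_datetime(value):
    """Hypothetical helper: convert a raw timestamp-millis long (None for SQL NULL) to a UTC datetime."""
    if value is None:
        return None
    # timedelta avoids float rounding and works for pre-1970 (negative) values too.
    return EPOCH + timedelta(milliseconds=value)


if __name__ == "__main__":
    raw = 1546300800000  # made-up LastModifiedDate value as stored in the Avro file
    print(timestamp_millis_to_datetime(raw).isoformat())  # 2019-01-01T00:00:00+00:00
```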

I want to know whether the Avro column data type can be mapped to a user-defined data type that is then used when creating the BigQuery table (e.g. `LastModifiedDate TIMESTAMP`). I have seen a few suggestions to define a schema registry, but that would be specific to a single table. I am looking for a generic solution: a centralized repository that can be used for all tables with columns of this kind.
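One way to picture a table-independent mapping is a single lookup from Avro (logical) types to BigQuery column types, applied to whatever Avro schema ExecuteSQL emits for a given table. The Python sketch below is illustrative only: the names `AVRO_TO_BIGQUERY`, `bigquery_type` and `avro_to_bigquery_schema` are hypothetical, it assumes flat record schemas whose fields are primitives, logical types, or nullable unions of them, and how its output would be fed to PutBigQueryBatch is left open. It produces a list in BigQuery's JSON schema format (`name`, `type`, `mode`).

```python
import json

# Hypothetical central mapping, shared by every table, from Avro (logical)
# types to BigQuery column types. Logical types take precedence over the
# physical type they annotate (e.g. timestamp-millis over long).
AVRO_TO_BIGQUERY = {
    "timestamp-millis": "TIMESTAMP",
    "timestamp-micros": "TIMESTAMP",
    "date": "DATE",
    "time-millis": "TIME",
    "time-micros": "TIME",
    "string": "STRING",
    "int": "INTEGER",
    "long": "INTEGER",
    "float": "FLOAT",
    "double": "FLOAT",
    "boolean": "BOOLEAN",
    "bytes": "BYTES",
}


def bigquery_type(avro_type):
    """Return (BigQuery type, mode) for a single Avro field type."""
    mode = "REQUIRED"
    if isinstance(avro_type, list):
        # A union containing "null" is how Avro marks a nullable column.
        non_null = [t for t in avro_type if t != "null"]
        if len(non_null) != 1:
            raise ValueError(f"unsupported union: {avro_type}")
        mode = "NULLABLE" if "null" in avro_type else "REQUIRED"
        avro_type = non_null[0]
    if isinstance(avro_type, dict):
        # Use the logical type if it is mapped, otherwise fall back to the physical type.
        logical = avro_type.get("logicalType")
        key = logical if logical in AVRO_TO_BIGQUERY else avro_type["type"]
    else:
        key = avro_type
    if key not in AVRO_TO_BIGQUERY:
        raise ValueError(f"no BigQuery mapping for Avro type: {key}")
    return AVRO_TO_BIGQUERY[key], mode


def avro_to_bigquery_schema(avro_schema_json):
    """Translate an Avro record schema (JSON string) into a BigQuery schema list."""
    record = json.loads(avro_schema_json)
    fields = []
    for field in record["fields"]:
        bq_type, mode = bigquery_type(field["type"])
        fields.append({"name": field["name"], "type": bq_type, "mode": mode})
    return fields


if __name__ == "__main__":
    schema = json.dumps({
        "type": "record",
        "name": "example",
        "fields": [
            {"name": "LastModifiedDate",
             "type": ["null", {"type": "long", "logicalType": "timestamp-millis"}]},
        ],
    })
    print(json.dumps(avro_to_bigquery_schema(schema)))
```

Because the mapping lives in one dictionary rather than in per-table schemas, adding a rule (for example for another logical type) would apply to every table at once.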

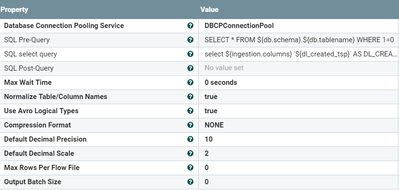

The ExecuteSQL processor configuration is as follows:

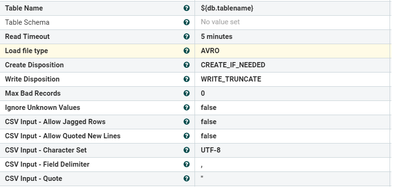

The PutBigQueryBatch processor configuration is as follows:

My question is very similar to this one: https://community.hortonworks.com/questions/202979/avro-schema-change-using-convertrecord-processor-... but it does not seem to have the answer I am looking for.