Docker sandbox-hdp.hortonworks.com:50070: Connection refused (Connection refused)

Labels: Hortonworks Data Platform (HDP)

Created on 12-20-2017 11:29 PM - edited 08-18-2019 02:49 AM

Hello,

I'm working through the tutorial using the Docker version of the sandbox. All was going well until I got to the "Explore Ambari" part. Some connections are being refused, yet Ambari is running fine, and I can access the sandbox through the terminal and stop/start Ambari. Please have a look at the picture below.

Created 12-20-2017 11:35 PM

It looks like the HDFS service check is failing in your case because the HDFS service is not healthy.

Please go to the Ambari UI --> HDFS --> "Service Actions", restart the HDFS services, and then check whether port 50070 has been opened:

    # netstat -tnlpa | grep 50070

(OR)

    # netstat -tnlpa | grep `cat /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid`
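For anyone who wants to script this check rather than read the netstat output by eye, here is a minimal sketch. The function name `port_is_listening` is made up for illustration; it only assumes the usual Linux netstat column layout (local address in column 4, state in column 6).

```shell
#!/bin/sh
# Hypothetical helper: reads netstat-style lines on stdin and succeeds
# only if some socket is in LISTEN state on the given local port.
port_is_listening() {
    awk -v port="$1" '
        # Column 4 is the local address (ip:port); column 6 is the state.
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit(found ? 0 : 1) }
    '
}
```

It could be used as `netstat -tnlpa | port_is_listening 50070 && echo "50070 is listening"`. Matching on the state column keeps ESTABLISHED connections that merely mention the port from counting as a hit.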

Created on 12-21-2017 12:01 AM - edited 08-18-2019 02:49 AM

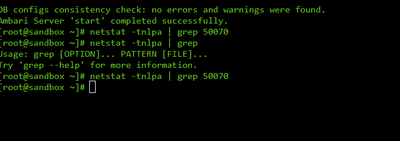

Hey Jay, thanks for the reply 🙂

Am I doing this correctly?

Created 12-21-2017 12:52 AM

Your netstat output shows that the NameNode is not running: nothing is listening on port 50070.

Please log in to the Ambari UI and navigate to:

HDFS --> "Service Actions" (drop-down) --> "Restart All"

Then check the NameNode logs for errors, and verify again whether port 50070 is open.

Once port 50070 is listening, you can try accessing the Hive View again.
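Since the port may take a while to appear after a restart, the re-check can be wrapped in a small polling loop. This is only a sketch; `retry_until` is a hypothetical helper, and the attempt count and delay are arbitrary choices.

```shell
#!/bin/sh
# Hypothetical helper: run a command up to <attempts> times, sleeping
# <delay> seconds between tries, and succeed as soon as it succeeds.
retry_until() {
    attempts="$1"; delay="$2"; shift 2
    i=1
    while [ "$i" -le "$attempts" ]; do
        "$@" && return 0
        # Do not sleep after the final failed attempt.
        [ "$i" -lt "$attempts" ] && sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

For example, `retry_until 12 5 sh -c 'netstat -tnl | grep -q ":50070 "'` would poll for about a minute. If it still fails, the NameNode log is the next place to look.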

Created 12-21-2017 01:15 AM

Thanks. Yes, I did the "Restart All", and the picture shows the result of checking whether 50070 was open afterwards. Can you tell me how to check the NameNode logs?

Created 12-21-2017 01:27 AM

If port 50070 has been opened by the NameNode, we should see output like the following:

    [root@sandbox ~]# netstat -tnlpa | grep 50070
    tcp        0      0 172.17.0.2:50070    0.0.0.0:*           LISTEN      512/java

    [root@sandbox ~]# netstat -tnlpa | grep `cat /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid`
    tcp        0      0 172.17.0.2:8020     0.0.0.0:*           LISTEN      512/java
    tcp        0      0 172.17.0.2:50070    0.0.0.0:*           LISTEN      512/java
    tcp        0      0 172.17.0.2:8020     172.17.0.2:36554    ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:36554    172.17.0.2:8020     ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:36512    172.17.0.2:8020     TIME_WAIT   -
    tcp        0      0 172.17.0.2:8020     172.17.0.2:40692    ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:35336    172.17.0.2:50010    ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:8020     172.17.0.2:36576    ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:8020     172.17.0.2:36590    ESTABLISHED 512/java
    tcp        0      0 172.17.0.2:8020     172.17.0.2:40554    ESTABLISHED 512/java

To see whether the NameNode hit any errors during startup, check its log:

    # less /var/log/hadoop/hdfs/hadoop-hdfs-namenode-sandbox.hortonworks.com.log
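Paging through the whole log with `less` works, but filtering can be quicker. A minimal sketch follows; the helper name `recent_log_errors` and the pattern list are illustrative assumptions, not something the sandbox ships.

```shell
#!/bin/sh
# Hypothetical helper: print the last N lines (default 20) of a log file
# that mention ERROR, FATAL, or an exception, prefixed with line numbers.
recent_log_errors() {
    logfile="$1"
    count="${2:-20}"
    grep -nE 'ERROR|FATAL|Exception' "$logfile" | tail -n "$count"
}
```

Called as `recent_log_errors /var/log/hadoop/hdfs/hadoop-hdfs-namenode-sandbox.hortonworks.com.log`, it prints line numbers that can then be jumped to in `less` for context.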

Created on 12-21-2017 01:36 AM - edited 08-18-2019 02:49 AM

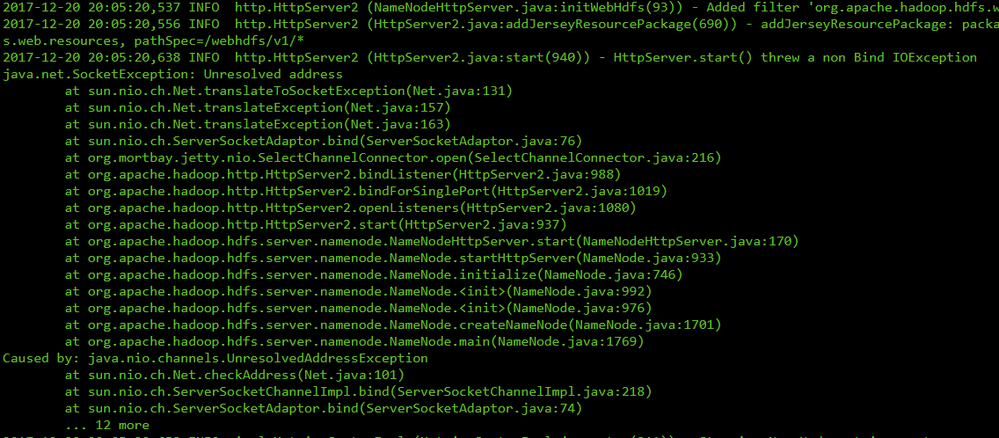

@Jay Kumar SenSharma Cool, thanks. I can see the log, and there is indeed an error:

Created 12-21-2017 01:42 AM

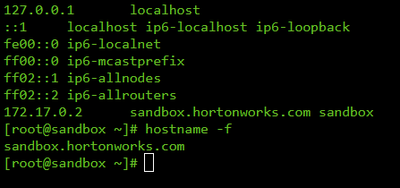

Can you please check whether you have mistakenly modified your "/etc/hosts" file?

The following works for me:

    [root@sandbox ~]# cat /etc/hosts
    127.0.0.1       localhost
    ::1     localhost ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters
    172.17.0.2      sandbox.hortonworks.com sandbox

The FQDN of your sandbox should resolve correctly:

    [root@sandbox ~]# hostname -f
    sandbox.hortonworks.com
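To compare a hosts file against the working one above without eyeballing it, something like the following could help. Both function names are hypothetical, and treating a loopback mapping as suspect is an assumption drawn from the working file, where the FQDN maps to 172.17.0.2.

```shell
#!/bin/sh
# Hypothetical helper: print the first address that a hosts-format file
# maps to the given name (comments are ignored).
hosts_ip_for() {
    awk -v name="$2" '
        { sub(/#.*/, "") }    # strip comments before matching
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# Hypothetical check: succeed only if the name is present and does not
# map to a loopback address.
fqdn_is_routable() {
    ip=$(hosts_ip_for "$1" "$2")
    case "$ip" in
        ""|127.*|::1) return 1 ;;
        *) return 0 ;;
    esac
}
```

For example, `fqdn_is_routable /etc/hosts "$(hostname -f)" || echo "check /etc/hosts"`. This only inspects the file; `getent hosts "$(hostname -f)"` shows what the resolver actually returns.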

https://community.hortonworks.com/questions/148362/service-hdfs-check-failed-2.html

Created on 12-21-2017 02:37 AM - edited 08-18-2019 02:49 AM

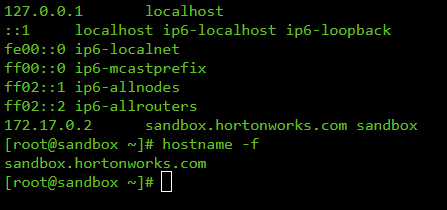

    localhost name resolution is handled within DNS itself.
    #127.0.0.1 localhost
    #::1 localhost
    127.0.0.1 localhost sandbox.hortonworks.com sandbox-hdp.hortonworks.com sandbox-hdf.hortonworks.com

@Jay Kumar SenSharma Above is my etc/hosts

Should it be 172.17.0.2 instead?

Created 12-21-2017 05:09 AM

Your /etc/hosts file does not look right. It is not the default one that ships with the HDP Sandbox.

Please compare it with the file from another HDP Sandbox image, or restore the default "/etc/hosts" file.

Try using the contents of my file (keep a backup of yours first). After modifying /etc/hosts, it is best to restart the whole sandbox before testing further.
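For the backup step, a timestamped copy makes it easy to roll back. A minimal sketch, with `backup_file` as a made-up helper name:

```shell
#!/bin/sh
# Hypothetical helper: copy a file to <file>.bak.<timestamp>, preserving
# mode and timestamps, and print the backup path on success.
backup_file() {
    src="$1"
    dest="$src.bak.$(date +%Y%m%d%H%M%S)"
    cp -p "$src" "$dest" && printf '%s\n' "$dest"
}
```

Running `backup_file /etc/hosts` as root before editing leaves a copy next to the original. If the new file makes things worse, copying the backup over it restores the previous state.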
