Are jar files missing for the Spark interpreter?

Labels: Apache Hive, Apache Spark, Apache Zeppelin

Created 02-22-2017 02:12 PM

Hi,

I am seeing strange behaviour in Spark.

I am following the tutorial (Lab 4, Spark analysis), but when I execute the command, the script is returned, as you can see in the picture (red rectangle).

I have tables in my Hive database default.

I checked the jar files in my Spark interpreter and found the ones shown in the picture sparkjarfiles.png. Are some jar files missing?

Any suggestions?

Attachments: hivedisplay.png, hicecontextdisplay.png

Created 02-23-2017 06:59 AM

Can you provide the following:

1. As @Bernhard Walter already asked, can you attach a screenshot of your Spark interpreter config from the Zeppelin UI?

2. Create a new notebook, run the command below, and send the output:

%sh whoami

3. Can you attach the output of:

$ ls -lrt /usr/hdp/current/zeppelin-server/local-repo

4. Is your cluster Kerberized?
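
The checks requested above could be gathered in one pass with a small script. This is only a sketch: the function name `collect_diagnostics` and the `LOCAL_REPO` variable are made up for illustration, and the default path is simply the one quoted in this reply.

```shell
#!/bin/sh
# Sketch: print the current user and the contents of Zeppelin's local-repo.
# LOCAL_REPO defaults to the path quoted in the thread; override it if your
# installation uses a different layout (assumption: the variable is ours).
LOCAL_REPO="${LOCAL_REPO:-/usr/hdp/current/zeppelin-server/local-repo}"

collect_diagnostics() {
    repo="$1"
    echo "user: $(whoami)"
    if [ -d "$repo" ]; then
        echo "local-repo: $repo"
        # Oldest first, newest last, same flags as in the reply above.
        ls -lrt "$repo"
    else
        echo "local-repo: $repo (not found)"
    fi
}

collect_diagnostics "$LOCAL_REPO"
```

Note that running this from an SSH session reports the SSH user. To see which user the Zeppelin interpreter runs as, `%sh whoami` still has to be run inside a notebook.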

Created 02-23-2017 09:19 AM

Hi @Daniel,

%sh whoami works well: it returns zeppelin. The problem I have is with %spark, as I said to @Bernhard.

I don't know what happened this morning, but I was not able to see the hdp directory, as you can see. Yesterday I could 😞

The problem is resolved: the SSH port was not correct. Please see the result of the command in the picture "localrepo".

Attachment: localrepo.png

Created 02-23-2017 09:48 AM

I see that you are trying to SSH on port 2122, which is not right; many basic commands will not work that way.

Please connect via SSH as follows:

ssh -p 2222 root@127.0.0.1

Created 02-23-2017 09:59 AM

Thanks @jay. I have already noticed my error.

Created 02-23-2017 10:44 AM

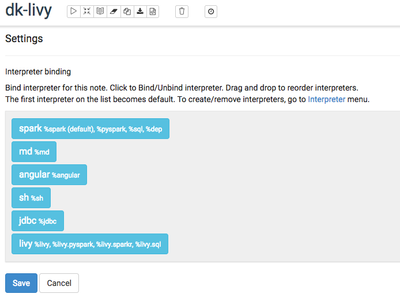

Thanks a lot @Jay! The problem was resolved thanks to a small remark from @Daniel Kozlowski about the interpreter binding. The Spark interpreter was "white"; I should have kept it "blue".

Thanks a lot !!

Created 02-23-2017 09:33 AM

Created 02-23-2017 09:39 AM

Do exactly this:

- in the new section, type: %spark

- press <Enter>

- type: sc.version

- press <Enter>

Now, run it.

Does this help?

I am asking because I noticed that the copied code is causing issues.
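
The thread does not say why the copied code misbehaves. One possibility (an assumption, not something confirmed here) is that text pasted from a web page carries invisible or non-ASCII characters such as non-breaking spaces or curly quotes. A rough way to check a snippet saved to a file is sketched below; the function name `find_suspect_lines` is hypothetical.

```shell
#!/bin/sh
# Sketch: print every line (with its line number) that contains a byte
# outside the printable ASCII range. Tabs and carriage returns are
# flagged too, since they also fall outside the space-to-tilde range.
find_suspect_lines() {
    # LC_ALL=C makes grep compare raw bytes, so each byte of a
    # multi-byte character (non-breaking space, curly quote) matches.
    LC_ALL=C grep -n '[^ -~]' "$1"
}

# Usage (the file name is illustrative):
#   find_suspect_lines pasted_snippet.txt
```

If the function prints nothing, the snippet contains only plain printable ASCII and the cause lies elsewhere.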

Created 02-23-2017 10:08 AM

Thanks @Daniel, I have already taken @jay's remark into account. Since yesterday I no longer paste the code; I type it by hand.

What I have done now is create a new notebook, type %spark, press Enter, type print(sc.version), press Enter, and run it. I get a "prefix not found" message.

I have tried to open the log file /var/log/zeppelin/zeppelin-interpreter-sh-zeppelin-sandbox.hortonworks.com.log (as you can see in the picture).

Attachments: scversion2.png, scversion1.png, logfile.png

I have also restarted the Spark interpreter, but I still get the same "prefix not found" error.
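
The log opened above has "-sh-" in its name, which suggests it belongs to the shell interpreter rather than to Spark. As a sketch, the following lists every interpreter log in the directory and shows recent error lines from each. The glob pattern is inferred from the single file name quoted in this post, and `scan_interpreter_logs` and `LOG_DIR` are illustrative names, so treat both as assumptions.

```shell
#!/bin/sh
# Sketch: show the last few error/exception lines from each Zeppelin
# interpreter log. LOG_DIR defaults to the directory quoted in the post.
LOG_DIR="${LOG_DIR:-/var/log/zeppelin}"

scan_interpreter_logs() {
    dir="$1"
    for log in "$dir"/zeppelin-interpreter-*.log; do
        # When the glob matches nothing it stays literal; skip that case.
        [ -e "$log" ] || continue
        echo "== $log"
        # Case-insensitive search; keep only the 20 most recent hits.
        grep -n -i -E 'error|exception' "$log" | tail -n 20 || true
    done
}

scan_interpreter_logs "$LOG_DIR"
```

If no Spark-related log shows up at all, that may itself be a hint that the Spark interpreter never started for the notebook.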

Created on 02-23-2017 10:26 AM - edited 08-19-2019 03:32 AM

For the "prefix not found" error, double-check that the Spark interpreter is bound to that notebook.

See my screenshot: Spark needs to be "blue".

Created 02-23-2017 10:41 AM

Oh! @Daniel! You are right about this "blue" detail!

I thought that for the interpreter binding, Spark should be "white"!

Please see this fantastic screen!

Thanks a lot! And keep helping newbies like me!

Attachment: scversion3.png

Created 02-23-2017 10:51 AM

I am glad you have this working now.

If you believe I helped, please vote up my answer and select it as the best one 🙂