Does MapReduce2 have a log4j configuration to limit the log size and the number of backups?

Created 08-08-2018 03:16 PM

Under the following mapred folder:

/var/log/hadoop-mapreduce/mapred

we have the following log files:

[root@master mapred]# du -sh *

20M mapred-mapred-historyserver-master.sys64.com.log

257M mapred-mapred-historyserver-master.sys64.com.log.1

257M mapred-mapred-historyserver-master.sys64.com.log.2

257M mapred-mapred-historyserver-master.sys64.com.log.3

257M mapred-mapred-historyserver-master.sys64.com.log.4

257M mapred-mapred-historyserver-master.sys64.com.log.5

257M mapred-mapred-historyserver-master.sys64.com.log.6

4.0K mapred-mapred-historyserver-master.sys64.com.out

4.0K mapred-mapred-historyserver-master.sys64.com.out.1

4.0K mapred-mapred-historyserver-master.sys64.com.out.2

4.0K mapred-mapred-historyserver-master.sys64.com.out.3

4.0K mapred-mapred-historyserver-master.sys64.com.out.4

4.0K mapred-mapred-historyserver-master.sys64.com.out.5

Which log4j configuration needs to be updated so that each file is no larger than 100 MB and no more than 3 backup files are kept?

We searched under the MapReduce2 service --> Configs

but did not find any log4j file. Is that expected?
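For a sense of scale, the requested limits can be turned into an approximate upper bound on disk usage. This is only a sketch: it assumes a size-based rolling appender that keeps the active file plus a fixed number of backups, and the helper name `max_log_footprint_mb` is made up for illustration. Files can end up slightly larger than the limit, as the 257M entries above suggest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approximate worst-case footprint of one rolled log,
# assuming the active file plus <backups> rolled files, each at most <size_mb>.
max_log_footprint_mb() {
  local size_mb="$1" backups="$2"
  echo $(( size_mb * (backups + 1) ))
}

# Requested limits from the question: 100 MB per file, 3 backups.
max_log_footprint_mb 100 3   # prints 400

# For comparison, values read off the listing above (256 MB files, 6 backups).
# These are an assumption, not the actual configured values.
max_log_footprint_mb 256 6   # prints 1792
```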

Created 08-08-2018 10:36 PM

MapReduce uses the RFA appender, which is defined in "Advanced hdfs-log4j":

Ambari UI --> HDFS --> Configs --> Advanced --> "Advanced hdfs-log4j" --> hdfs-log4j template (Text area)

By default, you will find something like the following there:

#

# Rolling File Appender

#

log4j.appender.RFA=org.apache.log4j.RollingFileAppender

log4j.appender.RFA.File=${hadoop.log.dir}/${hadoop.log.file}

# Logfile size and and 30-day backups

log4j.appender.RFA.MaxFileSize={{hadoop_log_max_backup_size}}MB

log4j.appender.RFA.MaxBackupIndex={{hadoop_log_number_of_backup_files}}

log4j.appender.RFA.layout=org.apache.log4j.PatternLayout

log4j.appender.RFA.layout.ConversionPattern=%d{ISO8601} %-5p %c{2} - %m%n

log4j.appender.RFA.layout.ConversionPattern=%d{ISO8601} %-5p %c{2} (%F:%M(%L)) - %m%n

NOTE:

Changing the "RFA" appender itself is not a good idea, because this appender is also used by many other components.

So if you just want to limit the size and number of log files for the MapReduce history server only, you can try this:

1. Create a new appender, for example "RFA_MAPRED", somewhere just below the RFA appender shown above.

Ambari UI --> HDFS --> Configs --> Advanced --> "Advanced hdfs-log4j" --> hdfs-log4j template (Text area)

Add "RFA_MAPRED" as follows:

#

# MapReduce Rolling File Appender (ADDED MANUALLY)

#

log4j.appender.RFA_MAPRED=org.apache.log4j.RollingFileAppender

log4j.appender.RFA_MAPRED.File=${hadoop.log.dir}/${hadoop.log.file}

log4j.appender.RFA_MAPRED.MaxFileSize=100MB

log4j.appender.RFA_MAPRED.MaxBackupIndex=3

log4j.appender.RFA_MAPRED.layout=org.apache.log4j.PatternLayout

log4j.appender.RFA_MAPRED.layout.ConversionPattern=%d{ISO8601} %-5p %c{2} - %m%n

log4j.appender.RFA_MAPRED.layout.ConversionPattern=%d{ISO8601} %-5p %c{2} (%F:%M(%L)) - %m%n

2. Save the config changes (click the Save button).

3. Now edit "Advanced mapred-env":

Ambari UI --> MapReduce --> Configs --> Advanced --> "Advanced mapred-env" --> mapred-env template

Change the following line to use the "RFA_MAPRED" appender instead of the default "RFA" (a single-line change):

Old value:

export HADOOP_MAPRED_ROOT_LOGGER=INFO,RFA

New value:

export HADOOP_MAPRED_ROOT_LOGGER=INFO,RFA_MAPRED

4. Save and restart all required services for the change to take effect.

5. You can verify that MapReduce is now using the correct appender by running the following command on the Job History Server host:

# ps -ef | grep JobHistoryServer

You should see -Dhadoop.root.logger=INFO,RFA_MAPRED in the output of the above command.
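The check in step 5 can also be scripted so that only the logger value is printed. The following is a rough sketch, not part of the original procedure: the function name `root_logger_of` is hypothetical, it relies on `grep -o` (available in GNU and BSD grep), and it assumes that when the flag appears more than once on the command line, the last occurrence is the one that takes effect.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read process command lines on stdin and print the
# value of the last -Dhadoop.root.logger=... option found.
root_logger_of() {
  grep -o -e '-Dhadoop\.root\.logger=[^ ]*' | tail -n 1 | cut -d= -f2-
}

# Usage on the Job History Server host (the [J] trick keeps grep from
# matching its own process):
#   ps -ef | grep '[J]obHistoryServer' | root_logger_of
```

If the change was picked up, the output should name the RFA_MAPRED appender rather than RFA.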

Created 08-08-2018 04:59 PM

Hi @Michael Bronson ,

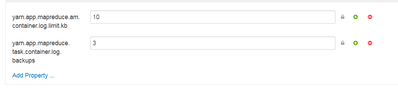

Can you set these parameters in Custom mapred-site and see if it works?

Reference : https://hadoop.apache.org/docs/stable/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-de...

yarn.app.mapreduce.task.container.log.backups=3

yarn.app.mapreduce.am.container.log.limit.kb=102400

Created on 08-08-2018 05:20 PM - edited 08-17-2019 08:04 PM

I set that under Custom mapred-site and restarted the service.

Created 08-08-2018 05:22 PM

But the files are still not affected. It seems this configuration does not work, or am I missing something?

ls -ltr

total 1592376

-rw-r--r--. 1 mapred hadoop 268437275 Aug 22 2017 mapred-mapred-historyserver-master.sys64.com.log.6

-rw-r--r--. 1 mapred hadoop 268435538 Aug 28 2017 mapred-mapred-historyserver-master.sys64.com.log.5

-rw-r--r--. 1 mapred hadoop 268435639 Sep 2 2017 mapred-mapred-historyserver-master.sys64.com.log.4

-rw-r--r--. 1 mapred hadoop 268435470 May 30 10:02 mapred-mapred-historyserver-master.sys64.com.log.3

-rw-r--r-- 1 mapred hadoop 268441217 Jul 10 02:45 mapred-mapred-historyserver-master.sys64.com.log.2

-rw-r--r-- 1 mapred hadoop 268442505 Jul 16 01:17 mapred-mapred-historyserver-master.sys64.com.log.1

-rw-r--r-- 1 mapred hadoop 1477 Jul 19 15:28 mapred-mapred-historyserver-master.sys64.com.out.5

-rw-r--r-- 1 mapred hadoop 1687 Jul 25 17:51 mapred-mapred-historyserver-master.sys64.com.out.4

-rw-r--r-- 1 mapred hadoop 1477 Jul 29 15:17 mapred-mapred-historyserver-master.sys64.com.out.3

-rw-r--r-- 1 mapred hadoop 1477 Aug 1 19:30 mapred-mapred-historyserver-master.sys64.com.out.2

-rw-r--r-- 1 mapred hadoop 1687 Aug 7 11:48 mapred-mapred-historyserver-master.sys64.com.out.1

-rw-r--r-- 1 mapred hadoop 1477 Aug 8 17:09 mapred-mapred-historyserver-master.sys64.com.out

-rw-r--r-- 1 mapred hadoop 19888308 Aug 8 17:20 mapred-mapred-historyserver-master.sys64.com.log
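Rather than reading the listing by eye, a check like this can be automated. The sketch below is only an illustration: the function name `oversized_logs` is hypothetical, and it assumes a `find` that supports `-maxdepth` (GNU and BSD find do). It prints every `*.log*` file in a directory that is strictly larger than a given number of bytes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list log files in <dir> that are larger than
# <limit_bytes>. The "c" suffix tells find to compare sizes in bytes.
oversized_logs() {
  local dir="$1" limit_bytes="$2"
  find "$dir" -maxdepth 1 -type f -name '*.log*' -size +"${limit_bytes}c" | sort
}

# Usage with the 100 MB limit from the question (100 * 1024 * 1024 bytes):
#   oversized_logs /var/log/hadoop-mapreduce/mapred 104857600
```

Already rolled files are not rewritten when a limit changes, so old backups larger than the limit may remain listed until they are rotated out or deleted.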

Created 08-08-2018 08:35 PM

@Akhil, any suggestions regarding my comments?

Created 08-09-2018 08:38 AM

@Jay, great, let me check your procedure on my cluster. I will update soon.

Created 08-10-2018 05:47 AM

@Jay, thank you so much. We did the testing and the results are fine. Now we need to do the same steps via the API.