Doubts about YARN memory configuration in Cloudera Manager

Created on 12-14-2018 02:41 AM - edited 09-16-2022 06:59 AM

Hi everyone, I have a cluster where each worker has 110 GB of RAM.

In Cloudera Manager I've configured the following YARN memory parameters:

| Parameter | Value |
|---|---|
| yarn.nodemanager.resource.memory-mb | 80 GB |
| yarn.scheduler.minimum-allocation-mb | 1 GB |
| yarn.scheduler.maximum-allocation-mb | 20 GB |
| mapreduce.map.memory.mb | 0 |
| mapreduce.reduce.memory.mb | 0 |
| yarn.app.mapreduce.am.resource.mb | 1 GB |
| mapreduce.job.heap.memory-mb.ratio | 0.8 |
| mapreduce.map.java.opts | -Djava.net.preferIPv4Stack=true |
| mapreduce.reduce.java.opts | -Djava.net.preferIPv4Stack=true |
| Map Task Maximum Heap Size | 0 |
| Reduce Task Maximum Heap Size | 0 |

One of my goals was to let YARN automatically choose the correct Java heap size for the jobs, using the 0.8 ratio as the upper bound (20 GB * 0.8 = 16 GB), so I left all the heap and mapper/reducer settings at zero.

I have a Hive job that performs some joins between large tables. Running the job as is, I get a failure:

Container [pid=26783,containerID=container_1389136889967_0009_01_000002] is running beyond physical memory limits. Current usage: 2.7 GB of 2 GB physical memory used; 3.7 GB of 3 GB virtual memory used. Killing container.
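The limit reported in the kill message is the size of the container that was actually requested (2 GB here, far below the 20 GB maximum). As a minimal sketch, the message can be parsed like this to compare usage against the limit. The helper name `parse_usage` is hypothetical, and the pattern assumes the sizes are reported in GB exactly as in the message above; real messages may use other units.

```python
import re

# Matches the "Current usage" part of the kill message quoted above.
# Assumption: all four sizes are expressed in GB.
USAGE_RE = re.compile(
    r"([\d.]+) GB of ([\d.]+) GB physical memory used; "
    r"([\d.]+) GB of ([\d.]+) GB virtual memory used"
)


def parse_usage(message):
    """Return (phys_used, phys_limit, virt_used, virt_limit) in GB, or None."""
    match = USAGE_RE.search(message)
    if match is None:
        return None
    return tuple(float(group) for group in match.groups())


message = (
    "Current usage: 2.7 GB of 2 GB physical memory used; "
    "3.7 GB of 3 GB virtual memory used."
)
phys_used, phys_limit, virt_used, virt_limit = parse_usage(message)
print(f"container limit: {phys_limit} GB, physical usage: {phys_used} GB")
```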

If I explicitly set the memory requirements for the job in the Hive script, it completes successfully:

SET mapreduce.map.memory.mb=8192;

SET mapreduce.reduce.memory.mb=16384;

SET mapreduce.map.java.opts=-Xmx6553m;

SET mapreduce.reduce.java.opts=-Xmx13106m;
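The heap values in these statements are roughly 0.8 of the container sizes. A small sketch of how such statements could be generated for other container sizes follows; the function name `hive_memory_settings` is hypothetical, and rounding the heap down is an assumption made here to stay under the container limit.

```python
import math


def hive_memory_settings(map_mb, reduce_mb, heap_ratio=0.8):
    """Build Hive SET statements for the given container sizes (in MB).

    The heap (-Xmx) is derived as container size * heap_ratio, rounded down.
    """
    map_heap = math.floor(map_mb * heap_ratio)
    reduce_heap = math.floor(reduce_mb * heap_ratio)
    return [
        f"SET mapreduce.map.memory.mb={map_mb};",
        f"SET mapreduce.reduce.memory.mb={reduce_mb};",
        f"SET mapreduce.map.java.opts=-Xmx{map_heap}m;",
        f"SET mapreduce.reduce.java.opts=-Xmx{reduce_heap}m;",
    ]


for statement in hive_memory_settings(8192, 16384):
    print(statement)
```

With these inputs the sketch yields -Xmx6553m for mappers and -Xmx13107m for reducers, one MB above the reducer value used in the post; either choice keeps the heap at about 80% of the container.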

My question: why doesn't YARN automatically give this job enough memory to complete successfully?

Since I have specified 20 GB as the maximum container size and 0.8 as the maximum heap ratio, I was expecting that YARN could give up to 16 GB of heap to each mapper/reducer without my having to specify these parameters explicitly.

Could someone please explain what's going on?

Thanks for any information.

Created 12-14-2018 08:27 AM

Hi,

yarn.scheduler.maximum-allocation-mb is set to 20 GB, which is the largest amount of physical memory that can be requested for a single container. yarn.scheduler.minimum-allocation-mb is the smallest amount of physical memory that can be requested for a container. These two settings only bound what a job may request; they do not decide how much a job actually requests.
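To illustrate how these bounds act on a request, here is a minimal sketch using the values from the question. It is a simplified model, not scheduler code: it assumes requests below the minimum are raised to the minimum and requests above the maximum are rejected, and it ignores any rounding to allocation increments. The function names are hypothetical.

```python
NODE_MEMORY_MB = 80 * 1024      # yarn.nodemanager.resource.memory-mb
MIN_ALLOCATION_MB = 1 * 1024    # yarn.scheduler.minimum-allocation-mb
MAX_ALLOCATION_MB = 20 * 1024   # yarn.scheduler.maximum-allocation-mb


def effective_container_mb(request_mb):
    """Apply the scheduler bounds to a single container request."""
    if request_mb > MAX_ALLOCATION_MB:
        raise ValueError(
            f"request of {request_mb} MB exceeds the maximum allocation"
        )
    # Small requests are raised to the minimum allocation.
    return max(request_mb, MIN_ALLOCATION_MB)


def containers_per_node(container_mb):
    """How many containers of this size fit in one NodeManager's memory."""
    return NODE_MEMORY_MB // effective_container_mb(container_mb)


print(containers_per_node(1024))   # default-sized containers
print(containers_per_node(8192))   # the mapper size set in the Hive script
print(containers_per_node(16384))  # the reducer size set in the Hive script
```

The point of the sketch is that the 20 GB maximum is only a ceiling: a job that asks for 1 GB gets 1 GB, regardless of how much the node could offer.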

When an MR job is submitted, each map container requests the amount of memory given by mapreduce.map.memory.mb, which defaults to 1 GB. If it is not specified, the map task gets a 1 GB container. The same applies to reducers (mapreduce.reduce.memory.mb).

This can be verified in the YARN logs:

mapreduce.map.memory.mb - the requested container memory is 1 GB:

INFO [Thread-52] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: mapResourceRequest:<memory:1024, vCores:1>

mapreduce.map.java.opts - the heap size defaults to 80% of the container memory:

org.apache.hadoop.mapred.JobConf: Task java-opts do not specify heap size. Setting task attempt jvm max heap size to -Xmx820m
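The two log lines above are consistent with the following inference order, sketched here in Python. This is a simplified model based on the logs and on the Cloudera Manager field descriptions mentioned in this thread, not the actual Hadoop code; the names `parse_xmx_mb` and `resolve_task_memory` are hypothetical, and the 1024 MB fallback is taken from the default described above.

```python
import math
import re

DEFAULT_TASK_MEMORY_MB = 1024  # fallback when nothing else is specified


def parse_xmx_mb(java_opts):
    """Return the -Xmx value in MB, or None if java_opts has no -Xmx."""
    match = re.search(r"-Xmx(\d+)([gGmMkK]?)", java_opts)
    if match is None:
        return None
    value, unit = int(match.group(1)), match.group(2).lower()
    if unit == "g":
        return value * 1024
    if unit == "m":
        return value
    if unit == "k":
        return value // 1024
    return value // (1024 * 1024)  # no suffix means bytes


def resolve_task_memory(memory_mb, java_opts, heap_ratio=0.8):
    """Return (container_mb, heap_mb) for one map or reduce task."""
    heap_mb = parse_xmx_mb(java_opts)
    if memory_mb <= 0:
        if heap_mb is not None:
            # Container size inferred from the explicit heap size.
            memory_mb = math.ceil(heap_mb / heap_ratio)
        else:
            # Neither container size nor heap given: use the default.
            memory_mb = DEFAULT_TASK_MEMORY_MB
    if heap_mb is None:
        # Heap inferred from the container size.
        heap_mb = math.ceil(memory_mb * heap_ratio)
    return memory_mb, heap_mb


# Settings from the question: memory.mb = 0 and java.opts without -Xmx.
print(resolve_task_memory(0, "-Djava.net.preferIPv4Stack=true"))
# Settings from the Hive script that made the job succeed.
print(resolve_task_memory(8192, "-Xmx6553m"))
```

In this model the first call gives a 1024 MB container with an 820 MB heap, matching the -Xmx820m in the log. Neither yarn.scheduler.maximum-allocation-mb nor the node memory enters the calculation, so the 20 GB * 0.8 = 16 GB figure is never used unless a job asks for it.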

1 GB is the default, and it is quite low. I recommend reading the link below. It provides a good understanding of the YARN and MR memory settings, how they relate, and how to choose baseline settings based on the cluster node size (disk, memory, and cores).

https://www.cloudera.com/documentation/enterprise/latest/topics/cdh_ig_yarn_tuning.html

Hope it helps. Let us know if you have any questions.

Thanks

Jerry

Created 12-16-2018 01:24 AM

Hi @Jerry, thank you for the reply.

If I understand correctly you are saying that if not explicitly specified values for mapreduce.map.memory.mb and mapreduce.reduce.memory.mb YARN will assign to the job the minimum container memory value yarn.scheduler.minimum-allocation-mb, (1 GB in this case) ?

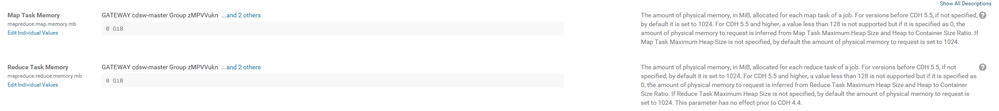

Because from what I can read in the description fields on the Cloudera Manager, I though that if the values for mapreduce.map.memory.mb and mapreduce.reduce.memory.mb are left to zero, the memory assigned to a job should be inferred by the map maximum heap and heap to container ratio:

Could you explain please how this work?

Created 12-16-2018 07:43 AM

Could you let me know the CM / CDH version you are running?

Created 12-16-2018 08:52 AM

Hi @csguna, the CDH version is 5.13.2.