Enabling LZO compression using NiFi PutHDFS

- Labels: Apache NiFi

Created 12-09-2016 10:09 PM

Hello All,

The LZO compression codec is enabled on HDFS, and we are writing to HDFS using PutHDFS. We have run into the same issue as in this question. The workaround of removing the LZO-specific line from core-site.xml works fine. However, is there a way to still use the LZO codec with NiFi PutHDFS? The compression codec section of this document does not give specifics about NONE, AUTOMATIC and DEFAULT. Could someone share more information on this?

Thanks, Arun

Created on 12-15-2016 06:16 PM - edited 08-18-2019 06:20 AM

Thanks @Karthik Narayanan. I was able to resolve the issue.

Before diving into the solutions, I should state one thing up front:

With NiFi 1.0 and 1.1, LZO compression cannot be achieved using the PutHDFS processor. The only supported compression codecs are the ones listed in the compression codec drop-down.

When the LZO-related classes are present in core-site.xml, the NiFi processor fails to run. The suggestion from the previous HCC post was to remove those classes. In my case they needed to be retained so that NiFi's copy and HDP's copy of core-site.xml always stay in sync.

NiFi 1.0

I built the hadoop-lzo jar from source, added it to the NiFi lib directory, and restarted NiFi.

This resolved the issue, and PutHDFS now runs without erroring out.

NiFi 1.1

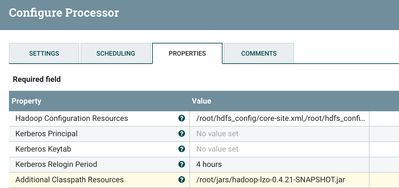

Set the processor's additional classpath property to the path of the jar file. No restart is required.

Note: This does not provide LZO compression. It only lets the processor run without errors even when the LZO classes are listed in core-site.xml.
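One way to sanity-check a hadoop-lzo jar before handing it to NiFi is to confirm that the codec class referenced from core-site.xml can actually be loaded from it. The sketch below is a standalone Java check, not part of NiFi. The class name `com.hadoop.compression.lzo.LzoCodec` is an assumption (the thread does not quote the core-site.xml entries), and `CodecClassCheck` and `isClassAvailable` are names made up for illustration.

```java
class CodecClassCheck {
    /** Returns true if the named class can be located and linked by this class loader. */
    static boolean isClassAvailable(String className) {
        try {
            // initialize=false: locate and link the class without running static initializers
            Class.forName(className, false, CodecClassCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            // Missing class, or a missing superclass/interface it depends on
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed default; pass the class name from your own core-site.xml instead
        String name = args.length > 0 ? args[0] : "com.hadoop.compression.lzo.LzoCodec";
        System.out.println(name + " available: " + isClassAvailable(name));
    }
}
```

Compile it and run it with the jar on the classpath, for example `java -cp .:/path/to/hadoop-lzo.jar CodecClassCheck` (the path is a placeholder). The Hadoop jars that the codec class depends on also need to be on the classpath, otherwise the check reports false. NiFi loads processor classes through its own class loaders, so a positive result only shows that the jar contains a loadable class, not that a given processor can see it.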

UNSATISFIED LINK ERROR WITH SNAPPY

I also had an issue with the Snappy compression codec in NiFi, and was able to resolve it by setting the path to the .so file. This did not work on the ambari-vagrant boxes, but I was able to get it working on an OpenStack cloud instance. The issue on the virtual box could be systemic.

To resolve the link error, I copied the .so files from the HDP cluster and recreated the links. Then, as @Karthik Narayanan suggested, I set the Java library path to the directory containing the .so files.
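When chasing an unsatisfied link error, it can help to see which directories the JVM actually searches for native libraries and whether the expected file is in any of them. The following is a minimal standalone sketch, not something from the thread. The default file name `libsnappy.so` is an assumption about what the native library is called on a Linux host, and `NativeLibraryPathCheck` and `findLibrary` are illustrative names.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

class NativeLibraryPathCheck {
    /** Returns every location under the given library path that holds a file named fileName. */
    static List<Path> findLibrary(String libraryPath, String fileName) {
        List<Path> hits = new ArrayList<>();
        // java.library.path is a list of directories joined by the platform path separator
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (dir.isEmpty()) {
                continue;
            }
            Path candidate = Paths.get(dir, fileName);
            // isRegularFile follows symbolic links, so recreated links to .so files count
            if (Files.isRegularFile(candidate)) {
                hits.add(candidate);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // Assumed default file name; pass the real one as the first argument
        String fileName = args.length > 0 ? args[0] : "libsnappy.so";
        String libraryPath = System.getProperty("java.library.path", "");
        System.out.println("java.library.path = " + libraryPath);
        System.out.println(fileName + " found at: " + findLibrary(libraryPath, fileName));
    }
}
```

Run it with the same `-Djava.library.path=...` value that is set in bootstrap.conf. An empty result suggests the property points at something other than the directory holding the .so files, or that a link is dangling.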

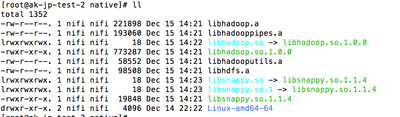

Below is the list of .so and links

And below is the bootstrap configuration change

Created 12-12-2016 07:08 PM

You will have to include the path to the LZO codec binaries in the NiFi bootstrap configuration.

Add an entry like this to the bootstrap.conf file:

java.arg.15=-Djava.library.path=/path/to/your/lzocodec.so

Created 12-12-2016 08:50 PM

Thanks @Karthik Narayanan, I have yet to try this. Could you also help with the compression codec to be used in this case? I haven't been able to find out what NONE, AUTOMATIC and DEFAULT mean.

Created 12-12-2016 09:55 PM

NONE means don't use a codec at all.

AUTOMATIC is not meant to be used with PutHDFS; it is only for the read side (GetHDFS and FetchHDFS).

DEFAULT means use the DefaultCodec class provided by Hadoop, which I believe uses zlib compression (slide 6 here http://www.slideshare.net/Hadoop_Summit/kamat-singh-june27425pmroom210cv2)
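Assuming DEFAULT does map to zlib as described above, the data it writes should be an ordinary zlib (DEFLATE) stream. The sketch below uses only the JDK's `java.util.zip` classes, not Hadoop's `DefaultCodec`, to show what a zlib round trip looks like. `ZlibRoundTrip` and its methods are illustrative names.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.DeflaterOutputStream;
import java.util.zip.InflaterInputStream;

class ZlibRoundTrip {
    /** Compresses the input into a zlib-wrapped DEFLATE stream. */
    static byte[] compress(byte[] input) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (DeflaterOutputStream out = new DeflaterOutputStream(buffer)) {
            out.write(input);
        }
        return buffer.toByteArray();
    }

    /** Restores the original bytes from a zlib-wrapped DEFLATE stream. */
    static byte[] decompress(byte[] compressed) throws IOException {
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (InflaterInputStream in =
                 new InflaterInputStream(new ByteArrayInputStream(compressed))) {
            byte[] chunk = new byte[4096];
            int n;
            while ((n = in.read(chunk)) != -1) {
                buffer.write(chunk, 0, n);
            }
        }
        return buffer.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] original = "sample record\n".repeat(100).getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(original);
        byte[] restored = decompress(packed);
        System.out.println("original bytes:   " + original.length);
        System.out.println("compressed bytes: " + packed.length);
        System.out.println("restored bytes:   " + restored.length);
    }
}
```

The `main` method uses `String.repeat`, which needs Java 11 or later; the two helper methods work on older JDKs.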

Created 12-13-2016 06:20 PM

@Karthik Narayanan: Just to give you an update, this did not work for me. I tried the same with Snappy, and even Snappy does not seem to work. It throws an unsatisfied link error even though I have the ".so" added to bootstrap.conf.

Created 12-14-2016 12:35 AM

Any chance you are on OS X?

Created 12-15-2016 02:30 PM

Nope, it's on the ambari-vagrant box.

Created 12-15-2016 03:38 PM

I posted the above link on how you can use compression; try it and let me know how it goes. I don't think you can use LZO, as it is not shipped as part of NiFi. You can try doing a yum install of LZO, then use ExecuteStreamCommand to compress the file, and then do PutHDFS with NONE as the compression codec.
