Cloudera Community: Support Questions

Encountered warnings and errors during the Install, Start and Test phase of Ambari cluster setup

Created on 04-17-2016 05:45 AM - edited 09-16-2022 03:14 AM

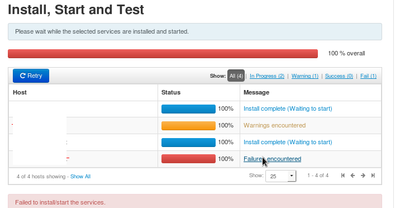

I'm trying to set up a 4-node cluster using HDP 2.3.x and Ambari 2.1.x. At the final stage of installation, i.e., the Install, Start and Test phase, I received the following errors and warnings.

1. Failure on the 4th node:

stderr:

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 153, in <module>
    DataNode().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 216, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/datanode.py", line 34, in install
    self.install_packages(env, params.exclude_packages)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 392, in install_packages
    Package(name)
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 152, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 118, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/__init__.py", line 45, in action_install
    self.install_package(package_name, self.resource.use_repos, self.resource.skip_repos)
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/package/yumrpm.py", line 49, in install_package
    shell.checked_call(cmd, sudo=True, logoutput=self.get_logoutput())
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call
    raise Fail(err_msg)
resource_management.core.exceptions.Fail: Execution of '/usr/bin/yum -d 0 -e 0 -y install snappy-devel' returned 1. Error: Package: snappy-devel-1.0.5-1.el6.x86_64 (HDP-UTILS-1.1.0.20)
    Requires: snappy(x86-64) = 1.0.5-1.el6
    Installed: snappy-1.1.0-1.el6.x86_64 (@anaconda-RedHatEnterpriseLinux-201507020259.x86_64/6.7)
        snappy(x86-64) = 1.1.0-1.el6
    Available: snappy-1.0.5-1.el6.x86_64 (HDP-UTILS-1.1.0.20)
        snappy(x86-64) = 1.0.5-1.el6
You could try using --skip-broken to work around the problem
You could try running: rpm -Va --nofiles --nodigest
```

stdout:

```
2016-04-17 06:05:11,465 - Group['hadoop'] {}
2016-04-17 06:05:11,466 - Group['users'] {}
2016-04-17 06:05:11,466 - Group['knox'] {}
2016-04-17 06:05:11,466 - Group['spark'] {}
2016-04-17 06:05:11,467 - User['oozie'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,468 - User['hive'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,468 - User['ambari-qa'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,469 - User['flume'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,470 - User['hdfs'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,471 - User['knox'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,472 - User['storm'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,473 - User['spark'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,473 - User['mapred'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,474 - User['hbase'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,475 - User['tez'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,475 - User['zookeeper'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,476 - User['kafka'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,477 - User['falcon'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,478 - User['sqoop'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,478 - User['yarn'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,479 - User['hcat'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,480 - User['ams'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,481 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2016-04-17 06:05:11,483 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2016-04-17 06:05:11,492 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if
2016-04-17 06:05:11,492 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}
2016-04-17 06:05:11,499 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2016-04-17 06:05:11,501 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}
2016-04-17 06:05:11,509 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if
2016-04-17 06:05:11,510 - Group['hdfs'] {'ignore_failures': False}
2016-04-17 06:05:11,510 - User['hdfs'] {'ignore_failures': False, 'groups': ['hadoop', 'hdfs']}
2016-04-17 06:05:11,511 - Directory['/etc/hadoop'] {'mode': 0755}
2016-04-17 06:05:11,533 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2016-04-17 06:05:11,534 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}
2016-04-17 06:05:11,552 - Repository['HDP-2.2'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.2.8.0', 'action': ['create'], 'components': ['HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP', 'mirror_list': None}
2016-04-17 06:05:11,561 - File['/etc/yum.repos.d/HDP.repo'] {'content': InlineTemplate(...)}
2016-04-17 06:05:11,562 - Repository['HDP-UTILS-1.1.0.20'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.20/repos/centos6', 'action': ['create'], 'components': ['HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP-UTILS', 'mirror_list': None}
2016-04-17 06:05:11,565 - File['/etc/yum.repos.d/HDP-UTILS.repo'] {'content': InlineTemplate(...)}
2016-04-17 06:05:11,565 - Package['unzip'] {}
2016-04-17 06:05:11,737 - Skipping installation of existing package unzip
2016-04-17 06:05:11,737 - Package['curl'] {}
2016-04-17 06:05:11,756 - Skipping installation of existing package curl
2016-04-17 06:05:11,756 - Package['hdp-select'] {}
2016-04-17 06:05:11,773 - Skipping installation of existing package hdp-select
2016-04-17 06:05:11,954 - Package['hadoop_2_2_*'] {}
2016-04-17 06:05:12,119 - Skipping installation of existing package hadoop_2_2_*
2016-04-17 06:05:12,120 - Package['snappy'] {}
2016-04-17 06:05:12,138 - Skipping installation of existing package snappy
2016-04-17 06:05:12,139 - Package['snappy-devel'] {}
2016-04-17 06:05:12,157 - Installing package snappy-devel ('/usr/bin/yum -d 0 -e 0 -y install snappy-devel')
```

It also showed a warning at the top: "! Data Node Install".
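The failing step on the 4th node is the `yum -d 0 -e 0 -y install snappy-devel` call at the bottom of the traceback; the useful part of the error is the pair of "Requires" and "Installed" lines. As a minimal Python 3 sketch, assuming the yum error text is available as a string (copied from the stderr above), the pinned requirement and the conflicting installed package can be pulled out like this. `summarize_conflict` is a hypothetical helper, not part of Ambari, and only handles the single-dependency layout shown in this log.

```python
import re

# The yum part of the DataNode install failure on the 4th node, copied from stderr.
YUM_ERROR = """\
Error: Package: snappy-devel-1.0.5-1.el6.x86_64 (HDP-UTILS-1.1.0.20)
    Requires: snappy(x86-64) = 1.0.5-1.el6
    Installed: snappy-1.1.0-1.el6.x86_64 (@anaconda-RedHatEnterpriseLinux-201507020259.x86_64/6.7)
        snappy(x86-64) = 1.1.0-1.el6
    Available: snappy-1.0.5-1.el6.x86_64 (HDP-UTILS-1.1.0.20)
        snappy(x86-64) = 1.0.5-1.el6
"""


def summarize_conflict(text):
    """Hypothetical helper: return (capability, required_version, installed_package).

    Only understands a single 'Requires:' entry laid out as in the error above.
    """
    req = re.search(r"Requires:\s*(\S+)\s*=\s*(\S+)", text)
    inst = re.search(r"Installed:\s*(\S+)", text)
    if req is None or inst is None:
        raise ValueError("text does not look like a yum 'Requires' conflict")
    return req.group(1), req.group(2), inst.group(1)


if __name__ == "__main__":
    cap, required, installed = summarize_conflict(YUM_ERROR)
    print("%s: snappy-devel needs %s, but %s is installed" % (cap, required, installed))
```

Read this way, the error says that snappy-devel from HDP-UTILS wants exactly snappy 1.0.5-1.el6, while the OS installation media already put snappy 1.1.0-1.el6 on the node.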

2. Warnings on the 2nd node:

stderr:

None

stdout:

```
2016-04-17 06:05:11,468 - Group['hadoop'] {}
2016-04-17 06:05:11,469 - Group['users'] {}
2016-04-17 06:05:11,469 - Group['knox'] {}
2016-04-17 06:05:11,469 - Group['spark'] {}
2016-04-17 06:05:11,470 - User['oozie'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,470 - User['hive'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,471 - User['ambari-qa'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,472 - User['flume'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,472 - User['hdfs'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,473 - User['knox'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,474 - User['storm'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,475 - User['spark'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,475 - User['mapred'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,476 - User['hbase'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,477 - User['tez'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,477 - User['zookeeper'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,478 - User['kafka'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,479 - User['falcon'] {'gid': 'hadoop', 'groups': ['users']}
2016-04-17 06:05:11,479 - User['sqoop'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,480 - User['yarn'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,481 - User['hcat'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,481 - User['ams'] {'gid': 'hadoop', 'groups': ['hadoop']}
2016-04-17 06:05:11,482 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2016-04-17 06:05:11,484 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2016-04-17 06:05:11,489 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if
2016-04-17 06:05:11,490 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}
2016-04-17 06:05:11,491 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2016-04-17 06:05:11,493 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}
2016-04-17 06:05:11,499 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if
2016-04-17 06:05:11,500 - Group['hdfs'] {'ignore_failures': False}
2016-04-17 06:05:11,500 - User['hdfs'] {'ignore_failures': False, 'groups': ['hadoop', 'hdfs']}
2016-04-17 06:05:11,501 - Directory['/etc/hadoop'] {'mode': 0755}
2016-04-17 06:05:11,525 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2016-04-17 06:05:11,525 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}
2016-04-17 06:05:11,541 - Repository['HDP-2.2'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.2.8.0', 'action': ['create'], 'components': ['HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP', 'mirror_list': None}
2016-04-17 06:05:11,552 - File['/etc/yum.repos.d/HDP.repo'] {'content': InlineTemplate(...)}
2016-04-17 06:05:11,553 - Repository['HDP-UTILS-1.1.0.20'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.20/repos/centos6', 'action': ['create'], 'components': ['HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP-UTILS', 'mirror_list': None}
2016-04-17 06:05:11,557 - File['/etc/yum.repos.d/HDP-UTILS.repo'] {'content': InlineTemplate(...)}
2016-04-17 06:05:11,557 - Package['unzip'] {}
2016-04-17 06:05:11,707 - Skipping installation of existing package unzip
2016-04-17 06:05:11,708 - Package['curl'] {}
2016-04-17 06:05:11,725 - Skipping installation of existing package curl
2016-04-17 06:05:11,725 - Package['hdp-select'] {}
2016-04-17 06:05:11,743 - Skipping installation of existing package hdp-select
2016-04-17 06:05:11,931 - Package['falcon_2_2_*'] {}
2016-04-17 06:05:12,076 - Installing package falcon_2_2_* ('/usr/bin/yum -d 0 -e 0 -y install 'falcon_2_2_*'')
2016-04-17 06:08:11,638 - Execute['ambari-sudo.sh -H -E touch /var/lib/ambari-agent/data/hdp-select-set-all.performed ; ambari-sudo.sh /usr/bin/hdp-select set all `ambari-python-wrap /usr/bin/hdp-select versions | grep ^2.2 | tail -1`'] {'not_if': 'test -f /var/lib/ambari-agent/data/hdp-select-set-all.performed', 'only_if': 'ls -d /usr/hdp/2.2*'}
2016-04-17 06:08:11,641 - Skipping Execute['ambari-sudo.sh -H -E touch /var/lib/ambari-agent/data/hdp-select-set-all.performed ; ambari-sudo.sh /usr/bin/hdp-select set all `ambari-python-wrap /usr/bin/hdp-select versions | grep ^2.2 | tail -1`'] due to not_if
2016-04-17 06:08:11,641 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'owner': 'hdfs', 'only_if': 'ls /usr/hdp/current/hadoop-client/conf', 'configurations': ...}
2016-04-17 06:08:11,668 - Generating config: /usr/hdp/current/hadoop-client/conf/core-site.xml
2016-04-17 06:08:11,668 - File['/usr/hdp/current/hadoop-client/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}
2016-04-17 06:08:11,687 - Can only link configs for HDP-2.3 and higher.
```

The title of the warning is "Falcon server install".
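One detail in both nodes' logs is worth checking against the "HDP 2.3.x" mentioned above: Ambari registers `Repository['HDP-2.2']` with a base_url ending in `2.2.8.0` and installs packages named `hadoop_2_2_*` and `falcon_2_2_*`. The last line on the 2nd node, "Can only link configs for HDP-2.3 and higher.", therefore reads like Ambari skipping a step meant only for 2.3+ stacks on a 2.2.8.0 stack. That is an inference from the log wording, not a confirmed diagnosis of the "Falcon server install" warning. The Python 3 sketch below illustrates the comparison; `stack_version` and `can_link_configs` are hypothetical helpers, and the `(2, 3)` cutoff is taken from the message text rather than from Ambari's source.

```python
import re

# base_url registered for Repository['HDP-2.2'] in both nodes' stdout logs.
HDP_BASE_URL = "http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.2.8.0"


def stack_version(base_url):
    """Parse the trailing dotted version of a repo URL, e.g. '2.2.8.0' -> (2, 2, 8, 0)."""
    match = re.search(r"/(\d+(?:\.\d+)+)/?$", base_url)
    if match is None:
        raise ValueError("no trailing version in URL: " + base_url)
    return tuple(int(part) for part in match.group(1).split("."))


def can_link_configs(version, minimum=(2, 3)):
    """Mirror of the condition worded in the log line ('HDP-2.3 and higher').

    Tuples compare element by element, so (2, 2, 8, 0) < (2, 3) <= (2, 3, 4, 0).
    """
    return version >= minimum


if __name__ == "__main__":
    version = stack_version(HDP_BASE_URL)
    print(version, "can link configs:", can_link_configs(version))
```

If the cluster was really meant to run HDP 2.3.x, the stack version and repository URLs selected in the Ambari install wizard are probably the first thing to compare against these log lines.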

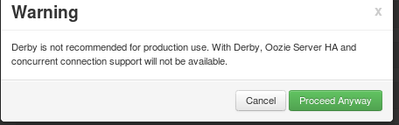

In the earlier "Configurations" step, I got this warning, which I didn't address because I was told that the DB can be changed after the cluster is deployed.

Could this be one of the reasons behind the failures?

Please share any thoughts on how to resolve this issue, and post any useful links if available.

Thanks in advance,

Karthik.

Created 04-17-2016 06:31 AM

The package "snappy-1.1.0-1.el6.x86_64" appears to be causing the conflict here. Uninstall it, and the installation should then succeed.

snappy-devel-1.0.5-1.el6.x86_64 (from HDP-UTILS-1.1.0.20):

- Requires: snappy(x86-64) = 1.0.5-1.el6, which comes from HDP-UTILS

- Installed: snappy-1.1.0-1.el6.x86_64 (@anaconda-RedHatEnterpriseLinux-201507020259.x86_64/6.7)
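Why yum cannot resolve this by itself: snappy-devel 1.0.5-1.el6 pins snappy with an exact `=` requirement, and the snappy that came from the RHEL 6.7 media is newer, so no upgrade can ever satisfy the pin; the installed 1.1.0 package has to be removed, as suggested above, so that 1.0.5-1.el6 from HDP-UTILS can take its place. The Python 3 sketch below shows the version ordering involved. `rpm_like_compare` is a hypothetical, simplified approximation of RPM's version comparison (no epochs, no `~`/`^` handling), not rpm's own implementation. One practical caution, independent of the sketch: `yum remove snappy` also removes any installed packages that depend on snappy, so checking `rpm -q --whatrequires snappy` first may be worthwhile.

```python
import re


def _segments(version):
    # Runs of digits or letters; separators such as '.', '-' and '_' are dropped.
    return re.findall(r"\d+|[A-Za-z]+", version)


def rpm_like_compare(a, b):
    """Simplified RPM-style comparison of version-release strings.

    Returns -1, 0 or 1. Numeric runs compare as integers, letter runs as strings,
    and a numeric run sorts above a letter run. Epochs and '~'/'^' are NOT handled,
    so this only approximates rpm's rpmvercmp.
    """
    sa, sb = _segments(a), _segments(b)
    for x, y in zip(sa, sb):
        if x.isdigit() != y.isdigit():
            return 1 if x.isdigit() else -1
        if x.isdigit():
            x, y = int(x), int(y)
        if x != y:
            return 1 if x > y else -1
    # Every shared segment is equal: the string with more segments is newer.
    return (len(sa) > len(sb)) - (len(sa) < len(sb))


if __name__ == "__main__":
    installed = "1.1.0-1.el6"  # snappy from the RHEL 6.7 installation media
    required = "1.0.5-1.el6"   # exact version pinned by snappy-devel from HDP-UTILS-1.1.0.20
    if rpm_like_compare(installed, required) > 0:
        print("Installed snappy is newer than the pinned version; an upgrade cannot fix this.")
```

Comparing numeric runs as integers matters here: a plain string comparison would wrongly rank "1.9" above "1.10".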

Created 04-18-2016 12:34 PM

What about the second node?