Error Nifi Cluster Setup: Attempted to register Leader Election for role 'Cluster Coordinator' but this role is already registered

Labels: Apache NiFi, Cloudera DataFlow (CDF)

Created 06-16-2022 04:11 AM

I am trying to set up a three-node NiFi cluster.

All of the hosts can ping one another.

I have tried everything I could find on the web, with no success.

Below is my configuration, starting with the master node.

Thanks.

MASTERNODE:

nifi.state.management.embedded.zookeeper.start=true

nifi.zookeeper.connect.string=masternode:2181,workernode02:2181,workernode03:2181

nifi.cluster.is.node=true

nifi.cluster.node.address=masternode

nifi.cluster.node.protocol.port=9991

nifi.cluster.node.protocol.threads=10

nifi.cluster.node.event.history.size=25

nifi.cluster.node.connection.timeout=5 sec

nifi.cluster.node.read.timeout=5 sec

nifi.cluster.firewall.file=

nifi.cluster.protocol.is.secure=false

nifi.cluster.load.balance.host=masternode

nifi.cluster.load.balance.port=6342

nifi.remote.input.host=masternode

nifi.remote.input.secure=false

nifi.remote.input.socket.port=10000

nifi.remote.input.http.enabled=true

nifi.remote.input.http.transaction.ttl=30 sec

nifi.web.http.host=masternode

nifi.web.http.port=9443

------------------------------------------------------

WORKERNODE 02:

nifi.state.management.embedded.zookeeper.start=true

nifi.zookeeper.connect.string=masternode:2181,workernode02:2181,workernode03:2181

nifi.cluster.is.node=true

nifi.cluster.node.address=workernode02

nifi.cluster.node.protocol.port=9991

nifi.cluster.node.protocol.threads=10

nifi.cluster.node.event.history.size=25

nifi.cluster.node.connection.timeout=5 sec

nifi.cluster.node.read.timeout=5 sec

nifi.cluster.firewall.file=

nifi.cluster.protocol.is.secure=false

nifi.cluster.load.balance.host=workernode02

nifi.cluster.load.balance.port=6342

nifi.remote.input.host=workernode02

nifi.remote.input.secure=false

nifi.remote.input.socket.port=10000

nifi.remote.input.http.enabled=true

nifi.remote.input.http.transaction.ttl=30 sec

nifi.web.http.host=workernode02

nifi.web.http.port=9443

-----------------------------------------

WORKERNODE 03: same as above except the host.

------------------------------------------------------------

state-management.xml:

<cluster-provider>

<id>zk-provider</id>

<class>org.apache.nifi.controller.state.providers.zookeeper.ZooKeeperStateProvider</class>

<property name="Connect String">masternode:2181,workernode02:2181,workernode03:2181</property>

<property name="Root Node">/nifi</property>

<property name="Session Timeout">10 seconds</property>

<property name="Access Control">Open</property>

</cluster-provider>

-------------------------------------------------------

zookeeper.properties:

server.1=masternode:2888:3888;2181

server.2=workernode02:2888:3888;2181

server.3=workernode03:2888:3888;2181
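
For readers following along, each `server.N` entry above packs four values: the host, the quorum (peer) port, the leader-election port and, after the semicolon, the client port. The following is only a minimal Python sketch, assuming every entry has exactly the `host:peer:election;client` shape shown in this post. `ZkServer` and `parse_server_line` are hypothetical names used for illustration, not part of NiFi or ZooKeeper.

```python
import re
from typing import NamedTuple


class ZkServer(NamedTuple):
    server_id: int
    host: str
    peer_port: int      # quorum traffic between ZooKeeper servers
    election_port: int  # leader election between ZooKeeper servers
    client_port: int    # what NiFi (the ZooKeeper client) connects to


# Assumed shape: server.N=host:peer:election;client
_SERVER_RE = re.compile(r"^server\.(\d+)\s*=\s*([^:\s]+):(\d+):(\d+);(\d+)$")


def parse_server_line(line: str) -> ZkServer:
    """Parse one server.N entry in the format used in this thread."""
    match = _SERVER_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a server.N entry in the assumed format: {line!r}")
    sid, host, peer, election, client = match.groups()
    return ZkServer(int(sid), host, int(peer), int(election), int(client))


lines = [
    "server.1=masternode:2888:3888;2181",
    "server.2=workernode02:2888:3888;2181",
    "server.3=workernode03:2888:3888;2181",
]
servers = [parse_server_line(line) for line in lines]

# The client ports should line up with nifi.zookeeper.connect.string.
connect_string = ",".join(f"{s.host}:{s.client_port}" for s in servers)
print(connect_string)  # masternode:2181,workernode02:2181,workernode03:2181
```

The derived string matches the `nifi.zookeeper.connect.string` and the `Connect String` posted above, so the three files agree with each other on hosts and client port.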

------------------------------------------

ERROR:

2022-06-16 11:50:03,954 WARN [main] o.a.nifi.controller.StandardFlowService There is currently no Cluster Coordinator. This often happens upon restart of NiFi when running an embedded ZooKeeper. Will register this node to become the active Cluster Coordinator and will attempt to connect to cluster again

2022-06-16 11:50:03,954 INFO [main] o.a.n.c.l.e.CuratorLeaderElectionManager CuratorLeaderElectionManager[stopped=false] Attempted to register Leader Election for role 'Cluster Coordinator' but this role is already registered

2022-06-16 11:50:05,119 WARN [Heartbeat Monitor Thread-1] o.a.n.c.l.e.CuratorLeaderElectionManager Unable to determine leader for role 'Cluster Coordinator'; returning null

org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /nifi/leaders/Cluster Coordinator

at org.apache.zookeeper.KeeperException.create(KeeperException.java:102)

at org.apache.zookeeper.KeeperException.create(KeeperException.java:54)

at org.apache.zookeeper.ZooKeeper.getChildren(ZooKeeper.java:2707)

at org.apache.curator.framework.imps.GetChildrenBuilderImpl$3.call(GetChildrenBuilderImpl.java:242)

at org.apache.curator.framework.imps.GetChildrenBuilderImpl$3.call(GetChildrenBuilderImpl.java:231)

at org.apache.curator.RetryLoop.callWithRetry(RetryLoop.java:93)

at org.apache.curator.framework.imps.GetChildrenBuilderImpl.pathInForeground(GetChildrenBuilderImpl.java:228)

at org.apache.curator.framework.imps.GetChildrenBuilderImpl.forPath(GetChildrenBuilderImpl.java:219)

at org.apache.curator.framework.imps.GetChildrenBuilderImpl.forPath(GetChildrenBuilderImpl.java:41)

at org.apache.curator.framework.recipes.locks.LockInternals.getSortedChildren(LockInternals.java:154)

at org.apache.curator.framework.recipes.locks.LockInternals.getParticipantNodes(LockInternals.java:134)

at org.apache.curator.framework.recipes.locks.InterProcessMutex.getParticipantNodes(InterProcessMutex.java:170)

at org.apache.curator.framework.recipes.leader.LeaderSelector.getLeader(LeaderSelector.java:337)

at org.apache.nifi.controller.leader.election.CuratorLeaderElectionManager.getLeader(CuratorLeaderElectionManager.java:281)

at org.apache.nifi.controller.leader.election.CuratorLeaderElectionManager$ElectionListener.verifyLeader(CuratorLeaderElectionManager.java:571)

at org.apache.nifi.controller.leader.election.CuratorLeaderElectionManager$ElectionListener.isLeader(CuratorLeaderElectionManager.java:525)

at org.apache.nifi.controller.leader.election.CuratorLeaderElectionManager$LeaderRole.isLeader(CuratorLeaderElectionManager.java:466)

at org.apache.nifi.controller.leader.election.CuratorLeaderElectionManager.isLeader(CuratorLeaderElectionManager.java:262)

at org.apache.nifi.cluster.coordination.node.NodeClusterCoordinator.isActiveClusterCoordinator(NodeClusterCoordinator.java:824)

at org.apache.nifi.cluster.coordination.heartbeat.AbstractHeartbeatMonitor.monitorHeartbeats(AbstractHeartbeatMonitor.java:132)

at org.apache.nifi.cluster.coordination.heartbeat.AbstractHeartbeatMonitor$1.run(AbstractHeartbeatMonitor.java:84)

at org.apache.nifi.engine.FlowEngine$2.run(FlowEngine.java:110)

at java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:515)

at java.base/java.util.concurrent.FutureTask.runAndReset(FutureTask.java:305)

at java.base/java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:305)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:834)

2022-06-16 11:50:11,732 INFO [Cleanup Archive for default] o.a.n.c.repository.FileSystemRepository Successfully deleted 0 files (0 bytes) from archive

2022-06-16 11:50:11,733 INFO [Cleanup Archive for default] o.a.n.c.repository.FileSystemRepository Archive cleanup completed for container default; will now allow writing to this container. Bytes used = 10.06 GB, bytes free = 7.63 GB, capacity = 17.7 GB
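
The `ConnectionLoss` in the trace above means the NiFi node could not keep a session with ZooKeeper, so one way to narrow things down is to ask each ZooKeeper server directly whether it is serving. Below is a rough Python sketch that sends the `srvr` four-letter command to the client port and reads the `Mode:` line from the reply. It assumes `srvr` is permitted by the server's four-letter-word whitelist (it is by default in recent ZooKeeper releases, as far as I know). `zk_srvr` and `zk_mode` are hypothetical helper names.

```python
import socket
from typing import Optional


def zk_srvr(host: str, port: int = 2181, timeout: float = 3.0) -> str:
    """Send ZooKeeper's 'srvr' command and return the raw text reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b"srvr")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def zk_mode(reply: str) -> Optional[str]:
    """Return the value of the 'Mode:' line (e.g. leader/follower), if any."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None
```

Calling `zk_mode(zk_srvr("masternode"))` from each of the three hosts, and repeating it for `workernode02` and `workernode03`, should show one leader and two followers in a healthy ensemble. A connection error (any `OSError`) or a reply without a `Mode:` line points at the network or at a quorum that never formed, rather than at NiFi itself.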

Created 06-16-2022 10:29 PM

Thanks to everyone, especially @SAMSAL.

I found out that the firewall was blocking communication between the nodes on the required ports.

I tried adding those ports to the firewall, without much success. When I tried to reach the NiFi GUI, I ran into this error: java.net.NoRouteToHostException: No route to host (Host unreachable).

So I disabled the firewall, and everything worked perfectly.

FIRST METHOD: NO SUCCESS

firewall-cmd --zone=public --permanent --add-port 9991/tcp

firewall-cmd --zone=public --permanent --add-port 2888/tcp

firewall-cmd --zone=public --permanent --add-port 3888/tcp

firewall-cmd --zone=public --permanent --add-port 2181/tcp

firewall-cmd --reload

SECOND METHOD: SUCCESS

systemctl stop firewalld
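
A possible reason the first method fell short: the four `firewall-cmd` lines open 9991, 2888, 3888 and 2181, but the nifi.properties posted at the top of this thread also uses 9443 (web UI), 6342 (load balancing) and 10000 (site-to-site). firewalld typically rejects blocked traffic in a way that Java reports as `NoRouteToHostException`, which would fit the GUI error on 9443. This is a guess from the posted files, not something confirmed in the thread. The Python sketch below just compares the two port lists and offers a plain TCP check, since ping only proves ICMP reachability. `missing_ports` and `can_connect` are hypothetical helper names.

```python
import socket

# Ports copied from the nifi.properties and zookeeper.properties in this thread.
PORTS_IN_CONFIG = {
    "nifi.web.http.port": 9443,
    "nifi.cluster.node.protocol.port": 9991,
    "nifi.cluster.load.balance.port": 6342,
    "nifi.remote.input.socket.port": 10000,
    "zookeeper client port": 2181,
    "zookeeper quorum port": 2888,
    "zookeeper election port": 3888,
}

# Ports opened by the firewall-cmd lines under "FIRST METHOD".
OPENED = {9991, 2888, 3888, 2181}


def missing_ports(configured: dict, opened: set) -> dict:
    """Return the configured ports that the firewall rules do not cover."""
    return {name: port for name, port in configured.items() if port not in opened}


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


print(sorted(missing_ports(PORTS_IN_CONFIG, OPENED).values()))  # [6342, 9443, 10000]
```

With the firewall re-enabled, running `can_connect("workernode02", 9443)` and similar checks from another node would show which ports still need an `--add-port` rule, which is a narrower fix than leaving firewalld stopped.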

Created 06-16-2022 06:58 AM

Not sure if this is related, but if you are setting up a secure cluster, the property nifi.cluster.protocol.is.secure should be true. If not, just make sure you follow the steps mentioned in the following URL and that you have not missed anything:

Created 06-16-2022 07:03 AM

Thanks, sir.

I'm not setting up a secured one.

In fact, I have disabled SELinux.

Created 06-16-2022 07:07 AM

Have you added the nodes in authorizers.xml under userGroupProvider and AccessPolicyProvider?

Created 06-16-2022 08:40 AM

No sir,

I have not done that.

Created 06-16-2022 09:03 AM

I have added these. I am still having the same error.

AccessPolicyProvider

<property name="Node Identity 1">cn=masternode</property>

<property name="Node Identity 2">cn=workernode02</property>

<property name="Node Identity 3">cn=workernode03</property>

userGroupProvider

<property name="Initial User Identity 1">cn=masternode</property>

<property name="Initial User Identity 2">cn=workersnode02</property>

<property name="Initial User Identity 3">cn=workersnode03</property>
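
A side note on the values as posted: the node identities use `workernode02`/`workernode03`, while the initial user identities use `workersnode02`/`workersnode03`. As far as I know, authorizers.xml only comes into play on a secured NiFi, so this is unlikely to be behind the ZooKeeper error in this unsecured setup, but on a secured cluster the two lists would be expected to match exactly. A small Python sketch that flags the difference, with the lists copied from the post above (`identity_mismatches` is a hypothetical helper name):

```python
# Values copied from the authorizers.xml snippets in the post above.
node_identities = ["cn=masternode", "cn=workernode02", "cn=workernode03"]
initial_user_identities = ["cn=masternode", "cn=workersnode02", "cn=workersnode03"]


def identity_mismatches(nodes, users):
    """Return (identities only in nodes, identities only in users), sorted."""
    node_set, user_set = set(nodes), set(users)
    return sorted(node_set - user_set), sorted(user_set - node_set)


only_nodes, only_users = identity_mismatches(node_identities, initial_user_identities)
print(only_nodes)  # ['cn=workernode02', 'cn=workernode03']
print(only_users)  # ['cn=workersnode02', 'cn=workersnode03']
```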

Created 06-16-2022 09:06 AM

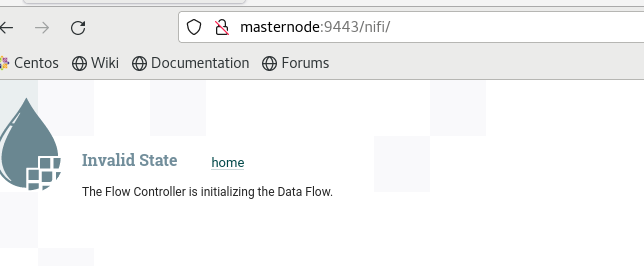

I cannot log in because of the above issue.

Created 06-16-2022 09:14 AM

The screenshot is telling you that it is still initializing the data flow, so give it some time and refresh.

Created 06-16-2022 09:37 AM

I did give it some time before, though.

This time I will leave it for several minutes and give you feedback.

Thank you, sir.

Created 06-16-2022 12:39 PM

It has been more than 2 hours now, and I still get the same "Invalid State" at the login.

Meanwhile, the log trace is still showing:

CuratorLeaderElectionManager Unable to determine leader for role 'Cluster Coordinator'; returning null

org.apache.zookeeper.KeeperException$ConnectionLossException: KeeperErrorCode = ConnectionLoss for /nifi/leaders/Cluster Coordinator