Error spark job input is too large to fit in a byte array

Labels: Apache Spark

Created 05-18-2022 07:35 PM

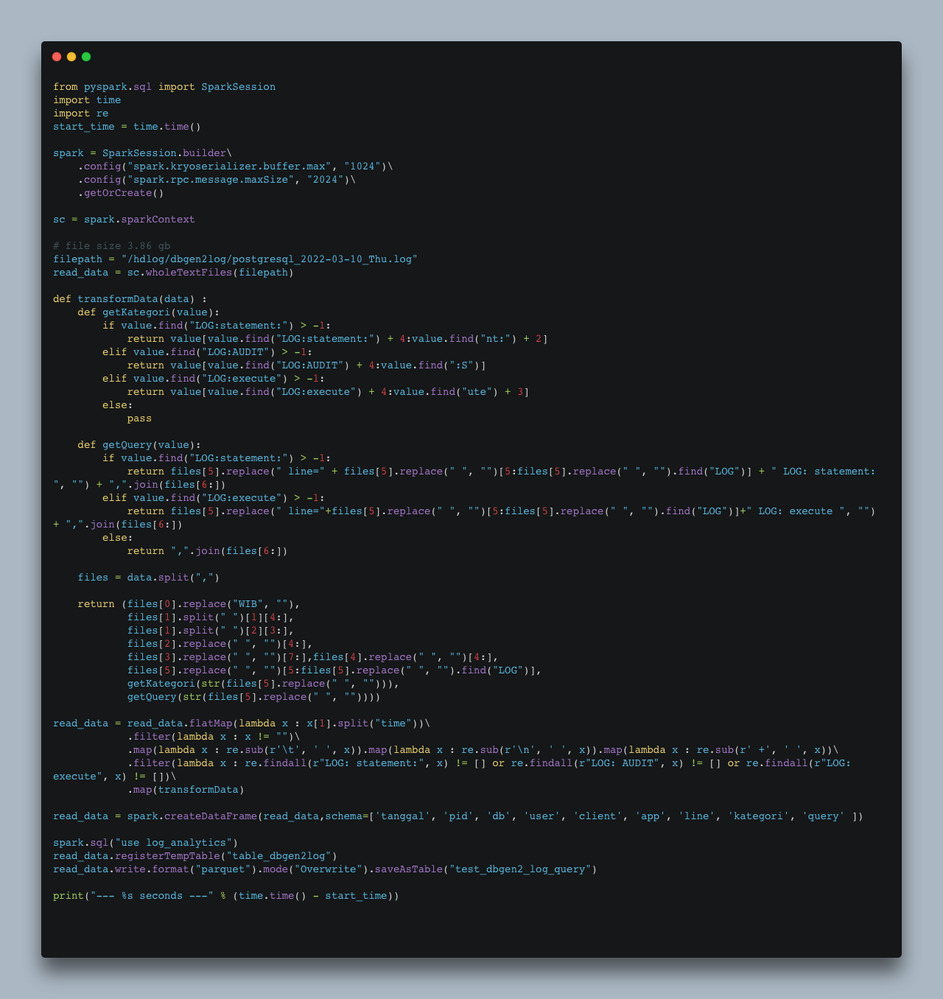

I want to process a 20 GB log file and save it to a Hive table. This is my code:

spark = SparkSession \

Created 06-22-2022 02:45 AM

Hi @Yosieam, using the "collect" method is not recommended because it brings the data to the Spark driver, so the whole dataset has to fit into the driver's memory. Please rewrite your code to avoid the "collect" method.
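
A minimal sketch of what avoiding "collect" could look like, assuming PySpark with Hive support is available. The input path, the table name "logs_parsed", the column names, and the parse_line helper (which assumes a space-separated "level message" layout) are all hypothetical, since the original code is not shown in full. The idea is that parsing runs on the executors and the result is written straight from the DataFrame, so no data is pulled back to the driver.

```python
def parse_line(line):
    """Hypothetical parser: split a log line into (level, message)."""
    level, _, message = line.partition(" ")
    return (level, message)


def main(input_path="hdfs:///path/to/logfile.log", table="logs_parsed"):
    # Imported here so parse_line can be tried without a Spark installation.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .appName("log-to-hive")
        .enableHiveSupport()
        .getOrCreate()
    )

    # textFile splits the input into partitions that executors read in parallel.
    lines = spark.sparkContext.textFile(input_path)

    # map runs on the executors; nothing is collected to the driver.
    rows = lines.map(parse_line)

    # Hypothetical column names for the two parsed fields.
    df = spark.createDataFrame(rows, ["level", "message"])

    # Each executor writes its own partitions to the table.
    df.write.mode("append").saveAsTable(table)

    spark.stop()

# To run on a cluster, call main() at the end of the script
# that is passed to spark-submit.
```

On a small sample, parse_line("ERROR disk full") gives the tuple ("ERROR", "disk full"); the same function is applied per line on the cluster.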

Created 06-22-2022 07:34 PM

I want to collect them because I want to join them into one string, split that string into an array, and store it as an RDD. My log needs to be split into several pieces using a separator that I provide.

Created 06-22-2022 08:59 PM

I have changed my code so that it no longer uses collect. I now use wholeTextFiles() and flatMap() to split the string, but I get a different error.

Created 06-23-2022 01:47 AM

wholeTextFiles is also not a scalable solution.

https://spark.apache.org/docs/3.1.3/api/python/reference/api/pyspark.SparkContext.wholeTextFiles.htm...

"Small files are preferred, as each file will be loaded fully in memory."
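
One possible alternative to wholeTextFiles, sketched under assumptions: if the log records are divided by a custom separator, the text data source in Spark 3.x can be asked to split on it through its "lineSep" option, so each executor reads only its own part of the file. The separator "|||", the path argument, and the helper names are hypothetical, because the thread does not state the real separator. split_records is only a plain-Python illustration of the intended result on a small string.

```python
SEPARATOR = "|||"  # hypothetical; replace with the real record separator


def split_records(text, sep=SEPARATOR):
    """Plain-Python reference of the split, for small samples only."""
    return [piece for piece in text.split(sep) if piece]


def read_records(spark, path, sep=SEPARATOR):
    """Return a DataFrame with one row per record in a 'value' column.

    spark is an existing SparkSession; the split is done by Spark itself
    while reading, so the file is never held as one string.
    """
    # lineSep replaces the default newline separator of the text source.
    return spark.read.option("lineSep", sep).text(path)
```

Each individual record still has to fit in memory, so this only helps when the separator occurs often enough to keep records small.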

Created 06-24-2022 12:32 AM

Now I am facing this error:

Error Exception in thread "dispatcher-event-loop-40" java.lang.OutOfMemoryError: Requested array size exceeds VM limit

I have been stuck on this for about a month. Every time I run the job it gets stuck at this point. I have increased the driver and executor memory, but it made no difference.

Created 06-24-2022 12:36 AM

Hi,

The "Requested array size exceeds VM limit" error means that your code tries to allocate an array with more than 2^31-1 elements (about 2.1 billion), which is the maximum size of an array in Java. You cannot solve this by adding more memory. You need to split the work between the executors instead of processing the data in a single JVM (on the driver side).
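
The arithmetic behind this limit can be checked quickly. The sketch below is plain Python, not Spark; the 20 GB figure comes from the question, and reading it as 20 × 1024³ bytes is an assumption.

```python
# Largest possible Java array length (Integer.MAX_VALUE).
JAVA_MAX_ARRAY_LENGTH = 2**31 - 1

# Assumed: the 20 GB log file read as 20 GiB.
file_size_bytes = 20 * 1024**3


def fits_in_one_byte_array(size_bytes):
    """True if size_bytes bytes could be held in a single Java byte array."""
    return size_bytes <= JAVA_MAX_ARRAY_LENGTH


print(JAVA_MAX_ARRAY_LENGTH)
print(fits_in_one_byte_array(file_size_bytes))
print(file_size_bytes / JAVA_MAX_ARRAY_LENGTH)
```

The file is roughly ten times larger than the biggest array a JVM can hold, which is why no memory setting helps when the whole input ends up in one string or byte array.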

Created 06-24-2022 12:54 AM

Can you tell me where I should check whether the data is being processed in a single JVM? The purpose of my Spark job is to write the result to a Hive table, and the OOM occurs when the job tries to write all the data into the Hive table.

Created 08-31-2022 09:20 PM

Hi @Yosieam,

Thanks for sharing the code. You did not share the spark-submit/pyspark command. Please check how much executor and driver memory is passed to spark-submit. Could you also confirm whether the file is on the local file system or on HDFS?