
Error starting Zeppelin Notebook using HDP 2.5

- Labels: Apache Zeppelin

Created 12-01-2017 01:12 AM


Hi, I am having trouble starting Zeppelin Notebook from Ambari. Below is the standard error output:

stderr: /var/lib/ambari-agent/data/errors-3626.txt

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 467, in <module>
    Master().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 329, in execute
    method(env)
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 865, in restart
    self.start(env, upgrade_type=upgrade_type)
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 223, in start
    self.update_kerberos_properties()
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 273, in update_kerberos_properties
    config_data = self.get_interpreter_settings()
  File "/var/lib/ambari-agent/cache/common-services/ZEPPELIN/0.6.0.2.5/package/scripts/master.py", line 248, in get_interpreter_settings
    config_data = json.loads(config_content)
  File "/usr/lib64/python2.7/json/__init__.py", line 338, in loads
    return _default_decoder.decode(s)
  File "/usr/lib64/python2.7/json/decoder.py", line 366, in decode
    obj, end = self.raw_decode(s, idx=_w(s, 0).end())
  File "/usr/lib64/python2.7/json/decoder.py", line 382, in raw_decode
    obj, end = self.scan_once(s, idx)
ValueError: Unterminated string starting at: line 119 column 9 (char 4087
```
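The last frames show Ambari's `master.py` passing the interpreter settings file to `json.loads`, and the decoder stopping at an unterminated string. As a minimal Python 3 sketch (the helper name `locate_json_error` is hypothetical), the same error appears whenever a JSON document is cut off in the middle of a string, and the reported line and column point at the opening quote of that string. The agent above runs Python 2.7, where this surfaces as a plain `ValueError`; `json.JSONDecodeError` (Python 3.5+) is a subclass of it.

```python
import json
from typing import Optional, Tuple


def locate_json_error(text: str) -> Optional[Tuple[int, int, str]]:
    """Return (line, column, message) of the first decode error, or None if valid."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        # lineno/colno are 1-based; msg is the message without the position suffix
        return err.lineno, err.colno, err.msg
    return None


# A well-formed document decodes cleanly.
print(locate_json_error('{"authInfo": {}}'))  # None

# A document cut off inside a key reproduces the kind of error in the traceback.
truncated = '{\n  "properties": {\n    "spark.yarn.keytab": "",\n    "spark.'
print(locate_json_error(truncated))  # (4, 5, 'Unterminated string starting at')
```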


Created 12-01-2017 03:58 AM


We can see that the exception causing the HTTP 503 (Service Unavailable) error is the following:

```
java.lang.IllegalStateException: Failed to delete temp dir /usr/hdp/2.5.3.0-37/zeppelin/webapps
    at org.eclipse.jetty.webapp.WebInfConfiguration.configureTempDirectory(WebInfConfiguration.java:372)
    at org.eclipse.jetty.webapp.WebInfConfiguration.resolveTempDirectory(WebInfConfiguration.java:260)
    at org.eclipse.jetty.webapp.WebInfConfiguration.preConfigure(WebInfConfiguration.java:69)
    at org.eclipse.jetty.webapp.WebAppContext.preConfigure(WebAppContext.java:468)
    at org.eclipse.jetty.webapp.WebAppContext.doStart(WebAppContext.java:504)
```

So please check the ownership and permissions of "/usr/hdp/2.5.3.0-37/zeppelin"; it should be owned by the "zeppelin" user. If it is not, reset the ownership and permissions:

```
# chown -R zeppelin:hadoop /usr/hdp/2.5.3.0-37/zeppelin/webapps
# chmod -R 755 /usr/hdp/2.5.3.0-37/zeppelin/webapps
```

For more detailed information, please refer to:

https://community.hortonworks.com/articles/81471/zeppelin-ui-returns-503-error.html

Created 12-01-2017 04:00 AM


Additionally, please check and share the following:

1. Does the following file exist, and is its size non-zero (i.e. the file is not empty or corrupted)?

# ls -lart /etc/zeppelin/conf/interpreter.json

2. Please check that this file is owned by "zeppelin:zeppelin".

3. Please share the content of the following file.

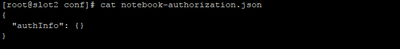

# cat /etc/zeppelin/conf/notebook-authorization.json
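Checks 1 and 2 can also be run in one go with a Python 3 sketch like the one below. Note that a non-zero size alone does not rule out corruption: the interpreter.json reported later in this thread is 4096 bytes yet fails to parse, so the sketch also tries to load the file as JSON. The function names and the default `zeppelin:zeppelin` owner (taken from check 2) are assumptions for illustration.

```python
import grp
import json
import os
import pwd
from typing import List, Tuple


def owner_and_group(path: str) -> Tuple[str, str]:
    """Return (user, group) names for path, falling back to numeric ids."""
    st = os.stat(path)
    try:
        user = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:
        user = str(st.st_uid)
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:
        group = str(st.st_gid)
    return user, group


def check_config_file(path: str, user: str = "zeppelin", group: str = "zeppelin") -> List[str]:
    """Return the problems found for one Zeppelin config file (empty list means OK)."""
    if not os.path.isfile(path):
        return [f"{path}: does not exist"]
    problems = []
    if os.path.getsize(path) == 0:
        problems.append(f"{path}: file is empty")
    else:
        # A non-zero size is not enough: the content must also parse as JSON.
        try:
            with open(path, encoding="utf-8") as fh:
                json.load(fh)
        except ValueError as err:  # covers JSONDecodeError and UnicodeDecodeError
            problems.append(f"{path}: invalid JSON ({err})")
    actual = owner_and_group(path)
    if actual != (user, group):
        problems.append(f"{path}: owned by {actual[0]}:{actual[1]}, expected {user}:{group}")
    return problems


if __name__ == "__main__":
    for name in ("interpreter.json", "notebook-authorization.json"):
        for problem in check_config_file(os.path.join("/etc/zeppelin/conf", name)):
            print(problem)
```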

For a quick test, you can take a backup of notebook-authorization.json and replace its content with the default:

```
{
  "authInfo": {}
}
```
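The quick test of backing up notebook-authorization.json and resetting it to `{"authInfo": {}}` could be scripted as in this Python 3 sketch. The function name `reset_notebook_authorization` and the `.bak.<timestamp>` backup naming are hypothetical choices for illustration.

```python
import json
import shutil
import time


def reset_notebook_authorization(path: str) -> str:
    """Back up path next to itself, then overwrite it with {"authInfo": {}}.

    Returns the path of the backup copy.
    """
    backup = f"{path}.bak.{time.strftime('%Y%m%d%H%M%S')}"
    shutil.copy2(path, backup)  # copy2 also keeps timestamps and permission bits
    # Mode "w" truncates the existing file in place, so its owner is preserved.
    with open(path, "w", encoding="utf-8") as fh:
        json.dump({"authInfo": {}}, fh, indent=2)
        fh.write("\n")
    return backup
```

Rewriting the file in place, rather than deleting and recreating it, keeps the original owner (zeppelin) even when the script runs as root; only the backup copy ends up owned by the user running it.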

Created on 12-01-2017 04:47 AM - edited 08-17-2019 08:30 PM


Hi Jay,

1 and 2: Yes. It's not corrupted and the ownership is zeppelin:zeppelin.

-rw-r--r--. 1 zeppelin zeppelin 4096 Nov 26 23:30 /etc/zeppelin/conf/interpreter.json

3. Below is the file content before I made the backup:

Created 12-01-2017 05:26 AM


Hi Jay, I ran the commands, but the Zeppelin UI is still giving error 503.

Created 12-01-2017 06:00 AM


Can you please attach your /etc/zeppelin/conf/interpreter.json file.

Created 12-01-2017 06:18 AM


Hi Aditya, here is my /etc/zeppelin/conf/interpreter.json

```
{
  "interpreterSettings": {
    "2CZ3ASUMC": {
      "id": "2CZ3ASUMC",
      "name": "python",
      "group": "python",
      "properties": {
        "zeppelin.python": "/usr/lib/miniconda2/bin/python",
        "zeppelin.python.maxResult": "1000000000",
        "zeppelin.interpreter.localRepo": "/usr/hdp/current/zeppelin-server/local-repo/2CZ3ASUMC",
        "zeppelin.python.useIPython": "true",
        "zeppelin.ipython.launch.timeout": "30000"
      },
      "interpreterGroup": [
        {
          "class": "org.apache.zeppelin.python.PythonInterpreter",
          "name": "python"
        }
      ],
      "dependencies": [],
      "option": {
        "remote": true,
        "perNoteSession": false,
        "perNoteProcess": false,
        "isExistingProcess": false,
        "isUserImpersonate": false
      }
    },
    "2CKEKWY8Z": {
      "id": "2CKEKWY8Z",
      "name": "angular",
      "group": "angular",
      "properties": {},
      "interpreterGroup": [
        {
          "class": "org.apache.zeppelin.angular.AngularInterpreter",
          "name": "angular"
        }
      ],
      "dependencies": [],
      "option": {
        "remote": true,
        "perNoteSession": false,
        "perNoteProcess": false,
        "isExistingProcess": false,
        "port": "-1",
        "isUserImpersonate": false
      }
    },
    "2CK8A9MEG": {
      "id": "2CK8A9MEG",
      "name": "jdbc",
      "group": "jdbc",
      "properties": {
        "phoenix.user": "phoenixuser",
        "hive.url": "jdbc:hive2://slot4:2181,slot2:2181,slot3:2181/;serviceDiscoveryMode\u003dzooKeeper;zooKeeperNamespace\u003dhiveserver2",
        "default.driver": "org.postgresql.Driver",
        "phoenix.driver": "org.apache.phoenix.jdbc.PhoenixDriver",
        "hive.user": "hive",
        "psql.password": "",
        "psql.user": "phoenixuser",
        "psql.url": "jdbc:postgresql://localhost:5432/",
        "default.user": "gpadmin",
        "phoenix.hbase.client.retries.number": "1",
        "phoenix.url": "jdbc:phoenix:slot4,slot2,slot3:/hbase-unsecure",
        "tajo.url": "jdbc:tajo://localhost:26002/default",
        "tajo.driver": "org.apache.tajo.jdbc.TajoDriver",
        "psql.driver": "org.postgresql.Driver",
        "default.password": "",
        "zeppelin.interpreter.localRepo": "/usr/hdp/current/zeppelin-server/local-repo/2CK8A9MEG",
        "zeppelin.jdbc.auth.type": "SIMPLE",
        "hive.proxy.user.property": "hive.server2.proxy.user",
        "hive.password": "",
        "zeppelin.jdbc.concurrent.use": "true",
        "hive.driver": "org.apache.hive.jdbc.HiveDriver",
        "zeppelin.jdbc.keytab.location": "",
        "common.max_count": "1000",
        "phoenix.password": "",
        "zeppelin.jdbc.principal": "",
        "zeppelin.jdbc.concurrent.max_connection": "10",
        "default.url": "jdbc:postgresql://localhost:5432/"
      },
      "interpreterGroup": [
        {
          "class": "org.apache.zeppelin.jdbc.JDBCInterpreter",
          "name": "sql"
        }
      ],
      "dependencies": [],
      "option": {
        "remote": true,
        "perNoteSession": false,
        "perNoteProcess": false,
        "isExistingProcess": false,
        "port": "-1",
        "isUserImpersonate": false
      }
    },
    "2CYSZ9Q7Q": {
      "id": "2CYSZ9Q7Q",
      "name": "spark",
      "group": "spark",
      "properties": {
        "spark.cores.max": "",
        "zeppelin.spark.printREPLOutput": "true",
        "master": "local[*]",
        "zeppelin.spark.maxResult": "1000",
        "zeppelin.dep.localrepo": "local-repo",
        "spark.app.name": "Zeppelin",
        "spark.executor.memory": "",
        "zeppelin.spark.sql.stacktrace": "false",
        "zeppelin.spark.importImplicit": "true",
        "zeppelin.spark.useHiveContext": "true",
        "zeppelin.interpreter.localRepo": "/usr/hdp/current/zeppelin-server/local-repo/2CYSZ9Q7Q",
        "zeppelin.spark.concurrentSQL": "false",
        "args": "",
        "zeppelin.pyspark.python": "/usr/lib/miniconda2/bin/python",
        "spark.yarn.keytab": "",
```

Created 12-01-2017 06:23 AM


You can see that the JSON is corrupted.

1) Log in to Ambari -> Hosts -> select the Zeppelin host -> Refresh Client configs

2) Make sure that the JSON is valid

3) Restart Zeppelin

(or)

Try moving the file to a backup file, then restart Zeppelin and check whether it creates a new file.
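Step 2 and the alternative above (check that the JSON is valid, otherwise move the file aside so Zeppelin can write a fresh one on restart) might look like the following Python 3 sketch. The function name `move_aside_if_invalid` and the backup naming are hypothetical; the restart itself is still done from Ambari, and the original poster reports that moving the file to a backup worked in this case.

```python
import json
import os
import time
from typing import Optional


def move_aside_if_invalid(path: str) -> Optional[str]:
    """Rename path to a timestamped backup if it is not valid JSON.

    Returns the backup path, or None when the file parses and is left untouched.
    """
    try:
        with open(path, encoding="utf-8") as fh:
            json.load(fh)
        return None  # valid JSON: nothing to do
    except ValueError:
        pass  # JSONDecodeError (or undecodable bytes): move it aside below
    backup = f"{path}.bak.{time.strftime('%Y%m%d%H%M%S')}"
    if os.path.exists(backup):
        raise FileExistsError(backup)  # never overwrite an earlier backup
    os.rename(path, backup)
    return backup


if __name__ == "__main__":
    target = "/etc/zeppelin/conf/interpreter.json"  # file discussed in this thread
    if os.path.isfile(target):
        print(move_aside_if_invalid(target))
```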

Created 12-01-2017 07:12 AM


Thank you Aditya, it worked. I made a backup file. 🙂