I am trying to copy CSV files from a local directory into a SQL Server database running on my local machine, using Apache NiFi.

I am new to the tool and have spent a few days searching online and building my flow. I managed to connect to both the source and the destination, but I still cannot populate the database because I get the following error: "None of the fields in the record map to the columns defined by the tablename table."
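
To make the error concrete, here is a rough Python approximation of the kind of check that produces it. This is not NiFi's code. It assumes that with the "Translate Field Names" property enabled, record field names and table column names are compared after upper-casing and removing underscores, and that with it disabled they must match exactly. The column names are hypothetical.

```python
# Approximation only, NOT NiFi's source. Assumption: "Translate Field Names" = true
# compares names upper-cased with underscores removed; false requires an exact match.


def normalize(name: str, translate: bool = True) -> str:
    """Apply the assumed name translation used for matching."""
    return name.upper().replace("_", "") if translate else name


def match_fields(record_fields, table_columns, translate=True):
    """Return {record_field: table_column} for every field that finds a column."""
    columns = {normalize(col, translate): col for col in table_columns}
    return {
        field: columns[normalize(field, translate)]
        for field in record_fields
        if normalize(field, translate) in columns
    }


def check_mapping(record_fields, table_columns, translate=True):
    """Raise an error like the one in the question when nothing matches."""
    mapping = match_fields(record_fields, table_columns, translate)
    if not mapping:
        raise ValueError(
            "None of the fields in the record map to the columns defined by the table"
        )
    return mapping
```

For example, `match_fields(["ID", "FirstName"], ["id", "first_name"])` maps both fields, whereas a header that was read as one single field, such as `"id;first_name;city"`, maps to nothing and would trigger the error.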

I have been struggling with this for a while and have not been able to find a solution online.

Any hint would be highly appreciated.

Here are further details.

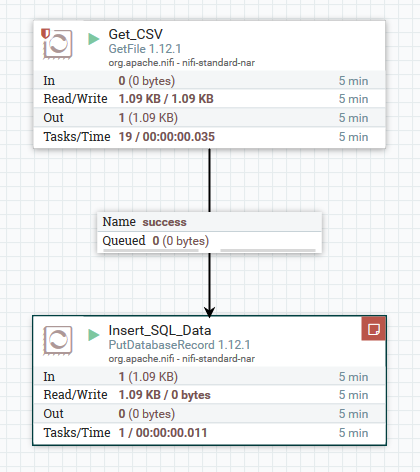

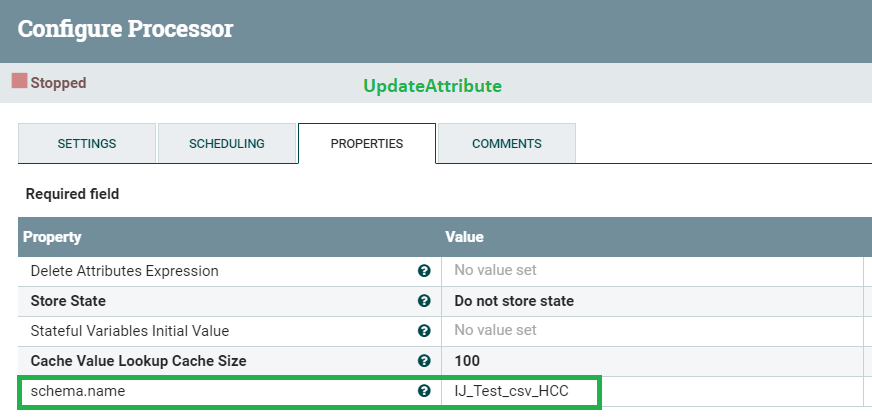

I have built a simple flow using the GetFile and PutDatabaseRecord processors.

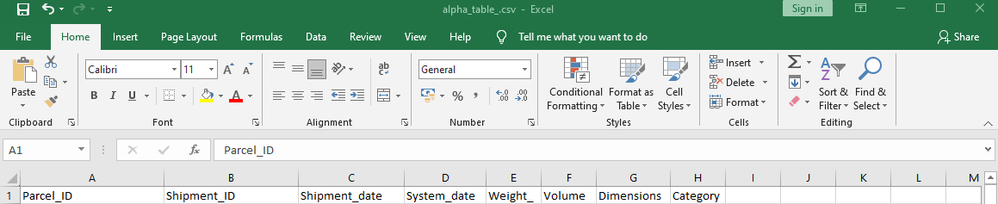

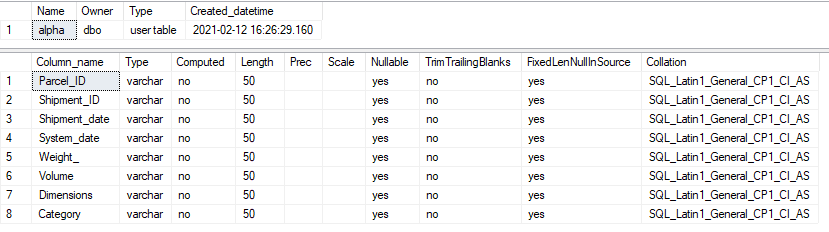

My input is a simple table with 8 columns.

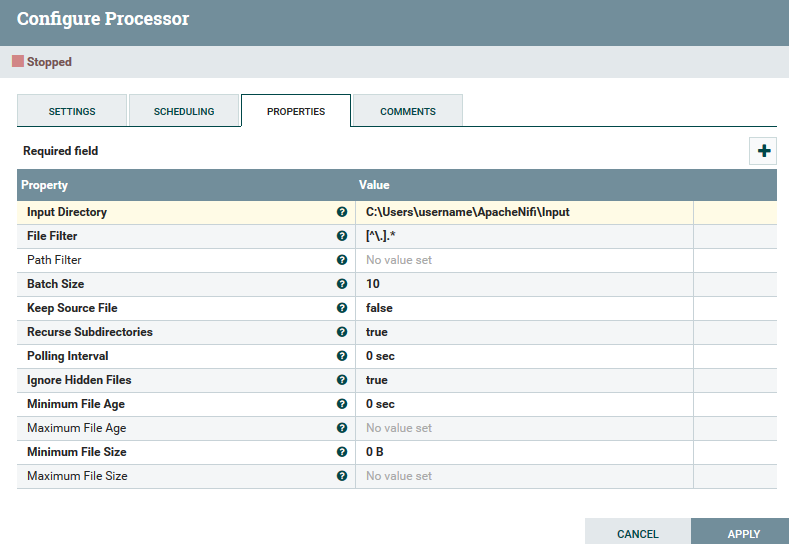

My configuration for the GetCSV processor is shown here (I added the input directory and left everything else at the defaults).

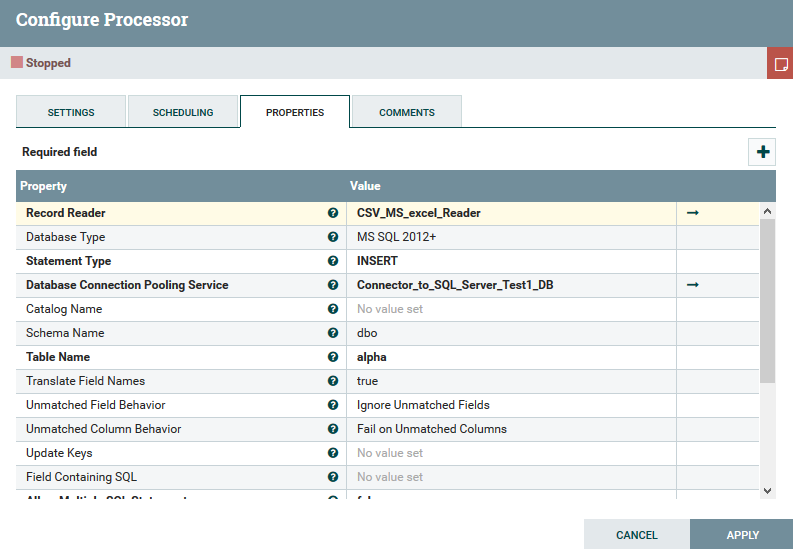

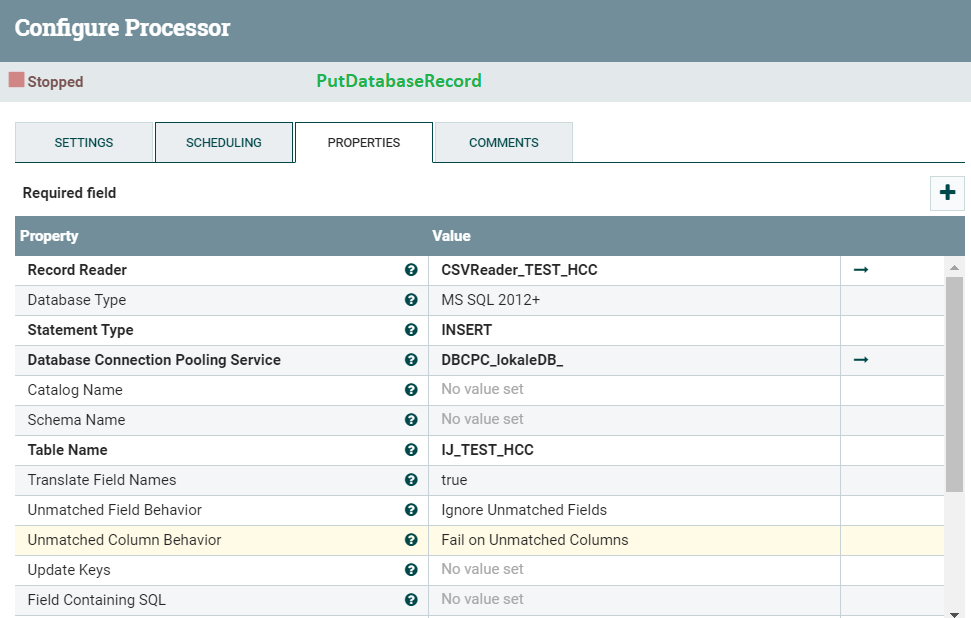

The configuration for the PutDatabaseRecord processor is shown here. I pointed it to the CSVReader and DBCPConnectionPool controller services, selected the MS SQL 2012+ database type (I am running SQL Server 2019), set the statement type to INSERT, entered the schema name and the correct table name, and left everything else at the defaults.
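
For reference, an INSERT statement type ultimately has to produce a parameterized statement covering only the columns that were matched. The sketch below shows that general shape. It assumes the schema and table are joined with "." and that identifiers are left unquoted; the table and column names are hypothetical.

```python
# Sketch only: the general shape of a parameterized INSERT for the mapped columns.
# Assumptions: schema and table joined with "."; identifiers are not quoted.


def build_insert(table, columns, schema=None):
    """Return 'INSERT INTO [schema.]table (c1, c2) VALUES (?, ?)'."""
    if not columns:
        raise ValueError("no mapped columns, nothing to insert")
    qualified = f"{schema}.{table}" if schema else table
    placeholders = ", ".join("?" for _ in columns)
    return f"INSERT INTO {qualified} ({', '.join(columns)}) VALUES ({placeholders})"


print(build_insert("customers", ["id", "first_name"], schema="dbo"))
# INSERT INTO dbo.customers (id, first_name) VALUES (?, ?)
```

In SQL Server the schema is a namespace inside a database (for example `dbo`), while the database itself is usually selected in the connection URL, so the two are worth keeping apart when filling in the schema and table names.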

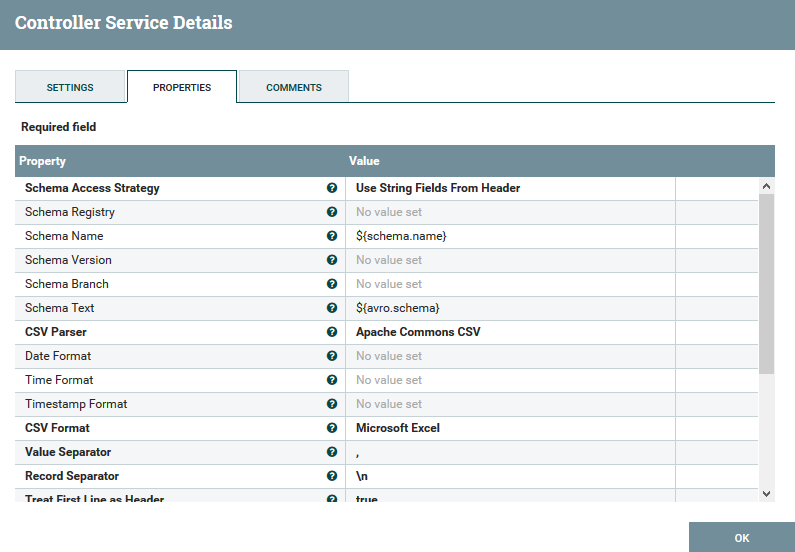

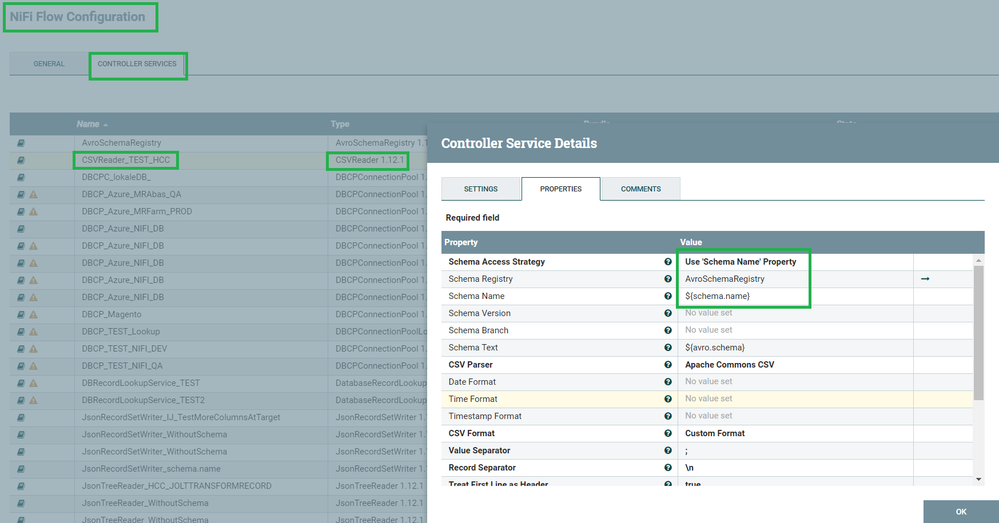

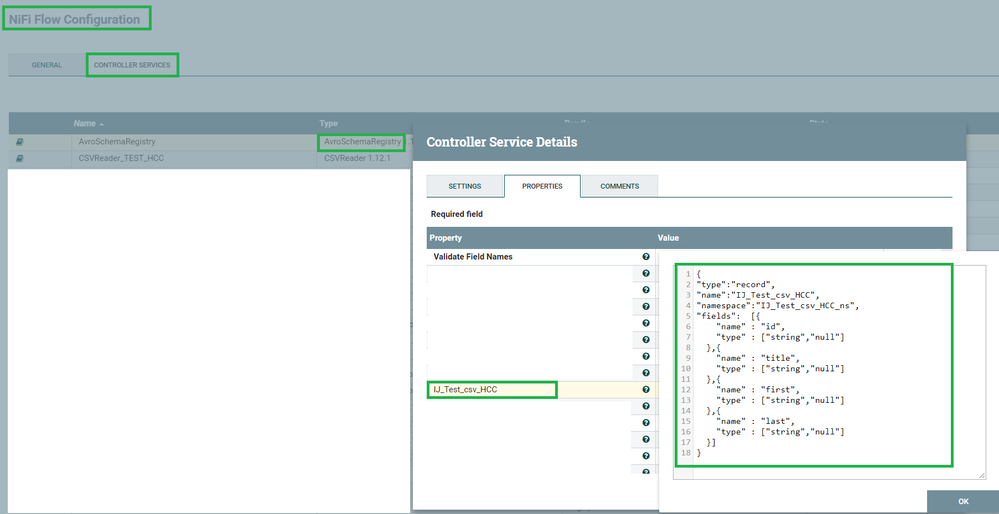

The CSVReader configuration is shown here (Schema Access Strategy = Use String Fields From Header; CSV Format = Microsoft Excel).
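
With a header-based schema, the record field names are simply whatever the first line of the file contains. The Python sketch below (using the standard `csv` module, not NiFi's reader) shows two ways those names can silently differ from the table's columns: a delimiter mismatch, which turns the whole header into one field, and a leading UTF-8 byte-order mark or stray whitespace. It assumes a comma delimiter as the default, as with an Excel-style CSV format; the sample data is hypothetical.

```python
import csv
import io


def header_fields(csv_text, delimiter=","):
    """Return the first row of the CSV text as a list of field names."""
    return next(csv.reader(io.StringIO(csv_text), delimiter=delimiter))


def clean_names(names):
    """Strip a leading UTF-8 byte-order mark and surrounding whitespace."""
    return [name.lstrip("\ufeff").strip() for name in names]


# Hypothetical semicolon-separated export read with a comma delimiter:
print(header_fields("id;first_name;city\n1;Ann;Rome\n"))  # ['id;first_name;city']
print(header_fields("id;first_name;city\n", delimiter=";"))  # ['id', 'first_name', 'city']
print(clean_names(header_fields("\ufeffid, first_name ,city\n")))  # ['id', 'first_name', 'city']
```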

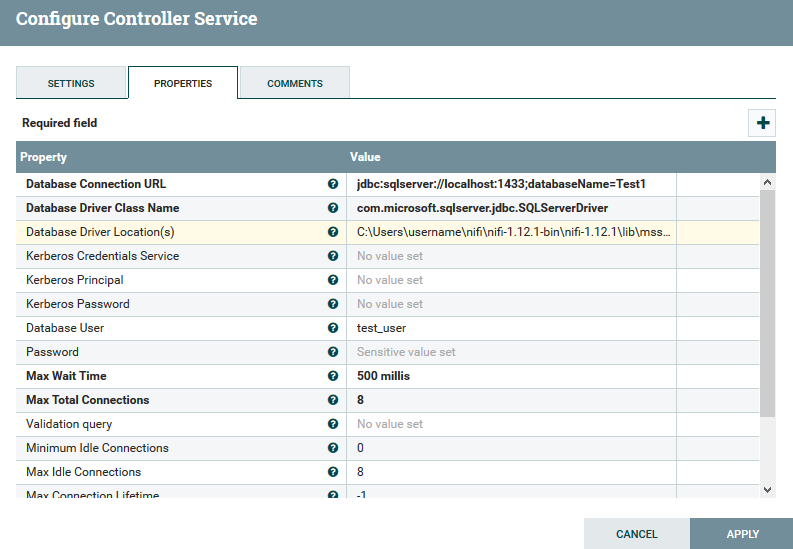

And this is the configuration of the DBCPConnectionPool (I entered the correct database URL, driver class name, driver location, database user, and password).
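
As an illustration of what those settings typically look like for the Microsoft JDBC driver, here is a small Python sketch that builds the connection URL and lists the values. The driver class name and URL format are those of the Microsoft JDBC Driver for SQL Server; the database name, jar path, and user name are hypothetical placeholders.

```python
def sqlserver_jdbc_url(host="localhost", port=1433, database="mydb", **extra):
    """Build a URL like jdbc:sqlserver://localhost:1433;databaseName=mydb."""
    parts = [f"jdbc:sqlserver://{host}:{port}", f"databaseName={database}"]
    # Any extra connection properties are appended as key=value pairs.
    parts += [f"{key}={value}" for key, value in extra.items()]
    return ";".join(parts)


DBCP_SETTINGS = {
    "Database Connection URL": sqlserver_jdbc_url(database="mydb"),  # hypothetical DB name
    "Database Driver Class Name": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    "Database Driver Location(s)": "/path/to/mssql-jdbc.jar",  # hypothetical path
    "Database User": "nifi_user",  # hypothetical user
}

for name, value in DBCP_SETTINGS.items():
    print(f"{name}: {value}")
```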

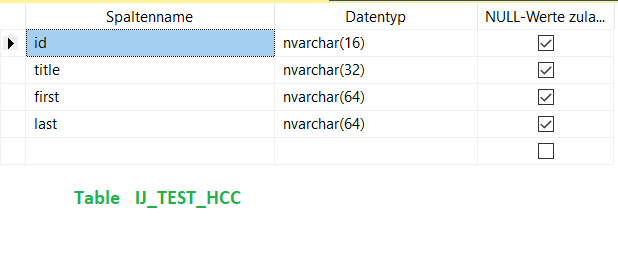

Finally, this is a snapshot of the description of the table I created in the database to hold the data.
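
To compare the CSV header with the table definition as plain text rather than a screenshot, the column names can be read from `INFORMATION_SCHEMA.COLUMNS`. The sketch below assumes a DB-API 2.0 connection whose driver uses `?` placeholders (pyodbc does); the schema and table names in the usage line are hypothetical.

```python
# Sketch, assuming a DB-API 2.0 connection with "?" (qmark) placeholders, e.g. pyodbc.
COLUMNS_QUERY = (
    "SELECT COLUMN_NAME FROM INFORMATION_SCHEMA.COLUMNS "
    "WHERE TABLE_SCHEMA = ? AND TABLE_NAME = ? "
    "ORDER BY ORDINAL_POSITION"
)


def table_columns(conn, schema, table):
    """Return the table's column names in their declared order."""
    cur = conn.cursor()
    try:
        cur.execute(COLUMNS_QUERY, (schema, table))
        return [row[0] for row in cur.fetchall()]
    finally:
        cur.close()
```

Usage would be something like `table_columns(conn, "dbo", "customers")`, where `conn` is an open connection to the target database.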

Many thanks in advance!