Support Questions - Cloudera Community

Error while ingesting plain CSV into SAM via NiFi

Labels: Apache Storm

Created on 09-19-2017 06:22 AM - edited 08-17-2019 11:18 PM

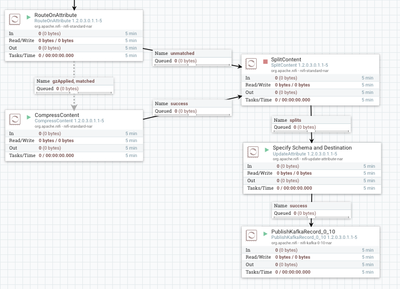

I'm trying to upgrade an existing visualization solution (Kafka > Flink > Druid > Superset) to work with HWX SAM and Schema Registry.

Currently, NiFi works as an HTTP proxy that collects events and pushes them to Kafka. At this stage I'm trying to convert the CSV events to Avro and push them to Kafka so that SAM can consume them.

The output of SplitContent is something like "abc,def,ghi,jkl,,".
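One thing worth checking with lines like this: the two trailing commas produce two empty columns, and those values will only fit the Avro schema if the corresponding fields allow null. A minimal sketch using Python's standard `csv` module (not NiFi's CSVReader, whose handling may differ); `split_csv_line` and the field names `f1`..`f6` are hypothetical:

```python
import csv
import io
from typing import Optional


def split_csv_line(line: str, field_names: list[str]) -> dict[str, Optional[str]]:
    """Parse one CSV line and pair each cell with a field name.

    Empty cells (such as those produced by trailing commas) become None,
    so they only fit Avro fields whose type allows null.
    """
    values = next(csv.reader(io.StringIO(line)))
    if len(values) != len(field_names):
        raise ValueError(f"expected {len(field_names)} columns, got {len(values)}")
    return {name: (value if value != "" else None) for name, value in zip(field_names, values)}


# Hypothetical field names; the real schema's field names would go here.
FIELDS = ["f1", "f2", "f3", "f4", "f5", "f6"]
record = split_csv_line("abc,def,ghi,jkl,,", FIELDS)
# record == {"f1": "abc", "f2": "def", "f3": "ghi", "f4": "jkl", "f5": None, "f6": None}
```

Five commas yield six columns, so a schema with fewer fields than that would not line up with this input.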

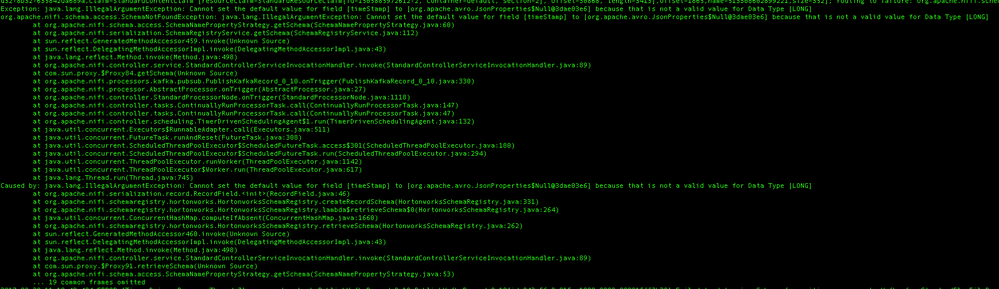

I'm getting this error in the Storm UI:

com.hortonworks.registries.schemaregistry.serde.SerDesException: Unknown protocol id [49] received while deserializing the payload at com.hortonworks.registries.schemaregistry.serdes.avro.AvroSnapsho

Is there something I should pay closer attention to when processing CSV? Any troubleshooting recommendations?

Created 09-19-2017 01:01 PM

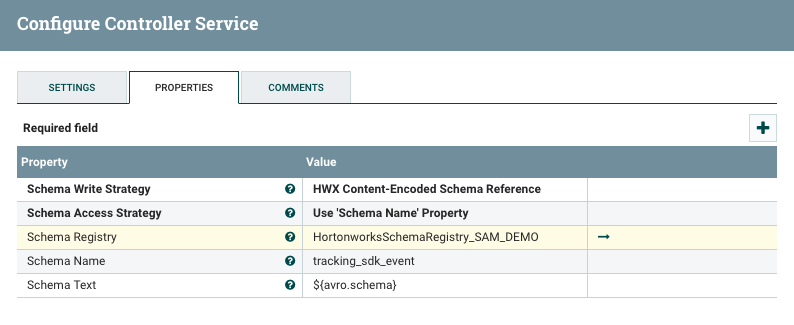

The reader on the SAM side is trying to read the encoded schema reference, but it is likely not there. The AvroRecordSetWriter being used by PublishKafkaRecord_0_10 must be configured with a "Schema Write Strategy" of "Hortonworks Content Encoded Schema Reference".
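For context on the error message: with this write strategy, each Kafka message begins with a small binary header rather than the raw record. The following is a minimal Python illustration, assuming the layout NiFi writes for "Hortonworks Content Encoded Schema Reference" (a 1-byte protocol id of 1, an 8-byte big-endian schema id, a 4-byte big-endian schema version, then the Avro body). `parse_schema_reference` is a hypothetical troubleshooting helper, not part of the Schema Registry client. It shows why a message starting with plain text fails: the character "1" is byte 49, which ends up being read as the protocol id.

```python
import struct

# Assumed header layout (protocol 1): protocol id, schema id, schema version,
# all big-endian; the Avro-encoded record follows the 13-byte header.
HEADER = struct.Struct(">bqi")
EXPECTED_PROTOCOL_ID = 1


def parse_schema_reference(payload: bytes) -> tuple[int, int, int, bytes]:
    """Split a message into (protocol_id, schema_id, version, avro_body).

    Hypothetical helper for troubleshooting; not a Schema Registry API.
    """
    if not payload:
        raise ValueError("empty payload")
    protocol_id = payload[0]
    if protocol_id != EXPECTED_PROTOCOL_ID:
        # Plain text lands here: b"1..." gives 49, b"a..." gives 97.
        raise ValueError(
            f"Unknown protocol id [{protocol_id}] "
            f"(first byte as text: {chr(protocol_id)!r})"
        )
    if len(payload) < HEADER.size:
        raise ValueError("payload shorter than the schema reference header")
    _, schema_id, version = HEADER.unpack_from(payload)
    return protocol_id, schema_id, version, payload[HEADER.size:]
```

For example, `parse_schema_reference(HEADER.pack(1, 42, 3) + b"body")` returns `(1, 42, 3, b"body")`, while a raw CSV line such as `b"1505800000,abc,def"` raises a ValueError reporting protocol id [49].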

Created 09-19-2017 08:49 AM

Can you please show the configuration of the PublishKafka reader and writer controller services?

This looks like an issue with how the flowfile attributes are set when the schema is retrieved from the registry.

Created 09-20-2017 05:56 AM

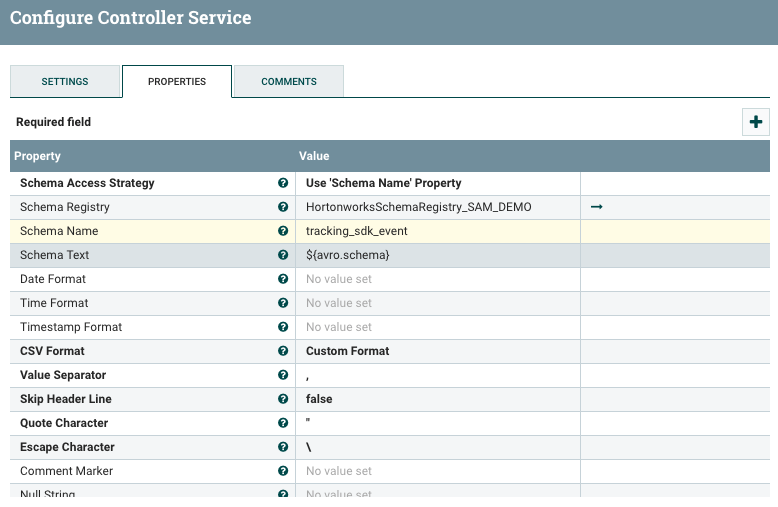

The CSVReader 1.2.0.3.0.1.1-5 and AvroRecordSetWriter 1.2.0.3.0.1.1-5 configurations are as follows.

My Avro schema in the registry is similar to this, with a bunch more string fields:

{
  "type": "record",
  "name": "tracking_sdk_event",
  "fields": [
    {
      "name": "timeStamp",
      "type": "long",
      "default": null
    },
    {
      "name": "isoTime",
      "type": "string",
      "default": null
    }
  ]
}

@Bryan Bende

After changing the "Schema Write Strategy" to "Hortonworks Content Encoded Schema Reference" I'm getting an error with the timeStamp field. I have attached an image of it.

Created 09-20-2017 01:33 PM

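If you want a default value of null, then the type of your field needs to be a union of "null" and the real type.

For example, for timeStamp you would need: "type": ["null", "long"]. The Avro specification requires a union field's default value to match the first type in the union, so "null" should be listed first when the default is null.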
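To catch this before registering a schema, a small check can flag every field that declares "default": null without a union whose first branch is "null" (the Avro specification says a union field's default corresponds to the first schema in the union). A minimal Python sketch; `null_default_problems` is a hypothetical helper that checks only this one rule and is not part of Avro or Schema Registry:

```python
import json


def null_default_problems(schema_json: str) -> list[str]:
    """Return the names of fields whose default is null but whose type is
    not a union starting with "null".

    Checks only this one rule, not full Avro schema validity.
    """
    schema = json.loads(schema_json)
    problems = []
    for field in schema.get("fields", []):
        # Skip fields without a default, or with a non-null default.
        if "default" not in field or field["default"] is not None:
            continue
        field_type = field["type"]
        if not (isinstance(field_type, list) and field_type and field_type[0] == "null"):
            problems.append(field["name"])
    return problems


ORIGINAL = """{"type": "record", "name": "tracking_sdk_event", "fields": [
  {"name": "timeStamp", "type": "long", "default": null},
  {"name": "isoTime", "type": "string", "default": null}]}"""

FIXED = """{"type": "record", "name": "tracking_sdk_event", "fields": [
  {"name": "timeStamp", "type": ["null", "long"], "default": null},
  {"name": "isoTime", "type": ["null", "string"], "default": null}]}"""
```

Run against the schema from the question, it reports both `timeStamp` and `isoTime`; the `FIXED` variant reports nothing.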