Cloudera Community: Support Questions

Error while installing SmartSense HST Agent via Ambari

Labels: Apache Ambari, Hortonworks SmartSense

Created 06-23-2016 03:29 PM

I'm getting the error below while installing the SmartSense agent.

Can anyone help me with this error and explain why it occurs?

Thanks

stderr: /var/lib/ambari-agent/data/errors-2660.txt

```text
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/scripts/hst_agent.py", line 22, in <module>
    HSTScript('agent').execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/scripts/hst_script.py", line 43, in install
    self.hst_deploy_component_specific_config()
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/scripts/hst_script.py", line 302, in hst_deploy_component_specific_config
    self.hst_deploy_config(conf_file_name, conf_params)
  File "/var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/scripts/hst_script.py", line 333, in hst_deploy_config
    config.set('server', 'hostname', params.hst_server_host)
  File "/usr/lib64/python2.6/ConfigParser.py", line 377, in set
    raise NoSectionError(section)
ConfigParser.NoSectionError: No section: 'server'
```

stdout: /var/lib/ambari-agent/data/output-2660.txt

2016-06-23 20:45:48,736 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 20:45:48,737 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 20:45:48,737 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 20:45:48,763 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 20:45:48,763 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 20:45:48,789 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 20:45:48,789 - Ensuring that hadoop has the correct symlink structure

2016-06-23 20:45:48,789 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 20:45:48,791 - Group['spark'] {}

2016-06-23 20:45:48,792 - Group['hadoop'] {}

2016-06-23 20:45:48,792 - Group['users'] {}

2016-06-23 20:45:48,793 - Group['knox'] {}

2016-06-23 20:45:48,793 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,794 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,795 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,795 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 20:45:48,796 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,797 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,798 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 20:45:48,798 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 20:45:48,799 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,800 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,801 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,801 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 20:45:48,802 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,803 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,804 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,805 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,805 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,806 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,807 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,808 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,809 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 20:45:48,809 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 20:45:48,811 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2016-06-23 20:45:48,815 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2016-06-23 20:45:48,816 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}

2016-06-23 20:45:48,817 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 20:45:48,818 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2016-06-23 20:45:48,821 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2016-06-23 20:45:48,822 - Group['hdfs'] {}

2016-06-23 20:45:48,822 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'hdfs']}

2016-06-23 20:45:48,823 - Directory['/etc/hadoop'] {'mode': 0755}

2016-06-23 20:45:48,842 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2016-06-23 20:45:48,843 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}

2016-06-23 20:45:48,859 - Repository['HDP-2.4'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.4.0.0', 'action': ['create'], 'components': ['HDP', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP', 'mirror_list': None}

2016-06-23 20:45:48,868 - File['/etc/yum.repos.d/HDP.repo'] {'content': '[HDP-2.4]\nname=HDP-2.4\nbaseurl=http://public-repo-1.hortonworks.com/HDP/centos6/2.x/updates/2.4.0.0\n\npath=/\nenabled=1\ngpgcheck=0'}

2016-06-23 20:45:48,869 - Repository['HDP-UTILS-1.1.0.20'] {'base_url': 'http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.20/repos/centos6', 'action': ['create'], 'components': ['HDP-UTILS', 'main'], 'repo_template': '[{{repo_id}}]\nname={{repo_id}}\n{% if mirror_list %}mirrorlist={{mirror_list}}{% else %}baseurl={{base_url}}{% endif %}\n\npath=/\nenabled=1\ngpgcheck=0', 'repo_file_name': 'HDP-UTILS', 'mirror_list': None}

2016-06-23 20:45:48,873 - File['/etc/yum.repos.d/HDP-UTILS.repo'] {'content': '[HDP-UTILS-1.1.0.20]\nname=HDP-UTILS-1.1.0.20\nbaseurl=http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.20/repos/centos6\n\npath=/\nenabled=1\ngpgcheck=0'}

2016-06-23 20:45:48,873 - Package['unzip'] {}

2016-06-23 20:45:48,981 - Skipping installation of existing package unzip

2016-06-23 20:45:48,982 - Package['curl'] {}

2016-06-23 20:45:48,997 - Skipping installation of existing package curl

2016-06-23 20:45:48,997 - Package['hdp-select'] {}

2016-06-23 20:45:49,010 - Skipping installation of existing package hdp-select

installing using command: {sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Command to be executed:{sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Normalized command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Exit code: 1

stdout: Loaded plugins: product-id, security, subscription-manager

Setting up Install Process

No package smartsense-hst available.

stderr: This system is receiving updates from Red Hat Subscription Management.

Error: Nothing to do

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Waiting 5 seconds for next retry

Command to be executed:{sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Normalized command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Exit code: 1

stdout: Loaded plugins: product-id, security, subscription-manager

Setting up Install Process

No package smartsense-hst available.

stderr: This system is receiving updates from Red Hat Subscription Management.

Error: Nothing to do

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Waiting 5 seconds for next retry

Command to be executed:{sudo} rpm -qa | grep smartsense- || {sudo} yum -y install smartsense-hst || {sudo} rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Normalized command: rpm -qa | grep smartsense- || yum -y install smartsense-hst || rpm -i /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Exit code: 1

stdout: Loaded plugins: product-id, security, subscription-manager

Setting up Install Process

No package smartsense-hst available.

stderr: This system is receiving updates from Red Hat Subscription Management.

Error: Nothing to do

error: File not found by glob: /var/lib/ambari-agent/cache/stacks/HDP/2.1/services/SMARTSENSE/package/files/rpm/*.rpm

Command to be executed:{sudo} mkdir -p /etc/hst

Normalized command: mkdir -p /etc/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} chown -R root:root /etc/hst

Normalized command: chown -R root:root /etc/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} mkdir -p /var/log/hst

Normalized command: mkdir -p /var/log/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} chown -R root:root /var/log/hst

Normalized command: chown -R root:root /var/log/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} mkdir -p /var/log/hst

Normalized command: mkdir -p /var/log/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} chown -R root:root /var/log/hst

Normalized command: chown -R root:root /var/log/hst

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} mkdir -p /etc/hst/conf

Normalized command: mkdir -p /etc/hst/conf

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} chown -R root:root /etc/hst/conf

Normalized command: chown -R root:root /etc/hst/conf

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} mkdir -p /var/lib/smartsense/hst-agent

Normalized command: mkdir -p /var/lib/smartsense/hst-agent

Exit code: 0

stdout:

stderr:

Command to be executed:{sudo} chown -R root:root /var/lib/smartsense/hst-agent

Normalized command: chown -R root:root /var/lib/smartsense/hst-agent

Exit code: 0

stdout:

stderr:
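The last frames of the traceback show `hst_deploy_config` calling `config.set('server', 'hostname', params.hst_server_host)`, and Python's `ConfigParser` then raising `NoSectionError`. `set()` does not create a missing section. It raises `NoSectionError` instead. The rest of this log suggests why the section was missing: yum reported `No package smartsense-hst available` and the local RPM glob matched nothing, so the package never installed. Only an empty `/etc/hst/conf` directory was created. `ConfigParser.read()` silently skips files it cannot open, so a missing config file would produce exactly this error. That last step is an assumption, because `hst_script.py` itself isn't shown here.

The minimal sketch below reproduces that failure mode with Python 3's `configparser`. Python 2.6's `ConfigParser`, which the agent uses, behaves the same way for `read()` and `set()`. `set_server_hostname` and the file names are hypothetical.

```python
import configparser


def set_server_hostname(conf_path, hostname):
    """Hypothetical stand-in for the failing step: load an INI file, then set [server] hostname."""
    config = configparser.ConfigParser()
    # read() returns the files it actually parsed; a missing file is skipped without an error.
    parsed = config.read(conf_path)
    if not parsed:
        print(f"warning: {conf_path} was not found, so no sections were loaded")
    # set() does not create sections: with no [server] loaded this raises NoSectionError.
    config.set("server", "hostname", hostname)
    return config
```

Calling it on a path that does not exist raises `configparser.NoSectionError: No section: 'server'`, which matches the traceback. Once a file with a `[server]` section is present, the same call succeeds. The `NoSectionError` is therefore a symptom, and the real problem is that the SmartSense package was never installed.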

Created on 06-24-2016 12:41 AM - edited 08-18-2019 05:30 AM

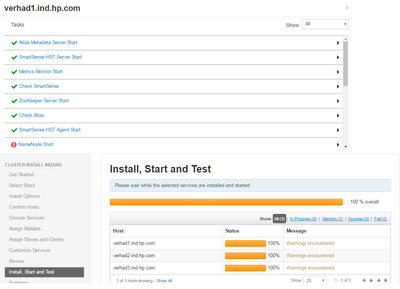

Hi @Jitendra Yadav, I followed your suggestion above for installing SmartSense and it worked like a charm. Thanks :-) Now the installer is unable to start the NameNode and fails with the error below. Also, there is no Retry option anymore, so how can I retry? (Screenshot attached.)

stderr: /var/lib/ambari-agent/data/errors-2760.txt

```text
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 401, in <module>
    NameNode().execute()
  File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute
    method(env)
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 102, in start
    namenode(action="start", hdfs_binary=hdfs_binary, upgrade_type=upgrade_type, env=env)
  File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk
    return fn(*args, **kwargs)
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_namenode.py", line 146, in namenode
    create_log_dir=True
  File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 267, in service
    Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)
  File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__
    self.env.run()
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 158, in run
    self.run_action(resource, action)
  File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 121, in run_action
    provider_action()
  File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 238, in action_run
    tries=self.resource.tries, try_sleep=self.resource.try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner
    result = function(command, **kwargs)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call
    tries=tries, try_sleep=try_sleep)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper
    result = _call(command, **kwargs_copy)
  File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call
    raise Fail(err_msg)
resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out
```

stdout: /var/lib/ambari-agent/data/output-2760.txt

2016-06-23 21:21:41,300 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,300 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,300 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,325 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,325 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,350 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,350 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,350 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,495 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,495 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,495 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,528 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,528 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,559 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,560 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,560 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,562 - Group['spark'] {}

2016-06-23 21:21:41,564 - Group['hadoop'] {}

2016-06-23 21:21:41,564 - Group['users'] {}

2016-06-23 21:21:41,565 - Group['knox'] {}

2016-06-23 21:21:41,565 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,566 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,567 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,568 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,569 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,570 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,571 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,572 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,573 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,574 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,575 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,578 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,579 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,580 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,581 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,582 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,583 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,584 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,585 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,587 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,588 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,589 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,591 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2016-06-23 21:21:41,597 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2016-06-23 21:21:41,598 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}

2016-06-23 21:21:41,599 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,601 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2016-06-23 21:21:41,606 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2016-06-23 21:21:41,607 - Group['hdfs'] {}

2016-06-23 21:21:41,607 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'hdfs']}

2016-06-23 21:21:41,608 - Directory['/etc/hadoop'] {'mode': 0755}

2016-06-23 21:21:41,638 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2016-06-23 21:21:41,639 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}

2016-06-23 21:21:41,661 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2016-06-23 21:21:41,669 - Skipping Execute[('setenforce', '0')] due to not_if

2016-06-23 21:21:41,670 - Directory['/var/log/hadoop'] {'owner': 'root', 'mode': 0775, 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/var/run/hadoop'] {'owner': 'root', 'group': 'root', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,682 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,686 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,687 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2016-06-23 21:21:41,710 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,711 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2016-06-23 21:21:41,722 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2016-06-23 21:21:41,728 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2016-06-23 21:21:42,061 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,061 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,062 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,086 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,087 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,110 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,111 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,111 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,112 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,112 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,113 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,137 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,138 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,161 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,161 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,161 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,170 - Directory['/etc/security/limits.d'] {'owner': 'root', 'group': 'root', 'recursive': True}

2016-06-23 21:21:42,177 - File['/etc/security/limits.d/hdfs.conf'] {'content': Template('hdfs.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2016-06-23 21:21:42,178 - XmlConfig['hadoop-policy.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,190 - Generating config: /usr/hdp/current/hadoop-client/conf/hadoop-policy.xml

2016-06-23 21:21:42,191 - File['/usr/hdp/current/hadoop-client/conf/hadoop-policy.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,201 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,212 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-client.xml

2016-06-23 21:21:42,213 - File['/usr/hdp/current/hadoop-client/conf/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,219 - Directory['/usr/hdp/current/hadoop-client/conf/secure'] {'owner': 'root', 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:42,220 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf/secure', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,231 - Generating config: /usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml

2016-06-23 21:21:42,231 - File['/usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,241 - XmlConfig['ssl-server.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,252 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-server.xml

2016-06-23 21:21:42,253 - File['/usr/hdp/current/hadoop-client/conf/ssl-server.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,260 - XmlConfig['hdfs-site.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,274 - Generating config: /usr/hdp/current/hadoop-client/conf/hdfs-site.xml

2016-06-23 21:21:42,274 - File['/usr/hdp/current/hadoop-client/conf/hdfs-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,324 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2016-06-23 21:21:42,334 - Generating config: /usr/hdp/current/hadoop-client/conf/core-site.xml

2016-06-23 21:21:42,335 - File['/usr/hdp/current/hadoop-client/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,360 - File['/usr/hdp/current/hadoop-client/conf/slaves'] {'content': Template('slaves.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:42,361 - Directory['/hadoop/hdfs/namenode'] {'owner': 'hdfs', 'recursive': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2016-06-23 21:21:42,362 - Called service start with upgrade_type: None

2016-06-23 21:21:42,362 - Ranger admin not installed

2016-06-23 21:21:42,373 - Execute['ls /hadoop/hdfs/namenode | wc -l | grep -q ^0$'] {}

2016-06-23 21:21:42,378 - Execute['yes Y | hdfs --config /usr/hdp/current/hadoop-client/conf namenode -format'] {'path': ['/usr/hdp/current/hadoop-client/bin'], 'user': 'hdfs'}

2016-06-23 21:21:45,451 - Directory['/hadoop/hdfs/namenode/namenode-formatted/'] {'recursive': True}

2016-06-23 21:21:45,451 - Creating directory Directory['/hadoop/hdfs/namenode/namenode-formatted/'] since it doesn't exist.

2016-06-23 21:21:45,454 - File['/etc/hadoop/conf/dfs.exclude'] {'owner': 'hdfs', 'content': Template('exclude_hosts_list.j2'), 'group': 'hadoop'}

2016-06-23 21:21:45,454 - Writing File['/etc/hadoop/conf/dfs.exclude'] because it doesn't exist

2016-06-23 21:21:45,455 - Changing owner for /etc/hadoop/conf/dfs.exclude from 0 to hdfs

2016-06-23 21:21:45,455 - Changing group for /etc/hadoop/conf/dfs.exclude from 0 to hadoop

2016-06-23 21:21:45,455 - Option for start command:

2016-06-23 21:21:45,455 - Directory['/var/run/hadoop'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0755}

2016-06-23 21:21:45,456 - Changing owner for /var/run/hadoop from 0 to hdfs

2016-06-23 21:21:45,456 - Changing group for /var/run/hadoop from 0 to hadoop

2016-06-23 21:21:45,456 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,456 - Directory['/var/log/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,457 - File['/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'] {'action': ['delete'], 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

2016-06-23 21:21:45,461 - Execute['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode''] {'environment': {'HADOOP_LIBEXEC_DIR': '/usr/hdp/current/hadoop-client/libexec'}, 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}
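The `Fail` message for this start attempt reports only two things: `hadoop-daemon.sh ... start namenode` returned 1, and it was logging to `/var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out`. The NameNode's actual reason for exiting is typically recorded in that `.out` file or in the `.log` file that `hadoop-daemon.sh` writes next to it in `/var/log/hadoop/hdfs/`. The end of those files is the next place to look.

The minimal Python sketch below reads the last lines of such a file. `tail` is a hypothetical helper, not part of Ambari.

```python
from collections import deque


def tail(path, lines=50):
    """Return the last `lines` lines of a text file, streaming it rather than loading it whole."""
    with open(path, "r", errors="replace") as handle:
        # A deque with maxlen keeps only the most recent `lines` entries as the file is iterated.
        return list(deque(handle, maxlen=lines))
```

For example, `print("".join(tail("/var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out")))` prints the end of the file named in the error. Running `tail -n 50` on that file from a shell on the node gives the same result.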

Created 06-23-2016 03:36 PM

Are you able to list it manually on that node?

yum list 'smartsense*'

Created 06-23-2016 03:41 PM

Can you please share the output of the command below?

cat /etc/yum.repos.d/ambari.repo

Created 06-23-2016 03:44 PM

Below is the output of the above command. It seems the repo is pointing to an old version:

[root@VerHad1 services]# cat /etc/yum.repos.d/ambari.repo
#VERSION_NUMBER=2.1.0-1470
[Updates-ambari-2.1.0]
name=ambari-2.1.0 - Updates
baseurl=http://public-repo-1.hortonworks.com/ambari/centos6/2.x/updates/2.1.0
gpgcheck=1
gpgkey=http://public-repo-1.hortonworks.com/ambari/centos6/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
[root@VerHad1 services]#
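To confirm which Ambari release a host's repo file points at, you can use a short Python 3 sketch that reads the `#VERSION_NUMBER` marker and each section's `baseurl`. The file content is copied from the output above, and `repo_summary` is a hypothetical helper.

```python
import configparser
import re

# ambari.repo content as shown in the post above.
REPO_TEXT = """\
#VERSION_NUMBER=2.1.0-1470
[Updates-ambari-2.1.0]
name=ambari-2.1.0 - Updates
baseurl=http://public-repo-1.hortonworks.com/ambari/centos6/2.x/updates/2.1.0
gpgcheck=1
gpgkey=http://public-repo-1.hortonworks.com/ambari/centos6/RPM-GPG-KEY/RPM-GPG-KEY-Jenkins
enabled=1
priority=1
"""

def repo_summary(text):
    """Return (version marker or None, {section: baseurl}) for a yum .repo file."""
    # configparser skips '#' lines as comments, so read the marker with a regex.
    match = re.search(r"^#VERSION_NUMBER=(\S+)", text, re.MULTILINE)
    version = match.group(1) if match else None
    # interpolation=None: do not treat '%' in values as interpolation syntax.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    urls = {s: parser.get(s, "baseurl", fallback=None) for s in parser.sections()}
    return version, urls

version, urls = repo_summary(REPO_TEXT)
print(version)  # 2.1.0-1470
print(urls)
```

Here both the marker and the `baseurl` point at Ambari 2.1.0, which is the old version noticed above.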

Created 06-23-2016 03:53 PM

The SmartSense bundle comes with the Ambari 2.2+ repos. Since you are using Ambari 2.1, you need to download it from the support website.

Please follow the doc below: https://docs.hortonworks.com/HDPDocuments/SS1/SmartSense-1.1.0/bk_smartsense_admin/bk_smartsense_adm...
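The rule in this answer reduces to a version comparison. The sketch below assumes version strings shaped like the ones in this thread: `2.1.0-1470` from the repo file and `2.2.2.0` reported later. The 2.2 threshold comes from the answer above, and the helper names are hypothetical.

```python
def parse_version(text):
    """Turn '2.1.0-1470' or '2.2.2.0' into a tuple of ints; a '-build' suffix is dropped."""
    core = text.split("-", 1)[0]
    return tuple(int(part) for part in core.split("."))

# Threshold taken from the answer above: SmartSense is bundled from Ambari 2.2 on.
SMARTSENSE_MIN_AMBARI = (2, 2)

def smartsense_bundled(ambari_version):
    """True if, per the rule above, the Ambari repo should already carry SmartSense."""
    return parse_version(ambari_version) >= SMARTSENSE_MIN_AMBARI

for v in ("2.1.0-1470", "2.2.2.0"):
    print(v, smartsense_bundled(v))
```

Comparing tuples of integers avoids the string-comparison trap where `'2.10' < '2.2'`. Passing the version check only means the bundle should be in the Ambari repo. The repo itself still has to be configured on the host.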

Created 06-24-2016 07:48 AM

Thanks for confirming. Let's close this thread by accepting this answer, and work on the new issue through https://community.hortonworks.com/questions/41451/ambari-server-failed-to-start-namenode-after-insta...

Created 06-23-2016 03:39 PM

No, I'm not able to list that package. Below is the output:

[root@VerHad1 services]# yum list 'smartsense*'
Loaded plugins: product-id, security, subscription-manager
This system is receiving updates from Red Hat Subscription Management.
rhel-6-server-rpms | 3.7 kB 00:00
rhel-ha-for-rhel-6-server-rpms | 3.7 kB 00:00
rhel-lb-for-rhel-6-server-rpms | 3.7 kB 00:00
Error: No matching Packages to list
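To see at a glance which repositories yum printed metadata lines for, a small sketch can pull the repository ids out of output like the one above, reflowed one line per entry. `repos_in_yum_output` is a hypothetical helper. In this run only the three RHEL repositories appear, and none of them is an Ambari repository.

```python
import re

# Output from the post above, one line per entry.
YUM_OUTPUT = """\
Loaded plugins: product-id, security, subscription-manager
This system is receiving updates from Red Hat Subscription Management.
rhel-6-server-rpms | 3.7 kB 00:00
rhel-ha-for-rhel-6-server-rpms | 3.7 kB 00:00
rhel-lb-for-rhel-6-server-rpms | 3.7 kB 00:00
Error: No matching Packages to list
"""

def repos_in_yum_output(output):
    """Return repo ids from lines shaped like '<repo-id> | <size> <time>'."""
    return re.findall(r"^(\S+)\s+\|", output, re.MULTILINE)

repos = repos_in_yum_output(YUM_OUTPUT)
print(repos)
print(any("ambari" in repo.lower() for repo in repos))
```

Treat a missing Ambari entry here as a hint rather than proof, because yum does not necessarily print a line for every enabled repository. `yum repolist enabled` gives the list of enabled repositories directly.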

Created on 06-24-2016 12:41 AM - edited 08-18-2019 05:30 AM

Hi @Jitendra Yadav, I followed your suggestion above for installing SmartSense and it worked like a charm. Thanks :-) Now the installer is not able to start the NameNode; it fails with the error below. Also, no retry option is available anymore, so how can I retry? (screenshot attached)

stderr: /var/lib/ambari-agent/data/errors-2760.txt

Traceback (most recent call last):

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 401, in <module>

NameNode().execute()

File "/usr/lib/python2.6/site-packages/resource_management/libraries/script/script.py", line 219, in execute

method(env)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/namenode.py", line 102, in start

namenode(action="start", hdfs_binary=hdfs_binary, upgrade_type=upgrade_type, env=env)

File "/usr/lib/python2.6/site-packages/ambari_commons/os_family_impl.py", line 89, in thunk

return fn(*args, **kwargs)

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/hdfs_namenode.py", line 146, in namenode

create_log_dir=True

File "/var/lib/ambari-agent/cache/common-services/HDFS/2.1.0.2.0/package/scripts/utils.py", line 267, in service

Execute(daemon_cmd, not_if=process_id_exists_command, environment=hadoop_env_exports)

File "/usr/lib/python2.6/site-packages/resource_management/core/base.py", line 154, in __init__

self.env.run()

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 158, in run

self.run_action(resource, action)

File "/usr/lib/python2.6/site-packages/resource_management/core/environment.py", line 121, in run_action

provider_action()

File "/usr/lib/python2.6/site-packages/resource_management/core/providers/system.py", line 238, in action_run

tries=self.resource.tries, try_sleep=self.resource.try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 70, in inner

result = function(command, **kwargs)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 92, in checked_call

tries=tries, try_sleep=try_sleep)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 140, in _call_wrapper

result = _call(command, **kwargs_copy)

File "/usr/lib/python2.6/site-packages/resource_management/core/shell.py", line 291, in _call

raise Fail(err_msg)

resource_management.core.exceptions.Fail: Execution of 'ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode'' returned 1. starting namenode, logging to /var/log/hadoop/hdfs/hadoop-hdfs-namenode-VerHad1.out

stdout: /var/lib/ambari-agent/data/output-2760.txt

2016-06-23 21:21:41,300 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,300 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,300 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,325 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,325 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,350 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,350 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,350 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,495 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:41,495 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:41,495 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:41,528 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:41,528 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:41,559 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:41,560 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:41,560 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:41,562 - Group['spark'] {}

2016-06-23 21:21:41,564 - Group['hadoop'] {}

2016-06-23 21:21:41,564 - Group['users'] {}

2016-06-23 21:21:41,565 - Group['knox'] {}

2016-06-23 21:21:41,565 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,566 - User['storm'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,567 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,568 - User['oozie'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,569 - User['atlas'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,570 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,571 - User['falcon'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,572 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,573 - User['accumulo'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,574 - User['mahout'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,575 - User['spark'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,578 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['users']}

2016-06-23 21:21:41,579 - User['flume'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,580 - User['kafka'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,581 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,582 - User['sqoop'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,583 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,584 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,585 - User['hbase'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,587 - User['knox'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,588 - User['hcat'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop']}

2016-06-23 21:21:41,589 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,591 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}

2016-06-23 21:21:41,597 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa'] due to not_if

2016-06-23 21:21:41,598 - Directory['/tmp/hbase-hbase'] {'owner': 'hbase', 'recursive': True, 'mode': 0775, 'cd_access': 'a'}

2016-06-23 21:21:41,599 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}

2016-06-23 21:21:41,601 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] {'not_if': '(test $(id -u hbase) -gt 1000) || (false)'}

2016-06-23 21:21:41,606 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh hbase /home/hbase,/tmp/hbase,/usr/bin/hbase,/var/log/hbase,/tmp/hbase-hbase'] due to not_if

2016-06-23 21:21:41,607 - Group['hdfs'] {}

2016-06-23 21:21:41,607 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'hdfs']}

2016-06-23 21:21:41,608 - Directory['/etc/hadoop'] {'mode': 0755}

2016-06-23 21:21:41,638 - File['/usr/hdp/current/hadoop-client/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}

2016-06-23 21:21:41,639 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0777}

2016-06-23 21:21:41,661 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}

2016-06-23 21:21:41,669 - Skipping Execute[('setenforce', '0')] due to not_if

2016-06-23 21:21:41,670 - Directory['/var/log/hadoop'] {'owner': 'root', 'mode': 0775, 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/var/run/hadoop'] {'owner': 'root', 'group': 'root', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,674 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:41,682 - File['/usr/hdp/current/hadoop-client/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,686 - File['/usr/hdp/current/hadoop-client/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,687 - File['/usr/hdp/current/hadoop-client/conf/log4j.properties'] {'content': ..., 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}

2016-06-23 21:21:41,710 - File['/usr/hdp/current/hadoop-client/conf/hadoop-metrics2.properties'] {'content': Template('hadoop-metrics2.properties.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:41,711 - File['/usr/hdp/current/hadoop-client/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}

2016-06-23 21:21:41,722 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop'}

2016-06-23 21:21:41,728 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}

2016-06-23 21:21:42,061 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,061 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,062 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,086 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,087 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,110 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,111 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,111 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,112 - The hadoop conf dir /usr/hdp/current/hadoop-client/conf exists, will call conf-select on it for version 2.4.0.0-169

2016-06-23 21:21:42,112 - Checking if need to create versioned conf dir /etc/hadoop/2.4.0.0-169/0

2016-06-23 21:21:42,113 - call['conf-select create-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False, 'stderr': -1}

2016-06-23 21:21:42,137 - call returned (1, '/etc/hadoop/2.4.0.0-169/0 exist already', '')

2016-06-23 21:21:42,138 - checked_call['conf-select set-conf-dir --package hadoop --stack-version 2.4.0.0-169 --conf-version 0'] {'logoutput': False, 'sudo': True, 'quiet': False}

2016-06-23 21:21:42,161 - checked_call returned (0, '/usr/hdp/2.4.0.0-169/hadoop/conf -> /etc/hadoop/2.4.0.0-169/0')

2016-06-23 21:21:42,161 - Ensuring that hadoop has the correct symlink structure

2016-06-23 21:21:42,161 - Using hadoop conf dir: /usr/hdp/current/hadoop-client/conf

2016-06-23 21:21:42,170 - Directory['/etc/security/limits.d'] {'owner': 'root', 'group': 'root', 'recursive': True}

2016-06-23 21:21:42,177 - File['/etc/security/limits.d/hdfs.conf'] {'content': Template('hdfs.conf.j2'), 'owner': 'root', 'group': 'root', 'mode': 0644}

2016-06-23 21:21:42,178 - XmlConfig['hadoop-policy.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,190 - Generating config: /usr/hdp/current/hadoop-client/conf/hadoop-policy.xml

2016-06-23 21:21:42,191 - File['/usr/hdp/current/hadoop-client/conf/hadoop-policy.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,201 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,212 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-client.xml

2016-06-23 21:21:42,213 - File['/usr/hdp/current/hadoop-client/conf/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,219 - Directory['/usr/hdp/current/hadoop-client/conf/secure'] {'owner': 'root', 'group': 'hadoop', 'recursive': True, 'cd_access': 'a'}

2016-06-23 21:21:42,220 - XmlConfig['ssl-client.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf/secure', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,231 - Generating config: /usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml

2016-06-23 21:21:42,231 - File['/usr/hdp/current/hadoop-client/conf/secure/ssl-client.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,241 - XmlConfig['ssl-server.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,252 - Generating config: /usr/hdp/current/hadoop-client/conf/ssl-server.xml

2016-06-23 21:21:42,253 - File['/usr/hdp/current/hadoop-client/conf/ssl-server.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,260 - XmlConfig['hdfs-site.xml'] {'owner': 'hdfs', 'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'configuration_attributes': {}, 'configurations': ...}

2016-06-23 21:21:42,274 - Generating config: /usr/hdp/current/hadoop-client/conf/hdfs-site.xml

2016-06-23 21:21:42,274 - File['/usr/hdp/current/hadoop-client/conf/hdfs-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': None, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,324 - XmlConfig['core-site.xml'] {'group': 'hadoop', 'conf_dir': '/usr/hdp/current/hadoop-client/conf', 'mode': 0644, 'configuration_attributes': {}, 'owner': 'hdfs', 'configurations': ...}

2016-06-23 21:21:42,334 - Generating config: /usr/hdp/current/hadoop-client/conf/core-site.xml

2016-06-23 21:21:42,335 - File['/usr/hdp/current/hadoop-client/conf/core-site.xml'] {'owner': 'hdfs', 'content': InlineTemplate(...), 'group': 'hadoop', 'mode': 0644, 'encoding': 'UTF-8'}

2016-06-23 21:21:42,360 - File['/usr/hdp/current/hadoop-client/conf/slaves'] {'content': Template('slaves.j2'), 'owner': 'hdfs'}

2016-06-23 21:21:42,361 - Directory['/hadoop/hdfs/namenode'] {'owner': 'hdfs', 'recursive': True, 'group': 'hadoop', 'mode': 0755, 'cd_access': 'a'}

2016-06-23 21:21:42,362 - Called service start with upgrade_type: None

2016-06-23 21:21:42,362 - Ranger admin not installed

2016-06-23 21:21:42,373 - Execute['ls /hadoop/hdfs/namenode | wc -l | grep -q ^0$'] {}

2016-06-23 21:21:42,378 - Execute['yes Y | hdfs --config /usr/hdp/current/hadoop-client/conf namenode -format'] {'path': ['/usr/hdp/current/hadoop-client/bin'], 'user': 'hdfs'}

2016-06-23 21:21:45,451 - Directory['/hadoop/hdfs/namenode/namenode-formatted/'] {'recursive': True}

2016-06-23 21:21:45,451 - Creating directory Directory['/hadoop/hdfs/namenode/namenode-formatted/'] since it doesn't exist.

2016-06-23 21:21:45,454 - File['/etc/hadoop/conf/dfs.exclude'] {'owner': 'hdfs', 'content': Template('exclude_hosts_list.j2'), 'group': 'hadoop'}

2016-06-23 21:21:45,454 - Writing File['/etc/hadoop/conf/dfs.exclude'] because it doesn't exist

2016-06-23 21:21:45,455 - Changing owner for /etc/hadoop/conf/dfs.exclude from 0 to hdfs

2016-06-23 21:21:45,455 - Changing group for /etc/hadoop/conf/dfs.exclude from 0 to hadoop

2016-06-23 21:21:45,455 - Option for start command:

2016-06-23 21:21:45,455 - Directory['/var/run/hadoop'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 0755}

2016-06-23 21:21:45,456 - Changing owner for /var/run/hadoop from 0 to hdfs

2016-06-23 21:21:45,456 - Changing group for /var/run/hadoop from 0 to hadoop

2016-06-23 21:21:45,456 - Directory['/var/run/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,456 - Directory['/var/log/hadoop/hdfs'] {'owner': 'hdfs', 'recursive': True}

2016-06-23 21:21:45,457 - File['/var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'] {'action': ['delete'], 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}

2016-06-23 21:21:45,461 - Execute['ambari-sudo.sh su hdfs -l -s /bin/bash -c 'ulimit -c unlimited ; /usr/hdp/current/hadoop-client/sbin/hadoop-daemon.sh --config /usr/hdp/current/hadoop-client/conf start namenode''] {'environment': {'HADOOP_LIBEXEC_DIR': '/usr/hdp/current/hadoop-client/libexec'}, 'not_if': 'ambari-sudo.sh -H -E test -f /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid && ambari-sudo.sh -H -E pgrep -F /var/run/hadoop/hdfs/hadoop-hdfs-namenode.pid'}
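The log above runs `ls /hadoop/hdfs/namenode | wc -l | grep -q ^0$` immediately before `hdfs namenode -format`. That command tests whether `ls` prints nothing for the NameNode directory. The Python sketch below covers that shell test only, not Ambari's full decision logic, and `ls_prints_nothing` is a hypothetical name.

```python
import os

def ls_prints_nothing(path):
    """Mirror of `ls PATH | wc -l | grep -q ^0$` (hypothetical helper)."""
    if not os.path.exists(path):
        # ls writes its error to stderr only, so `wc -l` still counts 0 lines
        # and grep (whose status the pipeline returns) succeeds.
        return True
    if not os.path.isdir(path):
        return False  # ls on a regular file prints its name: one line
    # Plain `ls` hides dot-files, so ignore them here as well.
    visible = [name for name in os.listdir(path) if not name.startswith(".")]
    return not visible
```

Two shell details carry over. First, plain `ls` hides dot-files. Second, for a missing directory the pipeline still succeeds, because without `pipefail` bash returns grep's exit status. Since `namenode -format` initializes a fresh namespace, any metadata already in the directory would be lost if a format ran on a non-empty directory.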

Created 06-05-2017 05:20 PM

I am also having the same issue, but in my case the repo file does not exist:

bash-4.2$ cat /etc/yum.repos.d/ambari.repo
cat: /etc/yum.repos.d/ambari.repo: No such file or directory

Created 06-05-2017 05:23 PM

My Ambari version is 2.2.2.0 and my HDP version is HDP-2.3.4.0-3485.

bash-4.2$ yum list 'smartsense*'
Loaded plugins: product-id, search-disabled-repos, subscription-manager
HDP-2.3 175/175
HDP-UTILS-1.1.0.20 44/44
puppetlabs-deps 12/12
puppetlabs-products 66/66
rhel-7-server-eus-rpms 8407/8407
rhel-7-server-optional-rpms 7122/7122
rhel-7-server-rpms 8450/8450
rhel-7-server-thirdparty-oracle-java-rpms 170/170
rhel-rs-for-rhel-7-server-eus-rpms 162/162
usps-addons 6/6
Error: No matching Packages to list
bash-4.2$ cat /etc/yum.repos.d/ambari.repo
cat: /etc/yum.repos.d/ambari.repo: No such file or directory
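By default, yum reads the `*.repo` files in `/etc/yum.repos.d`. A missing `ambari.repo` therefore only means that no file has that name; an Ambari repository could be defined under another file name, or not at all. The sketch below scans the repo files for sections whose id or `baseurl` mentions a keyword. It assumes the default repo directory, and `find_repo_sections` is a hypothetical helper.

```python
import configparser
import glob
import os

def find_repo_sections(repo_dir="/etc/yum.repos.d", keyword="ambari"):
    """Return (file name, section) pairs whose repo id or baseurl contains keyword."""
    keyword = keyword.lower()
    hits = []
    for path in sorted(glob.glob(os.path.join(repo_dir, "*.repo"))):
        # strict=False tolerates duplicate sections/options; interpolation=None
        # stops configparser from treating '%' in URLs as interpolation syntax.
        parser = configparser.ConfigParser(interpolation=None, strict=False)
        try:
            parser.read(path)
        except (configparser.Error, UnicodeDecodeError):
            continue  # skip files configparser cannot parse
        for section in parser.sections():
            baseurl = parser.get(section, "baseurl", fallback="")
            if keyword in section.lower() or keyword in baseurl.lower():
                hits.append((os.path.basename(path), section))
    return hits

if __name__ == "__main__":
    print(find_repo_sections() or "no Ambari repository defined")
```

In the `yum list` output above, none of the repository ids mentions Ambari, which is consistent with an empty result from a scan like this.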