Errors in GetHDFS\PutHDFS using HDF running on local machine on to Sandbox or Cluster.

Labels: Apache NiFi

Created 08-03-2016 07:05 PM

Hi,

First, I am new to Hadoop and HDF and am still learning these tools. 🙂

We are doing a POC at our company, trying to upload files into Hadoop (the Hortonworks Sandbox) from my local laptop, where HDF (1.2.0.0) is installed.

I am getting various errors. Based on some reading, it looks like I need to open ports and/or set up port forwarding. I have done everything I can think of but still get these errors.

Do you know which configurations, settings, or ports I may have to change to get this working?

I can provide more details about what I am trying and the errors I am seeing.

This is what I did:

1. Copied the core-site.xml and hdfs-site.xml files from the sandbox to my laptop (Windows 10) and referenced them in the Get/Put processors.

2. Tried to upload a file into the /user/maria_dev/ folder.

Got this error:

PutHDFS[id=2d926905-af62-4f87-9f23-e1fd8b7bf505] failed to invoke @OnScheduled method due to java.lang.RuntimeException: Failed while executing one of processor's OnScheduled task.; processor will not be scheduled to run for 30000 milliseconds: java.lang.RuntimeException: Failed while executing one of processor's OnScheduled task.

3. Then I changed the core-site file, replacing sandbox.hortonworks.com with 127.0.0.1.

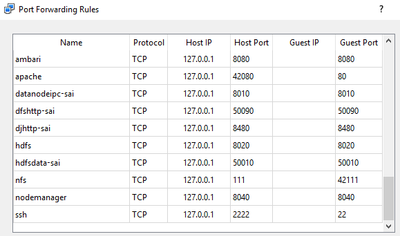

Added port forwarding rules in the VM for ports 50010 and 8010 (most of the other ports are already forwarded by default).

ERROR

2d926905-af62-4f87-9f23-e1fd8b7bf505

PutHDFS[id=2d926905-af62-4f87-9f23-e1fd8b7bf505] Failed to write to HDFS due to org.apache.nifi.processor.exception.ProcessException: IOException thrown from PutHDFS[id=2d926905-af62-4f87-9f23-e1fd8b7bf505]: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/maria_dev/.nifi-applog.16.14.11.gz could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1588)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getNewBlockTargets(FSNamesystem.java:3116)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3040)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:789)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:492)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2151)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2147)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2145)

: org.apache.nifi.processor.exception.ProcessException: IOException thrown from PutHDFS[id=2d926905-af62-4f87-9f23-e1fd8b7bf505]: org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/maria_dev/.nifi-applog.16.14.11.gz could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:1588)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getNewBlockTargets(FSNamesystem.java:3116)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:3040)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:789)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:492)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:969)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2151)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2147)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2145)

Thanks for your help.

Created 08-03-2016 07:29 PM

I’ve seen this too, and I believe the errors are mostly red herrings. Somewhere in the stack trace there should be a statement that the client can’t connect to the DataNode, and it will list the internal IP (10.0.2.15, e.g.) instead of 127.0.0.1. That is what causes the minReplication issue, etc.

This setting is supposed to fix it: https://hadoop.apache.org/docs/r2.7.2/hadoop-project-dist/hadoop-hdfs/HdfsMultihoming.html#Clients_u...

It is supposed to make the client(s) do DNS resolution, but I never got it working. If it does work, then when the NameNode (or whoever) tells the client where the DataNode is, it gives back a hostname instead of an IP. That hostname should then resolve on the client to localhost, since the sandbox is using NAT (you may need to update /etc/hosts). The DataNode port also needs to be forwarded directly (50010, I believe).

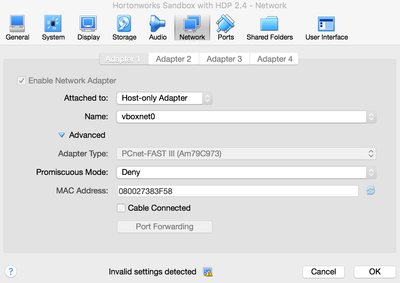

An alternative could be to switch the sandbox to a Host-Only Adapter, so that it has its own IP. However, IIRC Hadoop hard-codes (or at least used to) the IP down in its bowels, so I’m not sure that would work by itself either.

@bbende has some ideas in another HCC post as well: https://community.hortonworks.com/questions/47083/nifi-puthdfs-error.html

Created 11-29-2016 01:12 PM

I am trying to read files from the HDFS inside HDP 2.5.

I downloaded the HDFS configuration files from the Ambari web portal and placed them in my NiFi folder.

I added the ports in VirtualBox's port forwarding settings.

2016-11-29 14:08:20,562 WARN [Timer-Driven Process Thread-5] org.apache.hadoop.hdfs.DFSClient Failed to connect to /172.17.0.2:50010 for block, add to deadNodes and continue. java.net.ConnectException: Connection timed out: no further information java.net.ConnectException: Connection timed out: no further information

When I add the configuration that you specified above, I get the following error:

2016-11-29 13:57:05,194 WARN [Timer-Driven Process Thread-9] org.apache.hadoop.hdfs.DFSClient Failed to connect to sandbox.hortonworks.com/127.0.0.1:50010 for block, add to deadNodes and continue. java.io.IOException: An established connection was aborted by the software in your host machine java.io.IOException: An established connection was aborted by the software in your host machine

Can you help me figure out how to get it working? How can I find out what is preventing the connection from being established to get the data? I can see that it is able to read the number of files and the file names. It fails only when it tries to transfer the data.

Regards

Created 11-29-2016 01:58 PM

2016-11-29 14:50:59,544 INFO [Write-Ahead Local State Provider Maintenance] org.wali.MinimalLockingWriteAheadLog org.wali.MinimalLockingWriteAheadLog@6dc5e857 checkpointed with 3 Records and 0 Swap Files in 25 milliseconds (Stop-the-world time = 11 milliseconds, Clear Edit Logs time = 9 millis), max Transaction ID 8

2016-11-29 14:51:06,659 WARN [Timer-Driven Process Thread-7] o.apache.hadoop.hdfs.BlockReaderFactory I/O error constructing remote block reader. java.io.IOException: An existing connection was forcibly closed by the remote host

at sun.nio.ch.SocketDispatcher.read0(Native Method) ~[na:1.8.0_111]

2016-11-29 14:51:06,659 WARN [Timer-Driven Process Thread-7] org.apache.hadoop.hdfs.DFSClient Failed to connect to sandbox.hortonworks.com/127.0.0.1:50010 for block, add to deadNodes and continue. java.io.IOException: An existing connection was forcibly closed by the remote host java.io.IOException: An existing connection was forcibly closed by the remote host

at java.lang.Thread.run(Thread.java:745) [na:1.8.0_111]

2016-11-29 14:51:06,660 WARN [Timer-Driven Process Thread-7] org.apache.hadoop.hdfs.DFSClient Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv No live nodes contain current block Block locations: 172.17.0.2:50010 Dead nodes: 172.17.0.2:50010. Throwing a BlockMissingException

2016-11-29 14:51:06,660 WARN [Timer-Driven Process Thread-7] org.apache.hadoop.hdfs.DFSClient Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv No live nodes contain current block Block locations: 172.17.0.2:50010 Dead nodes: 172.17.0.2:50010. Throwing a BlockMissingException

2016-11-29 14:51:06,660 WARN [Timer-Driven Process Thread-7] org.apache.hadoop.hdfs.DFSClient DFS Read org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv

at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:889) [hadoop-hdfs-2.6.2.jar:na]

2016-11-29 14:51:06,660 ERROR [Timer-Driven Process Thread-7] o.apache.nifi.processors.hadoop.GetHDFS GetHDFS[id=abb1f7a5-0158-1000-f1d4-ef83203b4aa1] Error retrieving file hdfs://sandbox.hortonworks.com:8020/user/admin/Data/trucks.csv from HDFS due to org.apache.nifi.processor.exception.FlowFileAccessException: Failed to import data from org.apache.hadoop.hdfs.client.HdfsDataInputStream@7bea77c5 for StandardFlowFileRecord[uuid=34551c53-72ad-40fa-927d-5ac60fe6d83e,claim=,offset=0,name=712611918461157,size=0] due to org.apache.nifi.processor.exception.FlowFileAccessException: Unable to create ContentClaim due to org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv: org.apache.nifi.processor.exception.FlowFileAccessException: Failed to import data from org.apache.hadoop.hdfs.client.HdfsDataInputStream@7bea77c5 for StandardFlowFileRecord[uuid=34551c53-72ad-40fa-927d-5ac60fe6d83e,claim=,offset=0,name=712611918461157,size=0] due to org.apache.nifi.processor.exception.FlowFileAccessException: Unable to create ContentClaim due to org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv

2016-11-29 14:51:06,661 ERROR [Timer-Driven Process Thread-7] o.apache.nifi.processors.hadoop.GetHDFS org.apache.nifi.processor.exception.FlowFileAccessException: Failed to import data from org.apache.hadoop.hdfs.client.HdfsDataInputStream@7bea77c5 for StandardFlowFileRecord[uuid=34551c53-72ad-40fa-927d-5ac60fe6d83e,claim=,offset=0,name=712611918461157,size=0] due to org.apache.nifi.processor.exception.FlowFileAccessException: Unable to create ContentClaim due to org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv

at org.apache.nifi.controller.repository.StandardProcessSession.importFrom(StandardProcessSession.java:2479) ~[na:na]

Caused by: org.apache.nifi.processor.exception.FlowFileAccessException: Unable to create ContentClaim due to org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1464254149-172.17.0.2-1477381671113:blk_1073742577_1761 file=/user/admin/Data/trucks.csv

at org.apache.nifi.controller.repository.StandardProcessSession.importFrom(StandardProcessSession.java:2472) ~[na:na]

... 14 common frames omitted

Created 08-03-2016 08:25 PM

Can you please confirm the value of dfs.namenode.rpc-address in your hdfs-site.xml file? Make sure the port it uses is included in your port forwarding rules.

Created on 08-03-2016 08:30 PM - edited 08-18-2019 04:30 AM

Hi, I have this in the hdfs-site file:

<property><name>dfs.namenode.rpc-address</name><value>0.0.0.0:8020</value></property>

These are some of the port forwarding rules in my VM.

Created on 08-04-2016 01:54 AM - edited 08-18-2019 04:30 AM

Hi Saikrishna,

I was able to connect GetHDFS from Apache NiFi (not HDF, but it should work with HDF, too) running on my local PC (the host machine) to the HDP sandbox VM. However, I had to change the VM network setting from NAT to Host-only Adapter.

The reason is that after NiFi communicates with the HDFS NameNode (sandbox.hortonworks.com:8020), the NameNode returns the DataNode address, which is a private IP address of the VM (10.0.2.15:50010). With NAT, that address can't be reached from the host machine. I saw the following exception in the NiFi log:

2016-08-04 10:11:38,106 WARN [Timer-Driven Process Thread-9] o.apache.hadoop.hdfs.BlockReaderFactory I/O error constructing remote block reader. org.apache.hadoop.net.ConnectTimeoutException: 60000 millis timeout while waiting for channel to be ready for connect. ch : java.nio.channels.SocketChannel[connection-pending remote=/10.0.2.15:50010] at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:533) ~[hadoop-common-2.6.2.jar:na]
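To spot this kind of address in a log quickly, a small script can pull every `/ip:port` out of the log text and label the address. This is only a sketch in Python: the function name `datanode_addresses` and the regular expression are illustrative, not part of NiFi or Hadoop, and it assumes IPv4 addresses written the way they appear in the log line above.

```python
import ipaddress
import re

# Matches "/10.0.2.15:50010" as it appears in DFSClient/BlockReaderFactory log lines.
ADDRESS = re.compile(r"/(\d{1,3}(?:\.\d{1,3}){3}):(\d+)")


def datanode_addresses(log_text):
    """Return a list of (ip, port, kind) for each '/ip:port' found in log_text.

    kind is "loopback", "private", or "public".
    """
    found = []
    for ip_text, port_text in ADDRESS.findall(log_text):
        try:
            ip = ipaddress.ip_address(ip_text)
        except ValueError:
            continue  # looked like an address but is not a valid IPv4 value
        if ip.is_loopback:
            kind = "loopback"
        elif ip.is_private:
            kind = "private"
        else:
            kind = "public"
        found.append((ip_text, int(port_text), kind))
    return found
```

A "private" result for the DataNode port (50010 in this thread) suggests the client was handed an address that only exists inside the VM or container network.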

Here are the steps I took:

1. Change the Sandbox VM network from NAT to Host-only Adapter.

2. Restart the Sandbox VM.

3. Log in to the Sandbox VM and use the ifconfig command to get its IP address, in my case 192.168.99.100.

4. Add an entry to /etc/hosts on the host machine, in my case: 192.168.99.100 sandbox.hortonworks.com

5. Check connectivity with telnet: telnet sandbox.hortonworks.com 8020

6. Restart NiFi (HDF)
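The telnet check in step 5 can also be scripted, which makes it easy to test the DataNode port at the same time. The following is a minimal Python sketch; the helper names `can_connect` and `check_ports` are hypothetical, and the ports to pass in (8020 for the NameNode, 50010 for the DataNode) are simply the ones mentioned in this thread.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def check_ports(host, ports, timeout=3.0):
    """Return a dict mapping each port to True (reachable) or False."""
    return {port: can_connect(host, port, timeout) for port in ports}
```

For example, `check_ports("sandbox.hortonworks.com", [8020, 50010])` reports both ports in one call. A successful connection only shows that something is listening on that port; it does not prove that HDFS reads or writes will succeed.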

I didn't have to change core-site.xml or hdfs-site.xml. Copying them from the sandbox and then referencing them in the processor's 'Hadoop Configuration Resources' property should work fine.

Hope this helps!

Created 08-04-2016 04:21 PM

Hi,

It is working after I added the property suggested by @Matt Burgess in his reply above to my local hdfs-site.xml.

So there are 3 things I did:

added below property to hdfs-site.xml

<property><name>dfs.client.use.datanode.hostname</name><value>true</value></property>

added a port forwarding rule in my VM to 50010

added 127.0.0.1 localhost sandbox.hortonworks.com to my local hosts file.
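To double-check that the local copy of hdfs-site.xml really carries the property, the file can be parsed with a few lines of Python. This is a sketch only: `hadoop_properties` and `uses_datanode_hostname` are hypothetical helper names, and treating a missing property as false is an assumption based on Hadoop's documented default.

```python
import xml.etree.ElementTree as ET


def hadoop_properties(xml_text):
    """Parse a Hadoop *-site.xml document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def uses_datanode_hostname(xml_text):
    """Return True if dfs.client.use.datanode.hostname is set to true."""
    value = hadoop_properties(xml_text).get(
        "dfs.client.use.datanode.hostname", "false"  # assumed default
    )
    return value.lower() == "true"
```

Passing the text of the hdfs-site.xml that the NiFi processor references to `uses_datanode_hostname` shows whether the client will ask for DataNode hostnames instead of IP addresses.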

Thanks everyone.
