I'm using Spark 2.4.5, Hive 3.1.2 and Hadoop 3.2.1. While running Hive queries from Spark, I got the following exception:

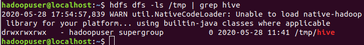

```
Exception in thread "main" org.apache.spark.sql.AnalysisException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwxrwxr-x;
```
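As far as I can tell from Hive's `SessionState` source, the root scratch dir check does not just test "writable by me". It requires every bit of mode 733 (`rwx-wx-wx`) to be set, so group and others also need write and execute. The sketch below is my own illustration, not Hive code (`ScratchDirCheck`, `parseMode` and `isWritableForHive` are hypothetical names). It applies that assumed mask to the permissions from the error: `rwxrwxr-x` is 775, and 775 AND 733 gives 731, which is missing the write bit for "others".

```scala
// Illustration only: mimics (as I understand it) Hive's root scratch dir check.
// Assumption: Hive requires all bits of octal 733 (rwx-wx-wx) to be set.
object ScratchDirCheck {

  // Convert a 9-character symbolic mode such as "rwxrwxr-x" to its numeric value.
  // Each non-'-' character sets the corresponding permission bit.
  def parseMode(symbolic: String): Int = {
    require(symbolic.length == 9, s"expected 9 permission characters, got: $symbolic")
    symbolic.zipWithIndex.foldLeft(0) { case (acc, (c, i)) =>
      if (c == '-') acc else acc | (1 << (8 - i))
    }
  }

  // Assumed required mask, octal 733.
  val RequiredMask: Int = Integer.parseInt("733", 8)

  // True only if every bit of the required mask is present in the mode.
  def isWritableForHive(symbolic: String): Boolean =
    (parseMode(symbolic) & RequiredMask) == RequiredMask

  def main(args: Array[String]): Unit = {
    val current = "rwxrwxr-x"
    println(f"$current = ${parseMode(current)}%o (octal)")
    println(s"$current passes check: ${isWritableForHive(current)}")
    println(s"rwxrwxrwx passes check: ${isWritableForHive("rwxrwxrwx")}")
  }
}
```

Under this assumption, a mode like `rwxrwxrwx` (777) on `/tmp/hive` would pass the check, while the current `rwxrwxr-x` does not.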

This is my source code:

```scala
package com.spark.hiveconnect

import java.io.File

import org.apache.spark.sql.{Row, SaveMode, SparkSession}

object sourceToHIve {

  case class Record(key: Int, value: String)

  def main(args: Array[String]): Unit = {
    // Local directory used as the Spark SQL warehouse
    val warehouseLocation = new File("spark-warehouse").getAbsolutePath

    // Local-mode session with Hive support (metastore, SerDes, Hive UDFs)
    val spark = SparkSession
      .builder()
      .appName("Spark Hive Example")
      .master("local")
      .config("spark.sql.warehouse.dir", warehouseLocation)
      .enableHiveSupport()
      .getOrCreate()

    import spark.implicits._
    import spark.sql

    sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING) USING hive")
    sql("LOAD DATA LOCAL INPATH '/usr/local/spark3/examples/src/main/resources/kv1.txt' INTO TABLE src")
    sql("SELECT * FROM src").show()

    spark.stop()
  }
}
```

This is my sbt file:

```scala
name := "SparkHive"

version := "0.1"

scalaVersion := "2.12.10"

libraryDependencies += "org.apache.spark" %% "spark-core" % "2.4.5"
libraryDependencies += "org.apache.spark" %% "spark-sql" % "2.4.5"
libraryDependencies += "mysql" % "mysql-connector-java" % "8.0.19"
libraryDependencies += "org.apache.spark" %% "spark-hive" % "2.4.5"
```

How can I solve this issue? While watching the console, I also saw the following log line. Is this the reason I am getting the error?

```
20/05/28 14:03:04 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(UDHAV.MAHATA); groups with view permissions: Set(); users with modify permissions: Set(UDHAV.MAHATA); groups with modify permissions: Set()
```

Can anyone help me?

Thank you!