Explanation of Tez task counters.

Labels: Apache Hive

Created on 06-14-2016 05:22 AM - edited 08-19-2019 01:47 AM

Hello.

I have a query like this:

SELECT a , b , c , d , count(1) e FROM XXX WHERE created_date = '20160613' AND a is not null GROUP BY a , b , c , d

It took 17 minutes in total, which is several times longer than I expected.

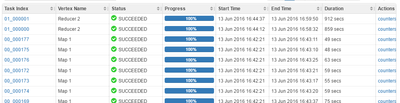

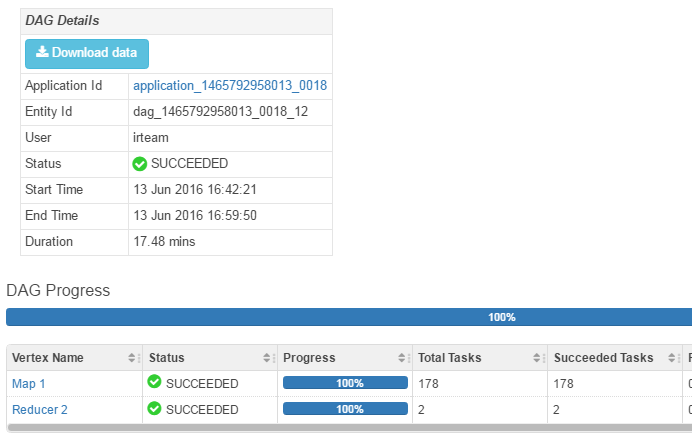

This is the result of the Tez DAG. Most of the time was spent in the reduce stage (15 minutes).

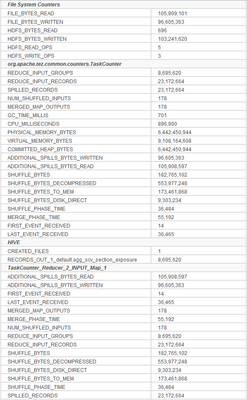

And these are the task counters of one of the reducer tasks.

With this data, could you please point out what is consuming the time in the reduce task?

Would simply adding more reducers reduce the total DAG time?

I am using Hive 1.2, Hadoop 2, and Tez 0.6, and the Hive table format is Avro.

Created 06-14-2016 08:41 AM

I'm not sure what you mean by "consuming factor". You can see that the reducer took 15 minutes and had 23M records as input, and that the shuffle moved about 500 MB. Counting by group over that amount of data should not take 15 minutes in the reducer. So I am wondering whether the reducers might not have enough memory and cannot keep the groups (8M) in memory.

You should definitely increase the number of reducers. Since you have 8M groups and both tasks took a long time (so most likely there is no single huge group), you can essentially create as many reducers as you have task slots in the cluster. I would also review the Hive memory configuration to see whether the task memory should be increased, and look at what is happening on the machines running a reducer, since aggregating 23M rows should not take 15 minutes.

Quick way to test with more reducers:

set mapred.reduce.tasks = x; (where x is the number of task slots in your cluster)
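As a rough sketch of the two usual ways to get more reducers in a Hive session: either pin the count, or leave it automatic and lower the amount of data Hive assigns to each reducer. The values 32 and 64 MB below are placeholders chosen for illustration, not recommendations from this thread, and Tez may still adjust the final count at runtime if automatic reducer parallelism is enabled.

```sql
-- Option A: pin the reducer count for this session.
-- 32 is a hypothetical value; use the number of task slots in your cluster.
set mapred.reduce.tasks=32;

-- Option B: let Hive estimate the count (-1 means "decide automatically")
-- and shrink the data volume per reducer so that more reducers are created.
set mapred.reduce.tasks=-1;
set hive.exec.reducers.bytes.per.reducer=67108864;  -- 64 MB, illustrative only
```

Use one option or the other. Option A is the quickest for a one-off test, while Option B scales with the input size of each query.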

Quick way to test with more RAM:

set hive.tez.java.opts="-Xmx3400m";

set hive.tez.container.size = 4096;

where the Xmx value is 75-90% of the container size, depending on how conservative you want to be.
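To make the 75-90% rule concrete, here is the same arithmetic worked through for a different, purely hypothetical container size of 8192 MB. The numbers are assumptions for illustration; the container size should fit what your YARN configuration allows.

```sql
-- Hypothetical container size: 8192 MB
--   75% of 8192 MB = 6144 MB  (conservative heap)
--   90% of 8192 MB = 7372 MB  (aggressive heap, rounded down)
-- Picking roughly 80% leaves headroom for non-heap JVM memory.
set hive.tez.container.size=8192;
set hive.tez.java.opts="-Xmx6553m";  -- about 80% of 8192 MB
```

For comparison, the settings quoted above (3400 MB heap in a 4096 MB container) work out to about 83%.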

Created 06-14-2016 08:55 AM

It turned out to be a log level problem (it had been set to DEBUG).

I will also try to increase the mapper count (each container's heap size is already set high enough).

Thanks @Benjamin Leonhardi

By the way, is there any document that explains each counter name and value in detail?

Created 06-14-2016 09:20 AM

Good that you fixed it. I would just read a good Hadoop book and understand the map, combine, shuffle, and reduce process in detail. After that, the majority of the counters should be pretty self-evident.
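As a small illustration of how those phases line up with the query from the question, the sketch below annotates it and asks Hive for the plan with EXPLAIN. The mapping in the comments is a simplified reading, and the counter names mentioned (REDUCE_INPUT_RECORDS, REDUCE_INPUT_GROUPS, SHUFFLE_BYTES) are given as they are commonly shown in the Tez UI; check them against what your own task view displays.

```sql
-- Map-side partial aggregation plays the role of a combiner:
-- each mapper pre-counts rows per (a, b, c, d) before the shuffle.
set hive.map.aggr=true;

-- EXPLAIN prints the plan without running the query.
-- Map vertex:     scan XXX, apply the WHERE filter, build partial counts.
-- Shuffle:        partial counts are partitioned by (a, b, c, d) and sent
--                 to reducers (reflected in counters such as SHUFFLE_BYTES).
-- Reducer vertex: merge partial counts per group (reflected in counters
--                 such as REDUCE_INPUT_RECORDS and REDUCE_INPUT_GROUPS).
EXPLAIN
SELECT a, b, c, d, count(1) e
FROM XXX
WHERE created_date = '20160613' AND a IS NOT NULL
GROUP BY a, b, c, d;
```

Reading the plan next to the counters of a single task makes it easier to see which phase each counter belongs to.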