Exploring Data with Apache Pig from the Grunt shell

Labels: Apache Pig

Created on 02-10-2016 02:28 AM - edited 08-19-2019 02:01 AM

Hello friends -

I seem to be stuck once again as I work my way through the Hortonworks Tutorials. I am currently working through "Exploring Data with Apache Pig from the Grunt shell" and am stuck on the step "DUMP Movies;"

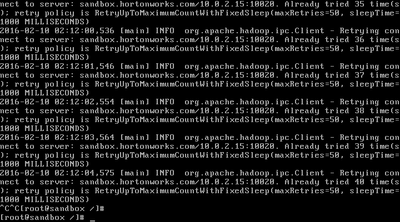

I seem to be stuck in a loop that is waiting on "sandbox.hortonworks.com/10.0.2.15/:10020. Already tried 38 times ............"

I have attached a screenshot for clarification.

There was one funky twist in the instructions: the tutorial calls for loading movies.txt to /user/hadoop, but several comments suggested this was a typo and that the location /user/hue should be used instead. That is the only variation on the instructions I have knowingly made.

Any assistance, as always, is greatly appreciated.

Mike

Created 02-10-2016 02:30 AM

@Mike Vogt How much memory have you allocated to the Sandbox? Make sure that you shut down HBase and any other components that you don't need.

Can you share a screenshot of Ambari?

Created 02-10-2016 02:31 AM

@Mike Vogt Make sure HDFS, MapReduce, and YARN are running.

Port 10020 belongs to the MapReduce JobHistory Server.
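If it helps, here is a rough way to check from the sandbox shell whether anything is listening on that port. It is only a sketch: the function name check_port is made up for illustration, and it assumes bash and the coreutils timeout command are available on the sandbox. The host and port to pass in come from the error message in the question.

```shell
# Sketch: report whether a TCP port accepts connections.
# check_port is a hypothetical helper name, not part of Hadoop or the Sandbox.
check_port() {
  local host="$1" port="$2"
  # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT;
  # the child shell closes the descriptor when it exits.
  # timeout caps the attempt at 5 seconds so a filtered port cannot hang it.
  if timeout 5 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
    echo "open"
  else
    echo "closed"
    return 1
  fi
}
```

Running check_port sandbox.hortonworks.com 10020 should print "open" once the JobHistory Server is up. If it prints "closed", the service is probably stopped or still starting, which would match the endless retry messages from DUMP.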

Created 02-10-2016 02:31 AM

The port seems odd. What user are you running the tutorial as? @Mike Vogt I suggest creating an HDFS home directory for the user you are running as. So, if you are root:

sudo -u hdfs hdfs dfs -mkdir /user/root

sudo -u hdfs hdfs dfs -chown -R root /user/root

Then

hdfs dfs -put Movies.txt ./

Created 02-11-2016 12:18 AM

Hi Artem -

I appreciate you looking into this. I checked the directories and permissions and they were all in order.

The issue turned out to be that YARN and MapReduce needed restarts. I restarted both, and that got me through the immediate crisis.

But I do appreciate you taking a look nonetheless.

Cheers,

Mike

Created 02-11-2016 12:11 AM

Aha! I checked MapReduce and YARN, and both required restarts. After restarting them I completed the task, and I am back on track.

Thanks again for your patience and guidance.

Created 02-11-2016 12:14 AM

@Mike Vogt Thanks for following up!!! 🙂