Cloudera Community > Support > Support Questions

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

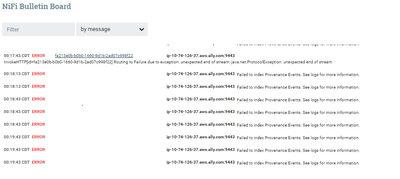

Failed to index Provenance Events org.apache.lucene.store.AlreadyClosedException: this IndexWriter is closed || Caused by: java.nio.file.FileSystemException

Labels: Apache NiFi

Created on 04-05-2022 10:29 PM, last edited on 04-05-2022 11:31 PM by VidyaSargur

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

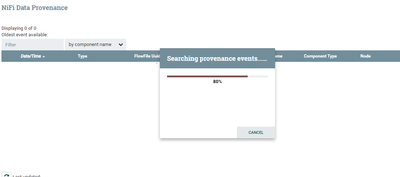

NiFi provenance data is not visible, and searching for provenance events stays stuck at 80% after upgrading from 1.13.0 to 1.15.3.

Below are the error logs:

```
2022-04-05 23:51:22,905 ERROR [Index Provenance Events-2] o.a.n.p.index.lucene.EventIndexTask Failed to index Provenance Events
org.apache.lucene.store.AlreadyClosedException: this IndexWriter is closed
    at org.apache.lucene.index.IndexWriter.ensureOpen(IndexWriter.java:877)
    at org.apache.lucene.index.IndexWriter.ensureOpen(IndexWriter.java:891)
    at org.apache.lucene.index.IndexWriter.updateDocuments(IndexWriter.java:1468)
    at org.apache.lucene.index.IndexWriter.addDocuments(IndexWriter.java:1444)
    at org.apache.nifi.provenance.lucene.LuceneEventIndexWriter.index(LuceneEventIndexWriter.java:70)
    at org.apache.nifi.provenance.index.lucene.EventIndexTask.index(EventIndexTask.java:202)
    at org.apache.nifi.provenance.index.lucene.EventIndexTask.run(EventIndexTask.java:113)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.nio.file.FileSystemException: /provenance_repo/provenance_repository/lucene-8-index-1647749380623/_vd_Lucene80_0.dvd: Too many open files
    at sun.nio.fs.UnixException.translateToIOException(UnixException.java:91)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:102)
    at sun.nio.fs.UnixException.rethrowAsIOException(UnixException.java:107)
    at sun.nio.fs.UnixFileSystemProvider.newByteChannel(UnixFileSystemProvider.java:214)
    at java.nio.file.spi.FileSystemProvider.newOutputStream(FileSystemProvider.java:434)
    at java.nio.file.Files.newOutputStream(Files.java:216)
    at org.apache.lucene.store.FSDirectory$FSIndexOutput.<init>(FSDirectory.java:410)
    at org.apache.lucene.store.FSDirectory$FSIndexOutput.<init>(FSDirectory.java:406)
    at org.apache.lucene.store.FSDirectory.createOutput(FSDirectory.java:254)
    at org.apache.lucene.store.LockValidatingDirectoryWrapper.createOutput(LockValidatingDirectoryWrapper.java:44)
    at org.apache.lucene.store.TrackingDirectoryWrapper.createOutput(TrackingDirectoryWrapper.java:43)
    at org.apache.lucene.codecs.lucene80.Lucene80DocValuesConsumer.<init>(Lucene80DocValuesConsumer.java:79)
    at org.apache.lucene.codecs.lucene80.Lucene80DocValuesFormat.fieldsConsumer(Lucene80DocValuesFormat.java:161)
    at org.apache.lucene.codecs.perfield.PerFieldDocValuesFormat$FieldsWriter.getInstance(PerFieldDocValuesFormat.java:227)
    at org.apache.lucene.codecs.perfield.PerFieldDocValuesFormat$FieldsWriter.getInstance(PerFieldDocValuesFormat.java:163)
    at org.apache.lucene.codecs.perfield.PerFieldDocValuesFormat$FieldsWriter.addNumericField(PerFieldDocValuesFormat.java:109)
    at org.apache.lucene.index.NumericDocValuesWriter.flush(NumericDocValuesWriter.java:108)
    at org.apache.lucene.index.DefaultIndexingChain.writeDocValues(DefaultIndexingChain.java:345)
    at org.apache.lucene.index.DefaultIndexingChain.flush(DefaultIndexingChain.java:225)
    at org.apache.lucene.index.DocumentsWriterPerThread.flush(DocumentsWriterPerThread.java:350)
    at org.apache.lucene.index.DocumentsWriter.doFlush(DocumentsWriter.java:476)
    at org.apache.lucene.index.DocumentsWriter.flushAllThreads(DocumentsWriter.java:656)
    at org.apache.lucene.index.IndexWriter.prepareCommitInternal(IndexWriter.java:3365)
    at org.apache.lucene.index.IndexWriter.commitInternal(IndexWriter.java:3771)
    at org.apache.lucene.index.IndexWriter.commit(IndexWriter.java:3729)
    at org.apache.nifi.provenance.lucene.LuceneEventIndexWriter.commit(LuceneEventIndexWriter.java:101)
    at org.apache.nifi.provenance.index.lucene.EventIndexTask.commit(EventIndexTask.java:253)
    at org.apache.nifi.provenance.index.lucene.EventIndexTask.index(EventIndexTask.java:232)
    ... 6 common frames omitted
```

@MattWho, please help with this.

Created 04-18-2022 05:54 AM

@Neil_1992

I agree that the first step here is to increase the open file limit for the user that owns your NiFi process.

Check your current ulimit by becoming the user that owns your NiFi process and running the "ulimit -a" command.

You can also inspect the /etc/security/limits.conf file.

NiFi can have a very large number of files open at once. Higher FlowFile load, larger dataflows, more concurrent tasks, and so on all add to the number of open file handles.

I recommend setting the ulimit to a very large value, such as 999999, then restarting NiFi and checking whether the issue persists.
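`ulimit -a` reports the limits of the shell it runs in, which are not necessarily the limits the running NiFi JVM inherited. On Linux, a process's own limits are listed in `/proc/<pid>/limits` and its open descriptors in `/proc/<pid>/fd`. A minimal sketch for reading both, assuming a Linux host; `nofile_limits` and `open_fd_count` are hypothetical helper names, not part of NiFi:

```shell
#!/bin/sh
# Hypothetical helpers (not part of NiFi); Linux /proc is assumed.

# Print the soft and hard "Max open files" limits from a /proc/<pid>/limits
# file. The matching line reads: Max open files <soft> <hard> files
nofile_limits() {
  awk '/^Max open files/ { print $4, $5 }' "$1"
}

# Print how many file descriptors process $1 currently holds open.
# Reading another user's /proc/<pid>/fd needs that user or root.
open_fd_count() {
  ls "/proc/$1/fd" | wc -l
}
```

For example, assuming the NiFi JVM's command line contains its main class `org.apache.nifi.NiFi`: `pid=$(pgrep -f org.apache.nifi.NiFi | head -n 1)`, then `nofile_limits "/proc/$pid/limits"` and `open_fd_count "$pid"`. If the soft limit shown is lower than what `ulimit -n` prints in the NiFi user's shell, the running process did not pick up the new limit and has to be restarted from a session or service definition that carries it.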

Thank you,

Matt

Created 04-25-2022 11:05 PM

Hi All,

We need to increase the open file limit for NiFi as a service, not for the user. Add the line below to the [Service] section of your nifi.service file and restart the server; it should resolve this issue:

```
LimitNOFILE=999999
```
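If NiFi is started by systemd, the limits in `/etc/security/limits.conf` do not reach it: `pam_limits` applies them to login sessions, whereas a service takes its limit from `LimitNOFILE` in its unit. Instead of editing the unit file itself, the setting can also go in a drop-in file under `/etc/systemd/system/nifi.service.d/`. A minimal sketch, assuming the unit is named `nifi.service`; the helper `write_nofile_dropin` and the drop-in file name `nofile.conf` are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: write a systemd drop-in that sets LimitNOFILE.
# $1: drop-in directory, e.g. /etc/systemd/system/nifi.service.d
# $2: open-file limit to apply
write_nofile_dropin() {
  local dir="$1" limit="$2"
  mkdir -p "$dir" || return 1
  # LimitNOFILE belongs in the [Service] section.
  cat > "$dir/nofile.conf" <<EOF
[Service]
LimitNOFILE=$limit
EOF
}
```

Run it as root, for example `write_nofile_dropin /etc/systemd/system/nifi.service.d 999999`, then `systemctl daemon-reload` and `systemctl restart nifi`. Afterwards, `systemctl show nifi -p LimitNOFILE` should report the new value.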

Created 04-07-2022 02:38 AM

Hello @Neil_1992

I got the same error after upgrading from 1.11.4 to 1.15.3.

For the moment, I don't have any information about this error.

I hope to find the cause one day.

Regards

Created 04-07-2022 02:43 AM

@MattWho, can you please help us with this? It looks like this is common with NiFi 1.15.3, which happens to be the latest version.

Created 04-08-2022 12:33 AM

Hello @Neil_1992 & @maykiwogno

While we wait for our NiFi guru @MattWho to review this, I wish to share a bit of information on the Lucene exception. The NiFi provenance repository uses Lucene for indexing, and AlreadyClosedException means the Lucene IndexWriter being accessed has already been closed. Here it was closed because of a FileSystemException with "Too many open files" on one of the index files, "/provenance_repo/provenance_repository/lucene-8-index-1647749380623/_vd_Lucene80_0.dvd".

Once AlreadyClosedException is reported, the Lucene index writer needs to be initialized afresh, and because Lucene runs embedded in NiFi, I assume restarting NiFi would do that. Has your team tried increasing the open file limit for the user running the NiFi process, to address the "Too many open files" FileSystemException, and then restarting NiFi?

Note that the above answer is from a Lucene perspective, as I am not a NiFi expert. My only intention is to get your team unblocked if the issue is blocking your NiFi work.

Regards, Smarak

Created 04-18-2022 06:13 AM

Thanks for your valuable input.

Created 04-18-2022 06:10 AM

Adding the lines below to /etc/security/limits.conf, then restarting the nodes twice and bringing them back one after another so that each synced with the primary node, resolved the issue:

```
* hard nofile 500000
* soft nofile 500000
root hard nofile 500000
root soft nofile 500000
nifi hard nofile 500000
nifi soft nofile 500000
```
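Two details explain the shape of these entries. In limits.conf, wildcard (`*`) and group entries are not applied to root, which is why root has its own lines; and `pam_limits` applies the limits when a new session opens, so NiFi has to be restarted from a fresh login (or `su`) session to pick them up. The change can also be scoped to the service account in its own file, such as the `/etc/security/limits.d/90-nifi.conf` mentioned later in this thread. A minimal sketch, assuming the account is `nifi`; `write_limits_dropin` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: write soft and hard nofile entries for one user.
# $1: target file, e.g. /etc/security/limits.d/90-nifi.conf
# $2: user name
# $3: limit
write_limits_dropin() {
  local file="$1" user="$2" limit="$3"
  mkdir -p "$(dirname "$file")" || return 1
  printf '%s soft nofile %s\n%s hard nofile %s\n' \
    "$user" "$limit" "$user" "$limit" > "$file"
}
```

Run as root: `write_limits_dropin /etc/security/limits.d/90-nifi.conf nifi 500000`. On distributions whose `su` PAM stack includes `pam_limits`, `su - nifi -s /bin/bash -c 'ulimit -Sn; ulimit -Hn'` should then print 500000 twice.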

Created 04-19-2022 12:15 AM

Hello,

In my experience, when we restart NiFi, the message disappears for one or two days and then comes back.

Let's check again in one week.

Created 04-19-2022 12:13 AM

Hello @MattWho

I have opened an issue here: https://issues.apache.org/jira/browse/NIFI-9572

I've checked and rechecked the number of open files, and it never goes above 10000.
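A single check can miss a short-lived spike in open descriptors. A minimal sketch that samples a process repeatedly and reports the highest count seen, assuming Linux `/proc`; `peak_fd_count` is a hypothetical helper, and the sample count and interval are up to you:

```shell
#!/bin/sh
# Hypothetical helper: sample the open-descriptor count of process $1,
# $2 times, sleeping $3 seconds after each sample; print the peak.
peak_fd_count() {
  local pid="$1" samples="$2" interval="$3"
  local peak=0 i=0 n
  while [ "$i" -lt "$samples" ]; do
    # If the process has exited, ls fails quietly and the count is 0.
    n=$(ls "/proc/$pid/fd" 2>/dev/null | wc -l)
    if [ "$n" -gt "$peak" ]; then
      peak=$n
    fi
    i=$((i + 1))
    sleep "$interval"
  done
  echo "$peak"
}
```

For example, `peak_fd_count "$pid" 720 5` samples every 5 seconds for about an hour; the result can then be compared with the soft limit in `/proc/$pid/limits`.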

I've already thought about increasing the open file limit in /etc/security/limits.d/90-nifi.conf, but I have two questions:

- Why do we hit this limit now, but not with NiFi 1.11.4?

- Is the value 999999 based on experience, or is it simply a high limit?

Regards

Created 04-19-2022 12:23 AM

I found the root cause to be some of the 1.15.3 NARs. When I removed them and replaced them with the same NARs from 1.13.0, the issue did not appear. Below are the NARs I removed and later replaced with their 1.13.0 versions:

```shell
sudo mv /apps/nifi-1.15.3/lib/nifi-distributed-cache-services-nar-1.15.3.nar /apps/
sudo mv /apps/nifi-1.15.3/lib/nifi-hadoop-nar-1.15.3.nar /apps/
sudo mv /apps/nifi-1.15.3/lib/nifi-standard-nar-1.15.3.nar /apps/
sudo mv /apps/nifi-1.15.3/lib/nifi-scripting-nar-1.15.3.nar /apps/
```
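The same swap can be scripted so that the removed 1.15.3 NARs are kept for rollback and the 1.13.0 builds are copied in. A sketch, assuming the 1.13.0 distribution is unpacked at `/apps/nifi-1.13.0` and using `/apps/nar-backup` as the backup directory (both hypothetical paths); `swap_nars` is a hypothetical helper. NARs in `lib/` are loaded when NiFi starts, so run it with NiFi stopped and start NiFi afterwards:

```shell
#!/bin/sh
# Hypothetical helper: swap named NARs in a NiFi lib directory for another version.
# $1 new_lib  $2 new_ver  $3 old_lib  $4 old_ver  $5 backup dir  $6.. NAR base names
swap_nars() {
  local new_lib="$1" new_ver="$2" old_lib="$3" old_ver="$4" backup="$5" name
  shift 5
  mkdir -p "$backup" || return 1
  for name in "$@"; do
    # Keep the current NAR for rollback, then copy in the replacement build.
    mv "$new_lib/$name-$new_ver.nar" "$backup/" || return 1
    cp "$old_lib/$name-$old_ver.nar" "$new_lib/" || return 1
  done
}
```

For the four NARs listed above: `swap_nars /apps/nifi-1.15.3/lib 1.15.3 /apps/nifi-1.13.0/lib 1.13.0 /apps/nar-backup nifi-distributed-cache-services-nar nifi-hadoop-nar nifi-standard-nar nifi-scripting-nar`, run as a user that can write to both directories.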