Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Failed to upload Tez tar file to HDFS

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Failed to upload Tez tar file to HDFS

- Labels:

-

HDFS

Created 01-10-2023 02:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

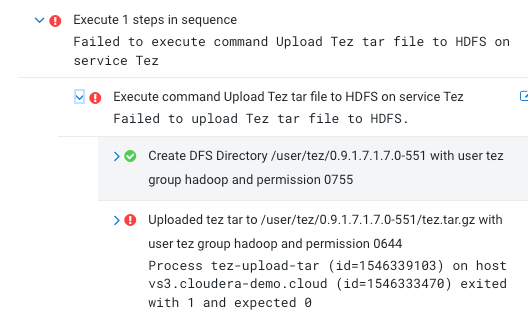

I'm trying the trial Installation and I got this issue in Command Details step of adding a cluster

I tried to do it manually using this command:

hdfs dfs -put /opt/cloudera/parcels/CDH-7.1.7-1.cdh7.1.7.p0.15945976/lib/tez/tez.tar.gz /user/tez/0.9.1.7.1.7.0-551

and I got the following output:

23/01/10 04:29:29 WARN hdfs.DataStreamer: DataStreamer Exception

org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /user/tez/0.9.1.7.1.7.0-551/tez.tar.gz._COPYING_ could only be written to 0 of the 1 minReplication nodes. There are 0 datanode(s) running and 0 node(s) are excluded in this operation.

at org.apache.hadoop.hdfs.server.blockmanagement.BlockManager.chooseTarget4NewBlock(BlockManager.java:2280)

at org.apache.hadoop.hdfs.server.namenode.FSDirWriteFileOp.chooseTargetForNewBlock(FSDirWriteFileOp.java:294)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getAdditionalBlock(FSNamesystem.java:2827)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.addBlock(NameNodeRpcServer.java:874)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.addBlock(ClientNamenodeProtocolServerSideTranslatorPB.java:589)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:533)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1070)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:989)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:917)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1898)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2894)

at org.apache.hadoop.ipc.Client.getRpcResponse(Client.java:1562)

at org.apache.hadoop.ipc.Client.call(Client.java:1508)

at org.apache.hadoop.ipc.Client.call(Client.java:1405)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:233)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:118)

at com.sun.proxy.$Proxy9.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.addBlock(ClientNamenodeProtocolTranslatorPB.java:523)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:431)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeMethod(RetryInvocationHandler.java:166)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invoke(RetryInvocationHandler.java:158)

at org.apache.hadoop.io.retry.RetryInvocationHandler$Call.invokeOnce(RetryInvocationHandler.java:96)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:362)

at com.sun.proxy.$Proxy10.addBlock(Unknown Source)

at org.apache.hadoop.hdfs.DFSOutputStream.addBlock(DFSOutputStream.java:1122)

at org.apache.hadoop.hdfs.DataStreamer.locateFollowingBlock(DataStreamer.java:1880)

at org.apache.hadoop.hdfs.DataStreamer.nextBlockOutputStream(DataStreamer.java:1682)

at org.apache.hadoop.hdfs.DataStreamer.run(DataStreamer.java:719)

put: File /user/tez/0.9.1.7.1.7.0-551/tez.tar.gz._COPYING_ could only be written to 0 of the 1 minReplication nodes. There are 0 datanode(s) running and 0 node(s) are excluded in this operation.

Any support you can provide is much appreciated.

Created 01-10-2023 10:46 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello @baabdullah ,

The error indicates that your DataNode is down. Could you please confirm if that's the scenario?

Error:

could only be written to 0 of the 1 minReplication nodes. There are 0 datanode(s) running and 0 node(s) are excluded in this operation.

Could you please check the data node logs to identify the exact issue? Maybe you need to check the heap size (one of the many possible reasons).

Hope this helps,

Tarun

Was your question answered? Make sure to mark the answer as the accepted solution.

If you find a reply useful, say thanks by clicking on the thumbs-up button.